Hot on the heels of my victory over Wonder Boy 35 years after I first played it, I can now finally claim to have beaten Golden Axe, 25 years after I first played it.

Couldn’t have done it without my magic-wielding 7-year-old co-op buddy.

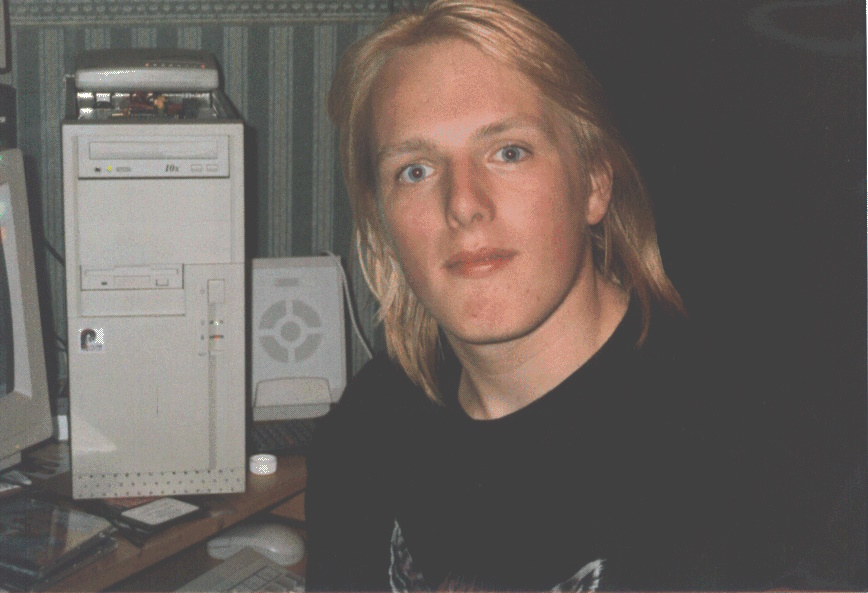

Some younger/hipper friends tell me that there was a thing going around on Instagram this week where people post photos of themselves aged 21.

I might not have any photos of myself aged 21! I certainly can’t find any digital ones…

It must sound weird to young folks nowadays, but prior to digital photography going mainstream in the 2000s (thanks in big part to the explosion of popularity of mobile phones), taking a photo took effort:

I didn’t routinely take digital photos until after Claire and I got together in 2002 (she had a digital camera, with which the photo above was taken). My first cameraphone – I was a relatively early-adopter – was a Nokia 7650, bought late that same year.

It occurs to me that I take more photos in a typical week nowadays than I took in a typical year circa 2000.

This got me thinking: what’s the oldest digital photo of me that exists? So I went digging.

I might not have owned a digital camera in the 1990s, but my dad’s company owned one with which to collect pictures when working on-site. It was a Sony MVC-FD7, a camera most-famous for its quirky use of 3½” floppy disks as media (this was cheap and effective, but meant the camera was about the size and weight of a brick and took about 10 seconds to write each photo from RAM to the disk, during which it couldn’t do anything else).

In Spring 1998, almost 26 years ago, I borrowed it and took, among others, this photo:

I’m confident a picture of me was taken by a Connectix QuickCam (an early webcam) in around 1996, but I can’t imagine it still exists.

So unless you’re about to comment to tell me you know differently and have an older picture of me: that snap of me taking my own photo with a bathroom mirror is the oldest digital photo of me that exists.

This post1 is part of my attempt at Bloganuary 2024.2 Today’s prompt is:

Think back on your most memorable road trip.

It didn’t take me long to choose a most-memorable road trip, but first: here’s a trio of runners-up that I considered3:

At somewhere between 500 and 600 road miles each way, perhaps the single longest road journey I’ve ever made without an overnight break was to attend a wedding.

The wedding was of my friends Kit and Fi, and took place a long, long way up into Scotland. At the time I (and a few other wedding guests) lived on the West coast of Wales. The journey options between the two might be characterised as follows:

Guess which approach this idiot went for?

Despite having just graduated, I was still living very-much on a student-grade budget. I wasn’t confident that we could afford both the travel to and from the wedding and more than a single night’s accommodation at the other end.

But there were four of us who wanted to attend: me, my partner Claire, and our friends Bryn and Paul. Two of the four were qualified to drive and could be insured on Claire’s car8. This provided an opportunity: we’d make the entire 11-or-so-hour journey by car, with a pair of people sleeping in the back while the other pair drove or navigated!

It was long, and it was arduous, but we chatted and we sang and we saw a frankly ludicrous amount of the A9 trunk road and we made it to and from what was a wonderful wedding on our shoestring budget. It’s almost a shame that the party was so good that the memories of the road trip itself pale, or else this might be a better anecdote! But altogether, entirely a worthwhile, if crazy, exercise.

1 Participating in Bloganuary has now put me into my fifth-longest “daily streak” of blog posts! C-c-c-combo continues!

2 Also, wow: thanks to staying up late with my friend John drinking and mucking about with the baby grand piano in the lobby of the hotel we’re staying at, I might be first to publish a post for today’s Bloganuary!

3 Strangely, all four of the journeys I’ve considered seem to involve Scotland. Which I suppose shouldn’t be too much of a surprise, given its distance from many of the other places I’ve lived and of course its size (and sometimes-sparse road network).

4 Okay, probably not for the entire journey, but I’m certain it must’ve felt like it.

5 Our cargo included several cats who almost-immediately escaped from their cardboard enclosures and vomited throughout the vehicle.

6 This included, for example, our beds: we spent our first night in our new house camped together in sleeping bags on the floor of what would later become my bedroom, which only added to the sense of adventure in the whole enterprise.

7 It was, fortunately, only a light vehicle, plus our designated driver was at this point so pumped-up on energy drinks he might have been able to lift it by himself!

8 It wasn’t a big car, and in hindsight cramming four people into it for such a long journey might not have been the most-comfortable choice!

This post is part of my attempt at Bloganuary 2024. Today’s prompt is:

Do you spend more time thinking about the future or the past? Why?

I probably spend similar amounts of time and energy on both. And that is: a lot!

I’m nostalgic as anything. I play retro video games (or even reverse-engineer them and vlog about it). I revisit old blog posts on their anniversary, years later. I recreate old interactive advertisements using modern technologies. When I’m not reading about how the Internet used to be, I’m bringing it back to life by reimagining old protocols in modern spaces and sharing the experience with others1.

But I’m also keenly-focussed on the future. I apply a hacker mindset to every new toy that comes my way, asking not “what does it do?” but “what can it be made to do?”. I’ve spent over a decade writing about the future of (tele)working, which faces new challenges today unlike any before. I’m much more-cautious than I was in my youth about jumping on every new tech bandwagon2, but I still try to keep abreast and ahead of developments in my field.

But I also necessarily find myself thinking about the future of our world: the future that our children will grow up in. It’s a scary time, but I’m sure you don’t need me to spell that out for you!

Either way: a real mixture of thinking about the past and the future. It’s possible that I neglect the present?

1 By the way: did you know that much of my blog is accessible over finger (finger @danq.me), Gopher (gopher://danq.me), and Gemini (gemini://danq.me)? Grab yourself a copy of Lagrange or your favourite smolweb browser and see for yourself!

2 Exactly how many new JavaScript frameworks can you learn each week, anyway?

There are video games that I’ve spent many years playing (sometimes on-and-off) before finally beating them for the first time. I spent three years playing Dune II before I finally beat it as every house. It took twice that to reach the end of Ultima Underworld II. But today, I can add a new contender1 to that list.

Today, over thirty-five years after I first played it, I finally completed Wonder Boy.

My first experience of the game, in the 1980s, was on a coin-op machine where I’d discovered I could get away with trading the 20p piece I’d been given by my parents to use as a deposit on a locker that week for two games on the machine. I wasn’t very good at it, but something about the cutesy graphics and catchy chip-tune music grabbed my attention and it became my favourite arcade game.

I played it once or twice more when I found it in arcades, as an older child. I played various console ports of it and found them disappointing. I tried it a couple of times in MAME. But I didn’t really put any effort into it until a hotel we stayed at during a family holiday to Paris in October had a bank of free-to-play arcade machines rigged with Pandora’s Box clones so they could be used to play a few thousand different arcade classics. Including Wonder Boy.

Off the back of all the fun the kids had, it’s perhaps no surprise that I arranged for a similar machine to be delivered to us as a gift “to the family”2 this Christmas.

And so my interest in the game was awakened and I played through easily a hundred pounds’ worth of free-play games of Wonder Boy3 over the last few days. Until…

…today, I finally defeated the seventh ogre4, saved Tina5, saved the kingdom, etc. It was a hell of a battle. I can’t count how many times I pressed the “insert coin” button on that final section, how many little axes I’d throw into the beast’s head while dodging his fireballs, etc.

So yeah, that’s done, now. I guess I can get back to finishing Wonder Boy: The Dragon’s Trap, the 2017 remake of a 1989 game I adored!6 It’s aged amazingly well!

1 This may be the final record for time spent playing a video game before beating it, unless someday I ever achieve a (non-cheating) NetHack ascension.

2 The kids have had plenty of enjoyment out of it so far, but their time on the machine is somewhat eclipsed by Owen playing Street Fighter II Turbo and Streets of Rage on it and, of course, by my rediscovered obsession with Wonder Boy.

3 The arcade cabinet still hasn’t quite paid for itself in tenpences-saved, despite my grinding of Wonder Boy. Yet.

4 I took to calling the end-of-world bosses “ogres” when my friends and I swapped tips for the game back in the late 80s, and I refuse to learn any different name for them.

5 Apparently the love interest has a name. Who knew?

6 I completed the original Wonder Boy III: The Dragon’s Trap on a Sega Master System borrowed from my friend Daniel back in around 1990, so it’s not a contender for the list either.

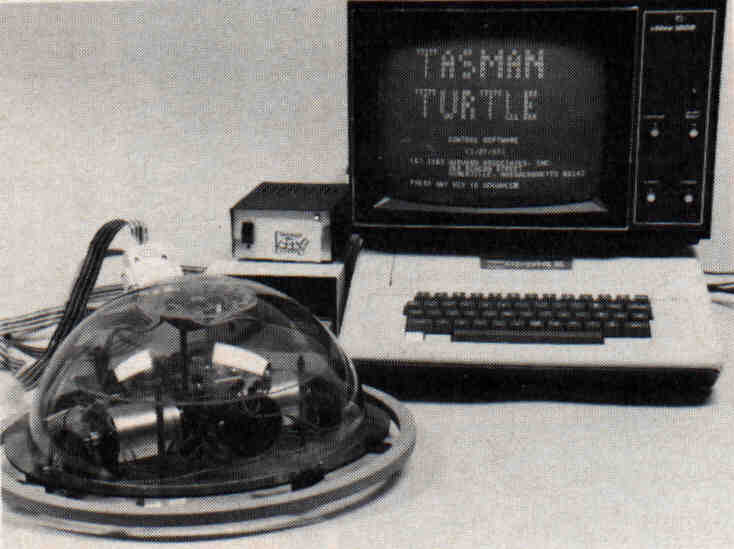

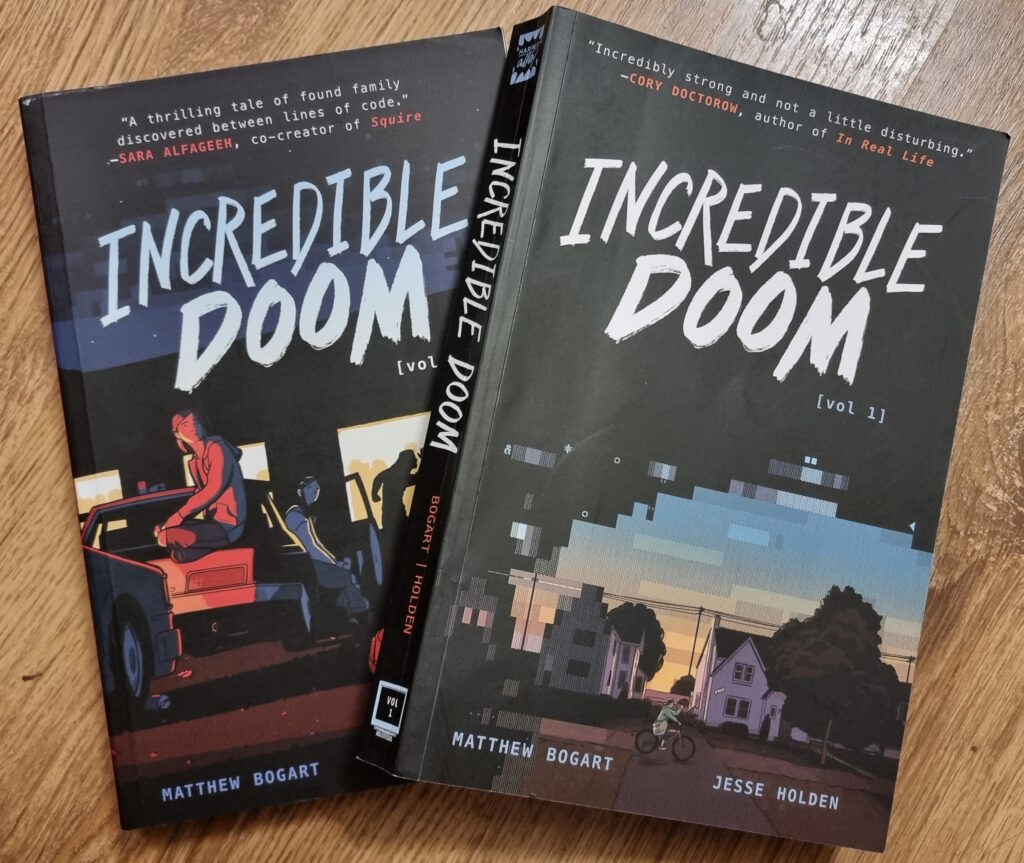

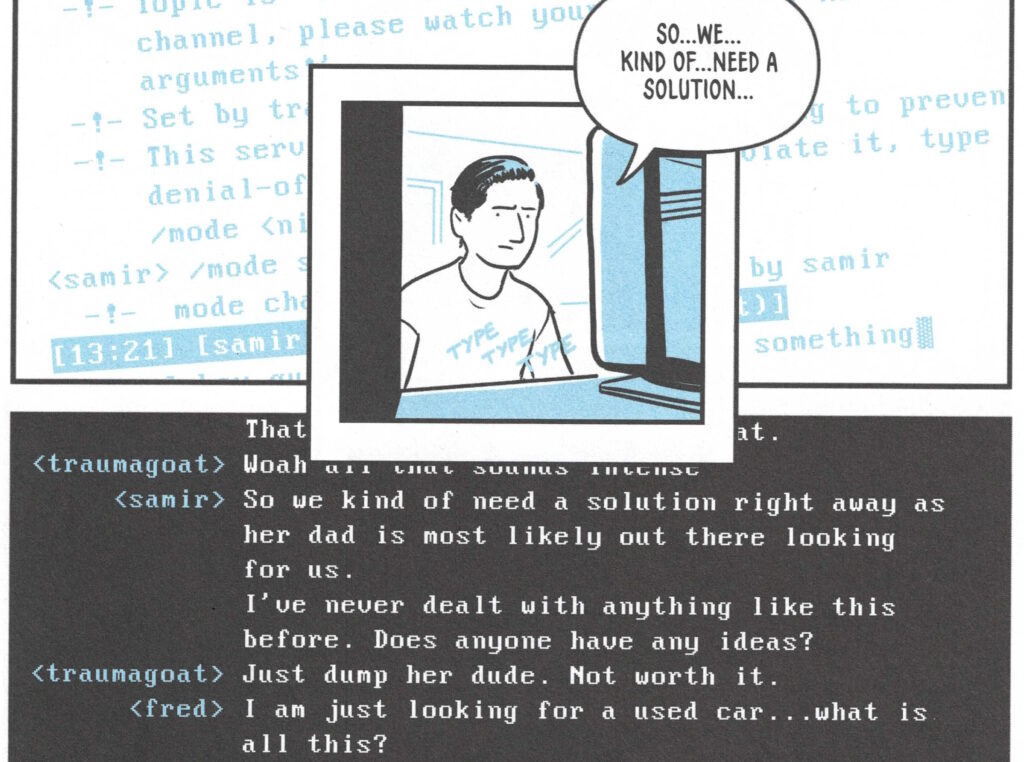

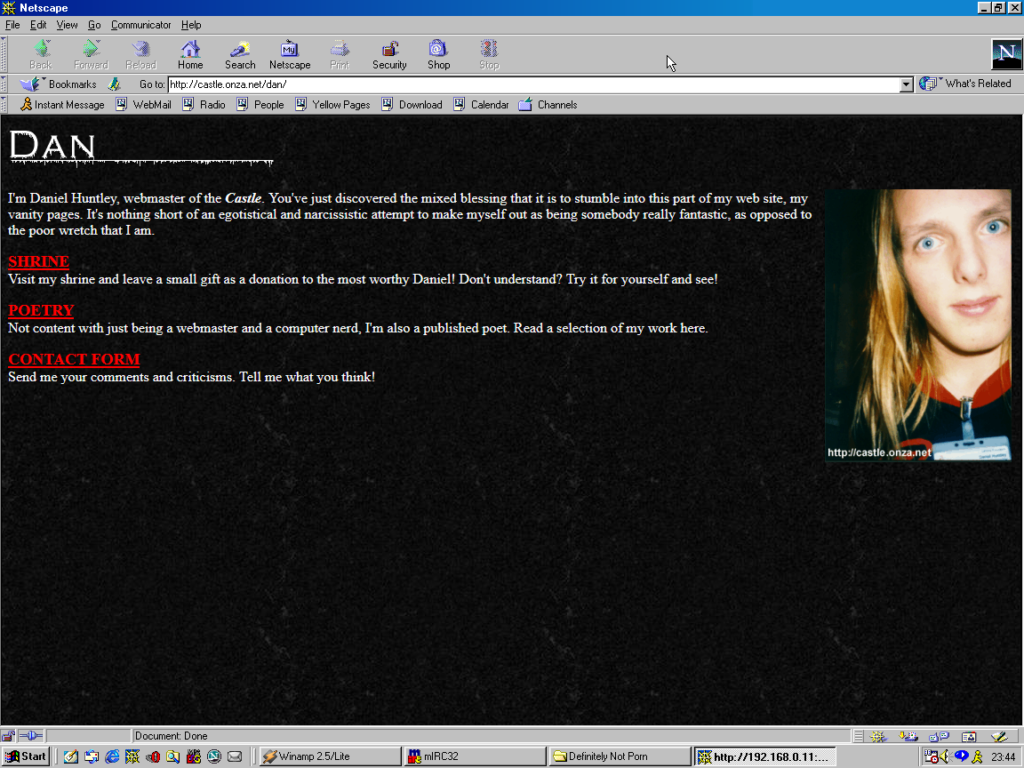

I just finished reading Incredible Doom volumes 1 and 2, by Matthew Bogart and Jesse Holden, and man… that was a heartwarming and nostalgic tale!

Set in the early-to-mid-1990s world in which the BBS is still alive and kicking, and the Internet’s gaining traction but still lacks the “killer app” that will someday be the Web (which is still new and not widely-available), the story follows a handful of teenagers trying to find their place in the world. Meeting one another in the 90s explosion of cyberspace, they find online communities that provide connections that they’re unable to make out in meatspace.

It touches on experiences of 90s cyberspace that, for many of us, were very definitely real. And while my online “scene” at around the time that the story is set might have been different from that of the protagonists, there’s enough of an overlap that it felt startlingly real and believable. The online world in which I – like the characters in the story – hung out… but which occupied a strange limbo-space: both anonymous and separate from the real world but also interpersonal and authentic; a frontier in which we were still working out the rules but within which we still found common bonds and ideals.

Anyway, this is all a long-winded way of saying that Incredible Doom is a lot of fun and if it sounds like your cup of tea, you should read it.

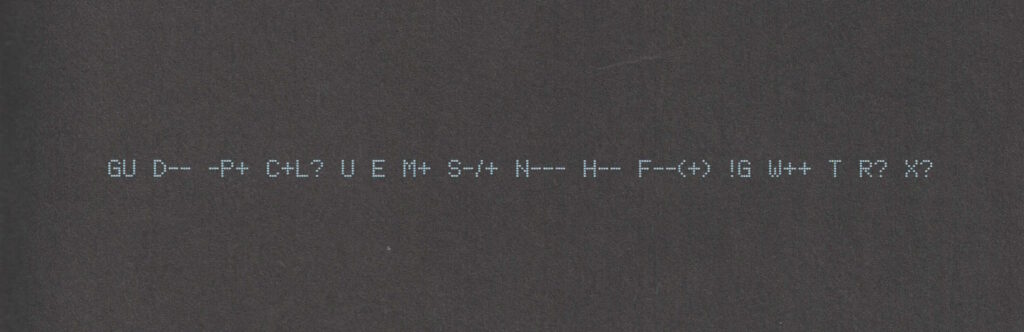

Also: shortly after putting the second volume down, I ended up updating my Geek Code for the first time in… ooh, well over a decade. The standards have moved on a little (not entirely in a good way, I feel; also they’ve diverged somewhat), but here’s my attempt:

    ----- BEGIN GEEK CODE VERSION 6.0 -----
    GCS^$/SS^/FS^>AT A++ B+:+:_:+:_ C-(--) D:+ CM+++ MW+++>++ ULD++ MC+ LRu+>++/js+/php+/sql+/bash/go/j/P/py-/!vb PGP++ G:Dan-Q E H+ PS++ PE++ TBG/FF+/RM+ RPG++ BK+>++ K!D/X+ R@ he/him!
    ----- END GEEK CODE VERSION 6.0 -----

1 I was amazed to discover that I could still remember most of my Geek Code syntax and only had to look up a few components to refresh my memory.

This post is also available as an article. So if you'd rather read a conventional blog post of this content, you can!

This video accompanies a blog post of the same title. The content is mostly the same; the blog post contains a few extra elements (especially in the footnotes!). Enjoy whichever one you choose.

Also available on YouTube and on Facebook.

This blog post is also available as a video. Would you prefer to watch/listen to me tell you about the video game that had the biggest impact on my life?

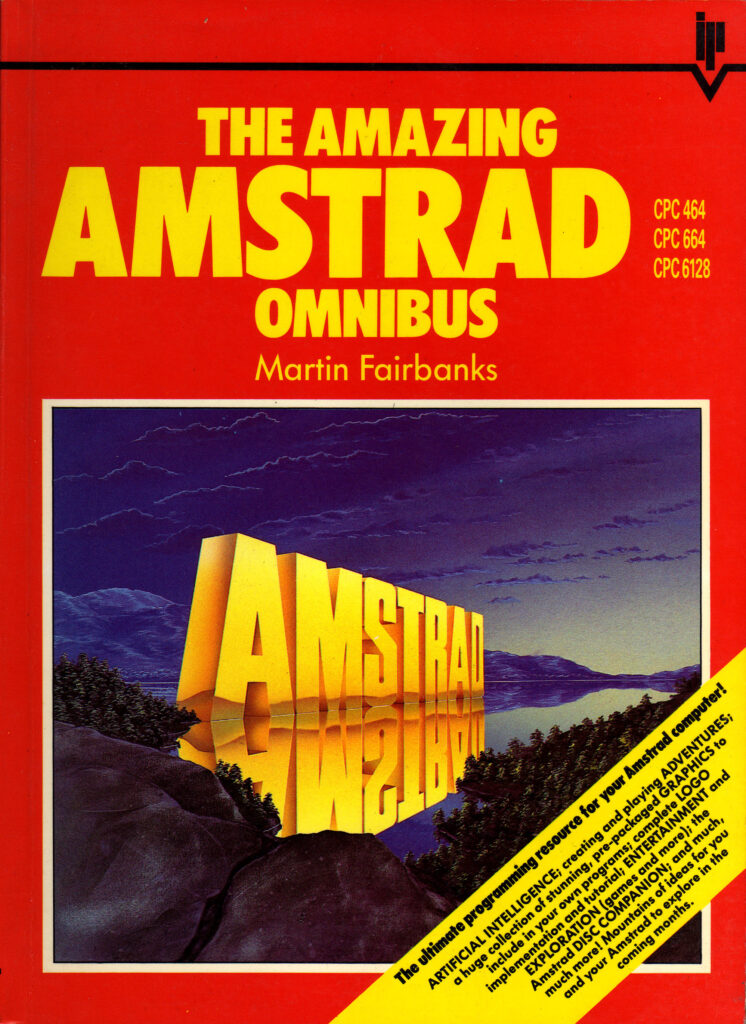

Of all of the videogames I’ve ever played, perhaps the one that’s had the biggest impact on my life1 was: Werewolves and (the) Wanderer.2

This simple text-based adventure was originally written by Tim Hartnell for use in his 1983 book Creating Adventure Games on your Computer. At the time, it was common for computing books and magazines to come with printed copies of program source code which you’d need to re-type on your own computer, printing being many orders of magnitude cheaper than computer media.3

I’d been working my way through the operating manual for our microcomputer, trying to understand it all.5

![Scan of a ring-bound page from a technical manual. The page describes the use of the "INPUT" command, saying "This command is used to let the computer know that it is expecting something to be typed in, for example, the answer to a question". The page goes on to provide a code example of a program which requests the user's age and then says "you look younger than [age] years old.", substituting in their age. The page then explains how it was the use of a variable that allowed this transaction to occur.](https://bcdn.danq.me/_q23u/2023/07/cpc664-manual-input-command.png)

[ENTER] at the end of each line.

In particular, I found myself comparing Werewolves to my first attempt at a text-based adventure. Using what little I’d grokked of programming so far, I’d put together

a series of passages (blocks of PRINT statements6)

with choices (INPUT statements) that sent the player elsewhere in the story (using, of course, the long-considered-harmful GOTO statement), Choose-Your-Own-Adventure style.

Werewolves was… better.

Werewolves and Wanderer was my first lesson in how to structure a program.

Let’s take a look at a couple of segments of code that help illustrate what I mean (here’s the full code, if you’re interested):

    10 REM WEREWOLVES AND WANDERER
    20 GOSUB 2600:REM INTIALISE
    30 GOSUB 160
    40 IF RO<>11 THEN 30
    50 PEN 1:SOUND 5,100:PRINT:PRINT "YOU'VE DONE IT!!!":GOSUB 3520:SOUND 5,80:PRINT "THAT WAS THE EXIT FROM THE CASTLE!":SOUND 5,200
    60 GOSUB 3520
    70 PRINT:PRINT "YOU HAVE SUCCEEDED, ";N$;"!":SOUND 5,100
    80 PRINT:PRINT "YOU MANAGED TO GET OUT OF THE CASTLE"
    90 GOSUB 3520
    100 PRINT:PRINT "WELL DONE!"
    110 GOSUB 3520:SOUND 5,80
    120 PRINT:PRINT "YOUR SCORE IS";
    130 PRINT 3*TALLY+5*STRENGTH+2*WEALTH+FOOD+30*MK:FOR J=1 TO 10:SOUND 5,RND*100+10:NEXT J
    140 PRINT:PRINT:PRINT:END
    ...
    2600 REM INTIALISE
    2610 MODE 1:BORDER 1:INK 0,1:INK 1,24:INK 2,26:INK 3,18:PAPER 0:PEN 2
    2620 RANDOMIZE TIME
    2630 WEALTH=75:FOOD=0
    2640 STRENGTH=100
    2650 TALLY=0
    2660 MK=0:REM NO. OF MONSTERS KILLED
    ...
    3510 REM DELAY LOOP
    3520 FOR T=1 TO 900:NEXT T
    3530 RETURN

...) have been added for readability/your convenience.

What’s interesting about the code above? Well…

GOSUB statements):

2610), the RNG (2620), and player characteristics (2630 – 2660). This also makes it easy to call it again (e.g. if the player is given the option to “start over”). This subroutine goes on to set up the adventure map (more on that later).

160: this is the “main game” logic. After it runs, each time, line 40 checks IF RO<>11 THEN 30. This tests whether the player’s location (RO) is room 11: if so, they’ve exited the castle and won the adventure. Otherwise, flow returns to line 30 and the “main game” subroutine happens again. This broken-out loop improves the readability and maintainability of the code.8

3520). It just counts to 900! On a known (slow) processor of fixed speed, this is a simpler way to put a delay in than relying on a real-time clock.
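For comparison, here’s a rough sketch of that same shape in Python. It’s an illustration, not a port: the function names are my own invention, play_turn is a hypothetical stand-in for the subroutine at 160 (which isn’t shown above), and the starting room of 6 is an assumption inferred from the rooms the setup code skips.

```python
import time

EXIT_ROOM = 11  # line 40: the adventure ends once RO = 11


def initialise():
    """Counterpart of the subroutine at 2600: returns fresh player state."""
    return {
        "room": 6,  # assumption: the excerpt never shows RO being set
        "wealth": 75,
        "food": 0,
        "strength": 100,
        "tally": 0,
        "monsters_killed": 0,
    }


def delay(seconds=0.0):
    """Counterpart of the delay loop at 3520, using a real clock instead."""
    time.sleep(seconds)


def play_turn(state):
    """Hypothetical stand-in for the "main game" subroutine at 160.

    It simply walks the player one room closer to the exit each turn.
    """
    state["room"] += 1 if state["room"] < EXIT_ROOM else -1
    state["tally"] += 1


def score(state):
    """The scoring formula from line 130."""
    return (3 * state["tally"] + 5 * state["strength"] + 2 * state["wealth"]
            + state["food"] + 30 * state["monsters_killed"])


def run_game():
    state = initialise()               # line 20
    while state["room"] != EXIT_ROOM:  # lines 30 and 40
        play_turn(state)
    delay()                            # the pauses between victory messages
    return score(state)                # lines 120 and 130
```

Because initialise() hands back a brand new state each time, calling run_game() twice behaves like the “start over” option mentioned above.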

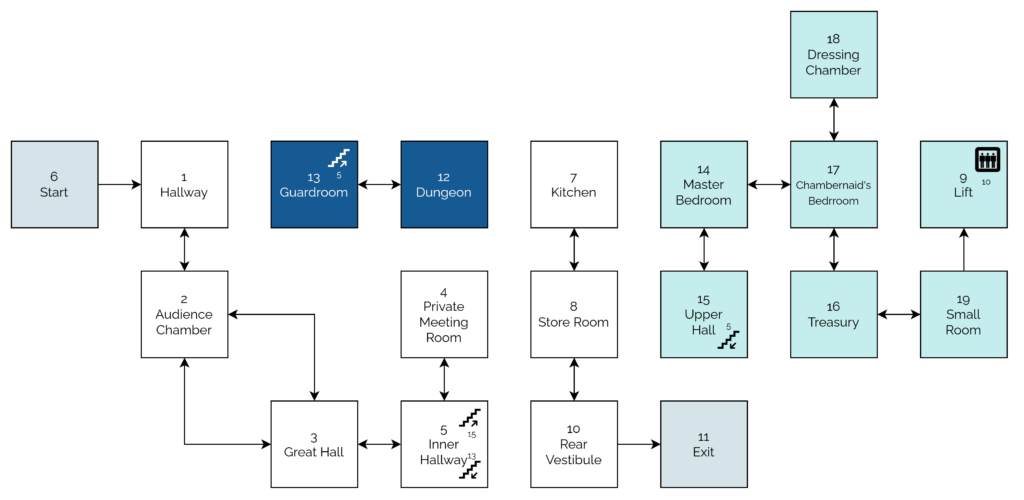

The game setup gets more interesting still when it comes to setting up the adventure map. Here’s how it looks:

    2680 REM SET UP CASTLE
    2690 DIM A(19,7):CHECKSUM=0
    2700 FOR B=1 TO 19
    2710 FOR C=1 TO 7
    2720 READ A(B,C):CHECKSUM=CHECKSUM+A(B,C)
    2730 NEXT C:NEXT B
    2740 IF CHECKSUM<>355 THEN PRINT "ERROR IN ROOM DATA":END
    ...
    2840 REM ALLOT TREASURE
    2850 FOR J=1 TO 7
    2860 M=INT(RND*19)+1
    2870 IF M=6 OR M=11 OR A(M,7)<>0 THEN 2860
    2880 A(M,7)=INT(RND*100)+100
    2890 NEXT J
    2910 REM ALLOT MONSTERS
    2920 FOR J=1 TO 6
    2930 M=INT(RND*18)+1
    2940 IF M=6 OR M=11 OR A(M,7)<>0 THEN 2930
    2950 A(M,7)=-J
    2960 NEXT J
    2970 A(4,7)=100+INT(RND*100)
    2980 A(16,7)=100+INT(RND*100)
    ...
    3310 DATA 0, 2, 0, 0, 0, 0, 0
    3320 DATA 1, 3, 3, 0, 0, 0, 0
    3330 DATA 2, 0, 5, 2, 0, 0, 0
    3340 DATA 0, 5, 0, 0, 0, 0, 0
    3350 DATA 4, 0, 0, 3, 15, 13, 0
    3360 DATA 0, 0, 1, 0, 0, 0, 0
    3370 DATA 0, 8, 0, 0, 0, 0, 0
    3380 DATA 7, 10, 0, 0, 0, 0, 0
    3390 DATA 0, 19, 0, 8, 0, 8, 0
    3400 DATA 8, 0, 11, 0, 0, 0, 0
    3410 DATA 0, 0, 10, 0, 0, 0, 0
    3420 DATA 0, 0, 0, 13, 0, 0, 0
    3430 DATA 0, 0, 12, 0, 5, 0, 0
    3440 DATA 0, 15, 17, 0, 0, 0, 0
    3450 DATA 14, 0, 0, 0, 0, 5, 0
    3460 DATA 17, 0, 19, 0, 0, 0, 0
    3470 DATA 18, 16, 0, 14, 0, 0, 0
    3480 DATA 0, 17, 0, 0, 0, 0, 0
    3490 DATA 9, 0, 16, 0, 0, 0, 0

DATA statements form a “table”.

What’s this code doing?

Line 2690 defines an array (DIM) with two dimensions9 (19 by 7). This will store room data, an approach that allows code to be shared between all rooms: much cleaner than my first attempt at an adventure with each room having its own INPUT handler.

Lines 2700 through 2730 populate the room data from the DATA blocks. Nowadays you’d probably put that data in a separate file (probably JSON!). Each “row” represents a room, 1 to 19. Each of the first six “columns” represents the room you end up at if you travel in a given direction: North, South, East, West, Up, or Down. The seventh column – always zero – represents whether a monster (negative number) or treasure (positive number) is found in that room. This column perhaps needn’t have been included: I imagine it’s a holdover from some previous version in which the locations of some or all of the treasures or monsters were hard-coded.

The loop beginning on line 2850 selects seven rooms and adds a random amount of treasure to each. The loop beginning on line 2920 places each of six monsters (numbered -1 through -6) in randomly-selected rooms. In both cases, the start and finish rooms, and any room that already has a treasure or monster, are ineligible. When my 8-year-old self finally deciphered what was going on I was awestruck at this simple approach to making the game dynamic.

2970 – 2980), replacing any treasure or monster already there: the Private Meeting Room (always worth a diversion!) and the Treasury, respectively.

The checksum on line 2740 is cute, and a younger me appreciated deciphering it. I’m not convinced it’s necessary (it sums all of the values in the DATA statements and expects 355 to limit tampering) though, or even useful: it certainly makes it harder to modify the rooms, which may undermine the code’s value as a teaching aid!
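To make the table-driven approach concrete, here’s a rough Python sketch of the same setup. The room data is copied from the DATA statements above; everything else (the names, the padding row, the injectable random number generator) is my own illustration rather than anything from Hartnell’s listing.

```python
import random

# The 19 DATA rows from lines 3310-3490: N, S, E, W, Up, Down, contents.
ROOM_DATA = [
    [0, 2, 0, 0, 0, 0, 0], [1, 3, 3, 0, 0, 0, 0], [2, 0, 5, 2, 0, 0, 0],
    [0, 5, 0, 0, 0, 0, 0], [4, 0, 0, 3, 15, 13, 0], [0, 0, 1, 0, 0, 0, 0],
    [0, 8, 0, 0, 0, 0, 0], [7, 10, 0, 0, 0, 0, 0], [0, 19, 0, 8, 0, 8, 0],
    [8, 0, 11, 0, 0, 0, 0], [0, 0, 10, 0, 0, 0, 0], [0, 0, 0, 13, 0, 0, 0],
    [0, 0, 12, 0, 5, 0, 0], [0, 15, 17, 0, 0, 0, 0], [14, 0, 0, 0, 0, 5, 0],
    [17, 0, 19, 0, 0, 0, 0], [18, 16, 0, 14, 0, 0, 0], [0, 17, 0, 0, 0, 0, 0],
    [9, 0, 16, 0, 0, 0, 0],
]
EXPECTED_CHECKSUM = 355          # line 2740
START_ROOM, EXIT_ROOM = 6, 11    # the rooms lines 2870 and 2940 always skip
CONTENTS = 6                     # the BASIC's seventh column, zero-indexed


def build_castle(rng=random):
    # Row 0 is padding so rooms can be indexed 1-19, as in the BASIC.
    castle = [[0] * 7] + [row[:] for row in ROOM_DATA]
    if sum(map(sum, castle)) != EXPECTED_CHECKSUM:
        raise ValueError("ERROR IN ROOM DATA")

    def pick_empty_room(highest):
        # Keep re-rolling until we land on an eligible, empty room.
        while True:
            m = rng.randint(1, highest)
            if m not in (START_ROOM, EXIT_ROOM) and castle[m][CONTENTS] == 0:
                return m

    for _ in range(7):       # lines 2850-2890: seven treasures worth 100-199
        castle[pick_empty_room(19)][CONTENTS] = rng.randint(100, 199)
    for j in range(1, 7):    # lines 2920-2960: monsters -1 through -6
        castle[pick_empty_room(18)][CONTENTS] = -j
    # Lines 2970-2980: rooms 4 and 16 always end up holding treasure.
    castle[4][CONTENTS] = rng.randint(100, 199)
    castle[16][CONTENTS] = rng.randint(100, 199)
    return castle
```

Passing in a seeded generator, e.g. build_castle(random.Random(42)), gives a repeatable castle, which is handy for testing. Note that the monster loop only rolls 1 to 18, mirroring INT(RND*18)+1 on line 2930, so room 19 can never be given a monster.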

Something you might notice is missing is the room descriptions. Arrays in this language are strictly typed: this array can only contain integers and not strings. But there are other reasons: line length limitations would have required trimming some of the longer descriptions. Also, many rooms have dynamic content, usually based on random numbers, which would be challenging to implement in this way.

As a child, I did once try to refactor the code so that an eighth column of data specified the line number to which control should pass to display the room description. That’s

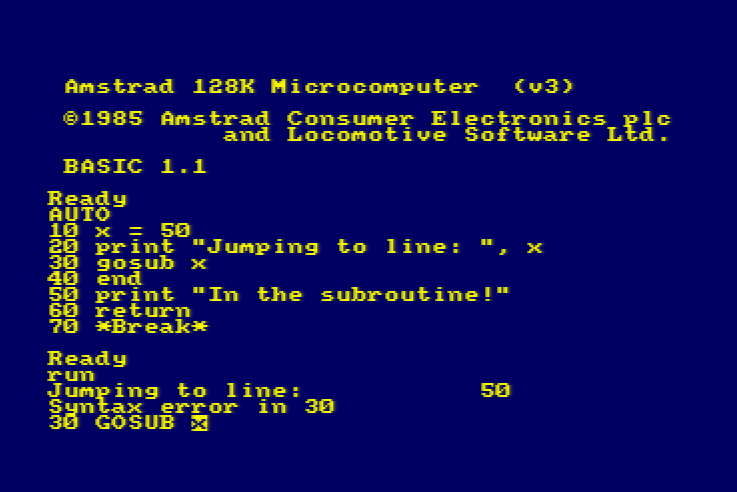

a bit of a no-no from a “mixing data and logic” perspective, but a cool example of metaprogramming before I even knew it! This didn’t work, though: it turns out you can’t pass a

variable to a Locomotive BASIC GOTO or GOSUB. Boo!10
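In a language with first-class functions, that “eighth column” idea turns into a lookup table from room number to a description routine. Here’s a minimal Python sketch of the idea; the description text and function names are placeholders I’ve made up, and only the two room names come from the discussion above.

```python
import random


def describe_private_meeting_room(rng):
    return "THIS IS THE PRIVATE MEETING ROOM."  # placeholder text


def describe_treasury(rng):
    # Hypothetical example of the random, per-visit content that made
    # descriptions hard to store as plain data.
    glint = rng.choice(["GOLD", "SILVER"])
    return f"YOU ARE IN THE TREASURY. SOMETHING {glint} GLINTS."


# Room number -> routine: the role my eighth column was meant to play.
DESCRIBERS = {
    4: describe_private_meeting_room,
    16: describe_treasury,
}


def describe(room, rng=random):
    handler = DESCRIBERS.get(room)
    if handler is None:
        return f"YOU ARE IN ROOM {room}."  # fallback for unlisted rooms
    return handler(rng)
```

The dictionary keeps the “which routine handles which room” mapping as data, while the routines themselves stay as code, which sidesteps most of the data-and-logic mixing.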

Werewolves and Wanderer has many faults11. But I’m clearly not the only developer whose early skills were honed and improved by this game, or who holds a special place in their heart for it. Just while writing this post, I discovered:

A decade or so later, I’d be taking my first steps as a professional software engineer. A couple more decades later, I’m still doing it.

And perhaps that adventure – the one that’s occupied my entire adult life – was facilitated by this text-based one from the 1980s.

1 The game that had the biggest impact on my life, it might surprise you to hear, is not among the “top ten videogames that stole my life” that I wrote about almost exactly 16 years ago nor the follow-up list I published in its incomplete form three years later. Turns out that time and impact are not interchangeable. Who knew?

2 The game is variously known as Werewolves and Wanderer, Werewolves and Wanderers, or Werewolves and the Wanderer. Or, on any system I’ve been on, WERE.BAS, WEREWOLF.BAS, or WEREWOLV.BAS, thanks to the CPC’s eight-point-three filename limit.

3 Additionally, it was thought that having to undertake the (painstakingly tiresome) process of manually re-entering the source code for a program might help teach you a little about the code and how it worked, although this depended very much on how readable the code and its comments were. Tragically, the more comprehensible some code is, the more long-winded the re-entry process.

4 The CPC’s got a fascinating history in its own right, but you can read that any time.

5 One of my favourite features of home microcomputers was that seconds after you turned them on, you could start programming. Your prompt was an interface to a programming language. That magic had begun to fade by the time DOS came to dominate (sure, you can program using batch files, but they’re neither as elegant nor sophisticated as any BASIC dialect) and was completely lost by the era of booting directly into graphical operating systems. One of my favourite features about the Web is that it gives you some of that magic back again: thanks to the debugger in a modern browser, you can “tinker” with other people’s code once more, right from the same tool you load up every time. (Unfortunately, mobile devices – which have fast become the dominant way for people to use the Internet – have reversed this trend again. Try to View Source on your mobile – if you don’t already know how, it’s not an easy job!)

6 In particular, one frustration I remember from my first text-based adventure was that I’d been unable to work around Locomotive BASIC’s lack of string escape sequences – not that I yet knew what such a thing would be called – in order to put quote marks inside a quoted string!

7 “Screen editors” is what we initially called what you’d nowadays call a “text editor”: an application that lets you see a whole page of text at once, move your cursor about the place, and insert text wherever you feel like. It may also provide features like copy/paste and optional overtyping. Screen editors require more resources (and aren’t suitable for use on a teleprinter) compared to line editors, which preceded them. Line editors only let you view and edit a single line at a time, which is how most of my first 6 years of programming was done.

8 In a modern programming language, you might use while true or similar for a main game loop, but this requires pushing the “outside” position to the stack… and early BASIC dialects often had strict (and small, by modern standards) limits on stack height that would have made this a risk compared to simply calling a subroutine from one line and then jumping back to that line on the next.

9 A neat feature of Locomotive BASIC over many contemporary and older BASIC dialects was its support for multidimensional arrays. A common feature in modern programming languages, this language feature used to be pretty rare, and programmers had to do bits of division and modulus arithmetic to work around the limitation… which, I can promise you, becomes painful the first time you have to deal with an array of three or more dimensions!

10 In reality, this was rather unnecessary, because the ON x GOSUB command can – and does, in this program – accept multiple jump points and selects the one referenced by the variable x.

11 Aside from those mentioned already, other clear faults include: impenetrable controls unless you’ve been given instructions (although that was the way at the time); the shopkeeper will penalise you for trying to spend money you don’t have, except on food, presumably as a result of programmer laziness; you can lose your flaming torch, but you can’t buy spares in advance (you can pay for more, and you lose the money, but you don’t get a spare); some of the line spacing is sometimes a little wonky; combat’s a bit of a drag; lack of feedback to acknowledge the command you entered and that it was successful; WHAT’S WITH ALL THE CAPITALS; some rooms don’t adequately describe their exits; the map is a bit linear; etc.

This is a repost promoting content originally published elsewhere. See more things Dan's reposted.

This was a delightful vlog. It really adds personality to what might otherwise have been a story only about technology and history.

I subscribed to Codex’s vlog like… four years ago? He went dark soon afterwards, but thanks to the magic of RSS, I got notified as soon as he came back from his hiatus.

The week before last I had the opportunity to deliver a “flash talk” of up to 4 minutes duration at a work meetup in Vienna, Austria. I opted to present a summary of what I’ve learned while adding support for Finger and Gopher protocols to the WordPress installation that powers DanQ.me (I also hinted at the fact that I already added Gemini and Spring ’83 support, and I’m looking at other protocols). If you’d like to see how it went, you can watch my flash talk here or on YouTube.

If you love the idea of working from wherever-you-are but occasionally meeting your colleagues in person for fabulous in-person events with (now optional) flash talks like this, you might like to look at Automattic’s recruitment pages…

The presentation is a shortened, Automattic-centric version of a talk I’ll be delivering tomorrow at Oxford Geek Nights #53; so if you’d like to see it in-person and talk protocols with me over a beer, you should come along! There’ll probably be blog posts to follow with a more-detailed look at the how-and-why of using WordPress as a CMS not only for the Web but for a variety of zany, clever, retro, and retro-inspired protocols down the line, so perhaps consider the video above a “teaser”, I guess?

You don’t really see it any more, but: if you downloaded some media player software a couple of decades ago, it’d probably appear in a weird-shaped window, and I’ve never understood why.

Mostly, these designs are… pretty ugly. And for what? It’s also worth noting that this kind of design can be found in all kinds of applications, in media players that

it was almost ubiquitous.

You might think that they’re an overenthusiastic kind of skeuomorphic design: people trying to make these players look like their physical analogues. But hardware players were still pretty boxy-looking at this point, because of the limitations of their data storage1. By the time flash memory-based portable MP3 players became commonplace their design was copying software players, not the other way around.

So my best guess is that these players were trying to stand out as highly-visible. Like: they were things you’d want to occupy a disproportionate amount of desktop space. Maybe other people were listening to music differently than me… but for me, back when screen real estate was at such a premium2, a music player’s job was to be small, unintrusive, and out-of-the-way.

It’s a mystery to me why anybody would (or still does) make media player software or skins for them that eat so much screen space, frequently looking ugly while they do so, only to look like a hypothetical hardware device that wouldn’t actually become commonplace until years after this kind of player design premiered!

Maybe other people listened to music on their computer differently from me: putting it front and centre, not using their computer for other tasks at the same time. And maybe for these people the choice of player and skin was an important personalisation feature; a fashion statement or a way to show off their personal identity. But me? I didn’t get it then, and I don’t get it now. I’m glad that this particular trend seems to have died and windows are, for the most part, rounded rectangles once more… even for music player software!

1 A walkman, minidisc player, or hard drive-based digital music device is always going to look somewhat square because of what’s inside.

2 I “only” had 1600 × 1200 (UXGA) pixels on the very biggest monitor I owned before I went widescreen, and I spent a lot of time on monitors at lower resolutions e.g. 1024 × 768 (XGA); on such screens, wasting space on a music player when you’re mostly going to be listening “in the background” while you do something else seemed frivolous.

The finger protocol, first standardised way back in 1977, is a lightweight directory system for querying resources on a local or remote shared system. Despite barely being used today, it’s so well-established that virtually every modern desktop operating system – Windows, MacOS, Linux etc. – comes with a copy of finger, giving it a similar ubiquity to web browsers! (If you haven’t yet, give it a go.)

If you were using a shared UNIX-like system in the 1970s through 1990s, you might run finger to see who else was logged on at the same time as you, finger chris to get more information about Chris, or finger alice@example.net to look up the details of Alice on the server example.net. Its ability to transcend the boundaries of different systems meant that it was, after a fashion, an example of an early decentralised social network!
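Under the hood there’s very little to it: the client opens a TCP connection to port 79 on the target host, sends the requested name followed by a line break, and reads until the server hangs up. Here’s a minimal Python sketch of such a client. The function names are my own, and the localhost default for a bare username is an assumption made for illustration.

```python
import socket

FINGER_PORT = 79  # the well-known TCP port for finger


def parse_target(target, default_host="localhost"):
    """Split 'alice@example.net' into ('alice', 'example.net').

    A bare 'chris' means a user on default_host; '@example.net' means
    "list whoever is logged in" on that host.
    """
    user, sep, host = target.rpartition("@")
    if not sep:
        return target, default_host
    return user, host


def finger(target, timeout=10.0):
    """Send a finger query and return the server's reply as text."""
    user, host = parse_target(target)
    with socket.create_connection((host, FINGER_PORT), timeout=timeout) as conn:
        conn.sendall(user.encode("ascii") + b"\r\n")
        chunks = []
        while True:
            data = conn.recv(4096)
            if not data:  # the server closes the connection when it's done
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")
```

With that in place, print(finger("alice@example.net")) would do roughly what the command-line tool does, minus the niceties.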

I first actively used finger when I was a student at Aberystwyth University. The shared central computers osfa and osfb supported it in what was a pretty typical way: users could add a .plan and/or .project file to their home directory and the contents of these would be output to anybody using finger to look up that user, along with other information like what department they belonged to. I’m simulating from memory so this won’t be remotely accurate, but broadly speaking it looked a little like this –

$ finger dlq9@aber.ac.uk

Login: dlq9 Name: Dan Q

Directory: /users/9/d/dlq9 Department: Computer Science

Project:

Working on my BEng Software Engineering.

Plan:

_______

---' ____)____

______) Finger me!

_____)

(____)

---.__(___)

It’s not just about a directory of people, though: you could finger printers to see what their queues were like, finger a time server to ask what time it was, finger a vending machine to see what drinks it had available… even finger for a weather forecast where you are (this one still works as shown below; try it for your own location!) –

    $ finger oxford@graph.no
    -= Meteogram for Oxford, Oxfordshire, England, United Kingdom =-
    'C Rain (mm)
    12
    11
    10 ^^^=--=--
    9^^^ ===
    8 ^^^=== ====== ^^^
    7 ====== ===============^^^ =--
    6 =--=-----
    5
    4
    3 | | | | | | | 1 mm
    17 18 19 20 21 22 23 18/11 02 03 04 05 06 07_08_09_10_11_12_13_14 Hour
    W W W W W W W W W W W W W W W W W W W W W W Wind dir.
    6 6 7 7 7 7 7 7 6 6 6 5 5 4 4 4 4 5 6 6 5 5 Wind(m/s)
    Legend left axis: - Sunny ^ Scattered = Clouded =V= Thunder # Fog
    Legend right axis: | Rain ! Sleet * Snow

If you’d just like to play with finger, then finger.farm is a great starting point. They provide free finger hosting and they’re easy to use (try finger dan@finger.farm to find me!). But I had something bigger in mind…

What if you could finger my blog? I.e. if you ran finger blog@danq.me you’d see a summary of some of my recent posts, along with additional addresses you could finger to read the full content of each. This could be the world’s first finger-to-WordPress gateway; y’know, for if you thought the world needed such a thing. Here’s how I did it:

Installed efingerd; I’m using the Debian binaries.

Opened the finger port in the firewall (ufw allow 79; ufw reload).

efingerd acts like a “typical” finger server, but it’s highly programmable to make it “smarter”. I:

Emptied /etc/efingerd/list to prevent any output from “listing” the server (finger @danq.me).

Replaced /etc/efingerd/luser and /etc/efingerd/nouser (which are run when a request matches, or doesn’t match, a user account name) with a call to my script: /usr/local/bin/finger-to-wordpress "$3". $3 holds the username that was requested, so we can act on it.

Wrote /usr/local/bin/finger-to-wordpress – a Ruby program that either (a) lists a selection of posts or (b) returns a specific post (stripping the HTML tags).
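To give a flavour of what a script like that has to do, here's a rough Ruby sketch of the two behaviours: list some posts, or return one post as plain text. It is emphatically not the real finger-to-wordpress. The in-memory POSTS array stands in for WordPress, the numeric "post ID as username" addressing scheme is a guess, and the regex-based tag stripper is a crude shortcut rather than a proper HTML parser.

```ruby
require "cgi"

# HYPOTHETICAL sample data standing in for posts fetched from WordPress.
POSTS = [
  { id: 101, title: "First post",  html: "<p>Hello &amp; <em>welcome</em>!</p>" },
  { id: 102, title: "Second post", html: "<p>More <a href=\"/x\">words</a>.</p>" }
].freeze

# Crude plain-text conversion: drop anything that looks like a tag,
# then decode HTML entities such as &amp;.
def strip_html(html)
  CGI.unescapeHTML(html.gsub(/<[^>]*>/, "")).strip
end

# "blog" lists the posts; a post ID returns that post; anything else is
# an error. The ID-based addresses are an assumption of this sketch.
def respond_to_finger(username, posts: POSTS)
  if username == "blog"
    posts.map { |post| "#{post[:title]}: finger #{post[:id]}@danq.me" }.join("\n")
  elsif (match = posts.find { |post| post[:id].to_s == username })
    "#{match[:title]}\n\n#{strip_html(match[:html])}"
  else
    "No such user or post."
  end
end

# efingerd would pass the requested username as the first argument.
puts respond_to_finger(ARGV[0]) if ARGV[0]
```

Because efingerd simply relays whatever the script prints, the whole "gateway" boils down to mapping a requested username onto some text.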

In future, I might use some extra tags or metadata to enhance finger-friendly WordPress posts. The infrastructure’s in place already (I already have tags that I use to make certain kinds of content available only via certain media – shh!). You might rightly ask what the point is of this entire enterprise, of course, and you’d be well within your rights to ask such a question. But I think the best answer available is “because Dan”.

If you want to see my blog in a whole new way, give it a go: run finger blog@danq.me on your computer and follow the instructions.

This post is also available as a podcast. Listen here, download for later, or subscribe wherever you consume podcasts.

Somebody shared with me a tweet about the tragedy of being a Gen X’er and having to buy all your music again and again as formats evolve. Somebody else shared with me Kyla La Grange’s cover of a particular song. Together… these reminded me that I’ve never told you the story of my first MP3…[1]

In the Summer of 1995 I bought the CD single of the (still excellent!) Set You Free by N-Trance.[2] I’d heard about this new-fangled “MP3” audio format, so soon afterwards I decided to rip a copy of the song to my PC.

I was using a 66MHz 486SX CPU, and without an embedded FPU I didn’t quite have the spare processing power to rip-and-encode in a single pass.[3] So instead I first ripped to an uncompressed PCM .wav file and then performed the encoding: the former step was done almost in real-time (I listened to the track as it ripped!), taking about 7 minutes. The latter step took about 20 minutes.

So… about half an hour in total, to rip a single song.

Creating what would now be considered an appalling 32kHz mono-channel file meant that I briefly stored both a 27MB wave file and the final ~4MB MP3 file. 31MB might not sound huge, but I only had a total of 145MB of hard drive space at the time, so 31MB consumed over a fifth of my entire fixed storage! Even after deleting the intermediary wave file I was left with a single song consuming around 3% of my space, which is mind-boggling to think about in hindsight.
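Those figures hang together if you assume 16-bit samples and a track of exactly seven minutes (both assumptions; neither is stated outright). A quick back-of-the-envelope check in Ruby, using decimal megabytes, gives a wave file of roughly 27MB, the pair of files taking up a little over 21% of a 145MB disk, the MP3 alone under 3%, and an implied MP3 bitrate somewhere around 76kbps:

```ruby
SAMPLE_RATE_HZ   = 32_000  # from the story: 32kHz
CHANNELS         = 1       # from the story: mono
BYTES_PER_SAMPLE = 2       # ASSUMPTION: 16-bit PCM
TRACK_SECONDS    = 7 * 60  # ASSUMPTION: "about 7 minutes" taken as exactly 7
MP3_MB           = 4.0     # from the story: ~4MB
DISK_MB          = 145.0   # from the story: 145MB of hard drive space

# Uncompressed PCM size = rate x channels x bytes per sample x duration.
def wav_megabytes
  SAMPLE_RATE_HZ * CHANNELS * BYTES_PER_SAMPLE * TRACK_SECONDS / 1_000_000.0
end

# Percentage of the whole disk that a file of the given size occupies.
def share_of_disk(megabytes)
  megabytes / DISK_MB * 100
end

# Average bitrate implied by the MP3's size: megabytes -> kilobits, per second.
def mp3_kbps
  MP3_MB * 8 * 1000 / TRACK_SECONDS
end

puts format("WAV size:            %.2f MB", wav_megabytes)
puts format("WAV + MP3 on disk:   %.1f%%", share_of_disk(wav_megabytes + MP3_MB))
puts format("MP3 alone on disk:   %.1f%%", share_of_disk(MP3_MB))
puts format("Implied MP3 bitrate: %.0f kbps", mp3_kbps)
```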

But it felt like magic. I called my friend Gary to tell him about it. “This is going to be massive!” I said. At the time, I meant for techy people: I could imagine a future in which, with more hard drive space, I’d keep all my music this way… or else bundle entire artists onto writable CDs in this new format, making albums obsolete. I never considered that over the coming decade or so the format would enter the public consciousness, let alone that it’d take off like it did.

The MP3 file I produced had a fault. Most of the way through the encoding process, I got bored and ran another program, and this must’ve interfered with the stream because there was an audible “blip” noise about 30 seconds from the end of the track. You’d have to be listening carefully to hear it, or else know what you were looking for, but it was there. I didn’t want to go through the whole process again, so I left it.

But that artefact uniquely identified that copy of what was, in the end, a popular song to have in your digital music collection. As the years went by and I traded MP3 files in bulk at LAN parties or on CD-Rs or, on at least one occasion, on an Iomega Zip disk (remember those?), I’d occasionally see N-Trance - (Only Love Can) Set You Free.mp3[4] being passed around and play it, to see if it was “my” copy.

Sometimes the ID3 tags had been changed because, for example, the previous owner had decided it deserved to be considered Genre: Dance instead of Genre: Trance.[5] But I could still identify that file because of the audio fingerprint, distinct to the first MP3 I ever created.

I still had that file when I went to university (where it occupied a smaller proportion of my hard drive space) and hearing that distinctive “blip” would remind me about the ordeal that was involved in its creation. I don’t have it any more, but perhaps somebody else still does.

[1] I might never have told this story on my blog, but eagle-eyed readers may remember that I’ve certainly hinted at it before now.

[2] Rewatching that music video, I’m struck by a recollection of how crazy popular crossfades were on 1990s dance music videos. More than just a transition, I’m pretty sure that most of the frames of that video are mid-crossfade: it feels like I’m watching Kelly Llorenna hanging out of a sunroof but I accidentally left one of my eyeballs in a smoky nightclub and can still see out of it as well.

[3] I initially tried to convert directly from Red Book format to an MP3 file, but the encoding process was too slow and the CD drive’s buffer filled up and didn’t get drained by the processor, which was still presumably bogged down with framing or Fourier-transforming earlier parts of the track. The CD drive reasonably assumed that it wasn’t actually being used and spun-down the drive motor, and this caused it to lose its place in the track, killing the whole process and leaving me with about a 40 second recording.

[4] Yes, that filename isn’t quite the correct title. I was wrong.

[5] No, it’s clearly trance. They were wrong.

I got lost on the Web this week, but it was harder than I’d have liked.

There was a discussion this week in the Abnib WhatsApp group about whether a particular illustration of a farm was full of phallic imagery (it was). This left me wondering if anybody had ever tried to identify the most-priapic buildings in the world. Of course towers often look at least a little bit like their architects were compensating for something, but some – like the Ypsilanti Water Tower in Michigan pictured above – go further than others.

I quickly found the Wikipedia article for the Most Phallic Building Contest in 2003, so that was my jumping-off point. It’s easy enough to get lost on Wikipedia alone, but sometimes you feel the need for a primary source. I was delighted to discover that the web pages for the Most Phallic Building Contest are still online 18 years after the competition ended!

Link rot is a serious problem on the Web, to such an extent that it’s pleasing when it isn’t present. The other year, for example, I revisited a post I wrote in 2004 and was pleased to find that a linked 2003 article by Nicholas ‘Aquarion’ Avenell is still alive at its original address! Contrast Jonathan Ames, the author/columnist/screenwriter who created the Most Phallic Building Contest: he kept his site and blog going until as late as 2011 before eventually letting them lapse and fall off the Internet. It takes effort to keep Web content alive, but it’s worth more effort than it’s sometimes given.

Anyway: a shot tower in Bristol – a part of the UK with a long history of leadworking – was among the latecomer entrants to the competition, and seeing this curious building reminded me about something I’d read, once, about the manufacture of lead shot. The idea (invented in Bristol by a plumber called William Watts) is that you pour molten lead through a sieve at the top of a tower, let surface tension pull it into spherical drops as it falls, and eventually catch it in a cold water bath to finish solidifying it. I’d seen an animation of the process, but I’d never seen a video of it, so I went about finding one.

British Pathé’s YouTube Channel provided me with this 1950 film, and if you follow only one hyperlink from this article, let it be this one! It’s well-shot (pun intended, but there’s a worse pun in the video!), and while I needed to translate all of the references to “hundredweights” and “Fahrenheit” to measurements that I can actually understand, it’s thoroughly informative.

But there’s a problem with that video: it’s been badly cut from whatever reel it was originally found on, and from about 1 minute and 38 seconds in it switches to what is clearly a very different film! A mother is seen shepherding her young daughter off to bed, and a voiceover says:

Bedtime has a habit of coming round regularly every night. But for all good parents responsibility doesn’t end there. It’s just the beginning of an evening vigil, ears attuned to cries and moans and things that go bump in the night. But there’s no reason why those ears shouldn’t be your neighbours ears, on occasion.

Now my interest’s piqued. What was this short film going to be about, and where could I find it? There’s no obvious link; YouTube doesn’t even make it easy to find the video uploaded “next” by a given channel. I manipulated some search filters on British Pathé’s site until I eventually hit upon the right combination of magic words and found a clip called Radio Baby Sitter. It starts off exactly where the misplaced prior clip cut out, and tells the story of “Mr. and Mrs. David Hurst, Green Lane, Coventry”, who put a microphone by their daughter’s bed and ran a wire through the wall to their neighbours’ radio’s speaker so they can babysit without coming over for the whole evening.

It’s a baby monitor, although not strictly a radio one as the title implies (it uses a signal wire!), nor is it groundbreakingly innovative: the first baby monitor predates it by over a decade, and it actually did use radio waves! Still, it’s a fun watch, complete with its contemporary fashion, technology, and social structures. Here’s the full thing, re-merged for your convenience:

Wait, what was I trying to do when I started, again? What was I even talking about…

It used to be easier than this to get lost on the Web, and sometimes I miss that.

Obviously if you go back far enough this is true. Back when search engines were much weaker and Internet content was much less homogeneous and more distributed, we used to engage in this kind of meandering walk all the time: we called it “surfing” the Web. Second-generation Web browsers even had names, pretty often, evocative of this kind of experience: Mosaic, WebExplorer, Navigator, Internet Explorer, IBrowse. As people started to engage in the noble pursuit of creating content for the Web they cross-linked their sources, their friends, their affiliations (remember webrings? here’s a reminder; they’re not quite as dead as you think!), their favourite sites etc. You’d follow links to other pages, then follow their links to others still, and so on in that fashion. If you went round the circles enough times you’d start seeing all those invariably-blue hyperlinks turn purple and know you’d found your way home.

But even after that era, as search engines started to become a reliable and powerful way to navigate the wealth of content on the growing Web, links still dominated our exploration. Following a link from a resource that was linked to by somebody you know carried the weight of a “web of trust”, and you’d quickly come to learn whose links were consistently valuable and on what subjects. They also provided a sense of community and interconnectivity that paralleled the organic, chaotic networks of acquaintances people form out in the real world.

In recent times, that interpersonal connectivity has, for many, been filled by social networks (let’s ignore their failings in this regard for now). But linking to resources “outside” of the big social media silos is hard. These advertisement-funded services work hard to discourage or monetise activity that takes you off their platform, even at the expense of their users. Instagram limits the number of external links per profile; many social networks push for resharing of summaries of content or embedding content from other sources, discouraging engagement with the wider Web; Facebook and Twitter both run external links through a linkwrapper (which sometimes breaks); most large social networks make linking to the profiles of other users of the same social network much easier than to users anywhere else; and so on.

The net result is that Internet users use fewer different websites today than they did 20 years ago, and spend most of their “Web” time in app versions of websites (which often provide a better experience only because site owners strategically make it so to increase their lock-in and data harvesting potential). Truly exploring the Web now requires extra effort, like exercising an underused muscle. And if you begin and end your Web experience on just one to three services, that just feels kind of… sad, to me. Wasted potential.

It sounds like I’m being nostalgic for a less-sophisticated time on the Web (that would certainly be in character!). A time before we’d fully-refined the technology that would come to connect us in an instant to the answers we wanted. But that’s not exactly what I’m pining for. Instead, what I miss is something we lost along the way, on that journey: a Web that was more fun-and-weird, more interpersonal, more diverse. More Geocities, less Facebook; there’s a surprising thing to find myself saying.

Somewhere along the way, we ended up with the Web we asked for, but it wasn’t the Web we wanted.

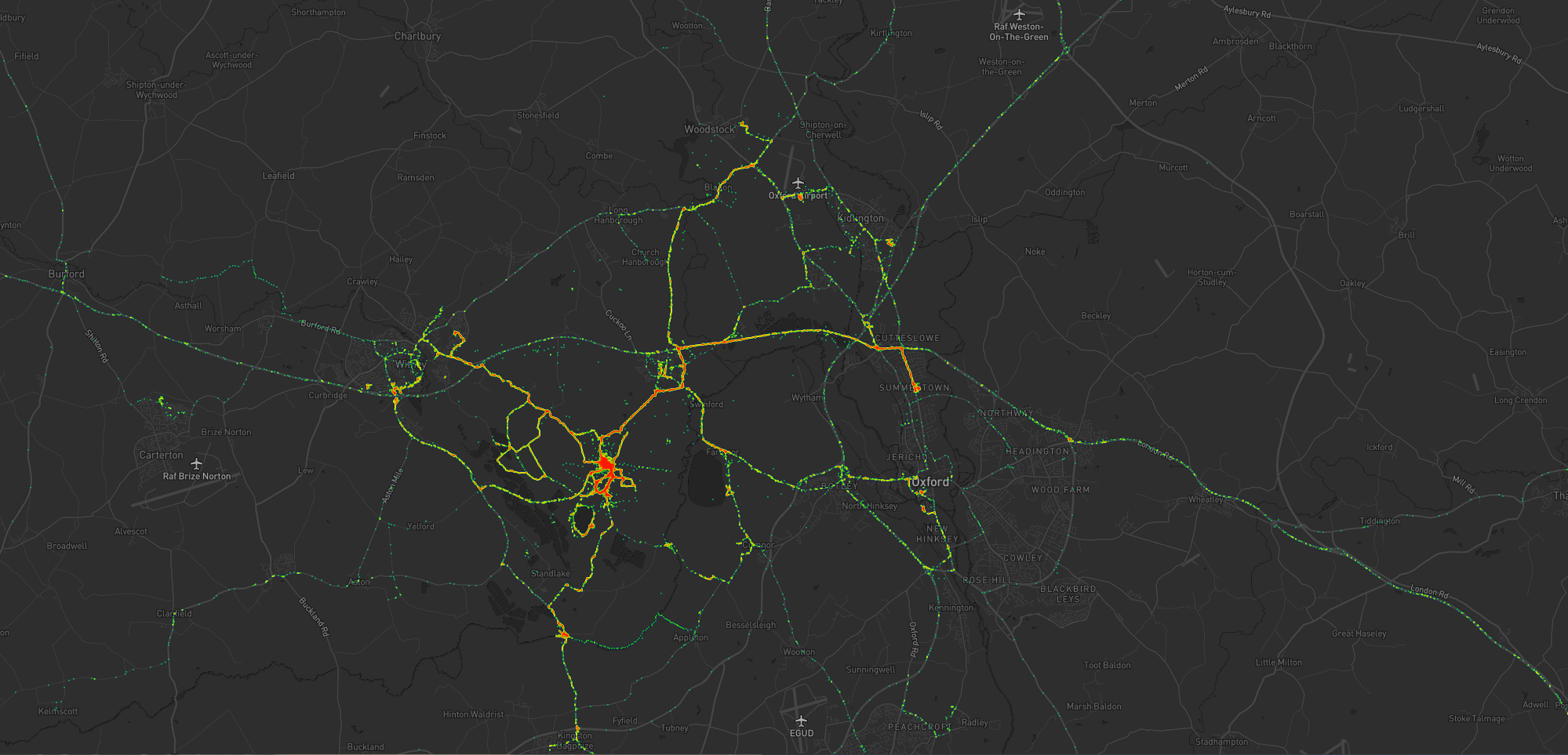

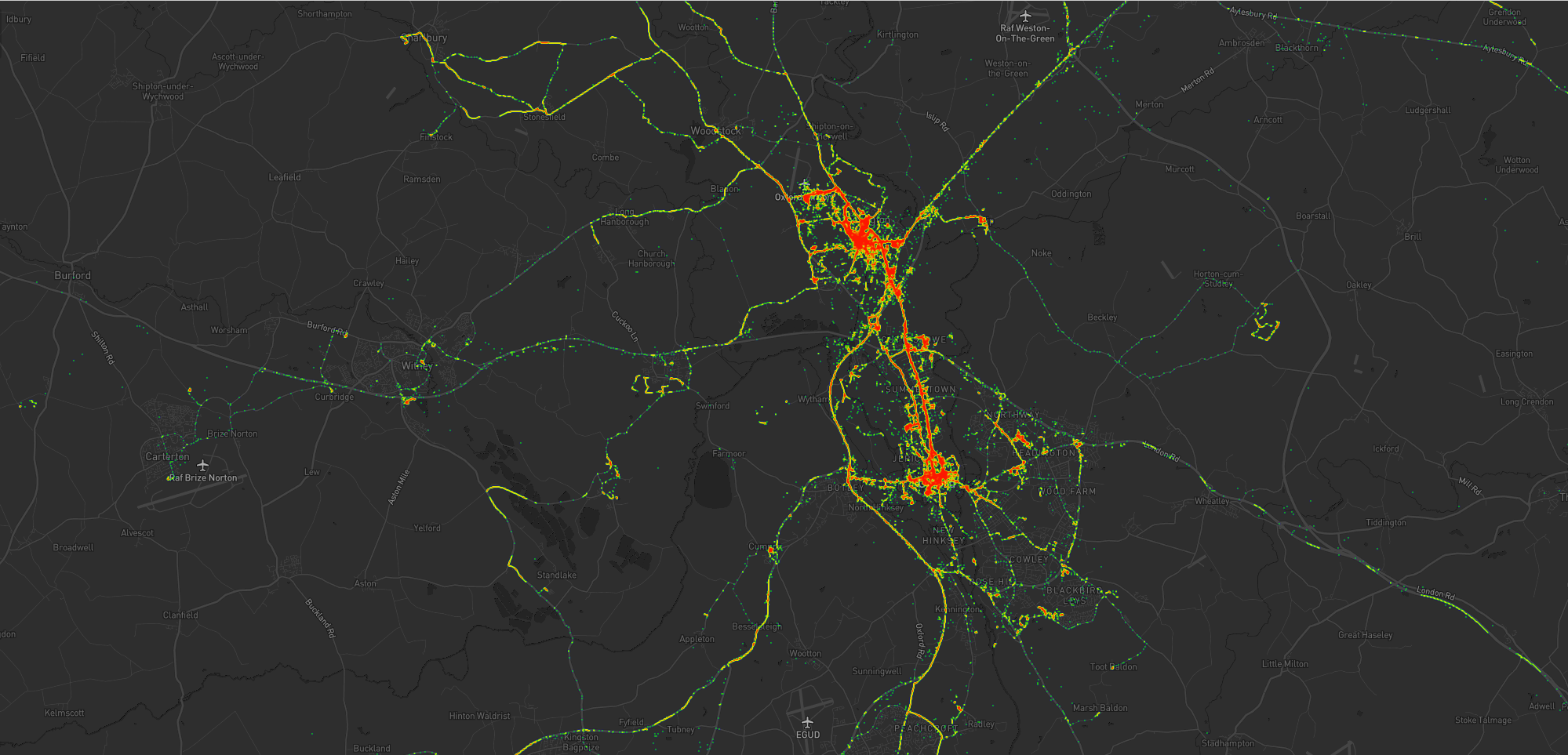

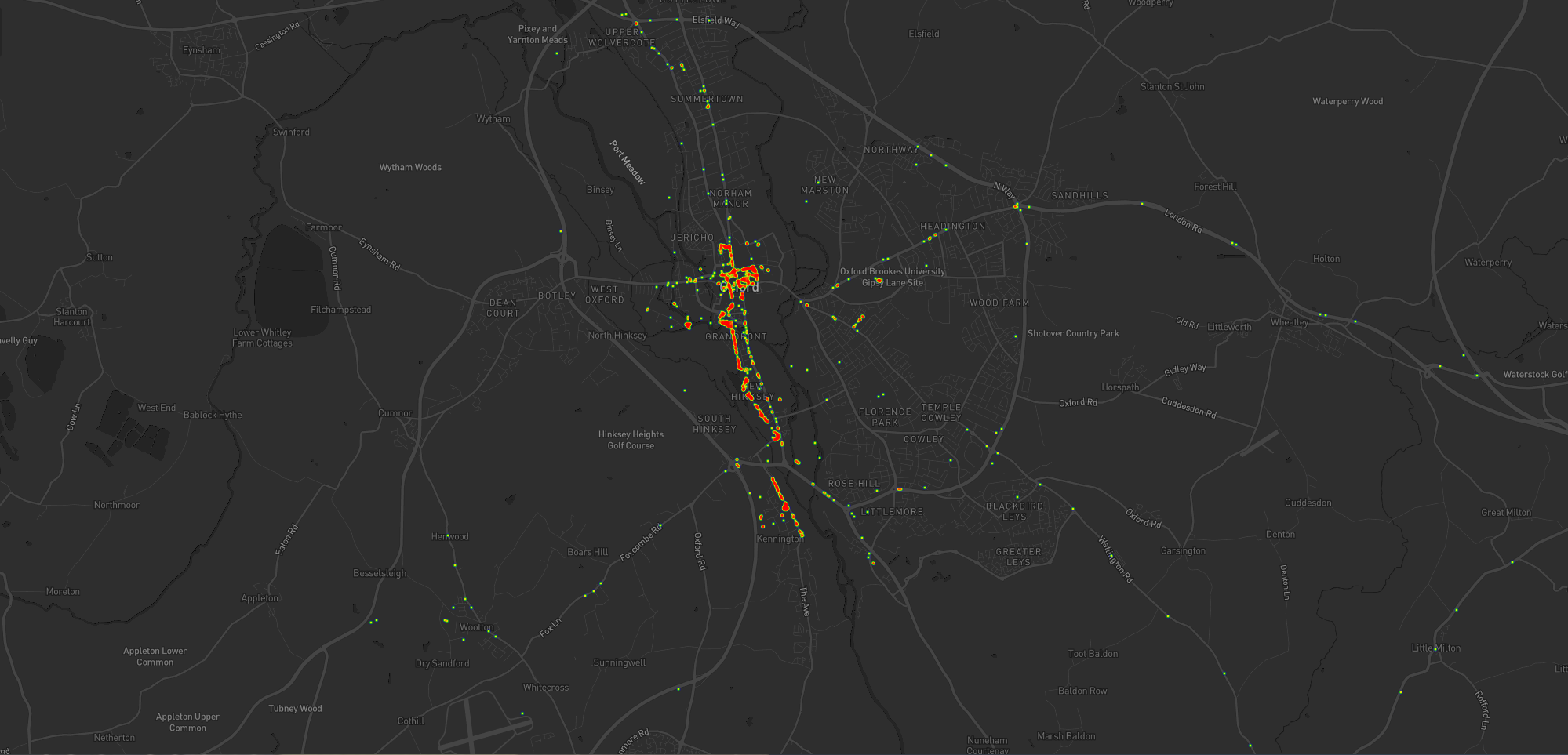

As I mentioned last year, for several years I’ve collected pretty complete historic location data from GPSr devices I carry with me everywhere, which I collate in a personal μlogger server.

Going back further, I’ve got somewhat-spotty data going back a decade, thanks mostly to the fact that I didn’t get around to opting-out of Google’s location tracking until only a few years ago (this data is now also housed in μlogger). More-recently, I now also get tracklogs from my smartwatch, so I’m managing to collate more personal location data than ever before.

Inspired perhaps at least a little by Aaron Parecki, I thought I’d try to do something cool with it.

What you’re looking at is a heatmap showing my location over the last year or so since I moved to The Green. Between the pandemic and switching a few months prior to a job that I do almost-entirely at home there’s not a lot of travel showing, but there’s some. Points of interest include:

Let’s go back to the 7 years prior, when I lived in Kidlington. This paints a different picture:

This heatmap highlights some of the ways in which my life was quite different. For example:

Let’s go back further:

Before 2011, and before we bought our first house, I spent a couple of years living in Kennington, to the South of Oxford. Looking at this heatmap, you’ll see:

I really love maps, and I love the fact that these heatmaps are capable of painting a picture of me and what my life was like in each of these three distinct chapters of my life over the last decade. I also really love that I’m able to collect and use all of the personal data that makes this possible, because it’s also proven useful in answering questions like “How many times did I visit Preston in 2012?”, “Where was this photo taken?”, or “What was the name of that place we had lunch when we got lost during our holiday in Devon?”.

There’s so much value in personal geodata (that’s why unscrupulous companies will try so hard to steal it from you!), but sometimes all you want to do is use it to draw pretty heatmaps. And that’s cool, too.

I have a μlogger instance with the relevant positional data in. I’ve automated my process, but the essence of it if you’d like to try it yourself is as follows:

First, write some SQL to extract all of the position data you need. I round off the latitude and longitude to 5 decimal places to help “cluster” dots for frequency-summing, and I raise the frequency to the power of 3 to help make a clear gradient in my heatmap by making hotspots exponentially-brighter the more popular they are:

    SELECT
      ROUND(latitude, 5) lat,
      ROUND(longitude, 5) lng,
      POWER(COUNT(*), 3) `count`
    FROM positions
    WHERE `time` BETWEEN '2020-06-22' AND '2021-08-22'
    GROUP BY ROUND(latitude, 5), ROUND(longitude, 5)
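If it helps to see what that query is doing without a database to hand, the same round, count, and cube idea can be sketched in plain Ruby. This is only an illustration of the technique: the heat_points name and the sample readings are invented here, and it needs Ruby 2.7 or later for Array#tally.

```ruby
require "json"

# Cluster raw [latitude, longitude] readings by rounding them, count how
# many readings land on each rounded point, then raise that count to a
# power so that popular spots stand out much more strongly.
def heat_points(readings, precision: 5, exponent: 3)
  readings
    .map { |lat, lng| [lat.round(precision), lng.round(precision)] }
    .tally
    .map { |(lat, lng), hits| { lat: lat, lng: lng, count: hits**exponent } }
end

# Made-up sample data: two readings that round to the same point, plus
# one reading somewhere else entirely.
sample = [
  [51.760001, -1.400002],
  [51.760004, -1.400001],
  [51.9, -1.2]
]

puts heat_points(sample).to_json
```

Rounding to five decimal places groups readings that fall within roughly a metre of one another, which is why repeated visits to the same spot pile up into a single hot point rather than a scatter of faint ones.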

This data needs converting to JSON. I was using Ruby’s mysql2 gem to fetch the data, so I only needed a .to_json call to do the conversion – like this:

    require 'json'
    require 'mysql2'

    db = Mysql2::Client.new(
      host: ENV['DB_HOST'],
      username: ENV['DB_USERNAME'],
      password: ENV['DB_PASSWORD'],
      database: ENV['DB_DATABASE']
    )
    db.query(sql).to_a.to_json # sql holds the query above

Approximately following this guide and leveraging my Mapbox subscription for the base map, I then just needed to include leaflet.js, heatmap.js, and leaflet-heatmap.js before writing some JavaScript code like this:

    body.innerHTML = '<div id="map"></div>';
    let map = L.map('map').setView([51.76, -1.40], 10);

    // add the base layer to the map
    L.tileLayer('https://api.mapbox.com/styles/v1/{id}/tiles/{z}/{x}/{y}?access_token={accessToken}', {
      maxZoom: 18,
      id: 'itsdanq/ckslkmiid8q7j17ocziio7t46', // this is the style I defined for my map, using Mapbox
      tileSize: 512,
      zoomOffset: -1,
      accessToken: '...' // put your access token here if you need one!
    }).addTo(map);

    // fetch the heatmap JSON and render the heatmap
    fetch('heat.json').then(r=>r.json()).then(json=>{
      let heatmapLayer = new HeatmapOverlay({
        "radius": parseFloat(document.querySelector('#radius').value),
        "scaleRadius": true,
        "useLocalExtrema": true,
      });
      heatmapLayer.setData({ data: json });
      heatmapLayer.addTo(map);
    });

That’s basically all there is to it!