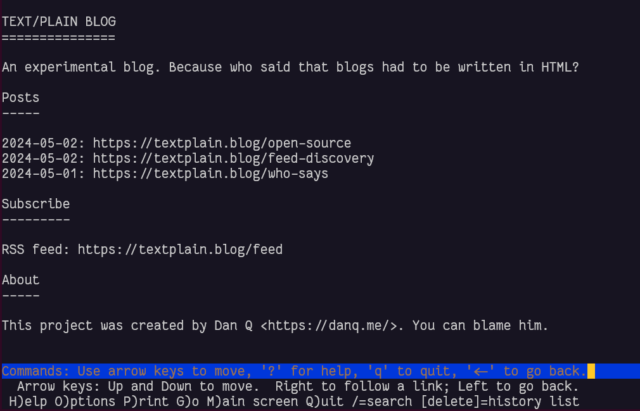

FoundryVTT is a fantastic Web-based environment for tabletop roleplaying adventures,¹ and something I particularly enjoy is the freedom for virtually-unlimited scripting. Following a demonstration to a fellow DM at work last week, I promised to throw together a quick tutorial on scripting simple multi-phase maps using Foundry.²

Why multi-phase maps?

You might use a multi-phase map to:

- Allow the development and expansion of a siege camp outside the fortress where the heroes are holed-up.³

- Rotate through day and night cycles or different times of day, perhaps with different things to interact with in each.⁴

- Gradually flood a sewer with rising water… increasing the range of the monster that dwells within.⁵

- Re-arrange parts of the dungeon when the characters flip certain switches, opening new paths… and closing others.

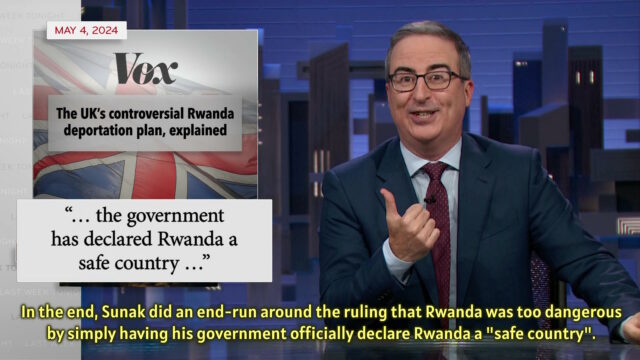

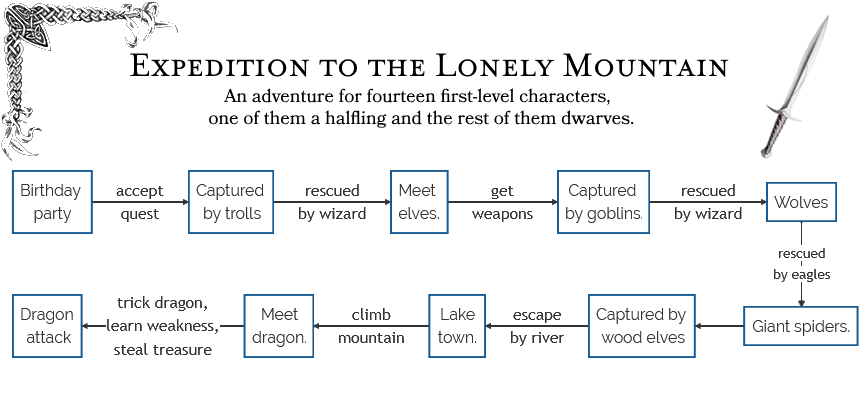

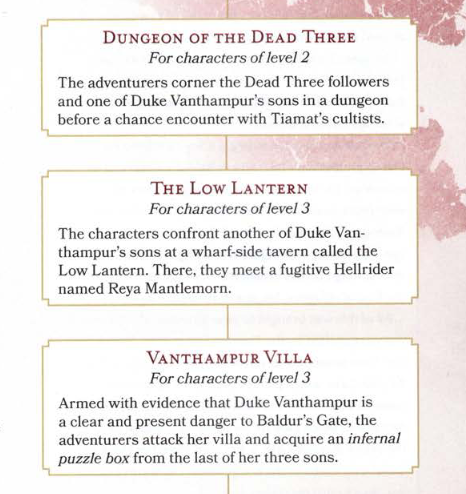

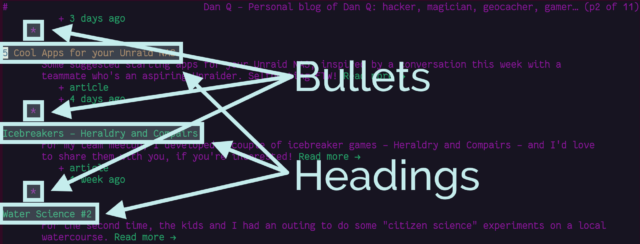

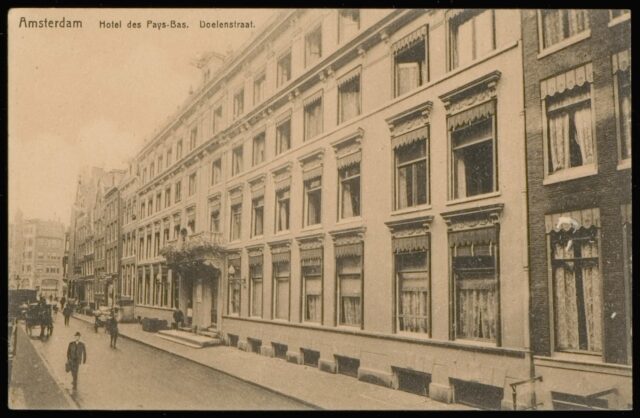

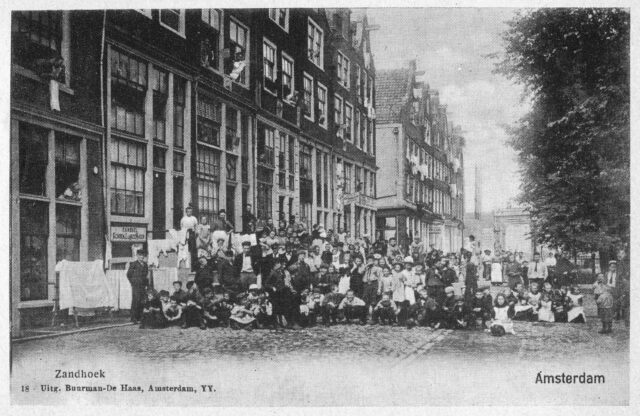

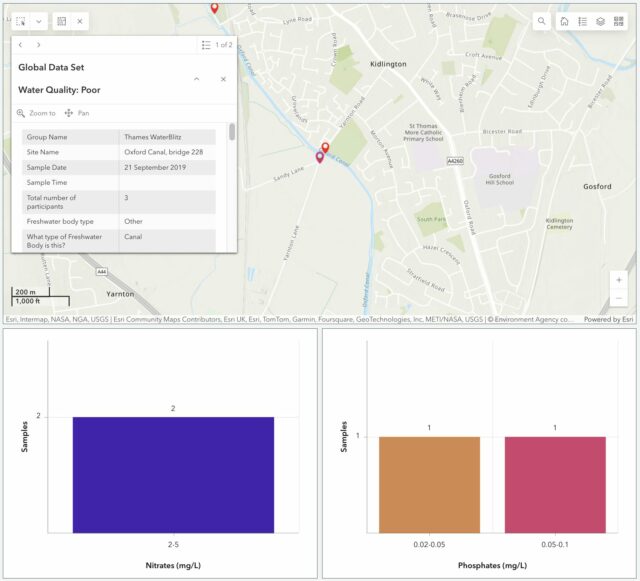

I’ll use the map above to create a simple linear flow, powered by a macro in the hotbar. More-complex scenarios are possible too: combining this approach with a plugin like Monk’s Active Tile Triggers can even make the map appear to change dynamically in response to the movement or actions of player characters!

Setting the scene

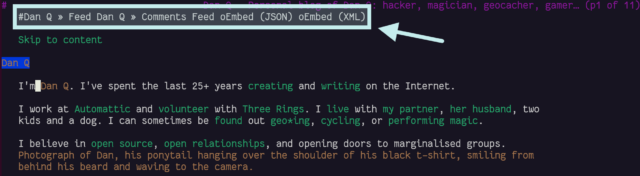

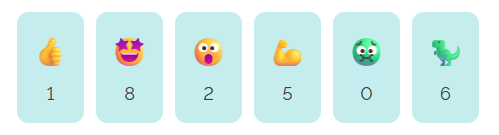

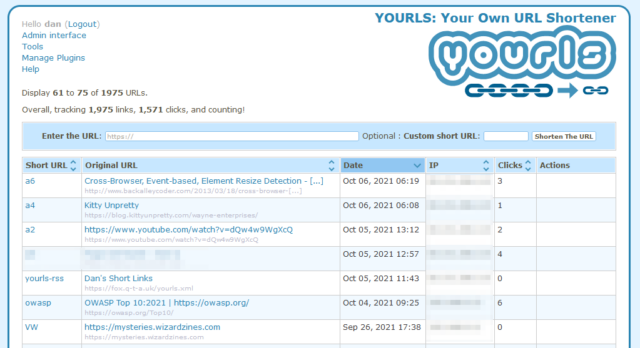

Create a scene, using the final state of the map as the background. Then, in reverse-order, add the previous states as tiles above it.

Make a note of the X-position that your tiles are in when they’re where they’re supposed to be: we’ll “move” the tiles off to the side when they’re hidden, to prevent their ghostly half-hidden forms getting in your way as game master. We’ll also use this X-position to detect which tiles have already been moved/hidden.

Also make note of each tile’s ID, so your script can reference them. It’s easiest to do this as you go along. When you’re ready to write your macro, reverse the list, because we’ll be hiding each tile in the opposite order from the order you placed them.
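If you’d rather not copy the numbers by hand, a small helper along these lines might save some clicking. It’s only a sketch: `summariseTiles` and `suggestHiddenX` are hypothetical names of my own, and it assumes a recent Foundry version in which each placed tile exposes its X-position as `tile.document.x` and the scene’s tiles are available as `canvas.tiles.placeables`.

```javascript
// Hypothetical helper: reduce tile-like objects to { id, x } pairs.
// Assumes each tile has an `id` and a `document.x` (true of recent Foundry versions).
function summariseTiles(tiles) {
  return tiles.map(tile => ({ id: tile.id, x: tile.document.x }));
}

// Hypothetical helper: off-map X = on-map X + map width + a small margin.
function suggestHiddenX(defaultX, mapWidthPx, marginPx = 50) {
  return defaultX + mapWidthPx + marginPx;
}

// Inside Foundry, `canvas` is a global, so this prints a table of tile IDs and
// X-positions to the browser console. Anywhere else, the branch is skipped.
if (typeof canvas !== "undefined") {
  console.table(summariseTiles(canvas.tiles.placeables));
}
```

With made-up numbers (a 2500-pixel-wide map and a 75-pixel margin, not necessarily the dimensions of my map), `suggestHiddenX(250, 2500, 75)` gives `2825`. The table isn’t guaranteed to come out in the order you placed the tiles, so you’ll still want to check the order yourself.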

Writing the script

Next, create a new script macro, e.g. by clicking an empty slot in the macro bar. When you activate this script, the map will move forward one phase (or, if it’s at the end, it’ll reset).

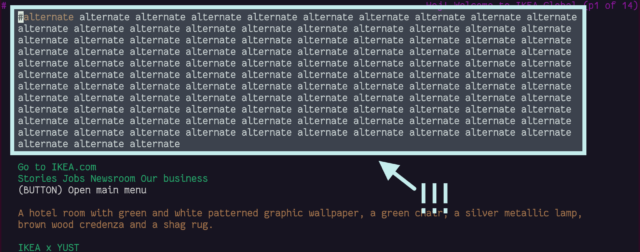

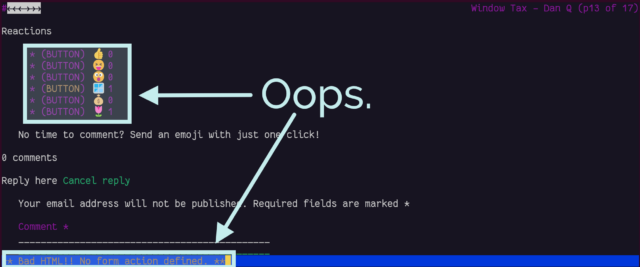

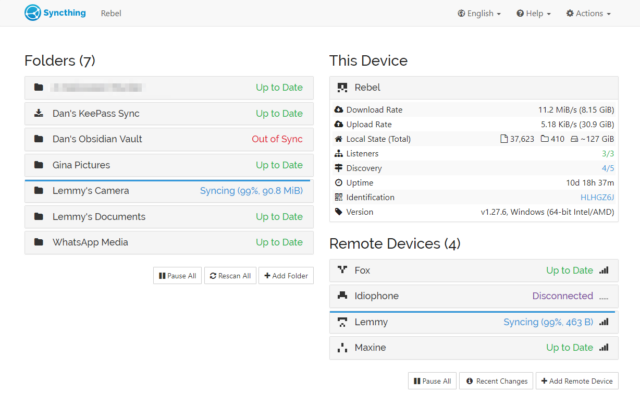

Here’s the code you’ll need – the 👈 emoji identifies the places you’ll need to modify the code, specifically:

- `const revealed_tiles_default_x = 250` should refer to the X-position of your tiles when they’re in the correct position.

- `const revealed_tiles_modified_x = 2825` should refer to the X-position they’ll appear at “off to the right” of your scene. To determine this, just move one tile right until it’s sufficiently out of the way of the battlemap and then check what its X-position is! Or just take the default X-position, add the width of your map in pixels, and then add a tiny bit more.

- `const revealed_tiles = [ ... ]` is a list of the tile IDs of each tile that will be hidden, in turn. In my example there are five of them (the sixth and final image being the scene background).

```javascript
const revealed_tiles_default_x  =  250; // 👈 X-position of tiles when displayed
const revealed_tiles_modified_x = 2825; // 👈 X-position of tiles when not displayed
const revealed_tiles = [
  '2xG7S8Yqk4x1eAdr', // 👈 list of tile IDs in order that they should be hidden
  'SjNQDBImHvrjAHWX', //    (top to bottom)
  'tuYg4FvLgIla1l21',
  'auX4sj64PWmkAteR',
  'yAL4YP0I4Cv4Sevt',
].map(t => canvas.tiles.get(t));

/*************************************************************************************************/

// Get the topmost tile that is still visible:
const next_revealed_tile_to_move = revealed_tiles.find(t =>
  t.position.x == revealed_tiles_default_x
);

// If there are NO still-visible tiles, we must need to reset the map:
if ( ! next_revealed_tile_to_move ) {
  // To reset the map, we go through each tile and put it back where it belongs -
  // (`const` keeps the loop variable from leaking into the global scope)
  for (const tile of revealed_tiles) {
    canvas.scene.updateEmbeddedDocuments("Tile", [ {
      _id: tile.id,
      x: revealed_tiles_default_x,
      hidden: false
    } ]);
  }
} else {
  // Otherwise, hide the topmost visible tile (and move it off to the side to help the GM) -
  canvas.scene.updateEmbeddedDocuments("Tile", [ {
    _id: next_revealed_tile_to_move.id,
    x: revealed_tiles_modified_x,
    hidden: true
  } ]);
}
```

I hope that the rest of the code is moderately self-explanatory for anybody with a little JavaScript experience, but if you’re just following this kind of simple, linear case then you don’t need to modify it anyway. But to summarise, what it does is:

- Finds the first listed tile that isn’t yet hidden (by comparing its X-position to the pre-set X-position).

- If there aren’t any such tiles, we must have hidden them all already, so perform a reset: iterate through each tile, set its X-position back to the pre-set X-position, and un-hide it.

- Otherwise, move the first not-hidden tile to the alternative X-position and hide it.
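If you want to experiment with that logic away from a live scene, the three steps above can be pulled out into a plain function that only decides *what* to update. This is a sketch rather than part of my macro: `planPhaseUpdates` is a hypothetical name, and it works on simple `{ id, x }` objects instead of real Foundry tiles.

```javascript
// Hypothetical pure function: work out the tile updates for one "advance" step.
// `tiles` is an ordered list of { id, x } objects, topmost tile first.
function planPhaseUpdates(tiles, defaultX, hiddenX) {
  // Step 1: find the first tile still sitting at its on-map X-position.
  const next = tiles.find(t => t.x === defaultX);

  if (!next) {
    // Step 2: nothing left to hide, so reset every tile to its on-map position.
    return tiles.map(t => ({ _id: t.id, x: defaultX, hidden: false }));
  }

  // Step 3: hide just that one tile and park it off to the side.
  return [{ _id: next.id, x: hiddenX, hidden: true }];
}

// Stand-in data (not real tile IDs): the first tile is already hidden.
const demoTiles = [
  { id: "first", x: 2825 },
  { id: "second", x: 250 },
];
console.log(planPhaseUpdates(demoTiles, 250, 2825));
```

Because the function returns an array of update objects, the whole result could be handed to a single `canvas.scene.updateEmbeddedDocuments("Tile", updates)` call, which would also batch the reset into one request rather than one per tile.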

I hope you have fun with scripting your own multi-phase maps. Just don’t get so caught-up in your awesome scenes that you fail to give the players any agency!

Footnotes

1 Also, it’s on sale at 20% off this week to celebrate its fourth anniversary. Just sayin’.

2 I can neither confirm nor deny that a multi-phase map might be in the near future of The Levellers’ adventure…

3 AtraxianBear has a great series of maps inspired by the 1683 siege of Vienna by the Ottomans that could be a great starting point for a “gradually advancing siege” map.

4 If you’re using Dungeon Alchemist as part of your mapmaking process you can just export orthographic or perspective outputs with different times of day and your party’s regular inn can be appropriately lit for any time of day, even if the party decides to just “wait at this table until nightfall”.

5 Balatro made a stunning map with rising water as a key feature: there’s a preview available.