I’ve a notion that during 2025 I might put some effort into tidying up the tagging taxonomy on my blog. There are a few tags that are duplicates (e.g. ai and artificial intelligence) or that exhibit significant overlap (e.g. dog and dogs), or that were clearly created when I speculated I’d write more on the topic than I eventually did (e.g. homa night, escalators1, or nintendo) or that are just confusing and weird (e.g. not that bacon sandwich picture).

Retro-tagging with AI

One part of such an effort might be to go back and retroactively add tags where they ought to be. For about the first decade of my blog, i.e. prior to around 2008, I rarely used tags to categorise posts. And as more tags have been added, it’s apparent that many old posts, even after that point, might be lacking tags that perhaps they ought to have2.

I remain sceptical about many uses of (what we’re today calling) “AI”, but one thing at which LLMs seem to do moderately well is summarisation3. And isn’t tagging and categorisation only a stone’s throw away from summarisation? So maybe, I figured, AI could help me to tidy up my tagging. Here’s what I was thinking:

- Tell an LLM what tags I use, along with an explanation of some of the quirkier ones.

- Train the LLM with examples of recent posts and lists of the tags that were (correctly, one assumes) applied.

- Give it the content of blog posts and ask what tags should be applied to it from that list.

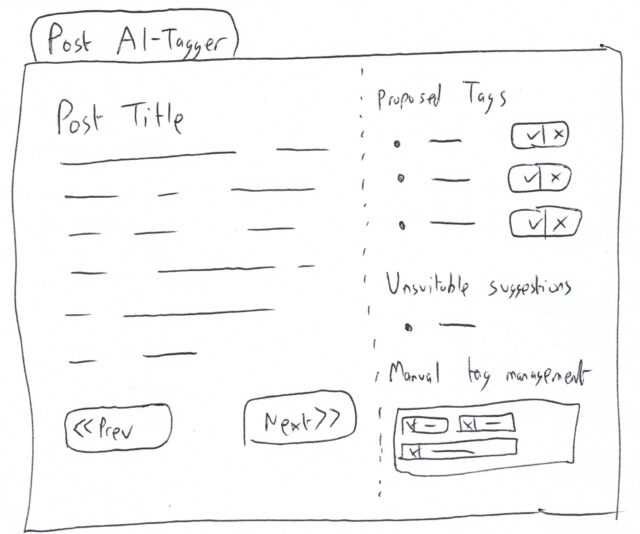

- Script the extraction of the content from old posts with few tags and run it through the above, presenting to me a report of what tags are recommended (which could then be coupled with a basic UI that showed me the post and suggested tags, and “approve”/“reject” buttons or similar).

Extracting training data

First, I needed to extract and curate my tag list, for which I used the following SQL4:

    SELECT
      COUNT(wp_term_relationships.object_id) num,
      wp_terms.slug
    FROM wp_term_taxonomy
    LEFT JOIN wp_terms
      ON wp_term_taxonomy.term_id = wp_terms.term_id
    LEFT JOIN wp_term_relationships
      ON wp_term_taxonomy.term_taxonomy_id = wp_term_relationships.term_taxonomy_id
    WHERE wp_term_taxonomy.taxonomy = 'post_tag'
      AND wp_terms.slug NOT IN (
        -- filter out e.g. 'rss-club', 'published-on-gemini', 'dancast' etc.
        -- these are tags that have internal meaning only or are already accurately applied
        'long', 'list', 'of', 'tags', 'the', 'ai', 'should', 'never', 'apply'
      )
    GROUP BY wp_terms.slug
    HAVING num > 2 -- filter down to tags I actually routinely use
    ORDER BY wp_terms.slug

Some of my tags have internal meaning only: for example, I tag posts published on gemini if they’re to appear on gemini://danq.me/ and dancast if they embed an episode of my podcast. I filtered these out because I never want the AI to suggest applying them.

I took my output and dumped it into a list, and skimmed through to add some clarity to some tags whose purpose might be considered ambiguous, writing my explanation of each in parentheses afterwards. Here’s a part of the list, for example:

- …

- puzzles

- python

- q (explicitly about my unusual surname, which is just the letter Q)

- qparty

- quake

- quakers

- quantum-physics

- quotes

- racing

- racism

- radio

- raid (about RAID storage devices, as might be used in a NAS computer)

- rails (Ruby on Rails)

- rain

- rambling

- …
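A list in this shape is easy to turn back into structured data. Here’s a minimal Python sketch (an illustration, not the tooling used for this post) that splits each line into a tag and its optional parenthesised explanation. The function name `parse_tag_list` and the exact line format are assumptions based on the excerpt above.

```python
import re

# One list entry: "- tag" or "- tag (explanation)". This line format is an
# assumption based on the excerpt above.
LINE_PATTERN = re.compile(r"^-\s*(?P<tag>[^()]+?)\s*(?:\((?P<note>.*)\))?\s*$")


def parse_tag_list(text):
    """Return a dict mapping each tag to its explanation (or None)."""
    tags = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line == "- …":  # skip blanks and the elision marker
            continue
        match = LINE_PATTERN.match(line)
        if match:
            tags[match.group("tag")] = match.group("note")
    return tags


sample = """
- python
- q (explicitly about my unusual surname, which is just the letter Q)
- rails (Ruby on Rails)
- rain
"""
tags = parse_tag_list(sample)
```

Keeping the explanations separate from the tag names means the same data can feed both the prompt and any later check of the model’s output.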

Prompt derivation

I used that list as the basis for the system message of my initial prompt:

    Suggest topical tags from a predefined list that appropriately apply to the content of a given blog post.

    # Steps

    1. **Read the Blog Post**: Carefully read through the provided content of the blog post to identify its main themes and topics.
    2. **Analyse Key Aspects**: Identify key topics, themes, or subjects discussed in the blog post.
    3. **Match with Tags**: Compare these identified topics against the list of available tags.
    4. **Select Appropriate Tags**: Choose tags that best represent the main topics and themes of the blog post.

    # Output Format

    Provide a list of suggested tags. Each tag should be presented as a single string. Multiple tags should be separated by commas.

    # Allowed Tags

    Tags that can be suggested are as follows. Text in parentheses are not part of the tag but are a description of the kinds of content to which the tag ought to be applied:

    - aberdyfi
    - aberystwyth
    - ...
    - youtube
    - zoos

    # Examples

    **Input:**
    The rapid advancement of AI technology has had a significant impact on my industry, even on the ways in which I write my blog posts. This post, for example, used AI to help with tagging.

    **Output:**
    ai, technology, blogging, meta, work

    ...(other examples)...

    # Notes

    - Ensure that all suggested tags are relevant to the key themes of the blog post.
    - Tags should be selected based on their contextual relevance and not just keyword matching.

This system prompt is somewhat truncated, but you get the idea.
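Since the allowed-tags section is just the curated list again, it could be generated rather than pasted in by hand. The following Python sketch shows one way that might work; the `{{ALLOWED_TAGS}}` placeholder and both function names are hypothetical, and the template is a heavily shortened stand-in for the real prompt.

```python
def render_allowed_tags(tags):
    """Render a {tag: explanation-or-None} mapping as prompt bullet lines."""
    lines = []
    for tag in sorted(tags):
        note = tags[tag]
        lines.append(f"- {tag} ({note})" if note else f"- {tag}")
    return "\n".join(lines)


def build_system_prompt(template, tags):
    """Substitute the rendered tag list into the template's placeholder."""
    return template.replace("{{ALLOWED_TAGS}}", render_allowed_tags(tags))


# Hypothetical, shortened template: only the section being filled in is shown.
template = "# Allowed Tags\n\n{{ALLOWED_TAGS}}\n\n# Notes\n\n- ..."
prompt = build_system_prompt(template, {"rain": None, "rails": "Ruby on Rails"})
```

Generating the section this way would keep the prompt in step with the tag list whenever a clarifying parenthetical is added.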

Now I was ready to give it a go with some real data. As an initial simple and short (and therefore also computationally cheap) experiment, I tried feeding it a note I wrote last week about the interrobang’s place in the Spanish language, and in Unicode.

That post already has the following tags (but this wasn’t disclosed to the AI in its training set; it had to work from scratch): children, language, languages (a bit of a redundancy there!), spain, and unicode.

Testing it out

Let’s see what the AI suggests:

    curl https://api.openai.com/v1/chat/completions \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer $OPENAI_TOKEN" \
      -d '{
        "model": "gpt-4o-mini",
        "messages": [
          {
            "role": "system",
            "content": [
              { "type": "text", "text": "[PROMPT AS DESCRIBED ABOVE]" }
            ]
          },
          {
            "role": "user",
            "content": [
              { "type": "text", "text": "My 8-year-old asked me \"In Spanish, I need to use an upside-down interrobang at the start of the sentence‽\" I assume the answer is yes A little while later, I thought to check whether Unicode defines a codepoint for an inverted interrobang. Yup: ‽ = U+203D, ⸘ = U+2E18. Nice. And yet we dont have codepoints to differentiate between single-bar and double-bar \"cifrão\" dollar signs..." }
            ]
          }
        ],
        "response_format": { "type": "text" },
        "temperature": 1,
        "max_completion_tokens": 2048,
        "top_p": 1,
        "frequency_penalty": 0,
        "presence_penalty": 0
      }'

Calling the API with curl meant I quickly ran up against some Bash escaping issues, but set +H and a little massaging of the blog post content seemed to fix it.
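One way to sidestep that kind of escaping trouble is to let a JSON library build the request body instead of hand-quoting it. This is a sketch in Python rather than the Bash used here, and it only builds the body; it doesn’t send it. The function name `build_payload` and the sample post text are made up for illustration, while the field names mirror the curl command above.

```python
import json


def build_payload(system_prompt, post_text, model="gpt-4o-mini"):
    """Build the chat-completions request body as a JSON string."""
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": [{"type": "text", "text": system_prompt}]},
            {"role": "user", "content": [{"type": "text", "text": post_text}]},
        ],
        "response_format": {"type": "text"},
        "temperature": 1,
        "max_completion_tokens": 2048,
    }
    # ensure_ascii=False keeps characters such as ‽ readable in the output.
    return json.dumps(body, ensure_ascii=False)


# Made-up sample text containing the characters that tend to upset a shell.
post = 'She asked "really‽" and I don\'t know!'
payload = build_payload("[PROMPT AS DESCRIBED ABOVE]", post)
```

The resulting string could be written to a file and passed to curl with `-d @payload.json`, so that quotes, apostrophes and exclamation marks in the post never pass through the shell.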

GPT-4o-mini

When I ran this query against the gpt-4o-mini model, I got back: unicode, language, education, children, symbols.

That’s… not ideal. I agree with the tags unicode, language, and children, but this isn’t really about education. If I tagged everything vaguely educational on my blog with education, it’d be an even-more-predominant tag than geocaching is! I reserve that tag for things that relate specifically to formal education: but that’s possibly something I could correct for with a parenthetical in my approved tags list.

symbols, though, is way out. Sure, the post could be argued to be something to do with symbols… but symbols isn’t on the approved tag list in the first place! This is a clear hallucination, and that’s pretty suboptimal!

Maybe a beefier model will fare better…

GPT-4o

I switched gpt-4o-mini for gpt-4o in the command above and ran it again. It didn’t take noticeably longer to run, which was pleasing.

The model returned: children, language, unicode, typography. That’s a big improvement. It no longer suggests education, which was off-base, nor symbols, which was a hallucination. But it did suggest typography, which is a… not-unreasonable suggestion.

Neither model suggested spain, and strictly-speaking they were probably right not to. My post isn’t about Spain so much as it’s about Spanish. I don’t have a specific tag for the latter, so I’ve subbed in the former to “connect” the post to ones which are about Spain, though that might not be ideal. Either way: if this is how I’m using the tag then I probably ought to clarify as such in my tag list, or else add a note to the system prompt to explain that I use place names as the tags for posts about the language of those places. (Or else maybe I need to be more-consistent in my tagging.)

I experimented with a handful of other well-tagged posts and was moderately-satisfied with the results. Time for a more-challenging trial.
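For posts that are already well-tagged, the comparison boils down to a few set operations. Here’s a minimal Python sketch using the interrobang note as its example; the function name `compare_tags` and the result keys are hypothetical.

```python
def compare_tags(existing, suggested):
    """Split tags into those both agree on and those unique to each side."""
    existing, suggested = set(existing), set(suggested)
    return {
        "agreed": sorted(existing & suggested),
        "only_existing": sorted(existing - suggested),
        "only_suggested": sorted(suggested - existing),
    }


# The interrobang note: the post's existing tags versus gpt-4o's suggestions.
result = compare_tags(
    existing=["children", "language", "languages", "spain", "unicode"],
    suggested=["children", "language", "unicode", "typography"],
)
```

Here `only_existing` comes out as languages and spain, and `only_suggested` as typography, which matches the discussion above.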

This time, with feeling…

Next, I decided to run the code against a few blog posts that are in need of tags. At this point, I wasn’t quite ready to implement a UI, so I just adapted my little hacky Bash script and copy-pasted HTML-stripped post contents directly into it.
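The HTML-stripping step is the sort of thing that could be scripted rather than done by hand. A rough sketch using only Python’s standard library follows; it’s an assumption about how one might do it, not a description of what was actually used, and the class and function names are invented. It keeps all text nodes, so the contents of any script or style elements would come through too.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect the text nodes of an HTML fragment, ignoring the tags."""

    def __init__(self):
        super().__init__()  # convert_charrefs defaults to True, so &amp; becomes &
        self.chunks = []

    def handle_data(self, data):
        self.chunks.append(data)


def strip_html(html):
    """Return the text of an HTML fragment with runs of whitespace collapsed."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()  # flush any text still buffered by the parser
    return " ".join("".join(parser.chunks).split())


text = strip_html("<p>Geeky <strong>winnage</strong> &amp; Bluetooth!</p>\n<p>More soon.</p>")
```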

I ran against three old posts:

Hospitals (June 2006)

In this post, I shared that my grandmother and my coworker had (independently) been taken into hospital. It had no tags whatsoever.

The AI suggested the tags hospital, family, injury, work, weddings, pub, humour. Which, at a glance, is probably a superset of the tags that I’d have considered, but there’s a clear logic to them all.

It clearly picked out weddings based on a throwaway comment I made about a cousin’s wedding, so I disagree with that one: the post isn’t strictly about weddings just because it mentions one.

pub could go either way. It turns out my coworker’s injury occurred at or after a trip to the pub the previous night, and so its relevance is somewhat unknowable from this post in isolation. I think that’s a reasonable suggestion, and a great example of why I’d want any such auto-tagging system to be a human assistant (suggesting candidate tags) and not a fully-automated system. Interesting!

Finally, you might think of humour as being a little bit sarcastic, or maybe overly-laden with schadenfreude. But the blog post explicitly states that my coworker “carefully avoided saying how he’d managed to hurt himself, which implies that it’s something particularly stupid or embarrassing”, before encouraging my friends to speculate on it.

However, it turns out that humour isn’t one of my existing tags at all! Boo, hallucinating AI!

I ended up applying all of the AI’s suggestions except weddings and humour. I also applied smartdata, because that’s where I worked (the AI couldn’t have been expected to guess that without context, though!).

Catch-Up: Concerts (June 2005)

This post talked about Ash’s and my travels around the UK to see REM and Green Day in concert5 and to the National Science Museum in London where I discovered that Ash was prejudiced towards… carrot cake.

The AI suggested: concerts, travel, music, preston, london, science museum, blogging.

Those all seemed pretty good at a first glance. Personally, I’d forgotten that we swung by Preston during that particular grand tour until the AI suggested the tag, and then I had to look back at the post more-carefully to double-check! blogging initially seemed like a stretch given that I was only blogging about not having blogged much, but on reflection I think I agree with the robot on this one, because I did explicitly link to a 2002 page that fell off the Internet only a few years ago about the pointlessness of blogging. So I think it counts.

science museum is a big fail though. I don’t use that tag, but I do use the tag museum. So close, but not quite there, AI!

I applied all of its suggestions, after switching museum in place of science museum.

Geeky Winnage With Bluetooth (September 2004)

I wrote this blog post in celebration of having managed to hack together some stuff to help me remote-control my PC from my phone via Bluetooth, which back then used to be a challenge, in the hope that this would streamline pausing, playing, etc. at pizza-distribution-time at Troma Night, a weekly film night I hosted back then.

It already had the tag technology, which it inherited from a pre-tagging evolution of my blog which used something akin to categories (of which only one could be assigned to a post). In addition to suggesting this, the AI also picked out the following options: bluetooth, geeky, mobile, troma night, dvd, technology, and software.

The big failure here was dvd, which isn’t remotely one of my tags (and probably wouldn’t apply here if it were: this post isn’t about DVDs; it barely even mentions them). Possibly some prompt engineering is required to help ensure that the AI doesn’t make a habit of this “include one tag not from the approved list, every time” trend.

Apart from that it’s a pretty solid list. Annoyingly the AI suggested mobile, which isn’t an approved tag, instead of mobiles, which is. That’s probably a tokenisation fault, but it’s still annoying and a reminder of why even a semi-automated “human-checked” system would need a safety-check to ensure that no absent tags are allowed through to the final stage of approval.
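Such a safety-check needn’t be complicated. The Python sketch below splits the model’s comma-separated response into tags that are on the approved list and tags that aren’t, and uses the standard library’s `difflib` to propose the closest approved tag for each reject. The `allowed` list is a hypothetical excerpt rather than the real tag list, and `check_suggestions` is an invented name.

```python
import difflib


def check_suggestions(response_text, allowed_tags):
    """Split a comma-separated model response into approved and rejected tags.

    Each rejected tag is mapped to the closest allowed tag (or None), so a
    human reviewer can decide whether the near miss is what the model meant.
    """
    allowed = set(allowed_tags)
    approved, rejected = [], {}
    for raw in response_text.split(","):
        tag = raw.strip().lower()
        if not tag:
            continue
        if tag in allowed:
            approved.append(tag)
        else:
            close = difflib.get_close_matches(tag, sorted(allowed), n=1)
            rejected[tag] = close[0] if close else None
    return approved, rejected


# Hypothetical excerpt of the approved list; the response is the one quoted above.
allowed = ["bluetooth", "geeky", "mobiles", "software", "technology", "troma night"]
approved, rejected = check_suggestions(
    "bluetooth, geeky, mobile, troma night, dvd, technology, software", allowed
)
```

With this input, mobile is rejected with mobiles offered as the likely intended tag, while dvd is rejected with no alternative. The near-match is only ever a hint for the human reviewer, not an automatic substitution.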

This post!

As a bonus experiment, I tried running my code against a version of this post, but with the information about the AI’s own prompt and the examples removed (to reduce the risk of confusion). It came up with: ai, wordpress, blogging, tags, technology, automation.

All reasonable-sounding choices, and among those I’d made myself… except for tags and automation which, yet again, aren’t among tags that I use. Unless this tendency to hallucinate can be reined-in, I’m guessing that this tool’s going to continue to have some challenges when used on longer posts like this one.

Conclusion and next steps

The bottom line is: yes, this is a job that an AI can assist with, but no, it’s not one that it can do without supervision. The laser-focus with which gpt-4o was able to pick out taggable concepts, faster than I’d have been able to do for the same quantity of text, shows that there’s potential here, but it’s not yet proven itself enough of a time-saver to justify me writing a fluffy UI for it.

However, I might expand on the command-line tools I’ve been using in order to produce a non-interactive list of tagging suggestions, and use that to help inform my work as I tidy up the tags throughout my blog.
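A non-interactive report along those lines could be as simple as one line per post, listing only the suggestions that aren’t already applied. This Python sketch assumes each post is a dict with hypothetical `title`, `existing` and `suggested` keys; the second entry is a made-up post included to show the empty case.

```python
def format_report(posts):
    """Render one plain-text line per post listing tags suggested but not yet applied."""
    lines = []
    for post in posts:
        new_tags = [t for t in post["suggested"] if t not in post["existing"]]
        if new_tags:
            lines.append(f"{post['title']}: consider adding {', '.join(new_tags)}")
        else:
            lines.append(f"{post['title']}: no new suggestions")
    return "\n".join(lines)


report = format_report([
    {"title": "Geeky Winnage With Bluetooth", "existing": ["technology"],
     "suggested": ["bluetooth", "geeky", "technology", "software"]},
    {"title": "Example post", "existing": ["rain"], "suggested": ["rain"]},
])
```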

You still won’t see any “AI-authored” content on this site (except where it’s for the purpose of talking about AI-generated content, and it’ll always be clearly labelled), and I can’t see that changing any time soon. But I’ll admit that there might be some value in AI-assisted curation and administration, so long as there’s an informed human in the loop at all times.

Footnotes

1 Based on my tagging, I’ve apparently only written about escalators once, while playing Pub Jenga at Robin’s 21st birthday party. I can’t imagine why I thought it deserved a tag.

2 There are, of course, various other people trying similar approaches to this and similar problems. I might have tried one of them, were it not for the fact that I’m not quite as interested in solving the problem as I am in understanding how one might use an AI to solve the problem. It’s similar to how I don’t enjoy doing puzzles like e.g. sudoku as much as I enjoy writing software that optimises for solving such puzzles. See also, for example, how I beat my children at Mastermind or what the hardest word in Hangman is or my various attempts to avoid doing online jigsaws.

3 Let’s ignore for a moment the farce that was Apple’s attempt to summarise news headlines, shall we?

4 Essentially the same SQL, plus WordClouds.com, was used to produce the word cloud graphic!

5 Two separate concerts, but can you imagine‽ 🤣
