Multilingual emails

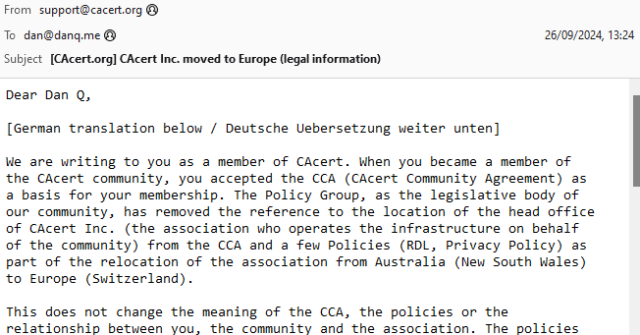

Back when I was a student in Aberystwyth, I used to receive a lot of bilingual emails from the University and its departments1. I was reminded of this when I received an email this week from CACert, delivered in both English and German.

Wouldn’t it be great if there were some kind of standard for multilingual emails? Your email client or device would maintain an “order of preference” of the languages that you speak, and you’d automatically be shown the content in those languages, starting with the one you’re most fluent in and working down.

The Web’s already got this functionality2, and people have been sending multilingual emails for much longer than they’ve been developing multilingual websites3!

Enter RFC8255!

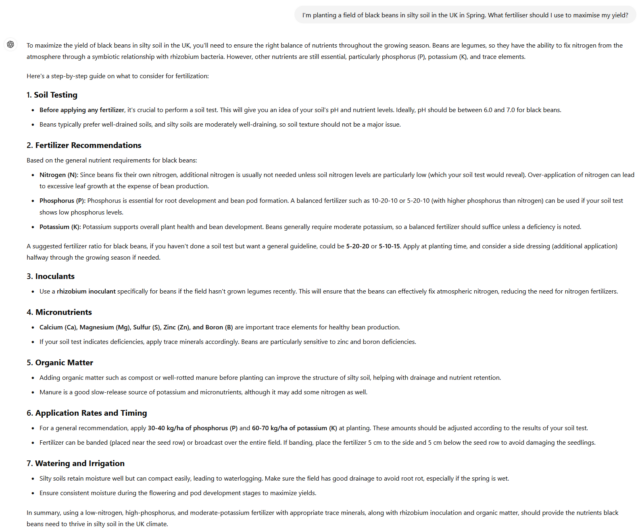

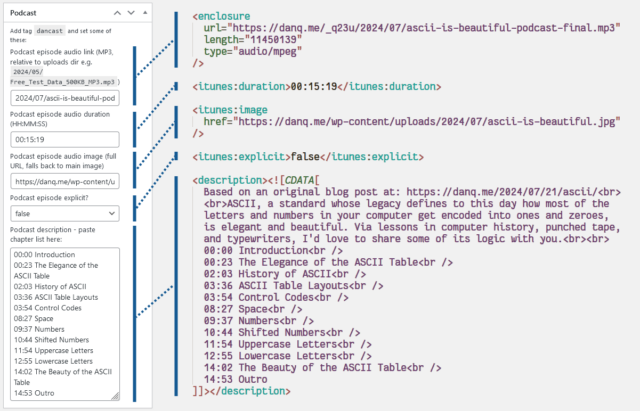

It turns out that this is a (theoretically) solved problem. RFC8255 defines a mechanism for breaking an email into multiple different languages in a way that a machine can understand and that ought to be backwards-compatible (so people whose email software doesn’t support it yet can still “get by”). Here’s how it works:

- You add a Content-Type: multipart/multilingual header with a defined boundary marker, just like you would for any other email with multiple “parts” (e.g. with a HTML and a plain text version, or with text content and an attachment).
- The first section is just a text/plain (or similar) part, containing e.g. some text to explain that this is a multilingual email, and if you’re seeing this then your email client probably doesn’t support them, but you should just be able to scroll down (or else look at the attachments) to find content in the language you read.
- Subsequent sections have:
  - Content-Disposition: inline, so that for most people using non-compliant email software they can just scroll down until they find a language they can read,
  - Content-Type: message/rfc822, so that an entire message can be embedded (which allows other headers, like the Subject:, to be translated too),
  - a Content-Language: header, specifying the ISO code of the language represented in that section, and
  - optionally, a Content-Translation-Type: header, specifying either original (this is the original text), human (this was translated by a human), or automated (this was the result of machine translation) – this could be used to let a user say e.g. that they’d prefer a human translation to an automated one, given the choice between two second languages.
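To make that structure a bit more concrete, here’s a rough sketch of how you might assemble such a message using Python’s standard email package. This isn’t taken from the RFC or from any particular mail tool: the function names (language_part, build_multilingual) and the example addresses are made up for illustration, and it only covers the headers described in the list above.

```python
from email.mime.message import MIMEMessage
from email.mime.multipart import MIMEMultipart
from email.mime.text import MIMEText


def language_part(lang, translation_type, subject, body):
    """Wrap one translation as an inline message/rfc822 part (hypothetical helper)."""
    inner = MIMEText(body, "plain", "utf-8")
    inner["Subject"] = subject  # the embedded message carries its own translated Subject
    part = MIMEMessage(inner)   # sets Content-Type: message/rfc822
    part["Content-Disposition"] = "inline"
    part["Content-Language"] = lang
    part["Content-Translation-Type"] = translation_type
    return part


def build_multilingual(sender, recipient, subject, fallback, translations):
    """Assemble a multipart/multilingual email (hypothetical helper).

    translations is a list of (lang, translation_type, subject, body) tuples.
    """
    outer = MIMEMultipart("multilingual")
    outer["From"] = sender
    outer["To"] = recipient
    outer["Subject"] = subject
    # First section: plain-text explanation for clients that don't understand
    # multipart/multilingual.
    outer.attach(MIMEText(fallback, "plain", "utf-8"))
    # Subsequent sections: one embedded message per language.
    for lang, translation_type, part_subject, body in translations:
        outer.attach(language_part(lang, translation_type, part_subject, body))
    return outer


if __name__ == "__main__":
    message = build_multilingual(
        "sender@example.com",
        "recipient@example.com",
        "Does your email client support RFC8255?",
        "This is a multipart message in multiple languages.",
        [
            ("en", "original", "Does your email client support RFC8255?",
             "This is the original email in English."),
            ("fr", "automated",
             "Votre client de messagerie prend-il en charge la norme RFC8255?",
             "Le présent est le courriel traduit en français."),
        ],
    )
    print(message.as_string())
```

Python picks the boundary marker itself when the message is serialised, so the output won’t match any hand-written example byte-for-byte, but the overall shape should be the same.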

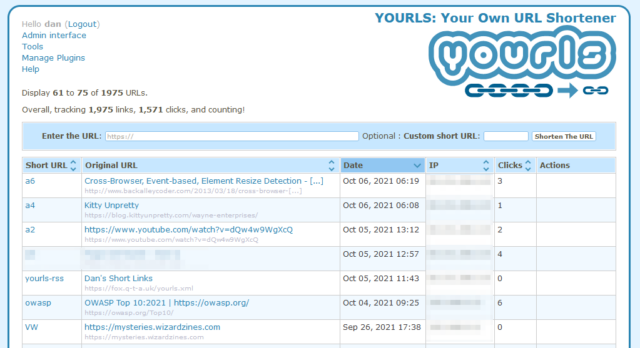

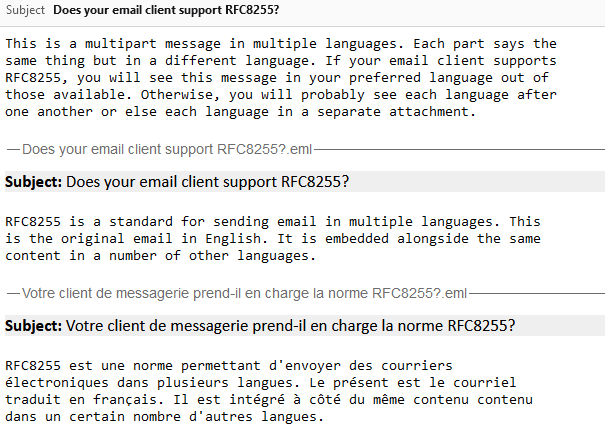

Let’s see a sample email:

    Content-Type: multipart/multilingual; boundary=10867f6c7dbe49b2cfc5bf880d888ce1c1f898730130e7968995bea413a65664
    To: <b24571@danq.me>
    From: <rfc8255test-noreply@danq.link>
    Subject: Does your email client support RFC8255?
    Mime-Version: 1.0
    Date: Fri, 27 Sep 2024 10:06:56 +0000

    --10867f6c7dbe49b2cfc5bf880d888ce1c1f898730130e7968995bea413a65664
    Content-Transfer-Encoding: quoted-printable
    Content-Type: text/plain; charset=utf-8

    This is a multipart message in multiple languages. Each part says the same thing but in a different language. If your email client supports RFC8255, you will see this message in your preferred language out of those available. Otherwise, you will probably see each language after one another or else each language in a separate attachment.

    --10867f6c7dbe49b2cfc5bf880d888ce1c1f898730130e7968995bea413a65664
    Content-Disposition: inline
    Content-Type: message/rfc822
    Content-Language: en
    Content-Translation-Type: original

    Subject: Does your email client support RFC8255?
    Content-Type: text/plain; charset="UTF-8"
    Content-Transfer-Encoding: 7bit
    MIME-Version: 1.0

    RFC8255 is a standard for sending email in multiple languages. This is the original email in English. It is embedded alongside the same content in a number of other languages.

    --10867f6c7dbe49b2cfc5bf880d888ce1c1f898730130e7968995bea413a65664
    Content-Disposition: inline
    Content-Type: message/rfc822
    Content-Language: fr
    Content-Translation-Type: automated

    Subject: Votre client de messagerie prend-il en charge la norme RFC8255?
    Content-Type: text/plain; charset="UTF-8"
    Content-Transfer-Encoding: 7bit
    MIME-Version: 1.0

    RFC8255 est une norme permettant d'envoyer des courriers électroniques dans plusieurs langues. Le présent est le courriel traduit en français. Il est intégré à côté du même contenu contenu dans un certain nombre d'autres langues.

    --10867f6c7dbe49b2cfc5bf880d888ce1c1f898730130e7968995bea413a65664--
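For the receiving side, here’s a minimal sketch of how a client might pick which part of an email like this to show, given the reader’s “order of preference”. It uses Python’s standard email parser; the function names are hypothetical, and the matching is deliberately naive (it only compares the primary language subtag, so en-GB matches en). A real client would probably want proper language-range matching along the lines of RFC 4647, and might also weigh up the Content-Translation-Type.

```python
import email


def primary_subtag(tag):
    """Return the primary language subtag, e.g. 'en' for 'en-GB'."""
    return tag.strip().lower().split("-")[0]


def pick_language_part(msg, preferred):
    """Choose what to display from a parsed message (hypothetical helper).

    preferred is a list of language tags, most-preferred first.
    Returns the embedded message for the first preferred language found,
    or the first (fallback) part if none match.
    """
    if msg.get_content_type() != "multipart/multilingual" or not msg.is_multipart():
        return msg  # not a multilingual email: show it as-is
    parts = msg.get_payload()
    candidates = [
        part for part in parts[1:]
        if part.get_content_type() == "message/rfc822" and part.is_multipart()
    ]
    for wanted in preferred:
        for part in candidates:
            lang = part.get("Content-Language")
            if lang and primary_subtag(lang) == primary_subtag(wanted):
                # A parsed message/rfc822 part holds exactly one embedded message.
                return part.get_payload(0)
    return parts[0]  # no language matched: fall back to the explanatory part


def read_in_preferred_language(raw, preferred):
    """Parse raw email text and pick the part to display (hypothetical helper)."""
    return pick_language_part(email.message_from_string(raw), preferred)
```

Fed an email shaped like the sample and a preference list such as ["fr", "en"], this should hand back the embedded French message, translated Subject: and all; with a list containing none of the available languages, it falls back to the explanatory first part.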

Can I use it?

That proposed standard turns seven years old next month. Sooo… can we start using it?4

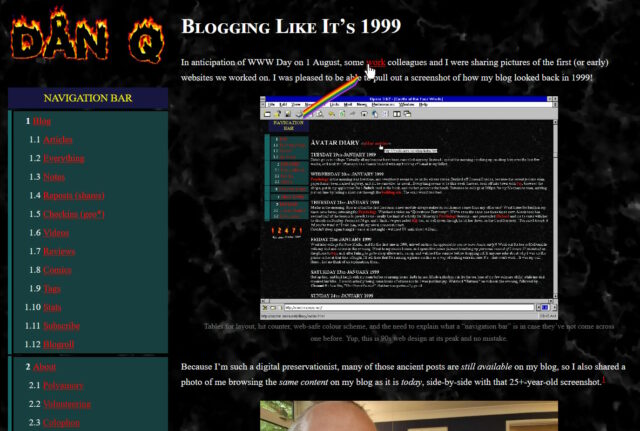

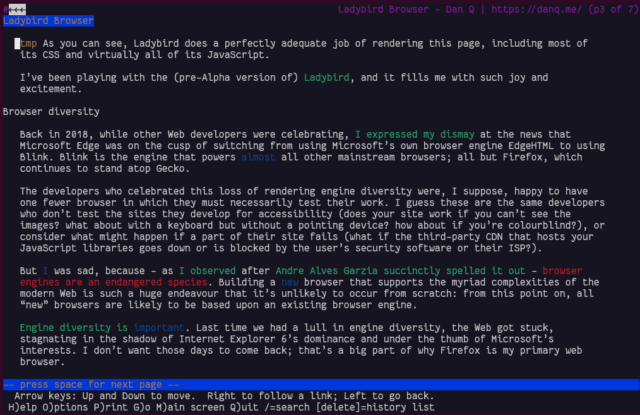

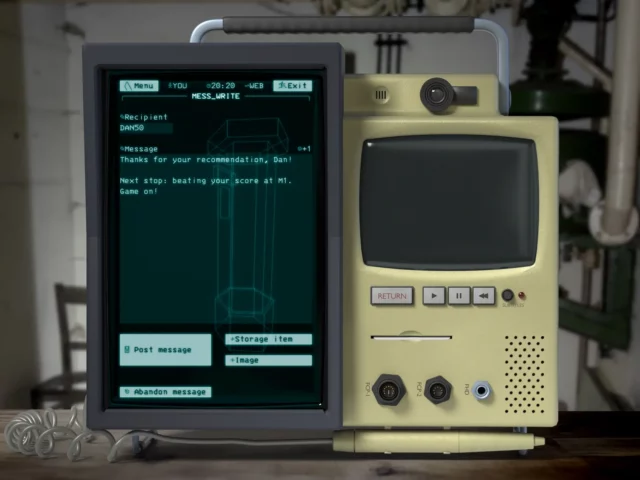

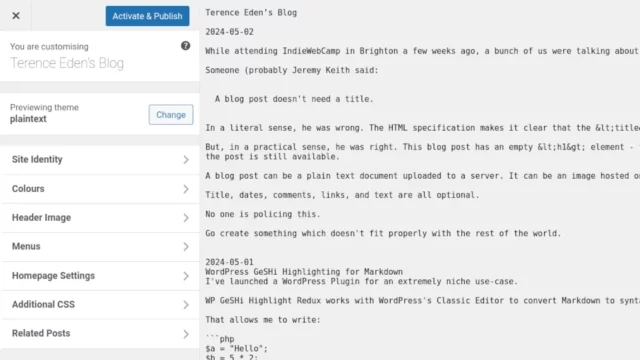

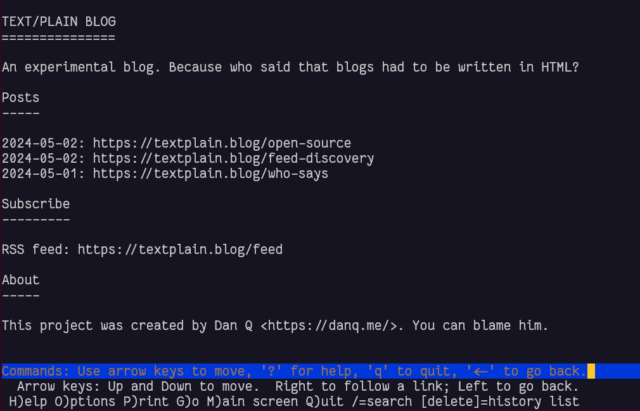

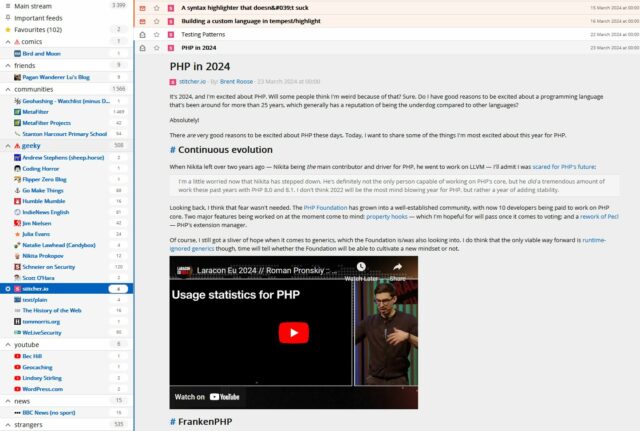

Turns out… not so much. I discovered that NeoMutt supports it:

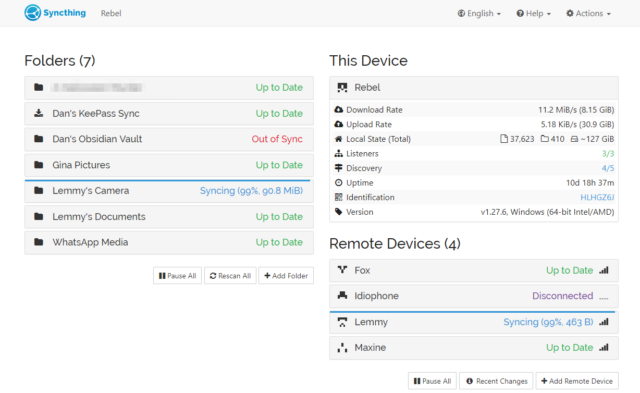

Support in other clients is… variable.

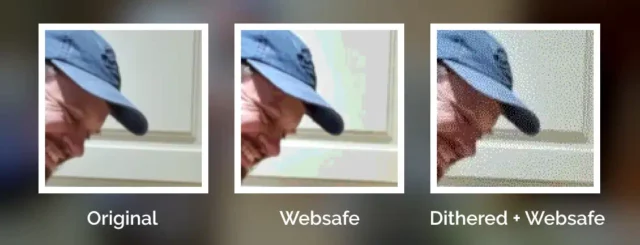

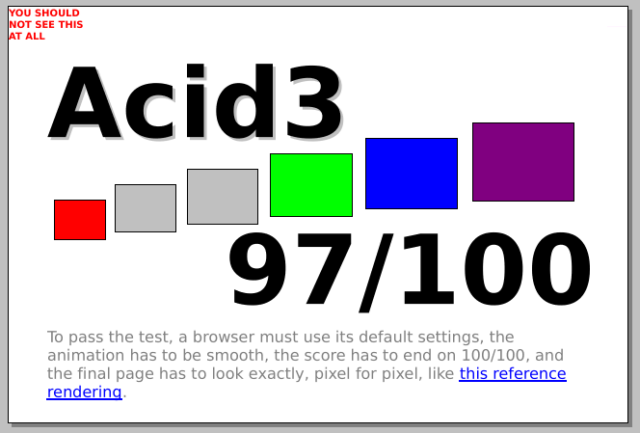

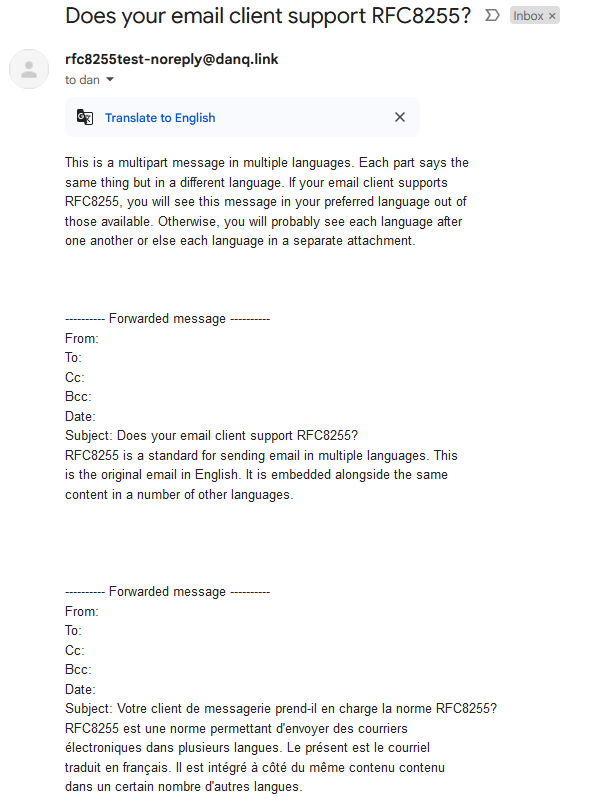

A reasonable number of them don’t understand the multilingual directives but still show the email in a way that doesn’t suck:

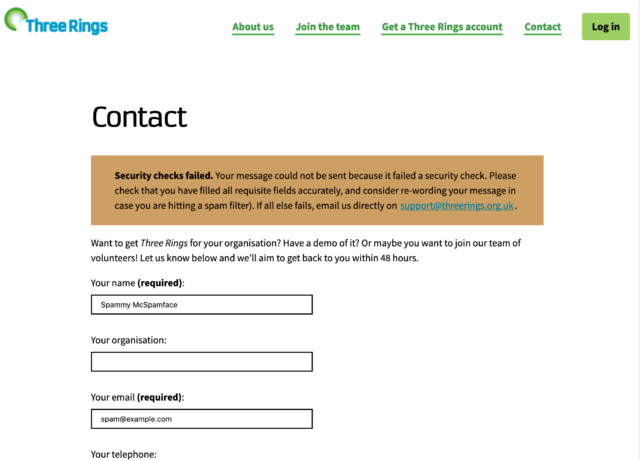

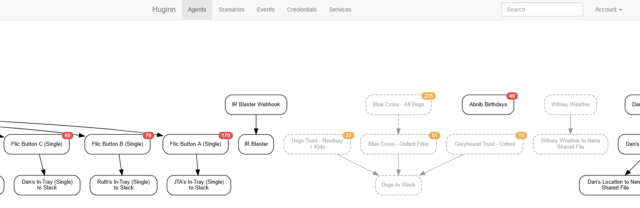

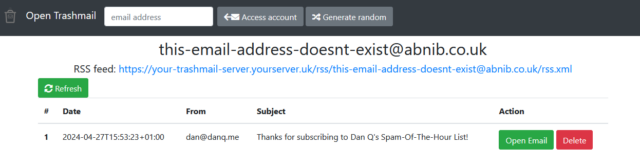

Some shoot for the stars but blow up on the launch pad:

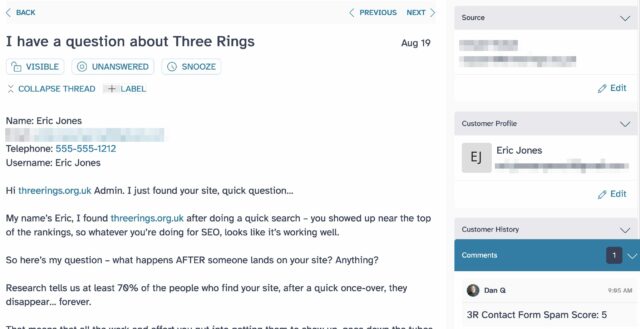

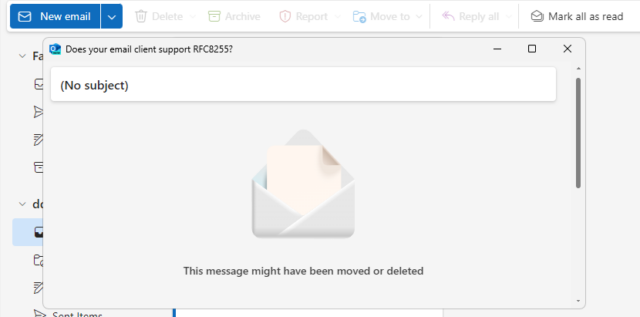

Others still seem to be actively trying to make life harder for you:

.eml attachments… which it then won’t display, forcing you to download them and find some other email client to look at them in!5

And still others just shit the bed at the idea that you might read an email like this one:

That’s just the clients I’ve tested, but I can’t imagine that others are much different. If you give it a go yourself with something I’ve not tried, then let me know!

I guess this means that standardised multilingual emails might be forever consigned to the “nice to have but it never took off so we went in a different direction” corner of the Internet, along with the <keygen> HTML element and the concept of privacy.

Footnotes

1 I didn’t receive quite as much bilingual email as you might expect, given that the University committed to delivering most of its correspondence in both English and Welsh. But I received a lot more than I do nowadays, for example.

2 Although you might not guess it, given how many websites completely ignore your Accept-Language header, even where it’s provided, and simply try to “guess” what language you want using IP geolocation or something, and then require that you find whatever shitty bit of UI they’ve hidden their language selector behind if you want to change it, storing the result in a cookie so it inevitably gets lost and has to be set again the next time you visit.

3 I suppose that if you were sending HTML emails then you might use the lang="..." attribute to mark up different parts of the message as being in different languages. But that doesn’t solve all of the problems, and introduces a couple of fresh ones.

4 If it were a cool new CSS feature, you can guarantee that it’d be supported by every major browser (except probably Safari) by now. But email doesn’t get so much love as the Web, sadly.

5 Worse yet, if you’re using ProtonMail with a third-party client, ProtonMail screws up RFC8255 emails so badly that they don’t even work properly in e.g. NeoMutt any more! ProtonMail swaps the multipart/multilingual content type for multipart/mixed and strips the Content-Language: headers, making the entire email objectively less useful.