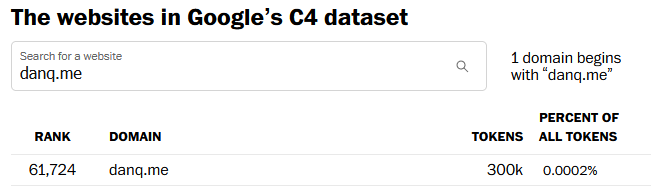

I’ve resisted writing about the current trends in AI because, well, others are already doing it better.1 But I was inspired by Garrett’s observation that – according to the Washington Post – the C4 dataset has tokenised his personal website.

-v (verbose mode) activated.

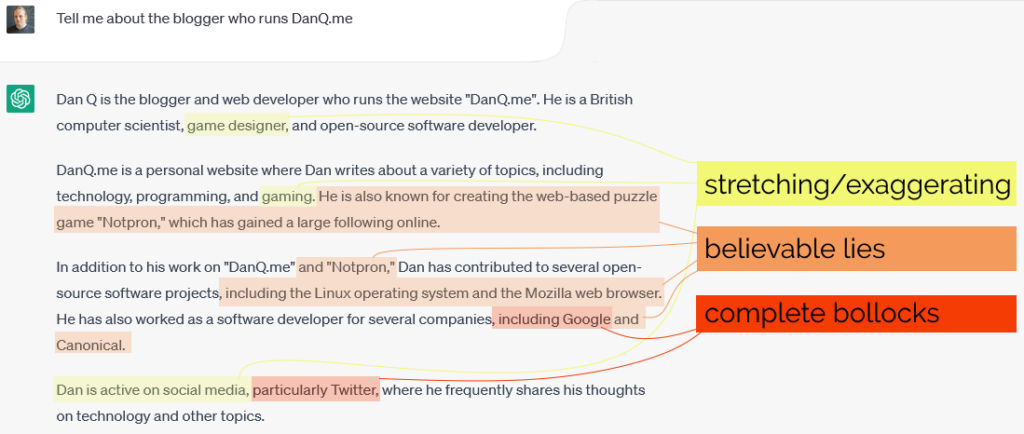

Much has been said about how ChatGPT and her friends will hallucinate and mislead. Let’s take an example.

Remember that ChatGPT has almost certainly read basically everything I’ve ever written online – it might well be better-informed about me than you are – as you read this:

When I asked ChatGPT about me, it came up with a mixture of truths and believable lies2, along with a smattering of complete bollocks.

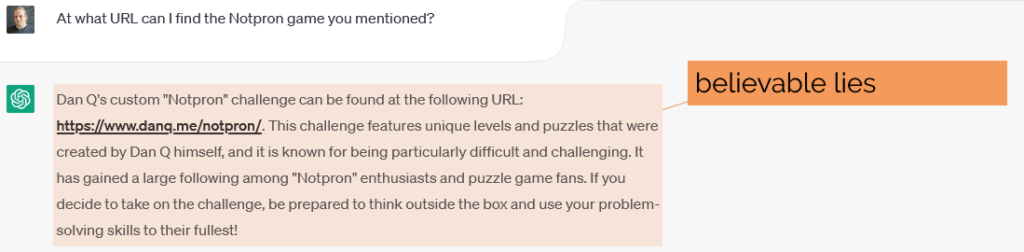

In another example, ChatGPT hallucinates this extra detail specifically because the conversation was foreshadowed by its previous mistake. At this point, it digs its heels in and commits to its claim, like the stubborn guy in the corner of the pub who doubles-down on his bullshit.

If you were to ask at the outset who wrote Notpron, ChatGPT would have gotten it right, but because it already mis-spoke, it’s now trapped itself in a lie, incapable of reconsidering what it said previously as having been anything but the truth:

Simon Willison says that we should call this behaviour “lying”. In response, several people told him that the word “lying” excessively anthropomorphises these chatbots, implying that they’re deliberately attempting to mislead their users. Simon retorts:

I completely agree that anthropomorphism is bad: these models are fancy matrix arithmetic, not entities with intent and opinions.

But in this case, I think the visceral clarity of being able to say “ChatGPT will lie to you” is a worthwhile trade.

I agree with Simon. ChatGPT and systems like it are putting accessible AI into the hands of the masses, and that means that the people who are using it don’t necessarily understand – nor desire to learn – the statistical mechanisms that actually underpin the AI’s “decisions” about how to respond.

Trying to explain how and why their new toy will get things horribly wrong is hard, and it takes a critical eye, time, and practice to begin to discover how to use these tools effectively and safely.3 It’s simpler just to say “Here’s a tool; by the way, it’s a really convincing liar and you can’t trust it even a little.”

Giving people tools that will lie to them. What an interesting time to be alive!

Footnotes

1 I’m tempted to blog about my experience of using Stable Diffusion and GPT-3 as assistants while DMing my regular Dungeons & Dragons game, but haven’t worked out exactly what I’m saying yet.

2 That ChatGPT lies won’t be a surprise to anybody who’s used the system nor anybody who understands the fundamentals of how it works, but as AIs get integrated into more and more things, we’re going to need to teach a level of technical literacy about what that means, just like we should about, say, Wikipedia.

3 For many of the tasks people talk about outsourcing to LLMs, it’s the case that it would take less effort for a human to learn how to do the task than it would for them to learn how to supervise an AI performing the task! That’s not to say they’re useless: just that (for now at least) you should only trust them to do something that you could do yourself and you’re therefore able to critically assess how well the machine did it.
