This checkin to GCM8CB Urania reflects a geocaching.com log entry. See more of Dan's cache logs.

I’ve been in Vienna for a week to meet work colleagues, and today – our meetings at an end and still with a few hours before my plane leaves – I decided to come out and find some local geocaches.

At the GZ there were lots of good hiding places, so I reached over and around. In a few seconds my fingers touched the cache. Great!

But then – disaster! As others have observed, the magnets in this cache aren’t the

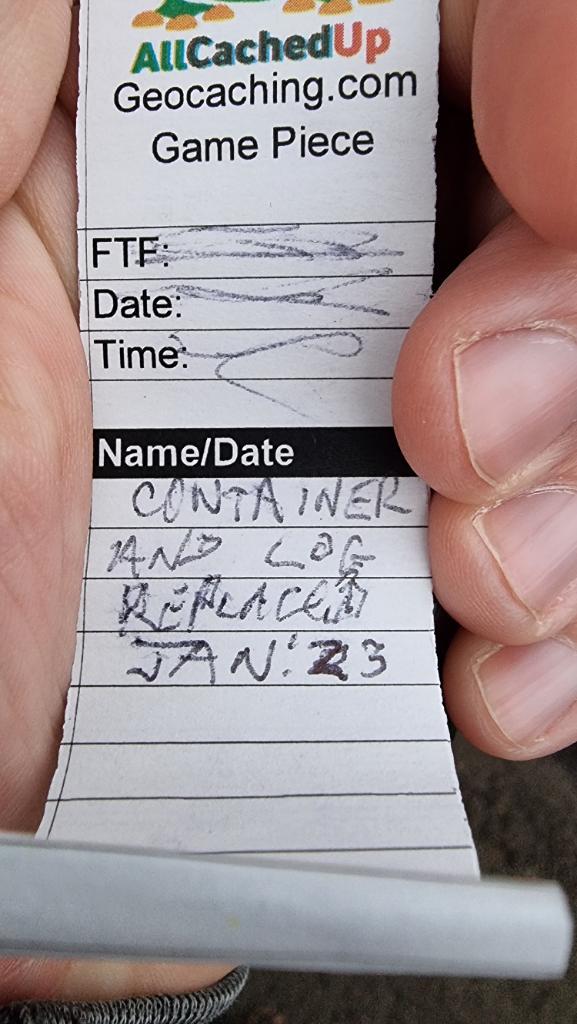

strongest and it bounced free. It fell a long, long way! I rushed across the road and down to the lower level to grab it. Luckily the cache container was unharmed, so I signed the log

as I carried it back to up its hiding place. What an adventure!

FP awarded for the cool container and hiding place, and for the fun story you helped me tell. Greetings from Oxfordshire, UK. TFTC!