As part of my efforts to reclaim the living room from the children, I’m building a new gaming PC for the playroom. She’s called Bee, and – thanks to the absolute insanity that is The Tower 300 case from Thermaltake – she’s one of the most bonkers PC cases I’ve ever worked in.

Tag: computers

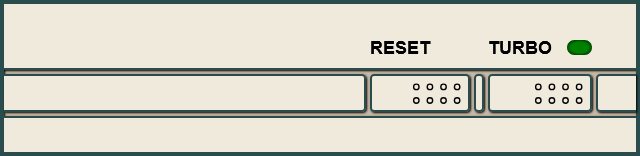

Beige Buttons

Back before PCs were black, they were beige. And even further back, they’d have not only “Reset” and “Power” buttons, but also a “Turbo” button.

I’m not here to tell you what it did1. No, I’m here to show you how to re-live those glory days with a Turbo button of your very own, implemented as a reusable Web Component that you can install on your very own website:

(Don’t press the Reset button; other people are using this website!)

If you’d like some beige buttons of your own, you can get them at Beige-Buttons.DanQ.dev. Two lines of code and you can pop them on any website you like. Also, it’s open-source under the Unlicense so you can take it, break it, or do what you like with it.

I’ve been slumming it in some Web Revivalist circles lately, and it might show. Best Resolution (with all its 88×31s2), which I launched last month, for example.

You might anticipate seeing more retro fun-and-weird going on here. You might be right.

Now… be honest – how many of you pressed the “reset” button even though you were told not to?

Footnotes

1 If you know, you know. And if you don’t, then take a look at the website for my new web component, which has an “about” section.

2 I guess that’s another “if you know, you know”, but at least you’ll get fewer conflicting answers if you search for an explanation than you will if you try to understand the turbo button.

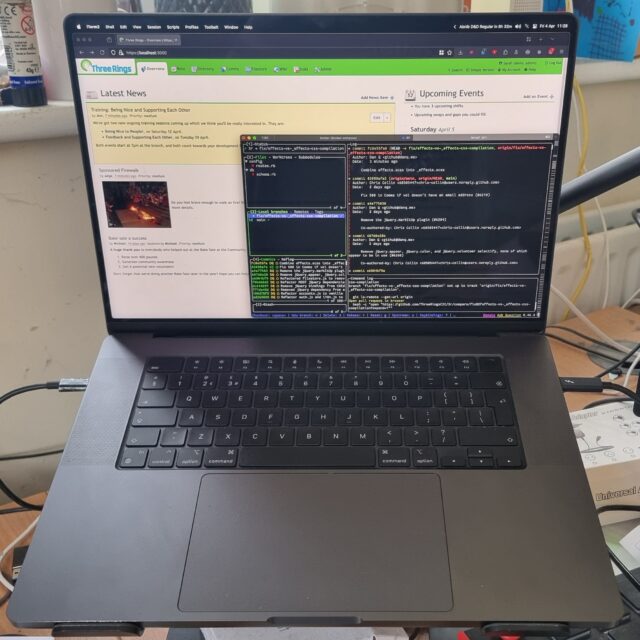

Dan Has Too Many Monitors

My new employer sent me a laptop and a monitor, which I immediately added to my already pretty-heavily-loaded desk. Wanna see?

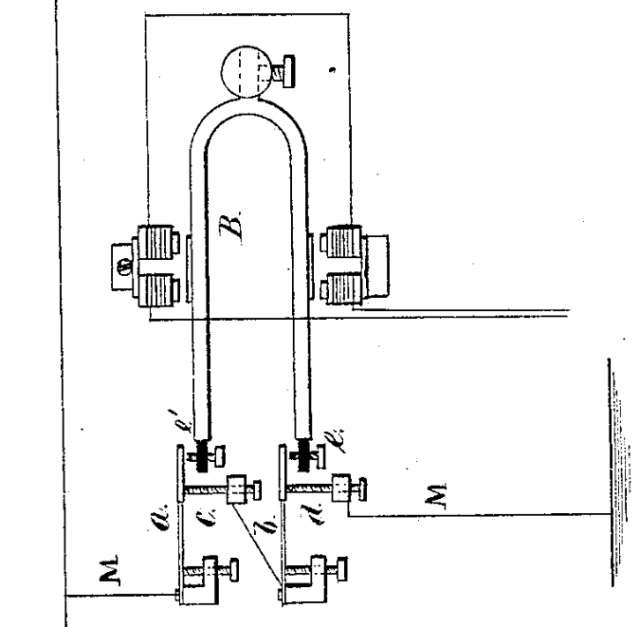

DB13W3

I’d like to nominate DB13W3 for Most Cursed Connector. I mean, just look at that thing!

Bonus: there were at least two different, incompatible, pinout “standards” for this thing, so there was no guarantee that a random monitor with this cable would connect to your workstation, even if it had the right port.

3Camp 2025

I’m off for a week of full-time volunteering with Three Rings at 3Camp, our annual volunteer hack week: bringing together our distributed team for some intensive in-person time, working to make life better for charities around the world.

And if there’s one good thing to come out of me being suddenly and unexpectedly laid-off two days ago, it’s that I’ve got a shiny new laptop to do my voluntary work on (Automattic have said that I can keep it).

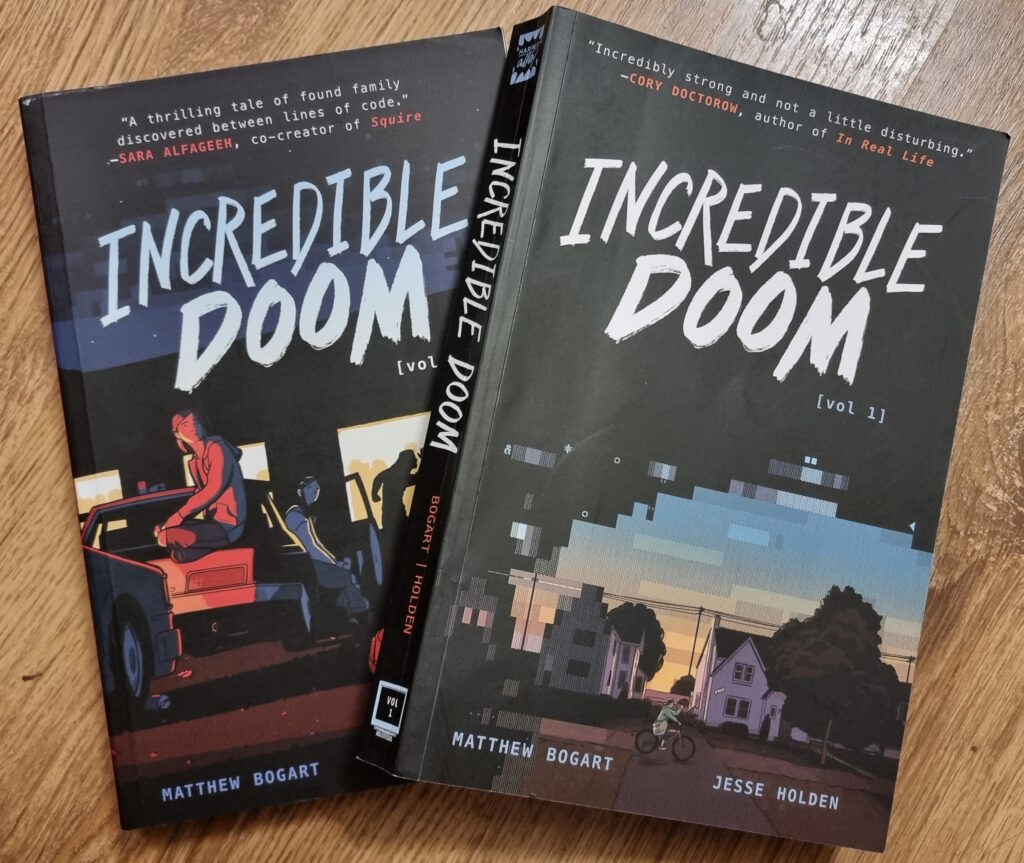

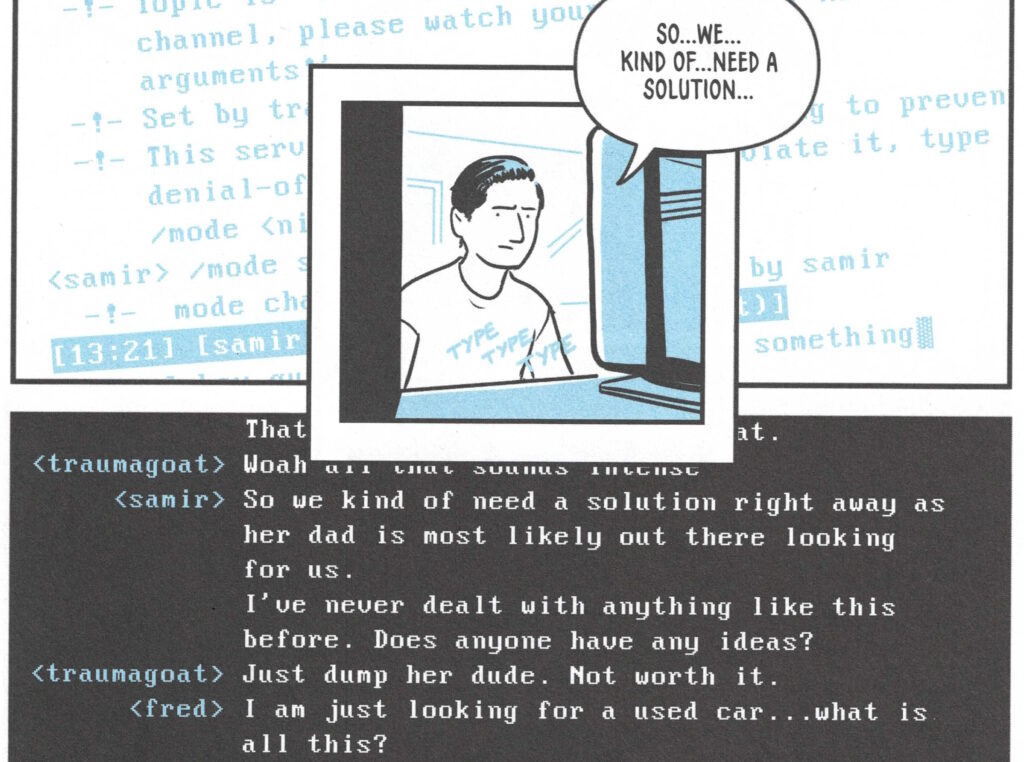

Incredible Doom

I just finished reading Incredible Doom volumes 1 and 2, by Matthew Bogart and Jesse Holden, and man… that was a heartwarming and nostalgic tale!

Set in the early-to-mid-1990s world in which the BBS is still alive and kicking, and the Internet’s gaining traction but still lacks the “killer app” that will someday be the Web (which is still new and not widely-available), the story follows a handful of teenagers trying to find their place in the world. Meeting one another in the 90s explosion of cyberspace, they find online communities that provide connections that they’re unable to make out in meatspace.

It touches on experiences of 90s cyberspace that, for many of us, were very definitely real. And while my online “scene” at around the time that the story is set might have been different from that of the protagonists, there’s enough of an overlap that it felt startlingly real and believable. The online world in which I – like the characters in the story – hung out… but which occupied a strange limbo-space: both anonymous and separate from the real world but also interpersonal and authentic; a frontier in which we were still working out the rules but within which we still found common bonds and ideals.

Anyway, this is all a long-winded way of saying that Incredible Doom is a lot of fun and if it sounds like your cup of tea, you should read it.

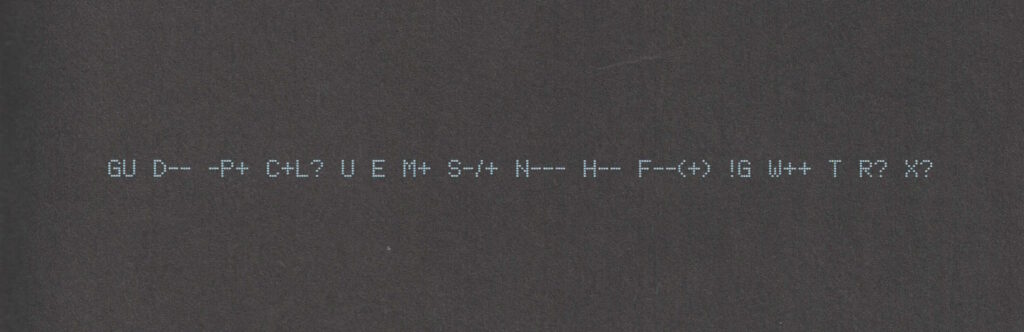

Also: shortly after putting the second volume down, I ended up updating my Geek Code for the first time in… ooh, well over a decade. The standards have moved on a little (not entirely in a good way, I feel; also they’ve diverged somewhat), but here’s my attempt:

----- BEGIN GEEK CODE VERSION 6.0 ----- GCS^$/SS^/FS^>AT A++ B+:+:_:+:_ C-(--) D:+ CM+++ MW+++>++ ULD++ MC+ LRu+>++/js+/php+/sql+/bash/go/j/P/py-/!vb PGP++ G:Dan-Q E H+ PS++ PE++ TBG/FF+/RM+ RPG++ BK+>++ K!D/X+ R@ he/him! ----- END GEEK CODE VERSION 6.0 -----

Footnotes

1 I was amazed to discover that I could still remember most of my Geek Code syntax and only had to look up a few components to refresh my memory.

My First MP3

Podcast Version

This post is also available as a podcast. Listen here, download for later, or subscribe wherever you consume podcasts.

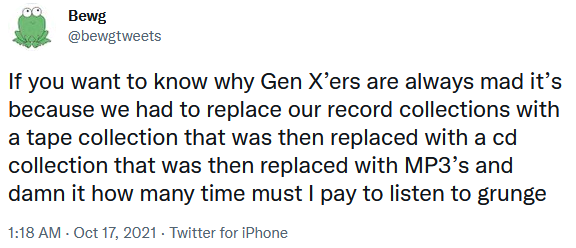

Somebody shared with me a tweet about the tragedy of being a Gen X’er and having to buy all your music again and again as formats evolve. Somebody else shared with me Kyla La Grange‘s cover of a particular song .Together… these reminded me that I’ve never told you the story of my first MP3…1

In the Summer of 1995 I bought the CD single of the (still excellent!) Set You Free by N-Trance.2 I’d heard about this new-fangled “MP3” audio format, so soon afterwards I decided to rip a copy of the song to my PC.

I was using a 66MHz 486SX CPU, and without an embedded FPU I didn’t

quite have the spare processing power to rip-and-encode in a single pass.3

So instead I first ripped to an uncompressed PCM .wav file and then performed the encoding: the former step

was done almost in real-time (I listened to the track as it ripped!), about 7 minutes. The latter step took about 20 minutes.

So… about half an hour in total, to rip a single song.

Creating a (what would now be considered an apalling) 32kHz mono-channel file, this meant that I briefly stored both a 27MB wave file and the final ~4MB MP3 file. 31MB might not sound huge, but I only had a total of 145MB of hard drive space at the time, so 31MB consumed over a fifth of my entire fixed storage! Even after deleting the intermediary wave file I was left with a single song consuming around 3% of my space, which is mind-boggling to think about in hindsight.

But it felt like magic. I called my friend Gary to tell him about it. “This is going to be massive!” I said. At the time, I meant for techy people: I could imagine a future in which, with more hard drive space, I’d keep all my music this way… or else bundle entire artists onto writable CDs in this new format, making albums obsolete. I never considered that over the coming decade or so the format would enter the public consciousness, let alone that it’d take off like it did.

The MP3 file I produced had a fault. Most of the way through the encoding process, I got bored and ran another program, and this must’ve interfered with the stream because there was an audible “blip” noise about 30 seconds from the end of the track. You’d have to be listening carefully to hear it, or else know what you were looking for, but it was there. I didn’t want to go through the whole process again, so I left it.

But that artefact uniquely identified that copy of what was, in the end, a popular song to have in your digital music collection. As the years went by and I traded MP3 files in bulk at LAN parties or on CD-Rs or, on at least one ocassion, on an Iomega Zip disk (remember those?), I’d ocassionally see

N-Trance - (Only Love Can) Set You Free.mp34 being passed around and play it, to see if it was “my”

copy.

Sometimes the ID3 tags had been changed because for example the previous owner had decided it deserved to be considered Genre: Dance instead of Genre: Trance5. But I could still identify that file because

of the audio fingerprint, distinct to the first MP3 I ever created.

I still had that file when I went to university (where it occupied a smaller proportion of my hard drive space) and hearing that distinctive “blip” would remind me about the ordeal that was involved in its creation. I don’t have it any more, but perhaps somebody else still does.

Footnotes

1 I might never have told this story on my blog, but eagle-eyed readers may remember that I’ve certainly hinted at it before now.

2 Rewatching that music video, I’m struck by a recollection of how crazy popular crossfades were on 1990s dance music videos. More than just a transition, I’m pretty sure that most of the frames of that video are mid-crossfade: it feels like I’m watching Kelly Llorenna hanging out of a sunroof but I accidentally left one of my eyeballs in a smoky nightclub and can still see out of it as well.

3 I initially tried to convert directly from red book format to an MP3 file, but the encoding process was too slow and the CD drive’s buffer filled up and didn’t get drained by the processor, which was still presumably bogged down with framing or fourier-transforming earlier parts of the track. The CD drive reasonably assumed that it wasn’t actually being used and spun-down the drive motor, and this caused it to lose its place in the track, killing the whole process and leaving me with about a 40 second recording.

4 Yes, that filename isn’t quite the correct title. I was wrong.

5 No, it’s clearly trance. They were wrong.

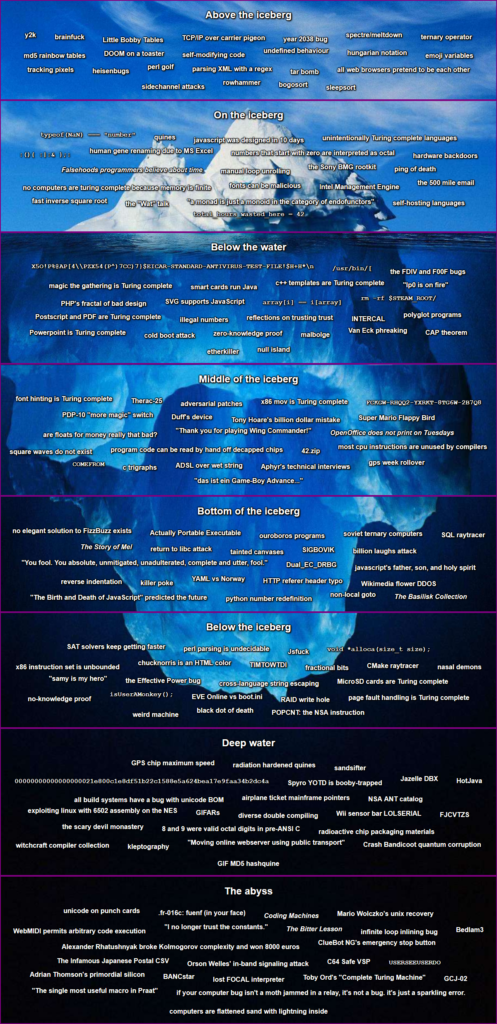

The Cursed Computer Iceberg Meme

This is a repost promoting content originally published elsewhere. See more things Dan's reposted.

More awesome from Blackle Mori, whose praises I sung recently over The Basilisk Collection. This time we’re treated to a curated list of 182 articles demonstrating the “peculiarities and weirdness” of computers. Starting from relatively well-known memes like little Bobby Tables, the year 2038 problem, and how all web browsers pretend to be each other, we descend through the fast inverse square root (made famous by Quake III), falsehoods programmers believe about time (personally I’m more of a fan of …names, but then you might expect that), the EICAR test file, the “thank you for playing Wing Commander” EMM386 in-memory hack, The Basilisk Collection itself, and the GIF MD5 hashquine (which I’ve shared previously) before eventually reaching the esoteric depths of posuto and the nightmare that is Japanese postcodes…

Plus many, many things that were new to me and that I’ve loved learning about these last few days.

It’s definitely not a competition; it’s a learning opportunity wrapped up in the weirdest bits of the field. Have an explore and feed your inner computer science geek.

Note #17583

Needed new UPS batteries.

Almost bought from @insight_uk but they require registration to checkout.

Purchased from @SourceUPSLtd instead.

Moral: having no “guest checkout” costs you business.

Rebuilding a Windows box with Chocolatey

Computers Don’t Like Moving House

As always seems to happen when I move house, a piece of computer hardware broke for me during my recent house move. It’s always exactly one piece of hardware, like it’s a symbolic recognition by the universe that being lugged around, rattling around and butting up against one another, is not the natural state of desktop computers. Nor is it a comfortable journey for the hoarder-variety of geek nervously sitting in front of them, tentatively turning their overloaded vehicle around each and every corner. UserFriendly said it right in this comic from 2003.

This time around, it was one of the hard drives in Renegade, my primary Windows-running desktop, that failed. (At least I didn’t break myself, this time.)

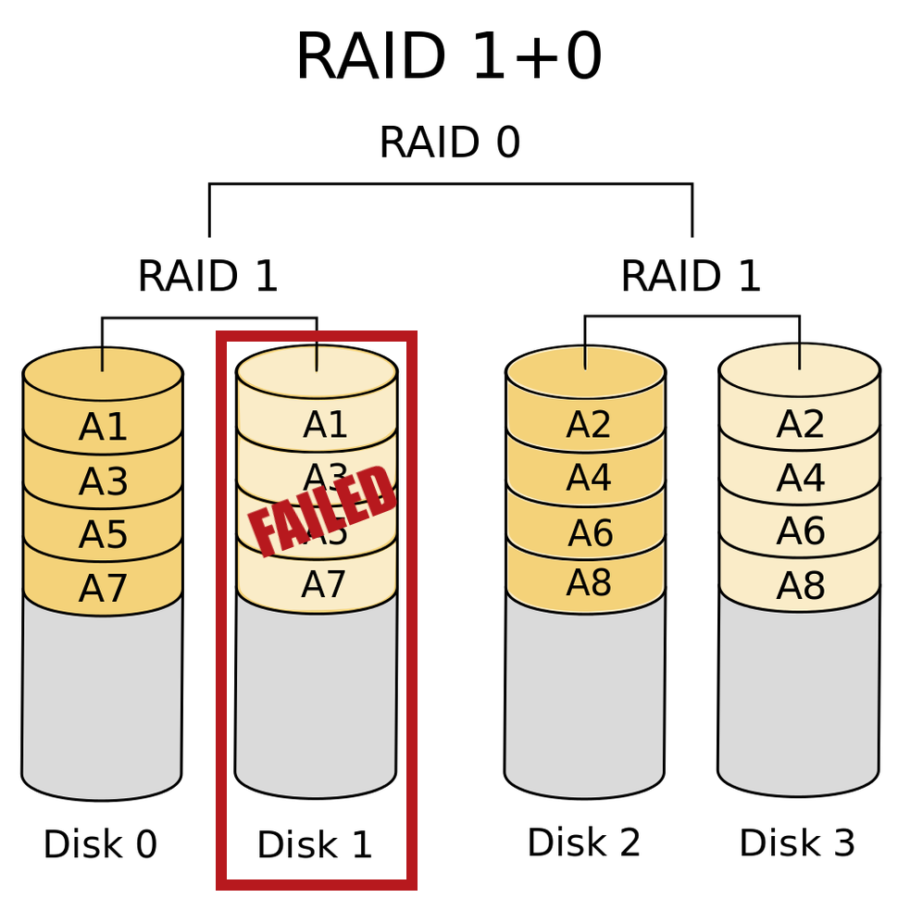

Fortunately, it failed semi-gracefully: the S.M.A.R.T. alarm went off about a week before it actually started causing real problems, giving me at least a little time to prepare, and – better yet – the drive was part of a four-drive RAID 10 hot-swappable array, which means that every single byte of data on that drive was already duplicated to a second drive.

Incidentally, this configuration may have indirectly contributed to its death: before I built Fox, our new household NAS, I used Renegade for many of the same purposes, but WD Blues are not really a “server grade” hard drive and this one and its siblings will have seen more and heavier use than they might have expected over the last few years. (Fox, you’ll be glad to hear, uses much better-rated drives for her arrays.)

So no data was lost, but my array was degraded. I could have simply repaired it and carried on by adding a replacement similarly-sized hard drive, but my needs have changed now that Fox is on the scene, so instead I decided to downgrade to a simpler two-disk RAID 1 array for important data and an “at-risk” unmirrored drive for other data. This retains the performance of the previous array at the expense of a reduction in redundancy (compared to, say, a three-disk RAID 5 array which would have retained redundancy at the expense of performance). As I said: my needs have changed.

Fixing Things… Fast!

In any case, the change in needs (plus the fact that nobody wants watch an array rebuild in a different configuration on a drive with system software installed!) justified a reformat-and-reinstall, which leads to the point of this article: how I optimised my reformat-and-reinstall using Chocolatey.

Chocolatey is a package manager for Windows: think like apt for Debian-like *nices (you know I do!) or Homebrew for MacOS. For previous Windows system rebuilds I’ve enjoyed the simplicity of Ninite, which will build you a one-click installer for your choice of many of your favourite tools, so you can get up-and-running faster. But Chocolatey’s package database is much more expansive and includes bonus switches for specifying particular versions of applications, so it’s a clear winner in my mind.

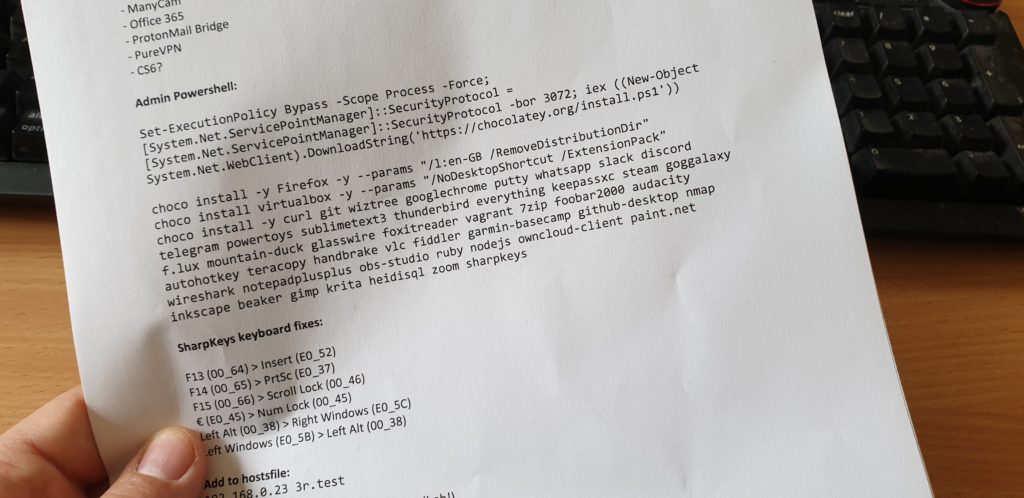

So I made up a Windows installation pendrive and added to it a “script” of things to do to get Renegade back into full working order. You can read the full script here, but the essence of it was:

- Reconfigure the RAID array, reformat, reinstall Windows, and create an account.

- Install things I routinely use that aren’t available on Chocolatey (I’d pre-copied these onto the pendrive for laziness): Synergy, Beamgun, Backblaze, ManyCam, Office 365, ProtonMail Bridge, and PureVPN.

- Install Chocolatey by running:

Set-ExecutionPolicy Bypass -Scope Process -Force; [System.Net.ServicePointManager]::SecurityProtocol = [System.Net.ServicePointManager]::SecurityProtocol -bor 3072; iex ((New-Object System.Net.WebClient).DownloadString('https://chocolatey.org/install.ps1')) - Install everything else (links provided in case you’re interested in what I “run”!) by running:

choco install -y Firefox -y --params "/l:en-GB /RemoveDistributionDir"

choco install virtualbox -y --params "/NoDesktopShortcut /ExtensionPack" --version 6.0.22

choco install -y 7zip audacity autohotkey beaker curl discord everything f.lux fiddler foobar2000 foxitreader garmin-basecamp gimp git github-desktop glasswire goggalaxy googlechrome handbrake heidisql inkscape keepassxc krita mountain-duck nmap nodejs notepadplusplus obs-studio owncloud-client paint.net powertoys putty ruby sharpkeys slack steam sublimetext3 telegram teracopy thunderbird vagrant vlc whatsapp wireshark wiztree zoom - Configuration (e.g. set up my unusual keyboard mappings, register software, set up remote connections and backups, etc.).

By scripting virtually all of the above I was able to rearrange hard drives in and then completely reimage a (complex) working Windows machine with well under an hour of downtime; I can thoroughly recommend Chocolatey next time you have to set up a new Windows PC (or just to expand what’s installed on your existing one). There’s a GUI if you’re not a fan of the command line, of course.

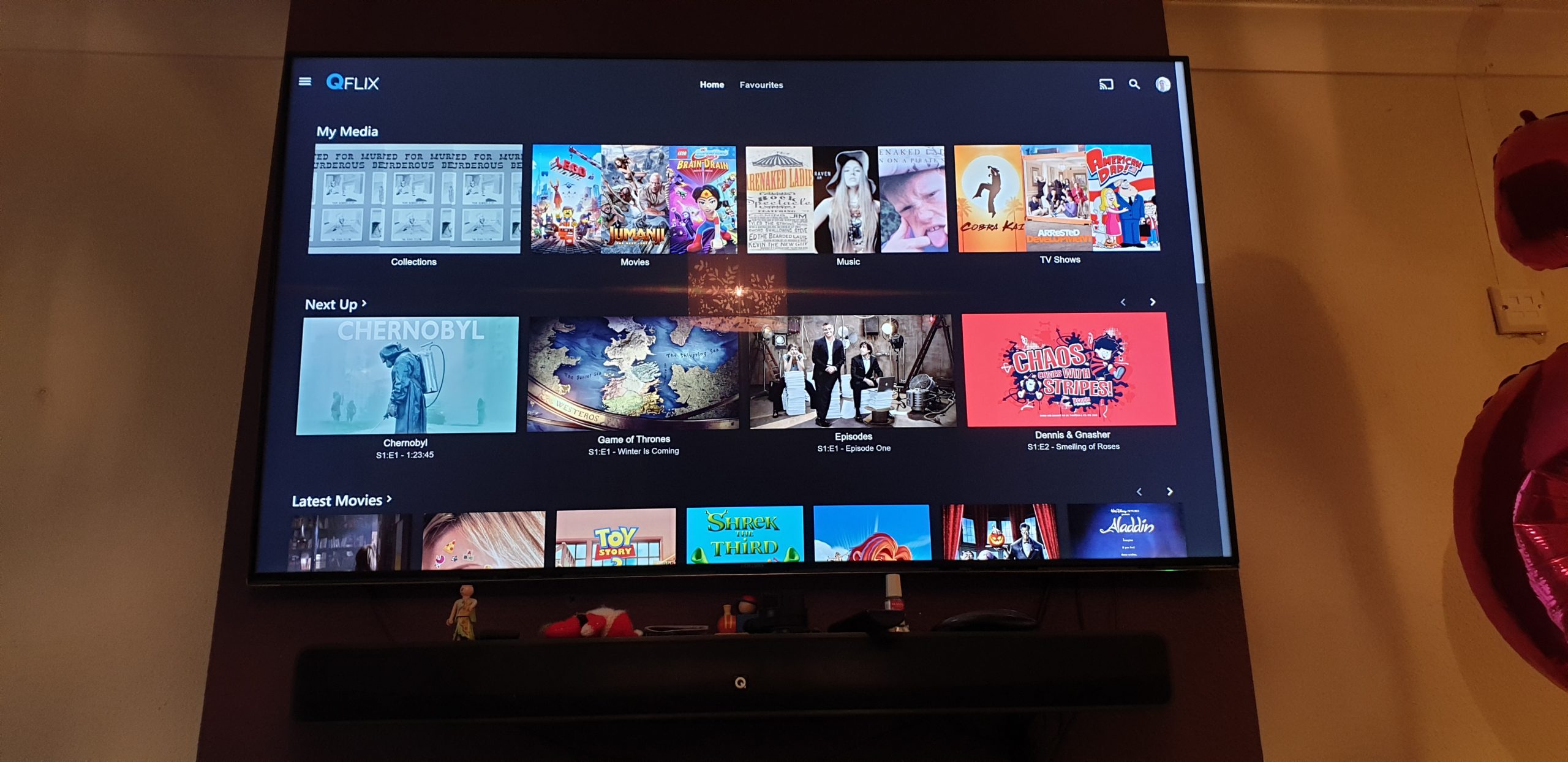

Fox (Household NAS)

Last week I built Fox, the newest addition to our home network. Fox, whose specification called for not one, not two, not three but four 12 terabyte hard disk drives was built principally as a souped-up NAS device – a central place for us all to safely hold and control access to important files rather than having them spread across our various devices – but she’s got a lot more going on that that, too.

Fox has:

- Enough hard drive space to give us 36TB of storage capacity plus 12TB of parity, allowing any one of the drives to fail without losing any data.

- “Headroom” sufficient to double its capacity in the future without significant effort.

- A mediumweight graphics card to assist with real-time transcoding, helping her to convert and stream audio and videos to our devices in whatever format they prefer.

- A beefy processor and sufficient RAM to run a dozen virtual machines supporting a variety of functions like software development, media ripping and cataloguing, photo rescaling, reverse-proxying, and document scanning (a planned future purpose for Fox is to have a network-enabled scanner near our “in-trays” so that we can digitise and OCR all of our post and paperwork into a searchable, accessible, space-saving collection).

The last time I filmed myself building a PC was when I built Cosmo, a couple of desktops ago. He turned out to be a bit of a nightmare: he was my first fully-watercooled computer and he leaked everywhere: by the time I’d done all the fixing and re-fixing to make him behave nicely, I wasn’t happy with the video footage and I never uploaded it. I’d been wary, almost-superstitious, about filming a build since then, but I shot a timelapse of Fox’s construction and it turned out pretty well: you can watch it below or on YouTube or QTube.

The timelapse slows to real-time, about a minute in, to illustrate a point about the component test I did with only a CPU (and cooler), PSU, and RAM attached. Something I routinely do when building computers but which I only recently discovered isn’t commonly practised is shown: that the easiest way to power on a computer without attaching a power switch is just to bridge the power switch pins using your screwdriver!

Fox is running Unraid, an operating system basically designed for exactly these kinds of purposes. I’ve been super-impressed by the ease-of-use and versatility of Unraid and I’d recommend it if you’ve got a similar NAS project in your future! I’d also like to sing the praises of the Fractal Design Node 804 case: it’s not got quite as many bells-and-whistles as some cases, but its dual-chamber design is spot-on for a multipurpose NAS, giving ample room for both full-sized expansion cards and heatsinks and lots of hard drives in a relatively compact space.

Fix your PC with a crystal pendulum

This is a repost promoting content originally published elsewhere. See more things Dan's reposted.

Uploaded to YouTube 11 years ago, in what now feels like an earlier era, this old classic still makes me smile every time.

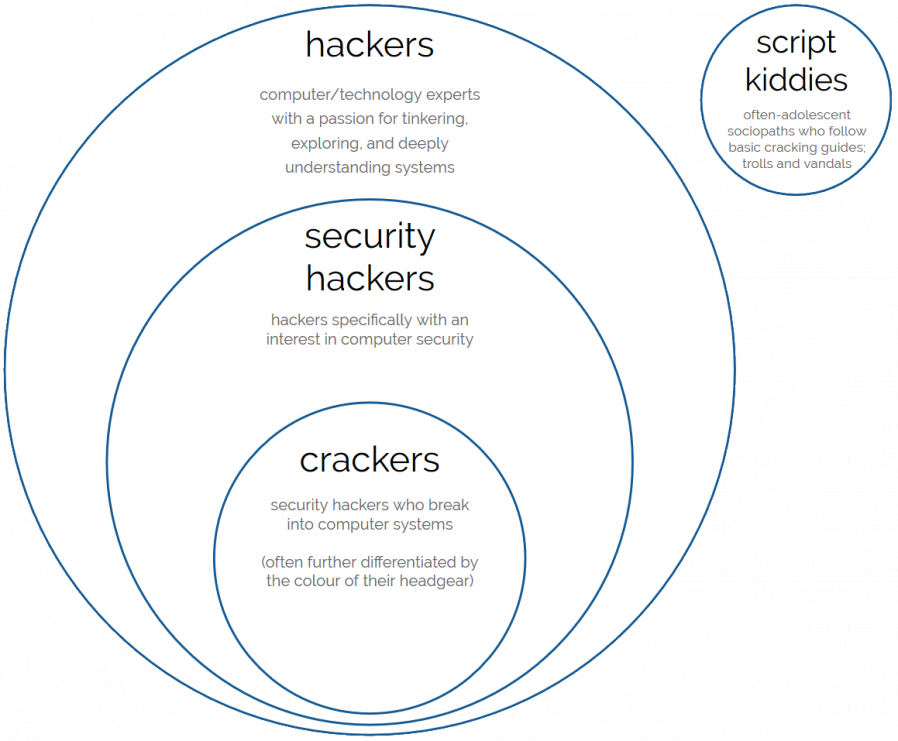

Evolving Computer Words: “Hacker”

This is part of a series of posts on computer terminology whose popular meaning – determined by surveying my friends – has significantly diverged from its original/technical one. Read more evolving words…

Few words have seen such mutation of meaning over their lifetimes as the word “silly”. The earliest references, found in Old English, Proto-Germanic, and Old Norse and presumably having an original root even earlier, meant “happy”. By the end of the 12th century it meant “pious”; by the end of the 13th, “pitiable” or “weak”; only by the late 16th coming to mean “foolish”; its evolution continues in the present day.

But there’s little so silly as the media-driven evolution of the word “hacker” into something that’s at least a little offensive those of us who probably would be described as hackers. Let’s take a look.

Hacker

What people think it means

Computer criminal with access to either knowledge or tools which are (or should be) illegal.

What it originally meant

Expert, creative computer programmer; often politically inclined towards information transparency, egalitarianism, anti-authoritarianism, anarchy, and/or decentralisation of power.

The Past

The earliest recorded uses of the word “hack” had a meaning that is unchanged to this day: to chop or cut, as you might describe hacking down an unruly bramble. There are clear links between this and the contemporary definition, “to plod away at a repetitive task”. However, it’s less certain how the word came to be associated with the meaning it would come to take on in the computer labs of 1960s university campuses (the earliest references seem to come from around April 1955).

There, the word hacker came to describe computer experts who were developing a culture of:

- sharing computer resources and code (even to the extent, in extreme cases, breaking into systems to establish more equal opportunity of access),

- learning everything possible about humankind’s new digital frontiers (hacking to learn, not learning to hack)

- judging others only by their contributions and not by their claims or credentials, and

- discovering and advancing the limits of computers: it’s been said that the difference between a non-hacker and a hacker is that a non-hacker asks of a new gadget “what does it do?”, while a hacker asks “what can I make it do?”

It is absolutely possible for hacking, then, to involve no lawbreaking whatsoever. Plenty of hacking involves writing (and sharing) code, reverse-engineering technology and systems you own or to which you have legitimate access, and pushing the boundaries of what’s possible in terms of software, art, and human-computer interaction. Even among hackers with a specific interest in computer security, there’s plenty of scope for the legal pursuit of their interests: penetration testing, security research, defensive security, auditing, vulnerability assessment, developer education… (I didn’t say cyberwarfare because 90% of its application is of questionable legality, but it is of course a big growth area.)

So what changed? Hackers got famous, and not for the best reasons. A big tipping point came in the early 1980s when hacking group The 414s broke into a number of high-profile computer systems, mostly by using the default password which had never been changed. The six teenagers responsible were arrested by the FBI but few were charged, and those that were were charged only with minor offences. This was at least in part because there weren’t yet solid laws under which to prosecute them but also because they were cooperative, apologetic, and for the most part hadn’t caused any real harm. Mostly they’d just been curious about what they could get access to, and were interested in exploring the systems to which they’d logged-in, and seeing how long they could remain there undetected. These remain common motivations for many hackers to this day.

News media though – after being excited by “hacker” ideas introduced by WarGames – rightly realised that a hacker with the same elementary resources as these teens but with malicious intent could cause significant real-world damage. Bruce Schneier argued last year that the danger of this may be higher today than ever before. The press ran news stories strongly associating the word “hacker” specifically with the focus on the illegal activities in which some hackers engage. The release of Neuromancer the following year, coupled with an increasing awareness of and organisation by hacker groups and a number of arrests on both sides of the Atlantic only fuelled things further. By the end of the decade it was essentially impossible for a layperson to see the word “hacker” in anything other than a negative light. Counter-arguments like The Conscience of a Hacker (Hacker’s Manifesto) didn’t reach remotely the same audiences: and even if they had, the points they made remain hard to sympathise with for those outside of hacker communities.

A lack of understanding about what hackers did and what motivated them made them seem mysterious and otherworldly. People came to make the same assumptions about hackers that they do about magicians – that their abilities are the result of being privy to tightly-guarded knowledge rather than years of practice – and this elevated them to a mythical level of threat. By the time that Kevin Mitnick was jailed in the mid-1990s, prosecutors were able to successfully persuade a judge that this “most dangerous hacker in the world” must be kept in solitary confinement and with no access to telephones to ensure that he couldn’t, for example, “start a nuclear war by whistling into a pay phone”. Yes, really.

The Future

Every decade’s hackers have debated whether or not the next decade’s have correctly interpreted their idea of “hacker ethics”. For me, Steven Levy’s tenets encompass them best:

- Access to computers – and anything which might teach you something about the way the world works – should be unlimited and total.

- All information should be free.

- Mistrust authority – promote decentralization.

- Hackers should be judged by their hacking, not bogus criteria such as degrees, age, race, or position.

- You can create art and beauty on a computer.

- Computers can change your life for the better.

Given these concepts as representative of hacker ethics, I’m convinced that hacking remains alive and well today. Hackers continue to be responsible for many of the coolest and most-important innovations in computing, and are likely to continue to do so. Unlike many other sciences, where progress over the ages has gradually pushed innovators away from backrooms and garages and into labs to take advantage of increasingly-precise generations of equipment, the tools of computer science are increasingly available to individuals. More than ever before, bedroom-based hackers are able to get started on their journey with nothing more than a basic laptop or desktop computer and a stack of freely-available open-source software and documentation. That progress may be threatened by the growth in popularity of easy-to-use (but highly locked-down) tablets and smartphones, but the barrier to entry is still low enough that most people can pass it, and the new generation of ultra-lightweight computers like the Raspberry Pi are doing their part to inspire the next generation of hackers, too.

That said, and as much as I personally love and identify with the term “hacker”, the hacker community has never been less in-need of this overarching label. The diverse variety of types of technologist nowadays coupled with the infiltration of pop culture by geek culture has inevitably diluted only to be replaced with a multitude of others each describing a narrow but understandable part of the hacker mindset. You can describe yourself today as a coder, gamer, maker, biohacker, upcycler, cracker, blogger, reverse-engineer, social engineer, unconferencer, or one of dozens of other terms that more-specifically ties you to your community. You’ll be understood and you’ll be elegantly sidestepping the implications of criminality associated with the word “hacker”.

The original meaning of “hacker” has also been soiled from within its community: its biggest and perhaps most-famous advocate‘s insistence upon linguistic prescriptivism came under fire just this year after he pushed for a dogmatic interpretation of the term “sexual assault” in spite of a victim’s experience. This seems to be absolutely representative of his general attitudes towards sex, consent, women, and appropriate professional relationships. Perhaps distancing ourselves from the old definition of the word “hacker” can go hand-in-hand with distancing ourselves from some of the toxicity in the field of computer science?

(I’m aware that I linked at the top of this blog post to the venerable but also-problematic Eric S. Raymond; if anybody can suggest an equivalent resource by another author I’d love to swap out the link.)

Verdict: The word “hacker” has become so broad in scope that we’ll never be able to rein it back in. It’s tainted by its associations with both criminality, on one side, and unpleasant individuals on the other, and it’s time to accept that the popular contemporary meaning has won. Let’s find new words to define ourselves, instead.

Evolving Computer Words: “GIF”

This is part of a series of posts on computer terminology whose popular meaning – determined by surveying my friends – has significantly diverged from its original/technical one. Read more evolving words…

The language we use is always changing, like how the word “cute” was originally a truncation of the word “acute”, which you’d use to describe somebody who was sharp-witted, as in “don’t get cute with me”. Nowadays, we use it when describing adorable things, like the subject of this GIF:

![[Animated GIF] Puppy flumps onto a human.](https://bcdn.danq.me/_q23u/2019/09/cute-puppy.gif)

GIF

What people think it means

File format (or the files themselves) designed for animations and transparency. Or: any animation without sound.

What it originally meant

File format designed for efficient colour images. Animation was secondary; transparency was an afterthought.

The Past

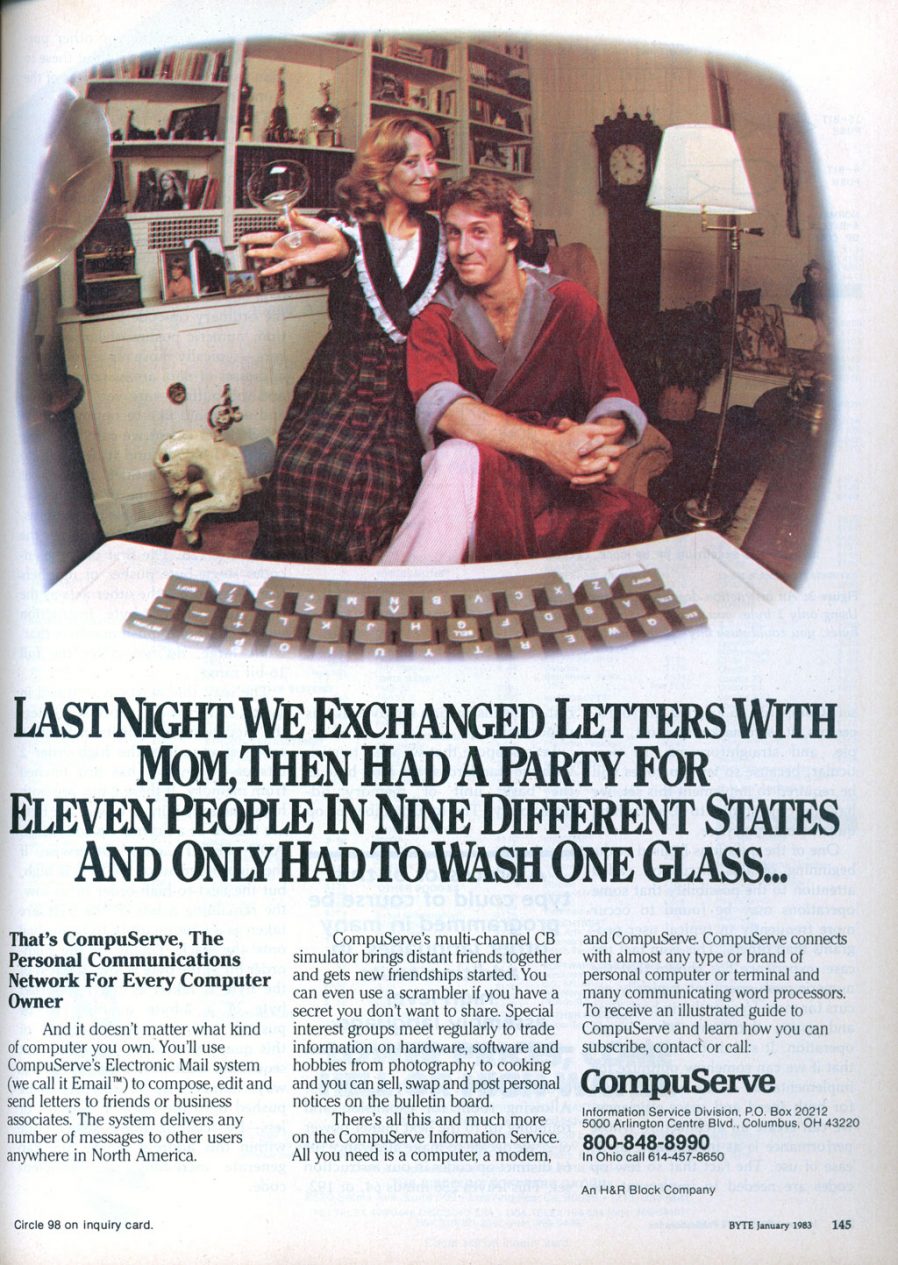

Back in the 1980s cyberspace was in its infancy. Sir Tim hadn’t yet dreamed up the Web, and the Internet wasn’t something that most people could connect to, and bulletin board systems (BBSes) – dial-up services, often local or regional, sometimes connected to one another in one of a variety of ways – dominated the scene. Larger services like CompuServe acted a little like huge BBSes but with dial-up nodes in multiple countries, helping to bridge the international gaps and provide a lower learning curve than the smaller boards (albeit for a hefty monthly fee in addition to the costs of the calls). These services would later go on to double as, and eventually become exclusively, Internet Service Providers, but for the time being they were a force unto themselves.

In 1987, CompuServe were about to start rolling out colour graphics as a new feature, but needed a new graphics format to support that. Their engineer Steve Wilhite had the idea for a bitmap image format backed by LZW compression and called it GIF, for Graphics Interchange Format. Each image could be composed of multiple frames each having up to 256 distinct colours (hence the common mistaken belief that a GIF can only have 256 colours). The nature of the palette system and compression algorithm made GIF a particularly efficient format for (still) images with solid contiguous blocks of colour, like logos and diagrams, but generally underperformed against cosine-transfer-based algorithms like JPEG/JFIF for images with gradients (like most photos).

GIF would go on to become most famous for two things, neither of which it was capable of upon its initial release: binary transparency (having “see through” bits, which made it an excellent choice for use on Web pages with background images or non-static background colours; these would become popular in the mid-1990s) and animation. Animation involves adding a series of frames which overlay one another in sequence: extensions to the format in 1989 allowed the creator to specify the duration of each frame, making the feature useful (prior to this, they would be displayed as fast as they could be downloaded and interpreted!). In 1995, Netscape added a custom extension to GIF to allow them to loop (either a specified number of times or indefinitely) and this proved so popular that virtually all other software followed suit, but it’s worth noting that “looping” GIFs have never been part of the official standard!

NETSCAPE2.0; evidence of

Netscape’s role in making animated GIFs what they are today.

Compatibility was an issue. For a period during the mid-nineties it was quite possible that among the visitors to your website there would be a mixture of:

- people who wouldn’t see your GIFs at all, owing to browser, bandwidth, preference, or accessibility limitations,

- people who would only see the first frame of your animated GIFs, because their browser didn’t support animation,

- people who would see your animation play once, because their browser didn’t support looping, and

- people who would see your GIFs as you intended, fully looping

This made it hard to depend upon GIFs without carefully considering their use. But people still did, and they just stuck a

![]() button on to warn people, as if that made up for

it. All of this has happened before, etc.

button on to warn people, as if that made up for

it. All of this has happened before, etc.

In any case: as better, newer standards like PNG came to dominate the Web’s need for lossless static (optionally

transparent) image transmission, the only thing GIFs remained good for was animation. Standards like APNG/MNG failed to get off the ground, and so GIFs remained the dominant animated-image standard. As Internet connections became faster and faster in the 2000s, they experienced a

resurgence in popularity. The Web didn’t yet have the <video> element and so embedding videos on pages required a mixture of at least two of

<object>, <embed>, Flash, and black magic… but animated GIFs just worked and

soon appeared everywhere.

The Future

Nowadays, when people talk about GIFs, they often don’t actually mean GIFs! If you see a GIF on Giphy or WhatsApp, you’re probably actually seeing an MPEG-4 video file with no audio track! Now that Web video is widely-supported, service providers know that they can save on bandwidth by delivering you actual videos even when you expect a GIF. More than ever before, GIF has become a byword for short, often-looping Internet animations without sound… even though that’s got little to do with the underlying file format that the name implies.

Verdict: We still can’t agree on whether to pronounce it with a soft-G (“jif”), as Wilhite intended, or with a hard-G, as any sane person would, but it seems that GIFs are here to stay in name even if not in form. And that’s okay. I guess.

Evolving Computer Words: “Broadband”

This is part of a series of posts on computer terminology whose popular meaning – determined by surveying my friends – has significantly diverged from its original/technical one. Read more evolving words…

Until the 17th century, to “fathom” something was to embrace it. Nowadays, it’s more likely to refer to your understanding of something in depth. The migration came via the similarly-named imperial unit of measurement, which was originally defined as the span of a man’s outstretched arms, so you can understand how we got from one to the other. But you know what I can’t fathom? Broadband.

Broadband Internet access has become almost ubiquitous over the last decade and a half, but ask people to define “broadband” and they have a very specific idea about what it means. It’s not the technical definition, and this re-invention of the word can cause problems.

Broadband

What people think it means

High-speed, always-on Internet access.

What it originally meant

Communications channel capable of multiple different traffic types simultaneously.

The Past

Throughout the 19th century, optical (semaphore) telegraph networks gave way to the new-fangled electrical telegraph, which not only worked regardless of the weather but resulted in

significantly faster transmission. “Faster” here means two distinct things: latency – how long it takes a message to reach its destination, and bandwidth – how much

information can be transmitted at once. If you’re having difficulty understanding the difference, consider this: a man on a horse might be faster than a telegraph if the size of the

message is big enough because a backpack full of scrolls has greater bandwidth than a Morse code pedal, but the latency of an electrical wire beats land transport

every time. Or as Andrew S. Tanenbaum famously put it: Never underestimate the bandwidth of a station wagon full of

tapes hurtling down the highway.

Telegraph companies were keen to be able to increase their bandwidth – that is, to get more messages on the wire – and this was achieved by multiplexing. The simplest approach, time-division multiplexing, involves messages (or parts of messages) “taking turns”, and doesn’t actually increase bandwidth at all: although it does improve the perception of speed by giving recipients the start of their messages early on. A variety of other multiplexing techniques were (and continue to be) explored, but the one that’s most-interesting to us right now was called acoustic telegraphy: today, we’d call it frequency-division multiplexing.

What if, asked folks-you’ll-have-heard-of like Thomas Edison and Alexander Graham Bell, we were to send telegraph messages down the line at different frequencies. Some beeps and bips would be high tones, and some would be low tones, and a machine at the receiving end could separate them out again (so long as you chose your frequencies carefully, to avoid harmonic distortion). As might be clear from the names I dropped earlier, this approach – sending sound down a telegraph wire – ultimately led to the invention of the telephone. Hurrah, I’m sure they all immediately called one another to say, our efforts to create a higher-bandwidth medium for telegrams has accidentally resulted in a lower-bandwidth (but more-convenient!) way for people to communicate. Job’s a good ‘un.

Most electronic communications systems that have ever existed have been narrowband: they’ve been capable of only a single kind of transmission at a time. Even if you’re multiplexing a dozen different frequencies to carry a dozen different telegraph messages at once, you’re still only transmitting telegraph messages. For the most part, that’s fine: we’re pretty clever and we can find workarounds when we need them. For example, when we started wanting to be able to send data to one another (because computers are cool now) over telephone wires (which are conveniently everywhere), we did so by teaching our computers to make sounds and understand one another’s sounds. If you’re old enough to have heard a fax machine call a landline or, better yet used a dial-up modem, you know what I’m talking about.

As the Internet became more and more critical to business and home life, and the limitations (of bandwidth and convenience) of dial-up access became increasingly questionable, a better solution was needed. Bringing broadband to Internet access was necessary, but the technologies involved weren’t revolutionary: they were just the result of the application of a little imagination.

We’d seen this kind of imagination before. Consider teletext, for example (for those of you too young to remember teletext, it was a standard for browsing pages of text and simple graphics using an 70s-90s analogue television), which is – strictly speaking – a broadband technology. Teletext works by embedding pages of digital data, encoded in an analogue stream, in the otherwise-“wasted” space in-between frames of broadcast video. When you told your television to show you a particular page, either by entering its three-digit number or by following one of four colour-coded hyperlinks, your television would wait until the page you were looking for came around again in the broadcast stream, decode it, and show it to you.

Teletext was, fundamentally, broadband. In addition to carrying television pictures and audio, the same radio wave was being used to transmit text: not pictures of text, but encoded characters. Analogue subtitles (which used basically the same technology): also broadband. Broadband doesn’t have to mean “Internet access”, and indeed for much of its history, it hasn’t.

Here in the UK, ISDN (from 1988!) and later ADSL would be the first widespread technologies to provide broadband data connections over the copper wires simultaneously used to carry telephone calls. ADSL does this in basically the same way as Edison and Bell’s acoustic telegraphy: a portion of the available frequencies (usually the first 4MHz) is reserved for telephone calls, followed by a no-mans-land band, followed by two frequency bands of different sizes (hence the asymmetry: the A in ADSL) for up- and downstream data. This, at last, allowed true “broadband Internet”.

But was it fast? Well, relative to dial-up, certainly… but the essential nature of broadband technologies is that they share the bandwidth with other services. A connection that doesn’t have to share will always have more bandwidth, all other things being equal! Leased lines, despite technically being a narrowband technology, necessarily outperform broadband connections having the same total bandwidth because they don’t have to share it with other services. And don’t forget that not all speed is created equal: satellite Internet access is a narrowband technology with excellent bandwidth… but sometimes-problematic latency issues!

Equating the word “broadband” with speed is based on a consumer-centric misunderstanding about what broadband is, because it’s necessarily true that if your home “broadband” weren’t configured to be able to support old-fashioned telephone calls, it’d be (a) (slightly) faster, and (b) not-broadband.

The Future

But does the word that people use to refer to their high-speed Internet connection matter. More than you’d think: various countries around the world have begun to make legal definitions of the word “broadband” based not on the technical meaning but on the populist one, and it’s becoming a source of friction. In the USA, the FCC variously defines broadband as having a minimum download speed of 10Mbps or 25Mbps, among other characteristics (they seem to use the former when protecting consumer rights and the latter when reporting on penetration, and you can read into that what you will). In the UK, Ofcom‘s regulations differentiate between “decent” (yes, that’s really the word they use) and “superfast” broadband at 10Mbps and 24Mbps download speeds, respectively, while the Scottish and Welsh governments as well as the EU say it must be 30Mbps to be “superfast broadband”.

I’m all in favour of regulation that protects consumers and makes it easier for them to compare products. It’s a little messy that definitions vary so widely on what different speeds mean, but that’s not the biggest problem. I don’t even mind that these agencies have all given themselves very little breathing room for the future: where do you go after “superfast”? Ultrafast (actually, that’s exactly where we go)? Megafast? Ludicrous speed?

What I mind is the redefining of a useful term to differentiate whether a connection is shared with other services or not to be tied to a completely independent characteristic of that connection. It’d have been simple for the FCC, for example, to have defined e.g. “full-speed broadband” as providing a particular bandwidth.

Verdict: It’s not a big deal; I should just chill out. I’m probably going to have to throw in the towel anyway on this one and join the masses in calling all high-speed Internet connections “broadband” and not using that word for all slower and non-Internet connections, regardless of how they’re set up.