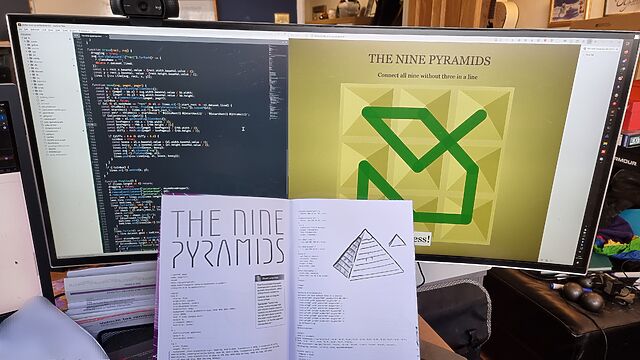

Would you rather I showed you than told you? Here’s a demo page.

Right now, my blog uses a CSS-based approach using anchors and the `<dialog>` element to make “lightbox” images work, but I plan to replace this soon with a similar approach that uses the `<details>` and `<summary>` elements to provide a more semantically-sound and accessible approach[1].
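As a rough illustration of the idea (not the code from the demo page or the repo), a `<details>`-based lightbox might look something like the sketch below. The `lightbox` class name and the image file names are made up for this example, and the styling is deliberately minimal.

```html
<!-- Hypothetical class name and file names, for illustration only. -->
<details class="lightbox">
  <summary>
    <img src="thumbnail.jpg" alt="An owl perched on a branch.">
  </summary>
  <img src="full-size.jpg" alt="An owl perched on a branch.">
</details>

<style>
  /* Hide the default disclosure triangle; the thumbnail itself is the toggle. */
  .lightbox > summary {
    list-style: none;
    cursor: zoom-in;
  }
  .lightbox > summary::-webkit-details-marker {
    display: none;
  }

  /* When open, show the full-size image over the whole viewport. */
  .lightbox[open] > img {
    position: fixed;
    inset: 0;
    z-index: 1;
    width: 100%;
    height: 100%;
    object-fit: contain;
    background: rgba(0, 0, 0, 0.85);
  }

  /* Stretch the summary over the top of the full-size image so that a click
     anywhere (or Enter/Space while it has focus) closes the lightbox. */
  .lightbox[open] > summary {
    position: fixed;
    inset: 0;
    z-index: 2;
    cursor: zoom-out;
  }

  /* The thumbnail stays in the summary but is hidden while the lightbox is open. */
  .lightbox[open] > summary > img {
    visibility: hidden;
  }
</style>
```

With the stylesheet removed, this degrades to a plain disclosure widget: the thumbnail is the toggle, and the full-size image appears beneath it when opened.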

An additional thing I wanted to implement – again, for the next version of my blog’s theme – was an “alt text viewer”. Mastodon has one, and it’s excellent[2]. Mastodon’s viewer requires JavaScript, but I was inspired when I saw James come up with a CSS-based version that used a re-styled checkbox.

But I wanted to do one better. Displaying alt text also seems like something that would semantically be best represented by a `<details>`/`<summary>` pair.

My first attempt tried to put the alt-text widget inside the `<summary>` of the original image, but that’s an accessibility no-no, so instead I wrapped both `<details>` blocks (the lightbox, and the alt-text revealer) inside a container and then repositioned the latter over the former.
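To make that structure concrete, here is a minimal sketch of two sibling `<details>` blocks in a shared container, with the alt-text revealer positioned over the image. This is an assumption-laden illustration rather than the code from the repo: the class names (`image-with-alt`, `lightbox`, `alt-text`), the file names, and the exact offsets are all hypothetical.

```html
<!-- Hypothetical class names and file names, for illustration only. -->
<div class="image-with-alt">
  <!-- The lightbox: the thumbnail is the toggle for the full-size image. -->
  <details class="lightbox">
    <summary>
      <img src="thumbnail.jpg" alt="An owl perched on a branch.">
    </summary>
    <img src="full-size.jpg" alt="An owl perched on a branch.">
  </details>

  <!-- The alt-text revealer: a sibling of the lightbox, not nested in its summary. -->
  <details class="alt-text">
    <summary>ALT</summary>
    <p>An owl perched on a branch.</p>
  </details>
</div>

<style>
  /* The container is the positioning context and shrinks to fit the thumbnail. */
  .image-with-alt {
    position: relative;
    display: inline-block;
  }

  /* Lift the revealer out of the flow and place it over the image's corner. */
  .alt-text {
    position: absolute;
    left: 0.5rem;
    bottom: 0.5rem;
    max-width: calc(100% - 1rem);
    padding: 0.25rem 0.5rem;
    border-radius: 0.25rem;
    background: rgba(0, 0, 0, 0.8);
    color: #fff;
  }

  /* Style the summary as a small "ALT" badge with no disclosure triangle. */
  .alt-text > summary {
    list-style: none;
    cursor: pointer;
    font-weight: bold;
  }
  .alt-text > summary::-webkit-details-marker {
    display: none;
  }
</style>
```

Because the two `<details>` elements are siblings, each keeps its own `<summary>` as a separate focusable toggle, and with CSS unavailable they simply appear one after the other.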

The rest is all the same kinds of tricks I demonstrated previously, to ensure that you can click in-and-out of both in an intuitive way and that keyboard navigation works as you’d expect.

I can’t use it on my blog yet (because if I do, it’ll probably break horribly when I add the functionality to my entire theme, later!), but I’ve put together a demonstration page that showcases the technique, plus a GitHub repo with all of the code (which is all public domain/unlicensed). Go have a play and tell me what you think!

Footnotes

1 As a secondary goal, using `<details>`/`<summary>` means that it’ll behave better when CSS is disabled or unavailable, which’ll make it easier to celebrate CSS Naked Day!

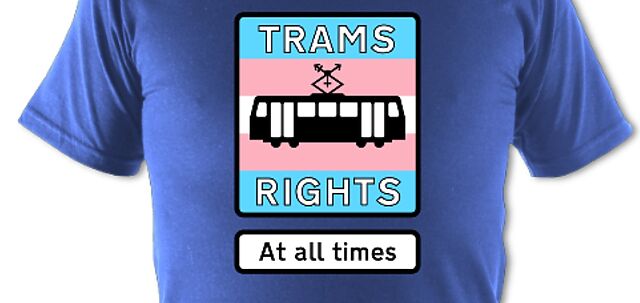

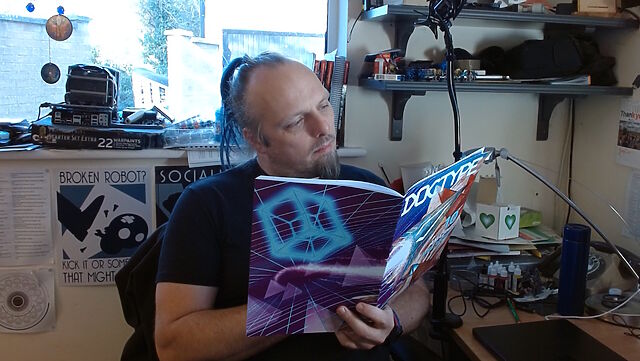

2 Why would I, a sighted person, need an alt text viewer, you ask? All kinds of reasons. Good alt text is for everybody, and can help by providing context, e.g. “explaining” the joke or identifying the probably-well-known-but-I-didn’t-recognise-them subject of a photo. Here are some more reasons.

3 If you love owls and you love accessibility, this is the kind of example you should give a hoot about.