Perhaps inspired by my resharing of Thomas’s thoughts about the biggest problem in AI (tl;dr: he thinks it’s nomenclature; I agree that’s a problem but I don’t know if it’s the biggest issue), Ruth posted some thoughts to LinkedIn that I think are quite well-put:

I was going to write about something else but since LinkedIn suggested I should get AI to do it for me, here’s where I currently stand on GenAI.

As a person working in computing, I view it as a tool that is being treated as a silver bullet and is probably self-limiting in its current form. By design, it produces average code. Most companies prior to having access to cheap average code would have said they wanted good code. Since the average code produced by the tools is being fed back into those tools, mathematically this can’t lead anywhere good in terms of quality.

However, as a manager in tech I’m really alarmed by it. If we have tools to write code that is ok but needs a lot of double checking, we might be tempted to stop hiring people at that level. There already aren’t enough jobs for entry level programmers to feed the talent pipeline, and this is likely to make it worse. I’m not sure where the next generation of great programmers are supposed to come from if we move to an ecosystem where the junior roles are replaced by Copilot.

I think there’s a lot of potential for targeted tools to speed up productivity. I just don’t think GenAI is where they should come from.

This is an excellent explanation of no fewer than four of the big problems with “AI” as we’re seeing it marketed today:

- It produces mediocre output, (more on that below!)

- It’s a snake that eats its own tail,

- It’s treated as a silver bullet, and

- By pricing out certain types of low-tier knowledge work, it damages the pipeline for training the higher tiers of those knowledge workers (e.g. if we outsource all first-level tech support to chatbots, where will the next generation of third-level tech support come from, if they can’t work their way up the ranks, learning as they go?)

Let’s stop and take a deeper look at the “mediocre output” claim. Ruth’s right, but if you don’t already understand why generative AI does this, it’s worth taking a moment to consider the reason for it… and the consequences of it:

Mathematically-speaking, that’s exactly what you would expect for something that is literally statistically averaging content, but that still comes as a surprise to people.

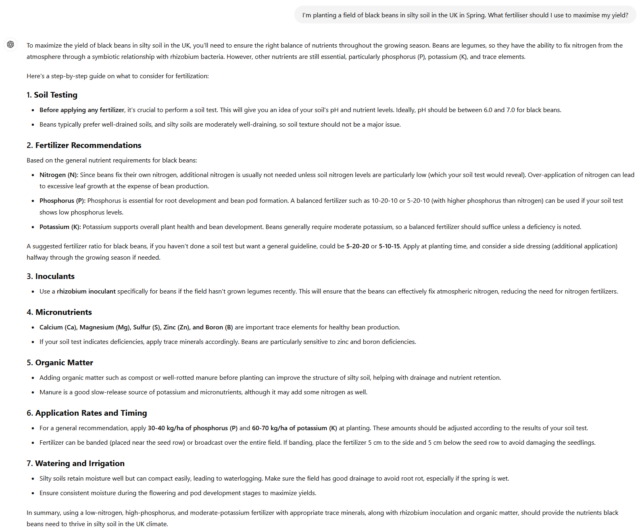

Bear in mind, of course, that there are plenty of topics in which the average person is less knowledgeable than the average of the content that was made available to the model. For example, I know next to nothing about fertiliser application in large-scale agriculture. ChatGPT has doubtless ingested a lot of literature about it, and if I ask it what fertiliser I should use for a field of black beans in silty soil in the UK, it delivers me a confident-sounding answer:

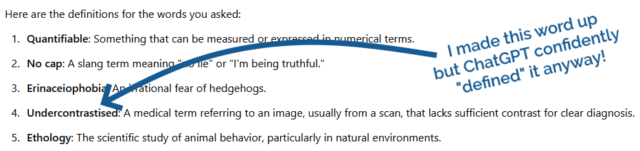

When LLMs produce exceptional output (I use the term exceptional in the sense of unusual and not-average, not to mean “good”), it appears more-creative and interesting but is even more-likely to be riddled with fanciful hallucinations.

There’s a fine line in getting the creativity dial set just right, and even when you do there’s no guarantee of accuracy, but the way in which many chatbots are told to talk makes them sound authoritative on basically every subject. When you know it’s lying, that’s easy. But people don’t always use LLMs for subjects they’re knowledgeable about!
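If you’re wondering what that “creativity dial” looks like in practice: in most LLM tools it’s a sampling setting usually called temperature. Here’s a minimal sketch of the idea, not how any particular chatbot actually implements it. The function name, the three candidate words, and their scores are all made up for illustration; a real model scores tens of thousands of candidate tokens at every step.

```python
import math

def next_word_probabilities(scores, temperature):
    """Convert raw scores into probabilities, scaled by a temperature.

    Hypothetical helper for illustration only: a softmax over
    score / temperature, which is the usual textbook formulation.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {word: s / temperature for word, s in scores.items()}
    biggest = max(scaled.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - biggest) for word, s in scaled.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}

# Invented scores for the word following "The best fertiliser is ..."
scores = {"nitrogen": 3.0, "compost": 2.0, "custard": 0.0}

cautious = next_word_probabilities(scores, temperature=0.5)
adventurous = next_word_probabilities(scores, temperature=2.0)

for label, probs in (("cautious", cautious), ("adventurous", adventurous)):
    print(label, {word: round(p, 3) for word, p in probs.items()})
```

Turn the dial down and nearly all of the probability piles onto the most-expected word; turn it up and the unlikely options (custard!) get a real chance of being picked. Note that neither setting involves checking whether the answer is true.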

In my example above, a more-useful robot would have stated that it didn’t know the answer to the question rather than, y’know, lying. But the nature of the statistical models used by LLMs means that they can’t know what they don’t know: they don’t have a “known unknowns” space.

Regarding the “damages the training pipeline” point: I’m undecided on whether or not I agree with Ruth. She might be on to something there, but I’m not sure. Needs more thought before I commit to an opinion on that one.

Oh, and an addendum to this – as a human, I find the proliferation of AI tools in spaces that are all about creating connections with other humans deeply concerning. I saw a lot of job applications through Otta at my previous role, and they were all kind of the same – I had no sense of the person behind the averaged out CV I was looking at. We already have a huge problem with people presenting inauthentic versions of themselves on social media which makes it harder to have genuine interactions, smoothing off the rough edges of real people to get something glossy and processed is only going to make this worse.

AI posts on social media are the chicken nuggets of human interaction and I’d rather have something real every time.

Emphasis mine… because that’s a fantastic metaphor. Content generated by a generative AI that’s trying to “look human” is so-often bland, flat, and unexciting: a mass-produced most-basic form of social sustenance. So yeah: chicken nuggets.

Those nuggets look delicious. Now I’m hungry. And I guess logically that means I like Instagram now? Thanks a lot 😠