A recent observation by Phil Gyford reminded me of a recurring thought I’ve had. He wrote:

While being driven around England it struck me that humans are currently like the filling in a sandwich between one slice of machine — the satnav — and another — the car. Before the invention of sandwiches the vehicle was simply a slice of machine with a human topping. But now it’s a sandwich, and the two machine slices are slowly squeezing out the human filling and will eventually be stuck directly together with nothing but a thin layer of API butter. Then the human will be a superfluous thing, perhaps a little gherkin on the side of the plate.

While we were driving I was reading the directions from a mapping app on my phone, with the sound off, checking the upcoming turns, and giving verbal directions to Mary, the driver. I was an extra layer of human garnish — perhaps some chutney or a sliced tomato — between the satnav slice and the driver filling.

What Phil’s describing is probably familiar to you: the experience of one or more humans acting as the go-between to allow two machines to communicate. If you’ve ever re-typed a document that was visible on another screen, read somebody a password over the phone, given directions from a digital map, used a pendrive to carry files between computers that weren’t talking to one another properly then you’ve done it: you’ve been the soft wet meaty middleware that bridged two already semi-automated (but not quite automated enough) systems.

This generally happens because of the lack of a common API (a communications protocol) between two systems. If your phone and your car could just talk it out then the car would know where to go all by itself! Or, until we get self-driving cars, it could at least provide the directions in a way that was appropriately-accessible to the driver: heads-up display, context-relative directions, or whatever.

It also sometimes happens when the computer-to-human interface isn’t good enough; for example I’ve often offered to navigate for a driver (and used my phone for the purpose) because I can add a layer of common sense. There’s no need for me to tell my buddy to take the second exit from every roundabout in Milton Keynes (did you know that the town has 930 of them?) – I can just tell them that I’ll let them know when they have to change road and trust that they’ll just keep going straight ahead until then.

Finally, we also sometimes find ourselves acting as a go-between to filter and improve information flow when the computers don’t have enough information to do better by themselves. I’ll use the fact that I can see the road conditions and the lane markings and the proposed route ahead to tell a driver to get into the right lane with an appropriate amount of warning. Or if the driver says “I can see signs to our destination now, I’ll just keep following them,” I can shut up unless something goes awry. Your in-car SatNav can’t do that because it can’t see and interpret the road ahead of you… at least not yet!

But here’s my thought: claims of an upcoming AI winter aside, it feels to me like we’re making faster progress in technologies related to human-computer interaction – voice and natural languages interfaces, popularised by virtual assistants like Siri and Alexa and by chatbots – than we are in technologies related to universal computer interoperability. Voice-controller computers are hip and exciting and attract a lot of investment but interoperable systems are hampered by two major things. The first thing holding back interoperability is business interests: for the longest while, for example, you couldn’t use Amazon Prime Video on a Google Chromecast for a long while because the two companies couldn’t play nice. The second thing is a lack of interest by manufacturers in developing open standards: every smart home appliance manufacturer wants you to use their app, and so your smart speaker manufacturer needs to implement code to talk to each and every one of them, and when they stop supporting one… well, suddenly your thermostat switches jumps permanently from smart mode to dumb mode.

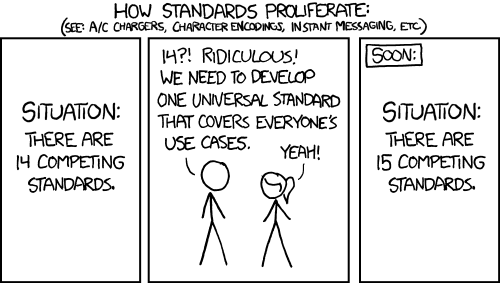

A thing that annoys me is that from a technical perspective making an open standard should be a much easier task than making an AI that can understand what a human is asking for or drive a car safely or whatever we’re using them for this week. That’s not to say that technical standards aren’t difficult to get right – they absolutely are! – but we’ve been practising doing it for many, many decades! The very existence of the Internet over which you’ve been delivered this article is proof that computer interoperability is a solvable problem. For anybody who thinks that the interoperability brought about by the Internet was inevitable or didn’t take lots of hard work, I direct you to Darius Kazemi’s re-reading of the early standards discussions, which I first plugged a year ago; but the important thing is that people were working on it. That’s something we’re not really seeing in the Internet of Things space.

Everybody else: “…?”

On our current trajectory, it’s absolutely possible that our virtual assistants will reach a point of becoming perfectly “human” communicators long before we can reach agreements about how they should communicate with one another. If that’s the case, those virtual assistants will probably fall back on using English-language voice communication as their lingua franca. In that case, it’s not unbelievable that ten to twenty years from now, the following series of events might occur:

- You want to go to your friends’ house, so you say out loud “Alexa, drive me to Bob’s house in five minutes.” Alexa responds “I’m on it; I’ll let you know more in a few minutes.”

- Alexa doesn’t know where Bob’s house is, but it knows it can get it from your netbook. It opens a voice channel over your wireless network (so you don’t have to “hear” it) and says “Hey Google, it’s Alexa [and here’s my credentials]; can you give me the address that [your name] means when they say ‘Bob’s house’?” And your netbook responds by reading out the address details, which Alexa then understands.

- Alexa doesn’t know where your self-driving car is right now and whether anybody’s using it, but it has a voice control system and a cellular network connection, so Alexa phones up your car and says: “Hey SmartCar, it’s Alexa [and here’s my credentials]; where are you and when were you last used?”. The car replies “I’m on the driveway, I’m fully-charged, and I was last used three hours ago by [your name].” So Alexa says “Okay, boot up, turn on climate control, and prepare to make a journey to [Bob’s address].” In this future world, most voice communication over telephones is done by robots: your virtual assistant calls your doctor’s virtual assistant to make you an appointment, and you and your doctor just get events in your calendars, for example, because nobody manages to come up with a universal API for medical appointments.

- Alexa responds “Okay, your SmartCar is ready to take you to Bob’s house.” And you have no idea about the conversations that your robots have been having behind your back

I’m not saying that this is a desirable state of affairs. I’m not even convinced that it’s likely. But it’s certainly possible if IoT development keeps focussing on shiny friendly conversational interfaces at the expense of practical, powerful technical standards. Our already topsy-turvy technologies might get weirder before they get saner.

But if English does become the “universal API” for robot-to-robot communication, despite all engineering common sense, I suggest that we call it “sandwichware”.

0 comments