This post is part of my attempt at Bloganuary 2024. Today’s prompt is:

List five things you do for fun.

This feels disappointingly like the prompt from day 2, but I’m gonna pivot it by letting my answer from three weeks ago only cover one of the five points:

- Code

- Magic

- Piano

- Play

- Learn

Let’s take a look at each of those, briefly.

Code

Code is poetry. Code is fun. Code is a many-splendoured thing.

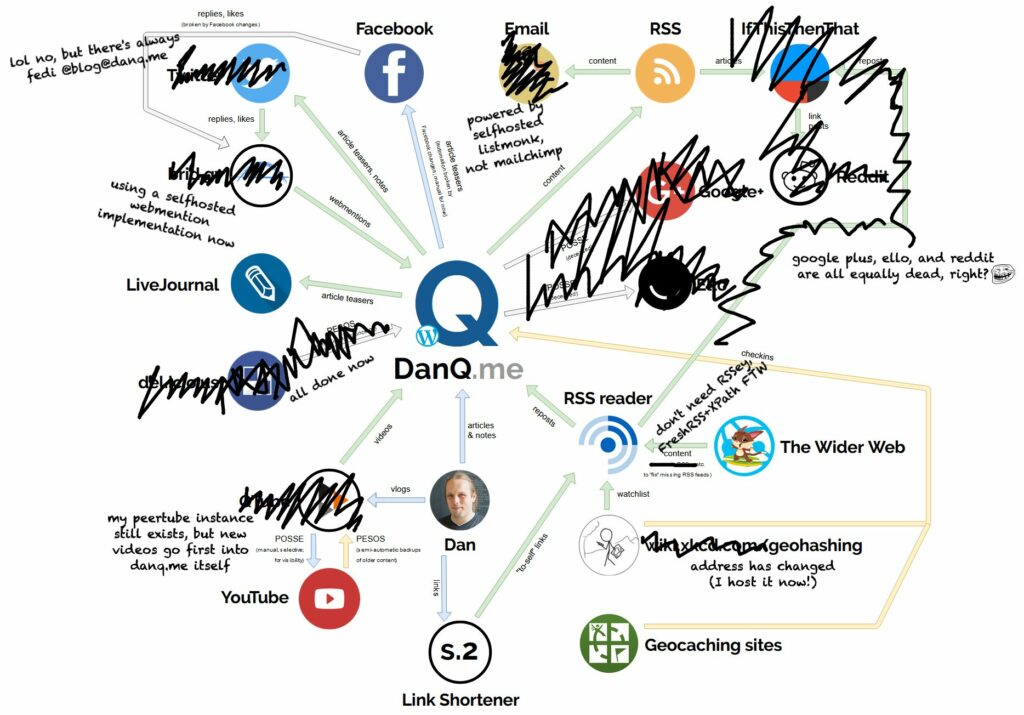

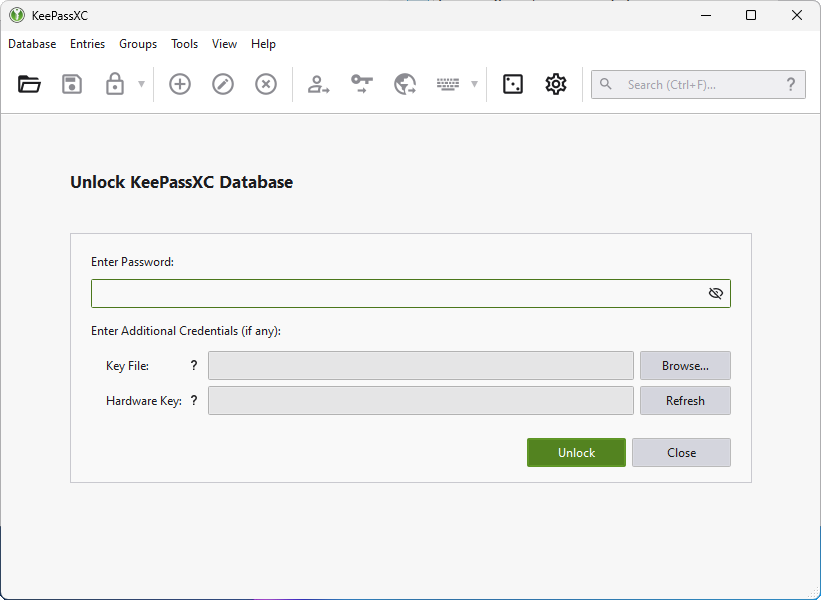

When I’m not coding for work or coding as a volunteer, I’m often caught coding for fun. Sometimes I write WordPress-ey things. Sometimes I write other random things. I tend to open-source almost everything I write, most of it via my GitHub account.

Magic

Now that I don’t work in the city centre or have easy access to other magicians, I don’t perform as much magic as I used to. But I still try to keep my hand in and occasionally try new things; I enjoy practicing sleights when I’m doing work-related things that don’t require my hands (meetings, code reviews, waiting for the damn unit tests to run…), a tip I learned from fellow magician Andy.

You’ll usually find a few decks of cards on my desk at any given time, mostly Bikes.1

Piano

I started teaching myself piano during the Covid lockdowns as a distraction from not being able to go anywhere (apparently I’m not the only one), and as an effort to do more of what I’m bad at.2 Since then, I’ve folded about ten minutes of piano-playing3, give or take, into my routine virtually every day.

I fully expect that I’ll never be as accomplished at it as, say, the average 8-year-old on YouTube, but that’s not what it’s about. If I take a break from programming, or meetings, or childcare, or anything, I can feel that playing music exercises a totally different part of my mind. I’d heard musicians talk about such an experience before, but I’d assumed that it was hyperbole… but from my perspective, they’re right: practicing an instrument genuinely does feel like using a different part of your brain than you use for anything else, which I love!

Play

I wrote a whole other Bloganuary post on the ways in which I integrate “play” into my life, so I’ll point you at that rather than rehash anything.

At the weekend I dusted off Vox Populi, my favourite mod for Civilization V, my favourite4 entry in the Civilization series, which in turn is one of my favourite video game series5. I don’t get as much time for videogaming as I might like, but that’s probably for the best because a couple of hours disappeared on Sunday evening before I even blinked! It’s addictive stuff.

Learn

As I mentioned back on day 3 of Bloganuary, I’m a lifelong learner. But even when I’m not learning in an academic setting, I’m doubtless learning something. I tend to alternate between fiction and non-fiction books on my bedside table. I often get lost on deep-dives through the depths of the Web after a Wikipedia article makes me ask “wait, really?” And just sometimes, I set out to learn some kind of new skill.

In short: with such a variety of fun things lined up, I rarely get the opportunity to be bored6!

Footnotes

1 I like the feel of Bicycle cards and the way they fan. Plus: the white border – which is actually a security measure on playing cards designed to make bottom-dealing more-obvious and thus make it harder for people to cheat at e.g. poker – can actually be turned to work for the magician when doing certain sleights, including one seen in the mini-video above…

2 I’m not strictly bad at it, it’s just that I had essentially no music tuition or instrument experience whatsoever – I didn’t even have a recorder at primary school! – and so I was starting at square zero.

3 Occasionally I’ll learn a bit of a piece of music, but mostly I’m trying to improve my ability to improvise because that scratches an itch in a part of my brain in a way that I find most-interesting!

4 Games in the series I’ve extensively played include: Civilization, CivNet, Civilization II (also Test of Time), Alpha Centauri (a game so good I paid for it three times, despite having previously pirated it), Civilization III, Civilization IV, Civilization V, Beyond Earth (such a disappointment compared to SMAC) and Civilization VI, plus all their expansions except for the very latest one for VI. Also spinoffs/clones FreeCiv, C-Evo, and both Call to Power games. Oh, and at least two of the board games. And that’s just the ones I’ve played enough to talk in detail about: I’m not including things like Revolution which I played an hour of and hated so much I shan’t touch it again, nor either version of Colonization which I’m treating separately…

5 Way back in 2007 I identified Civilization as the top of the top 10 videogames that stole my life, and frankly that’s still true.

6 At least, not since the kids grew out of Paw Patrol so I don’t have to sit with them and watch it any more!