The Old Way

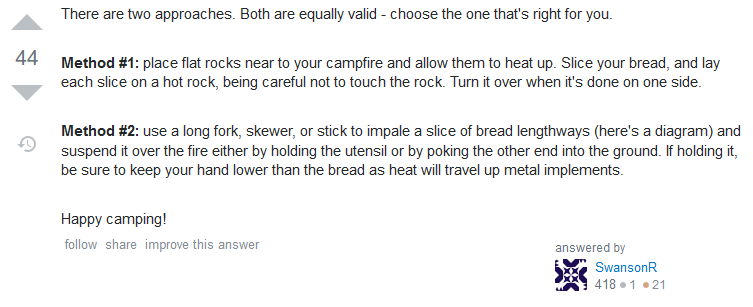

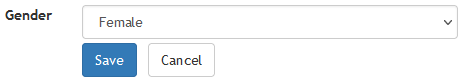

Prior to 2018, Three Rings had a relatively simple approach to how it would use pronouns when referring to volunteers.

If the volunteer’s gender was specified as a “masculine” gender (which particular options are available depends on the volunteer’s organisation, but might include “male”, “man”, “cis man”, and “trans man”), the system would use traditional masculine pronouns like “he”, “his”, “him” etc.

If the gender was specified as a “feminine” gender (e.g. “female”, “woman”, “cis woman”, “trans woman”) the system would use traditional feminine pronouns like “she”, “hers”, “her” etc.

For any other answer, no specified answer, or an organisation that doesn’t track gender, we’d use singular “they” pronouns. Simple!
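As a rough illustration of that three-way rule, here is a minimal Ruby sketch. The constant names, the method name `legacy_pronoun_set` and the exact gender lists are assumptions made for the example (the real options vary by organisation), not Three Rings' actual code.

```ruby
# Hypothetical gender lists: the real options are configured per organisation.
MASCULINE_GENDERS = ["male", "man", "cis man", "trans man"].freeze
FEMININE_GENDERS  = ["female", "woman", "cis woman", "trans woman"].freeze

# Returns :masculine, :feminine or :epicene for a volunteer's gender value.
# nil models "no specified answer" or an organisation that doesn't track gender.
def legacy_pronoun_set(gender)
  normalised = gender.to_s.strip.downcase
  return :masculine if MASCULINE_GENDERS.include?(normalised)
  return :feminine  if FEMININE_GENDERS.include?(normalised)

  :epicene # singular "they" for everything else
end
```

Note how every answer outside the two lists collapses into the same default, which is exactly the limitation described further down.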

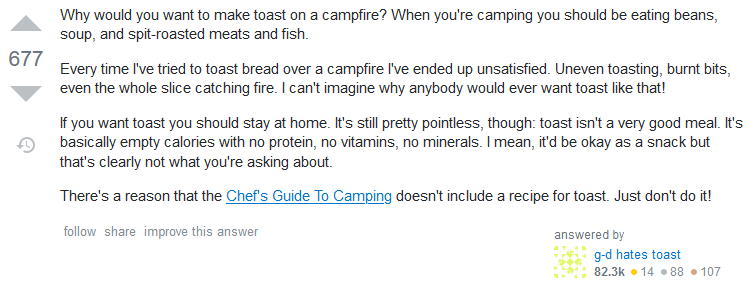

This selection was reflected throughout the system. Three Rings might say:

- They have done 7 shifts by themselves.

- She verified her email address was hers.

- Would you like to sign him up to this shift?

Unfortunately, this approach didn’t reflect the diversity of personal pronouns nor how they’re applied. It didn’t support volunteers whose gender and pronouns are not conventionally connected (“I am a woman and I use ‘they/them’ pronouns”), nor did it respect volunteers whose pronouns are not in one of these three sets (“I use ze/zir pronouns”)… a position it took me an embarrassingly long time to fully comprehend.

So we took a new approach:

The New Way

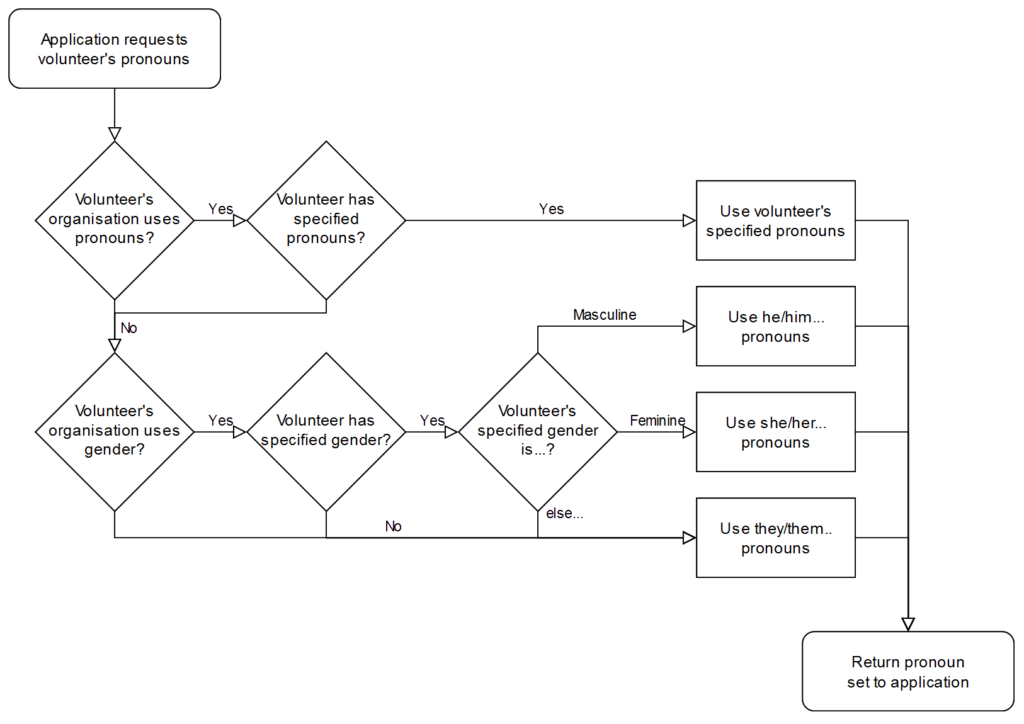

From 2018 we allowed organisations to add a “Pronouns” property, letting volunteers select from 13 different pronoun sets. If they did so, we’d use it; failing that, we’d continue to infer pronouns from gender if it was available, or else use the singular “they”.
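The order of precedence is the important part: an explicit choice wins, then the gender-based guess, then singular “they”. A small Ruby sketch of that fallback chain follows; the `Volunteer` struct, `effective_pronouns` and the two-entry gender table are all hypothetical stand-ins for illustration.

```ruby
# Hypothetical volunteer record; either field may be nil.
Volunteer = Struct.new(:name, :pronouns, :gender, keyword_init: true)

DEFAULT_PRONOUNS = "they/them/their/theirs/themselves"

# Deliberately tiny, assumed mapping: just enough to show the middle step.
GENDER_DEFAULTS = {
  "male"   => "he/him/his/his/himself",
  "female" => "she/her/her/hers/herself"
}.freeze

def effective_pronouns(volunteer)
  chosen = volunteer.pronouns.to_s.strip
  return chosen unless chosen.empty? # 1. an explicit choice always wins

  # 2. otherwise guess from gender, 3. otherwise fall back to singular "they"
  GENDER_DEFAULTS.fetch(volunteer.gender.to_s.strip.downcase, DEFAULT_PRONOUNS)
end
```

With this ordering, a volunteer recorded as “male” who selects xe/xem pronouns is always referred to as “xe”.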

Let’s take a quick linguistics break

Three Rings’ pronoun field always shows five personal pronouns, separated by slashes, because you can’t necessarily derive one from another. That’s one for each of five types:

- the subject, used when the person you’re talking about is the primary argument to a verb (“he called”),

- the object, for when the person you’re talking about is the secondary argument to a transitive verb (“he called her”),

- the dependent possessive, for talking about a noun that belongs to a person (“this is their shift”),

- the independent possessive, for talking about something that belongs to a person, potentially without an explicit noun (“this is theirs”), and

- the reflexive (and intensive), two types which are generally the same in English, used mostly in Three Rings when a person is both the subject and the object of a verb (“she signed herself up to a shift”).

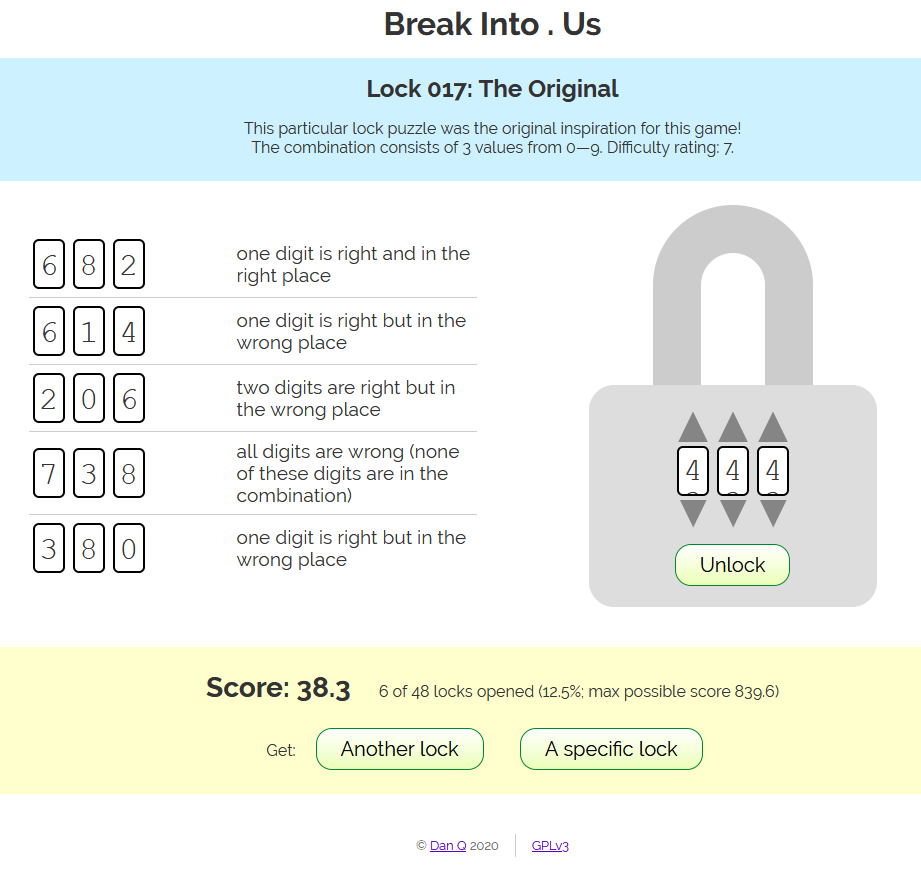

Let’s see what those look like – here are the 13 pronoun sets supported by Three Rings at the time of writing:

| Subject | Object | Dependent possessive | Independent possessive | Reflexive/intensive |
| --- | --- | --- | --- | --- |
| he | him | his | his | himself |
| she | her | her | hers | herself |
| they | them | their | theirs | themselves |
| e | em | eir | eirs | emself |
| ey | em | eir | eirs | eirself |
| hou | hee | hy | hine | hyself |
| hu | hum | hus | hus | humself |
| ne | nem | nir | nirs | nemself |
| per | per | per | pers | perself |
| thon | thon | thons | thons | thonself |
| ve | ver | vis | vis | verself |
| xe | xem | xyr | xyrs | xemself |
| ze | zir | zir | zirs | zemself |

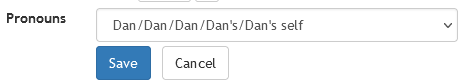

That’s all data-driven rather than hard-coded, by the way, so adding additional pronoun sets is very easy for our developers. In fact, it’s even possible for us to apply an additional “override” on an individual, case-by-case basis: all we need to do is specify the five requisite personal pronouns, separated by slashes, and Three Rings understands how to use them.
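To make the “five pronouns, separated by slashes” format concrete, here is a minimal Ruby sketch of how such a string could be parsed and validated. `PronounSet` and `parse_pronoun_set` are names invented for this example; how Three Rings actually stores and reads the data is not shown here.

```ruby
# Hypothetical value object: one field per pronoun type.
PronounSet = Struct.new(:subject, :object, :dependent_possessive,
                        :independent_possessive, :reflexive)

# Parses e.g. "ze/zir/zir/zirs/zemself" into a PronounSet.
# Raises ArgumentError unless exactly five non-empty parts are given.
def parse_pronoun_set(text)
  parts = text.to_s.split("/").map { |part| part.strip.downcase }
  unless parts.length == 5 && parts.none?(&:empty?)
    raise ArgumentError,
          "expected five pronouns separated by slashes, got #{text.inspect}"
  end

  PronounSet.new(*parts)
end
```

Insisting on all five parts means a shorthand such as “she/they” is rejected rather than guessed at, which matters for the customer request discussed later.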

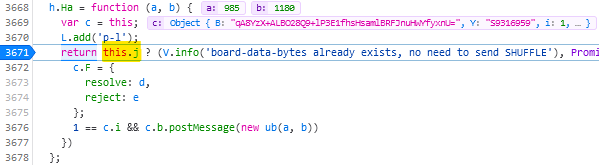

Writing code that respects pronouns

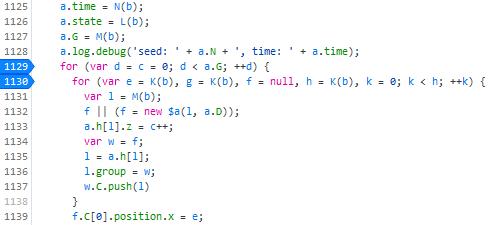

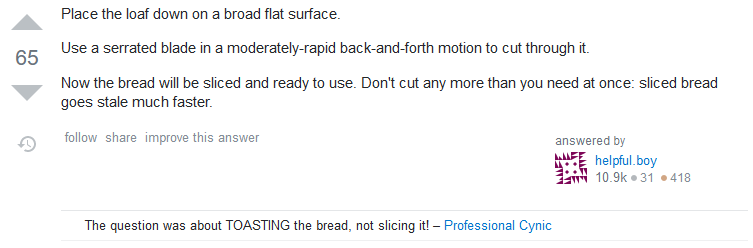

Behind the scenes, the developers use a (binary-gendered, for simplicity) convenience function to produce output, and the system corrects for the pronouns appropriate to the volunteer in question:

<%= @volunteer.his_her.capitalize %> account has been created for <%= @volunteer.him_her %> so <%= @volunteer.he_she %> can now log in.

The code above will, depending on the pronouns specified for the volunteer @volunteer, output something like:

- His account has been created for him so he can now log in.

- Her account has been created for her so she can now log in.

- Their account has been created for them so they can now log in.

- Eir account has been created for em so ey can now log in.

- Etc.
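For the curious, helpers like these could be sketched in plain Ruby as below. Only `he_she`, `him_her` and `his_her` appear in the template above; the class layout, the constructor, `his_hers`, `himself_herself` and `account_created_message` are assumptions made for this illustration.

```ruby
# Hypothetical volunteer whose pronouns arrive as a five-part string.
class Volunteer
  attr_reader :name

  def initialize(name, pronouns)
    @name = name
    @subject, @object, @dependent, @independent, @reflexive = pronouns.split("/")
  end

  # Binary-gendered names for the developer's convenience; the return value
  # is whatever the volunteer's own pronoun set says.
  def he_she
    @subject
  end

  def him_her
    @object
  end

  def his_her
    @dependent
  end

  def his_hers # assumed name
    @independent
  end

  def himself_herself # assumed name
    @reflexive
  end
end

# Plain-Ruby equivalent of the ERB template shown earlier.
def account_created_message(volunteer)
  "#{volunteer.his_her.capitalize} account has been created for " \
    "#{volunteer.him_her} so #{volunteer.he_she} can now log in."
end
```

The developer writes the sentence once, in a familiar shape, and the data decides which words come out.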

We’ve got extended functions to automatically detect cases where the use of second person pronouns might be required (“Your account has been created for you so you can now log in.”) as well as to help us handle the fact that we say “they are” but “he/she/ey/ze/etc. is”.
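A rough sketch of those two extras in Ruby might look like the following. The `Person` struct and `subject_with_be` are hypothetical, and comparing ids is just one assumed way of detecting that the reader is the person being described.

```ruby
# Hypothetical minimal person: an id plus a subject pronoun.
Person = Struct.new(:id, :subject_pronoun)

# Returns the subject plus the matching form of "to be".
# viewer is whoever is reading the page.
def subject_with_be(person, viewer)
  # Talking to the person about themselves: switch to the second person.
  return "you are" if person.id == viewer.id

  # Singular "they" still takes the plural verb form; other sets take "is".
  verb = person.subject_pronoun == "they" ? "are" : "is"
  "#{person.subject_pronoun} #{verb}"
end
```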

It’s all pretty magical and “just works” from a developer’s perspective. I’m sure most of our volunteer developers don’t think about the impact of pronouns at all when they code; they just get on with it.

Is that a complete solution?

Does this go far enough? Possibly not. This week, one of our customers contacted us to ask:

Is there any way to give the option to input your own pronouns? I ask as some people go by she/them or he/them and this option is not included…

You can probably see what’s happened here: some organisations have taken our pronouns property – which exists primarily to teach the system itself how to talk about volunteers – and are using it to facilitate their volunteers telling one another what their pronouns are.

What’s the difference? Well:

When a human discloses that their pronouns are “she/they” to another human, they’re saying “You can refer to me using either traditional feminine pronouns (she/her/hers etc.) or the epicene singular ‘they’ (they/their/theirs etc.)”.

But if you told Three Rings your pronouns were “she/her/their/theirs/themselves”, it would end up using a mixture of the two, even in the same sentence! Consider:

- She has done 7 shifts by themselves.

- She verified their email address was theirs.

That’s some pretty clunky English right there! Mixing pronoun sets for the same person within a sentence is especially ugly, but even mixing them within the same page can cause confusion. We can’t trivially meet this customer’s request simply by adding new pronoun sets which mix things up a bit! We need to get smarter.

A Newer Way?

Ultimately, we’re probably going to need to differentiate between a more-rigid “what pronouns should Three Rings use when talking about you?” and a more-flexible, perhaps optional “what pronouns should other humans use for you?”. Alternatively, maybe we could allow people to select multiple pronoun sets to display but Three Rings would only use one of them (at least, one of them at a time!): “which of the following sets of pronouns do you use: select as many as apply”.
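The second idea could be sketched along these lines. None of this exists yet: `Profile`, `system_pronoun_set` and `displayed_pronouns` are invented for the example, and using the first selected set for system text is just one possible policy.

```ruby
DEFAULT_SET = "they/them/their/theirs/themselves"

# Hypothetical profile: pronoun_sets is an ordered list of five-part strings.
Profile = Struct.new(:name, :pronoun_sets)

# The system writes with exactly one set: here, the first one selected.
def system_pronoun_set(profile)
  profile.pronoun_sets.first || DEFAULT_SET
end

# Other humans see a short label built from every selected set.
def displayed_pronouns(profile)
  sets = profile.pronoun_sets.map { |set| set.split("/") }
  sets = [DEFAULT_SET.split("/")] if sets.empty?
  # A single set is shown as subject/object, e.g. "ze/zir".
  return sets.first.first(2).join("/") if sets.length == 1

  # Several sets are shown by their subject pronouns, e.g. "she/they".
  sets.map(&:first).join("/")
end
```

A volunteer who ticks both the “she” and “they” sets would be labelled “she/they” for their colleagues, while every system-generated sentence about them would consistently use a single set.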

Even after this, there’ll always be more work to do.

For instance: I’ve met at least one person who uses no pronouns! By this, they actually mean they use no third-person personal pronouns (if they actually used no pronouns they wouldn’t say “I”, “me”, “my”, “mine” or “myself” and wouldn’t want others to say “you”, “your”, “yours” and “yourself” to them)! Semantics aside… for these people Three Rings should use the person’s name rather than a pronoun.
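One way that could work, sketched in Ruby under heavy assumptions: treat “no pronouns” as an explicit choice (the symbol `:none` here, to keep it distinct from “no answer given”) and substitute the name for each of the five forms. `reference_forms` and `account_created_message` are hypothetical names, and always appending “’s” for the possessive is a simplification.

```ruby
FORMS = %i[subject object dependent independent reflexive].freeze

# Returns a hash of the five forms for a person. Passing :none means the
# person uses no third-person pronouns, so the name stands in for all five.
def reference_forms(name, pronouns)
  if pronouns == :none
    possessive = "#{name}'s"
    return { subject: name, object: name, dependent: possessive,
             independent: possessive, reflexive: name }
  end

  FORMS.zip(pronouns.split("/")).to_h
end

def account_created_message(name, pronouns)
  forms = reference_forms(name, pronouns)
  # Upcase only the first letter so a name like "McKay" keeps its inner capital.
  opening = forms[:dependent].sub(/\A./) { |first| first.upcase }
  "#{opening} account has been created for #{forms[:object]} " \
    "so #{forms[:subject]} can now log in."
end
```

The result is repetitive English, but it is the same repetition a considerate human writer would use.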

Maybe we can get there one day.

But so long as Three Rings remains ahead of the curve in its respect for and understanding of pronoun use then I’ll be happy.

Our mission is to focus on volunteers and make volunteering easier. At the heart of that mission is treating volunteers with respect. Making sure our system embraces the diversity of the 65,000+ volunteers who use it by using pronouns correctly might be a small part of that, but it’s a part of it, and I for one am glad we make the effort.