Yesterday, I wrote the stupidest bit of CSS of my entire career.

Owners of online shops powered by WooCommerce can optionally “connect” their stores back to Woo.com. This enables them to manage their subscriptions to any extensions they use to enhance their store[1]. They can also browse a marketplace of additional extensions they might like to consider, which is somewhat tailored to them based on e.g. their geographical location[2].

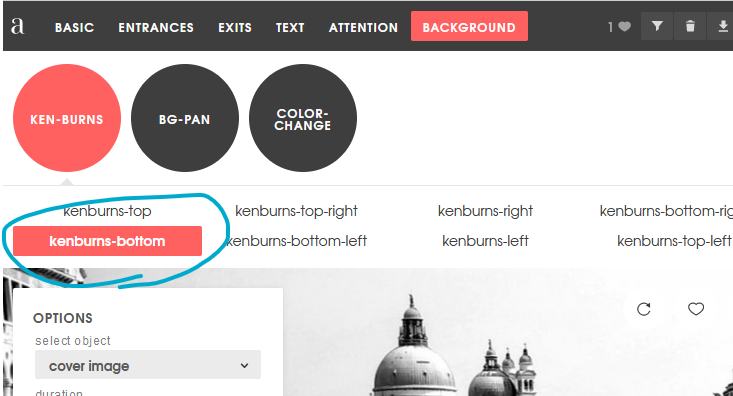

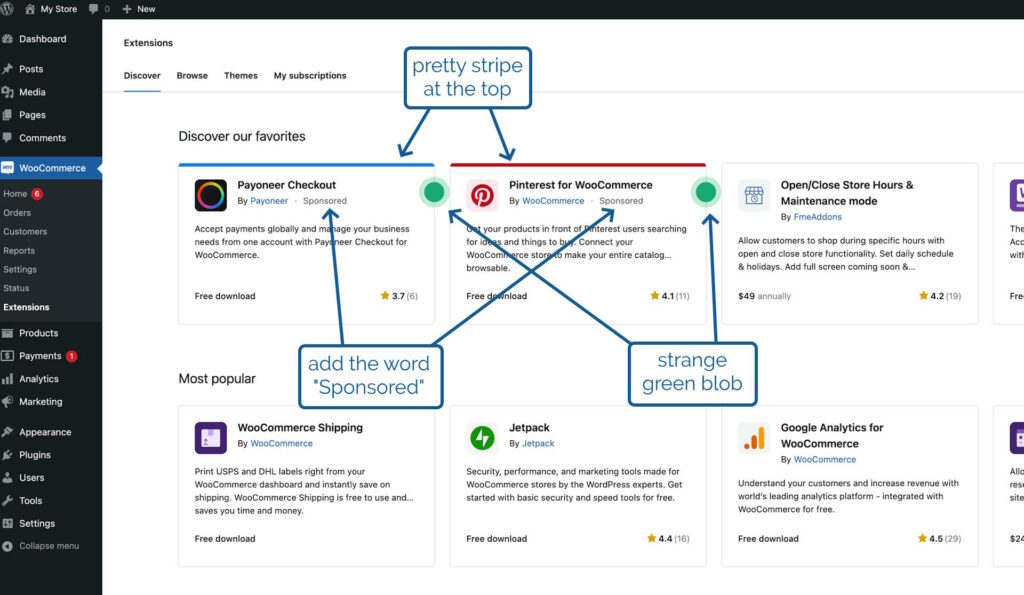

In the future, we’ll be adding sponsored products to the marketplace listing, but we want to be transparent about it. So yesterday I was working on some code that would determine from the appropriate API whether an extension was sponsored and then style it differently to make this clear. I took a look at the proposal from the designer attached to the project, which called for:

- the word “Sponsored” to appear alongside the name of the extension’s developer,

- a stripe at the top in the brand colour of the extension, and

- a strange green blob alongside it.

That third thing seemed like an odd choice, but I figured I probably just didn’t have the design or marketing expertise to understand it, and I diligently wrote some appropriate code[3].
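For the curious, the "is it sponsored? then style it differently" part boils down to something like the sketch below. This is a minimal illustration and not the actual WooCommerce code: the `Extension` shape, the `isSponsored` and `brandColor` fields, the class names, and the fallback colour are all assumptions made up for the example. It covers only the first two items on the designer's list, the label and the stripe.

```typescript
// Hypothetical shape of one marketplace listing entry. The field names are
// assumptions for illustration, not the real Woo.com API response.
interface Extension {
  name: string;
  vendor: string;
  isSponsored: boolean;
  brandColor?: string; // e.g. "#7f54b3"; may be missing
}

// What the card needs to know in order to render itself.
interface CardPresentation {
  classNames: string[];
  vendorLine: string;
  stripeColor: string | null; // null means "draw no stripe"
}

// Assumed neutral colour used when a sponsored extension has no brand colour.
const FALLBACK_STRIPE = "#cccccc";

function presentExtension(ext: Extension): CardPresentation {
  if (!ext.isSponsored) {
    // Ordinary listings get the plain card and no stripe.
    return {
      classNames: ["extension-card"],
      vendorLine: `By ${ext.vendor}`,
      stripeColor: null,
    };
  }

  // Sponsored listings get an extra class, a "Sponsored" label alongside the
  // developer's name, and a stripe in the extension's brand colour.
  return {
    classNames: ["extension-card", "extension-card--sponsored"],
    vendorLine: `Sponsored · By ${ext.vendor}`,
    stripeColor: ext.brandColor ?? FALLBACK_STRIPE,
  };
}
```

Keeping the decision in one small function like this means the stylesheet only has to care about a single modifier class, with the stripe colour passed through separately.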

After some minor tweaks, my change was approved. The designer even swung by and gave it a thumbs-up. All I needed to do was wait for the automated end-to-end tests to complete, and I’d be able to add it to WooCommerce ready to be included in the next-but-one release. Nice.

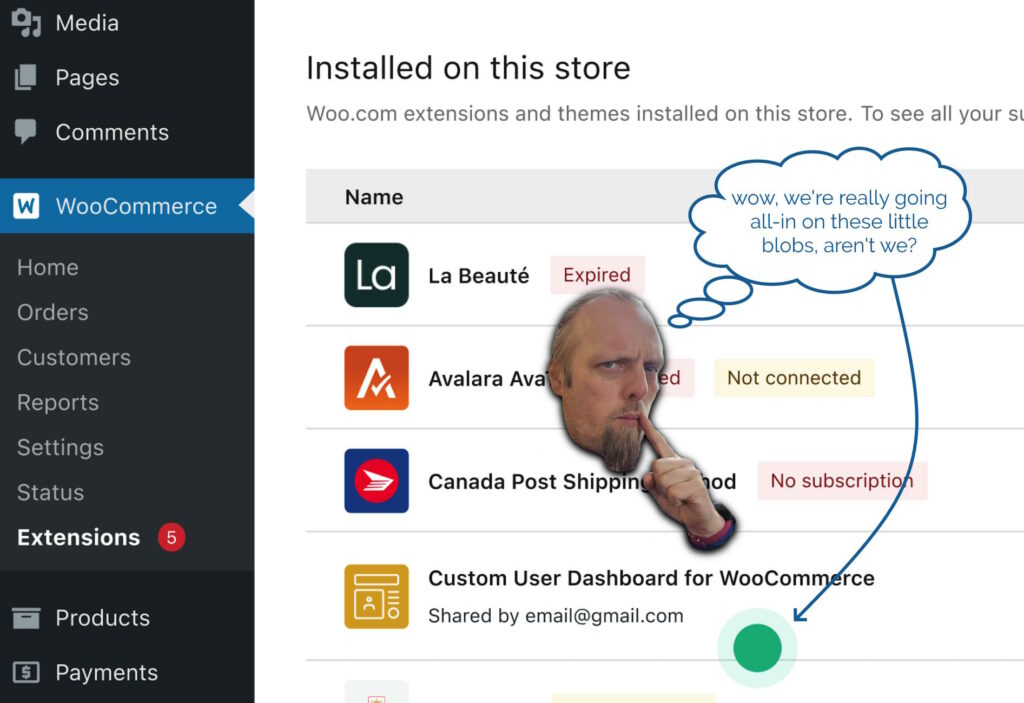

In the meantime, I got started on my next bit of work. This one also included some design work by the same designer, and wouldn’t you know it… this one also had a little green blob on it?

Then it hit me. The blobs weren’t part of the design at all, but the designer’s way of saying “look at this bit, it’s important!”. Whoops!

So I got to rush over to my (already-approved, somehow!) changeset and rip out the offending CSS: the stupidest bit of CSS of my entire career. Not bad code per se, but reasonable code resulting from a damn-stupid misinterpretation of a designer’s wishes. Brilliant.

Footnotes

[1] WooCommerce extensions serve loads of different purposes, like handling bookings and reservations and integrating with parcel tracking services.

[2] There’s no point us suggesting an extension that helps you calculate Royal Mail shipping rates if you’re not shipping from the UK, for example!

[3] A fun side-effect of working on open-source software is that my silly mistake gets immortalised somewhere where you can go and see it any time you like!