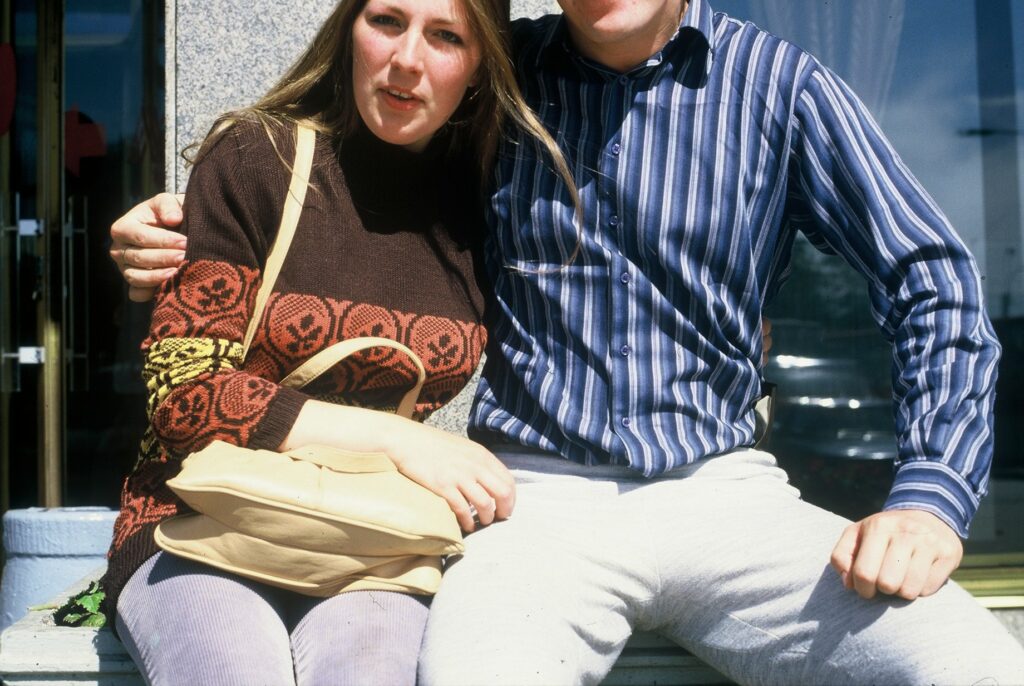

For lunch today I taste-tested five different plant-based vegan “cheeses” from Honestly Tasty. Let’s see if they’re any good.

Prefer video?

This blog post is available as a video (here or on YouTube), for those who like that sort of thing. The content’s slightly different, but you do get to see my face when I eat the one that doesn’t agree with me.

Background

I’ve been vegetarian or mostly-vegetarian to some degree or another for a little over ten years (for those who have trouble keeping up: I currently eat meat only on weekends, and not including beef or lamb), principally for the environmental benefits of a reduced-meat lifestyle. But if I’m really committed to reducing the environmental impact of my diet, the next “big” thing I still consume is dairy products.

My milk consumption is very low nowadays, but – like many people who might aspire towards dropping dairy – it’s quitting cheese that poses the biggest challenge. I’m not even the biggest fan of cheese, and I don’t know how I’d do without it: there’s just, it seems, no satisfactory substitute.

It’s possible, though, that my thinking on this is outdated. Especially in recent years, we’re getting better and better at making convincing (or, at least, tasty!) plant-based substitutes for animal-based foods. And so, inspired by a conversation with some friends, I thought I’d try a handful of new-generation plant-based cheeses and see how I got on. I ordered a variety pack from Honestly Tasty (who’ll give you 20% off your first order if you subscribe your favourite throwaway email address to their newsletter) and gave it a go.

Bree

It’s supposed to taste like Brie, I guess, but it’s not convincing. The texture of the rind is surprisingly good, but the inside is somewhat homogeneous and flat. They’ve tried to use mustard powder to provide Brie’s pepperiness and acetic acid for its subtle sourness, but it feels like there might be too much of the former (or perhaps I’m just a little oversensitive to mustard) and too little of the latter. It’s okay, but I wouldn’t buy it again.

Ched Spread

This was surprisingly flavoursome and really quite enjoyable. It spreads with about the consistency of pâté and has a sharp tang that really stands out. You wouldn’t mistake it for cheese, but you might mistake it for a cheese spread: there’s a real “cheddary” flavour buried in there.

Blue

This is supposed to be modelled after Gorgonzola, and it might as well be, because I don’t like either it or the cheese it’s based on. I might loathe Blue slightly less than most blue cheeses, but that doesn’t mean I’d willingly subject myself to this again in a hurry. It’s matured with real Penicillium roqueforti, apparently, along with seaweed, and it tastes like both of these things are true. So yeah: I hated this one, but you shouldn’t take that as a condemnation of its quality as a cheese substitute, because I’d still rather eat it than the cheese that it’s based on. Try it for yourself, I guess.

Herbi

This was probably my favourite of the bunch. It’s reminiscent of garlic & herb Boursin, and feels like somebody in the kitchen where they cooked it up said to themselves, “how about we do the Ched Spread, but with less onion and a whole load of herbs mixed through”. It seems that it must be easier to make convincingly-cheesy soft cheeses than hard cheeses, but I’m not complaining: this would be great on toast.

Shamembert

If you’d served me this and told me it was a baked Camembert… I wouldn’t be fooled. But I wouldn’t be disappointed either. It moves a lot like Camembert and it tastes… somewhat like it. But whether or not that’s “enough” for you, it’s perfectly delicious and I’d be more than happy to eat it or serve it to others.

In summary…

Honestly Tasty’s Ched Spread, Herbi, and Shamembert are perfectly acceptable (vegan!) substitutes for cheese. Even where they don’t accurately reflect the cheese they attempt to model, they’re still pretty good if you take them on their own merits: instead of comparing them to their counterparts, consider each as if it were a cheese spread or soft cheese in its own right and enjoy accordingly. I’d buy them again.

Their Bree failed to capture the essence of a good ripe Brie, and its flavour profile wasn’t, for me, something to enjoy outside of its attempts at emulation. And their “Veganzola” Blue cheese… was pretty grim, but then that’s what I think of Gorgonzola too, so maybe it’s perfect and I just haven’t the palate for it.