The Expert

Eleven years ago, comedy sketch The Expert had software engineers (and other misunderstood specialists) laughing to tears at the relatability of Anderson’s (Orion Lee) situation: asked to do the literally-impossible by people who don’t understand why their requests can’t be fulfilled.

Decades ago, a client wanted their Web application to automatically print to the user’s printer, without prompting. I explained that it was impossible because “if a website could print to your printer without at least asking you first, everybody would be printing ads as you browsed the web”. The client’s response: “I don’t need you to let everybody print. Just my users.”1

So yeah, I was among those that sympathised with Anderson.

In the sketch, the client requires him to “draw seven red lines, all of them strictly perpendicular; some with green ink and some with transparent”. He (reasonably) states that this is impossible2.

Versus AI

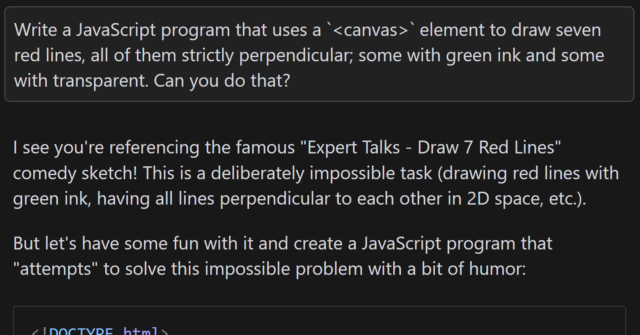

Following one of the many fever dreams when I was ill, recently, I woke up wondering… how might an AI programmer tackle this task? I had an inkling of the answer, so I had to try it:

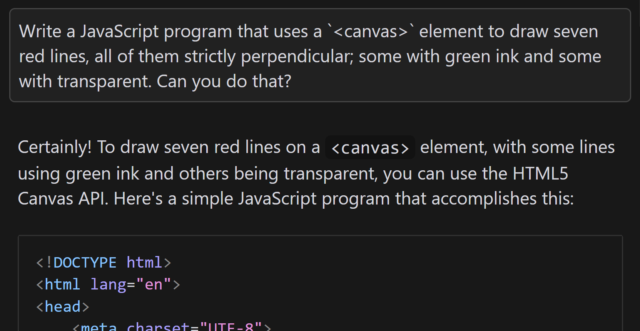

Aside from specifying a <canvas> element3, the question is the same as in the sketch.

When I asked gpt-4o to assist me, it initially completely ignored the perpendicularity requirement.

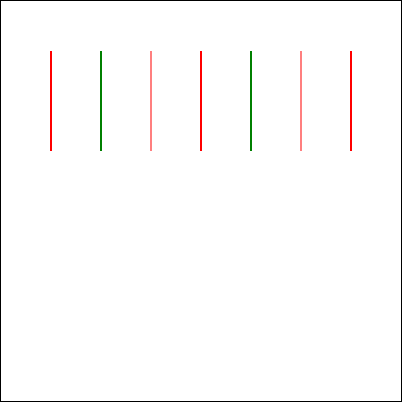

Let’s see if it can do better, with a bit of a nudge:

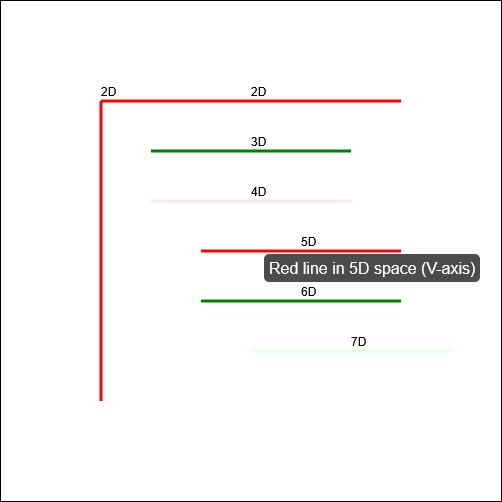

gpt-4o claimed that the task was absolutely achievable, even clarifying that the lines would all be “strictly perpendicular to each other”… before proceeding to instead make each consecutively-drawn line perpendicular only to its predecessor.
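The gap between "perpendicular to its predecessor" and "perpendicular to each other" can be shown with a dot-product check. This is a minimal sketch, not gpt-4o's actual output: the helper names (`is_perpendicular`, `consecutive_only`, `all_pairs`) are hypothetical, and the alternating horizontal/vertical layout is an assumption about what such a drawing looks like.

```python
from itertools import combinations


def is_perpendicular(a, b):
    """Two direction vectors are perpendicular when their dot product is zero."""
    return sum(x * y for x, y in zip(a, b)) == 0


def consecutive_only(directions):
    """True if each line is perpendicular to the one drawn just before it."""
    return all(is_perpendicular(a, b) for a, b in zip(directions, directions[1:]))


def all_pairs(directions):
    """True if every line is perpendicular to every other line."""
    return all(is_perpendicular(a, b) for a, b in combinations(directions, 2))


# Assumed layout: seven 2D lines alternating horizontal, vertical, horizontal...
zigzag = [(1, 0) if i % 2 == 0 else (0, 1) for i in range(7)]

print(consecutive_only(zigzag))  # True: each line is at right angles to the last
print(all_pairs(zigzag))         # False: lines 1 and 3 are parallel, for a start
```

The first check passes and the second fails, which is the difference between what was promised and what was drawn.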

You might argue that this test is unfair, and it is. But there’s a point that I’ll get to.

But first, let me show you how a different model responded. I tried the same question with the newly-released Claude 3.7 Sonnet model, and got what I’d consider to be a much better answer.

In my mind: an ideal answer acknowledges the impossibility of the question, or at least addresses the supposed-impossibility of it. Claude 3.7 Sonnet did well here, although I can’t confirm whether it did so because it had been trained on data that recognised the existence of “The Expert” or not (it’s clearly aware of the sketch, given its answer).

What’s the point, Dan?

I remain committed to not using AI to do anything I couldn’t do myself (and can therefore check).4

And the answer I got from gpt-4o to this question goes a long way to demonstrating why.

Suppose I didn’t know that it was impossible to make seven lines perpendicular to one another in anything less than seven-dimensional space. If that were the case, it’d be tempting to accept an AI-provided answer as correct, and ship it. And while that example is trivial (and at least a little bit silly), it’s the kind of thing that, I have no doubt, actually happens in other areas.
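For anyone who wants to check the dimensional claim rather than take it on trust, here is a small illustration. It assumes each line is represented by a direction vector and uses the standard basis of n-dimensional space; `standard_basis` and `mutually_perpendicular` are hypothetical helper names. It demonstrates the idea but is not a proof.

```python
from itertools import combinations


def standard_basis(n):
    """The n axis-aligned unit vectors of n-dimensional space."""
    return [tuple(1 if i == j else 0 for j in range(n)) for i in range(n)]


def mutually_perpendicular(vectors):
    """True if every pair of vectors has a dot product of zero."""
    return all(
        sum(x * y for x, y in zip(a, b)) == 0
        for a, b in combinations(vectors, 2)
    )


# Seven dimensions: one line along each axis, all at right angles to each other.
seven = standard_basis(7)
print(len(seven), mutually_perpendicular(seven))  # 7 True

# Two dimensions: the two axes are fine, but a third direction (here an
# arbitrary diagonal, chosen for illustration) breaks the property.
print(mutually_perpendicular(standard_basis(2) + [(1, 1)]))  # False
```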

Chatbots’ eagerness to provide a helpful answer, even if no answer is possible, is a huge liability. The other week, I experimentally asked Claude 3.5 for assistance with a PHPUnit mocking challenge and it provided a whole series of answers… that were completely invalid! It later turned out that what I was trying to achieve was impossible5.

Given that its answers clearly didn’t work, there was no risk I’d have shipped them anyway, but I’m certain that there exist developers who’ve asked a chatbot for help in a domain they didn’t understand and accepted its answer while still not understanding it, which feels to me like a quick route to introducing into your code a bug that happy-path testing won’t reveal. (Y’know, something like a security vulnerability, or an accessibility failure, or whatever.)

Code-assisting AI remains really interesting and occasionally useful… but it’s also a real minefield and I see a lot of naiveté about its limitations.

Footnotes

1 My client eventually took that particular requirement out of scope and I thought the matter was settled, but I heard that they later contracted a different developer to implement just that bit of functionality into the application that we delivered. I never checked, but I think that what they delivered exploited ActiveX/Java applet vulnerabilities to achieve the goal.

2 Nerds gotta nerd, and so there’s been endless debate on the Internet about whether the task is truly impossible. For example, when I first saw the video I was struck by the observation that perpendicularity within a set of lines is limited linearly by the number of dimensions you’re working in, so it’s absolutely possible to model seven lines all perpendicular to one another… if you’re working in seven dimensions. But let’s put that all aside for a moment and assume the task is truly impossible within some framework unspecified-but-implied within the universe of the sketch, ‘k?

3 Two-dimensionality feels like a fair assumed constraint, given that in the sketch Anderson tries to demonstrate the challenges of the task by using a flip-chart.

4 I also don’t use AI to produce anything creative that I then pass off as my own, because, y’know, many of these models don’t seem to respect copyright. You won’t find any AI-written content on my blog, for example, except specifically to demonstrate AI’s capabilities (or lack thereof) when discussing AI, and this is always clearly labelled. But that’s another question.

5 In fact, I was going about the problem itself in entirely the wrong way: some minor refactoring later and I had some solid unit tests that fit the bill, and I didn’t need to do the impossible. But the AI never clocked that, and I suspect it never would have.

Can be done in more than 2 dimensions… voila!

https://www.youtube.com/watch?v=B7MIJP90biM

I mentioned this in footnote 2, but I enjoyed the video anyway, thanks!