Mostly for my own benefit, as most other guides online are outdated, here’s my set-up for intercepting TLS-encrypted communications from an emulated Android device (in Android Emulator)

using Fiddler. This is useful if you want to debug, audit, reverse-engineer, or evaluate the security of an Android app. I’m using Fiddler

5.0 and Android Studio 2.3.3 (but it should work with newer versions too) to intercept connections from an Android 8 (Oreo) device

using Windows. You can easily adapt this set-up to work with physical devices too, and it’s not hard to adapt these instructions for other configurations too.

1. Configure Fiddler

Install Fiddler and run it.

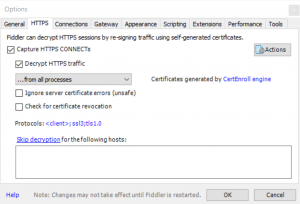

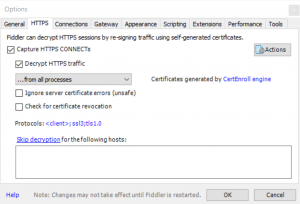

Under Tools > Options > HTTPS, enable “Decrypt HTTPS traffic” and allow a root CA certificate to be created.

Click Actions > Export Root Certificate to Desktop to get a copy of the root CA public key.

On the Connections tab, ensure that “Allow remote computers to connect” is ticked. You’ll need to restart Fiddler after changing this and may be prompted to grant it additional

permissions.

If Fiddler changed your system proxy, you can safely change this back (and it’ll simplify your output if you do because you won’t be logging your system’s connections, just the Android

device’s ones). Fiddler will complain with a banner that reads “The system proxy was changed. Click to reenable capturing.” but you can ignore it.

2. Configure your Android device

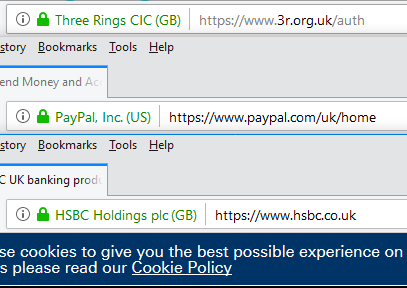

Install Android Studio. Click Tools > Android > AVD Manager to get a list of virtual devices. If you haven’t created one already, create one: it’s now possible to create Android

devices with Play Store support (look for the icon, as shown above), which means you can easily intercept traffic from third-party applications without doing APK-downloading hacks: this

is great if you plan on working out how a closed-source application works (or what it sends when it “phones home”).

In

Android’s Settings > Network & Internet, disable WiFi. Then, under Mobile Network > Access Point Names > {Default access point, probably T-Mobile} set Proxy to the local IP

address of your computer and Port to 8888. Now all traffic will go over the virtual cellular data connection which uses the proxy server you’ve configured in Fiddler.

In

Android’s Settings > Network & Internet, disable WiFi. Then, under Mobile Network > Access Point Names > {Default access point, probably T-Mobile} set Proxy to the local IP

address of your computer and Port to 8888. Now all traffic will go over the virtual cellular data connection which uses the proxy server you’ve configured in Fiddler.

Drag the root CA file you exported to your desktop to your virtual Android device. This will automatically copy the file into the virtual device’s “Downloads” folder (if you’re using a

physical device, copy via cable or network). In Settings > Security & Location > Encryption & Credentials > Install from SD Card, use the hamburger menu to get to the Downloads

folder and select the file: you may need to set up a PIN lock on the device to do this. Check under Trusted credentials > User to check that it’s there, if you like.

Test your configuration by visiting a HTTPS website: as you browse on the Android device, you’ll see the (decrypted) traffic appear in Fiddler. This also works with apps other than the

web browser, of course, so if you’re reverse-engineering a API-backed application encryption then encryption doesn’t have to impede you.

3. Not working? (certificate pinning)

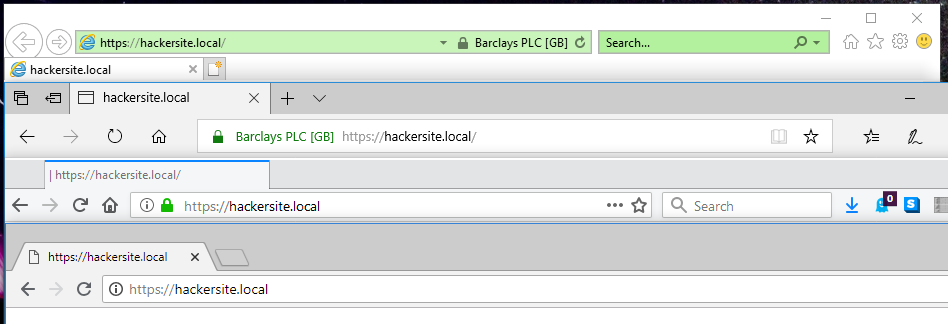

A small but increasing number of Android apps implement some variation of built-in key pinning, like HPKP but usually

implemented in the application’s code (which is fine, because most people auto-update their apps). What this does is ensures that the certificate presented by the server is signed by a

certification authority from a trusted list (a trusted list that doesn’t include Fiddler’s CA!). But remember: the app is running on your device, so you’re ultimately in

control – FRIDA’s bypass script “fixed” all of the apps I tried, but if it

doesn’t then I’ve heard good things about Inspeckage‘s “SSL uncheck” action.

Summary of steps

If you’re using a distinctly different configuration (different OS, physical device, etc.) or this guide has become dated, here’s the fundamentals of what you’re aiming to achieve:

- Set up a decrypting proxy server (e.g. Fiddler, Charles, Burp, SSLSplit – note that Wireshark isn’t suitable) and export its root certificate.

- Import the root certificate into the certificate store of the device to intercept.

- Configure the device to connect via the proxy server.

- If using an app that implements certificate pinning, “fix” the app with FRIDA or another tool.