This is part of a series of posts on computer terminology whose popular meaning – determined by surveying my friends – has significantly diverged from its original/technical one. Read more evolving words…

A few hundred years ago, the words “awesome” and “awful” were synonyms. From their roots, you can see why: they mean “tending to or causing awe” and “full or or characterised by awe”, respectively. Nowadays, though, they’re opposites, and it’s pretty awesome to see how our language continues to evolve. You know what’s awful, though? Computer viruses. Right?

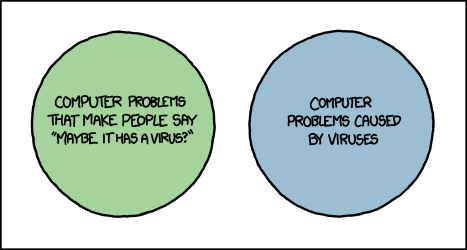

You know what I mean by a virus, right? A malicious computer program bent on causing destruction, spying on your online activity, encrypting your files and ransoming them back to you, showing you unwanted ads, etc… but hang on: that’s not right at all…

Virus

What people think it means

Malicious or unwanted computer software designed to cause trouble/commit crimes.

What it originally meant

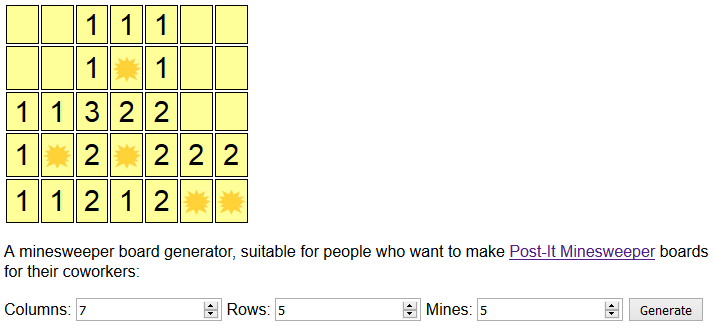

Computer software that hides its code inside programs and, when they’re run, copies itself into other programs.

The Past

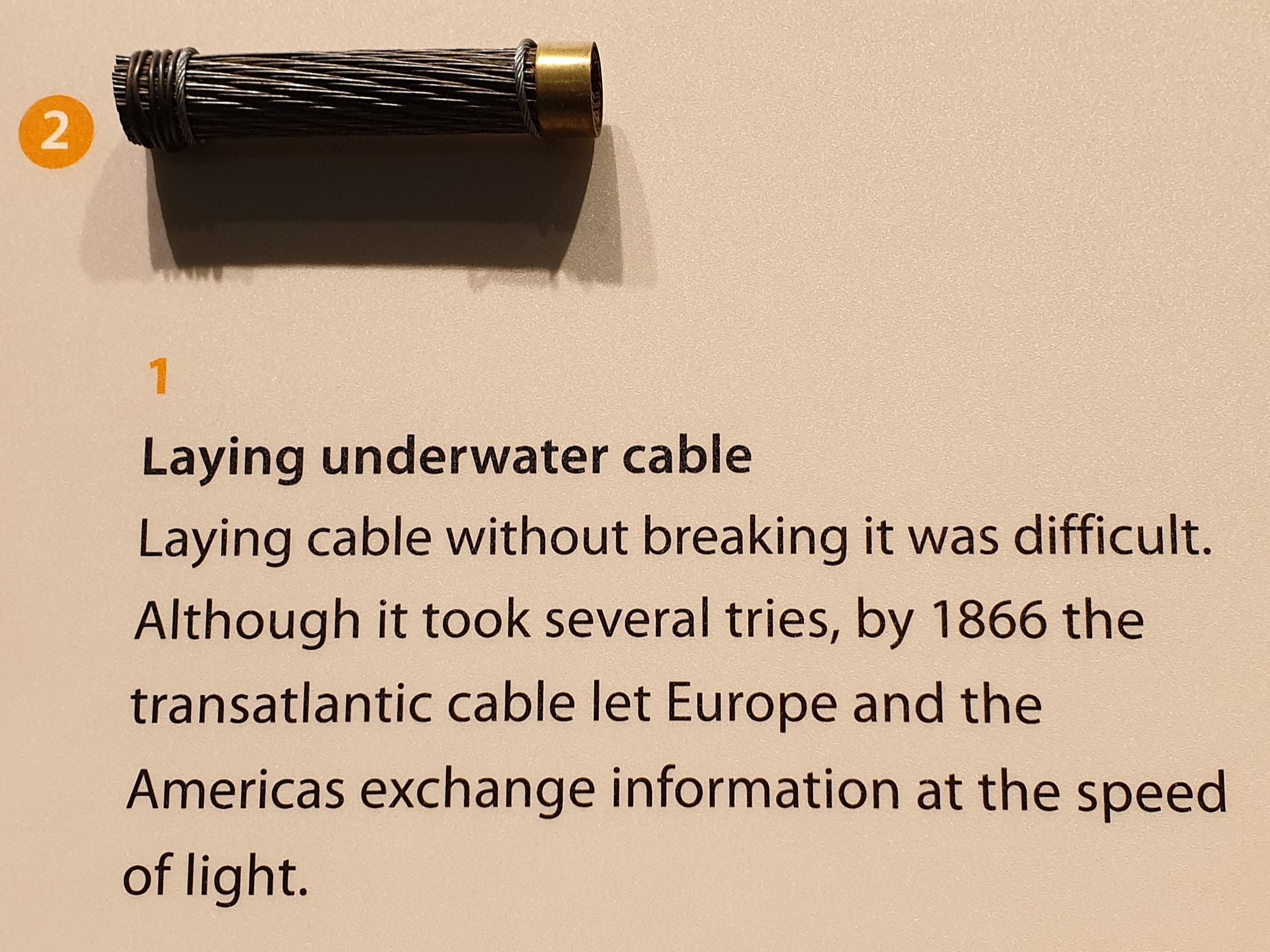

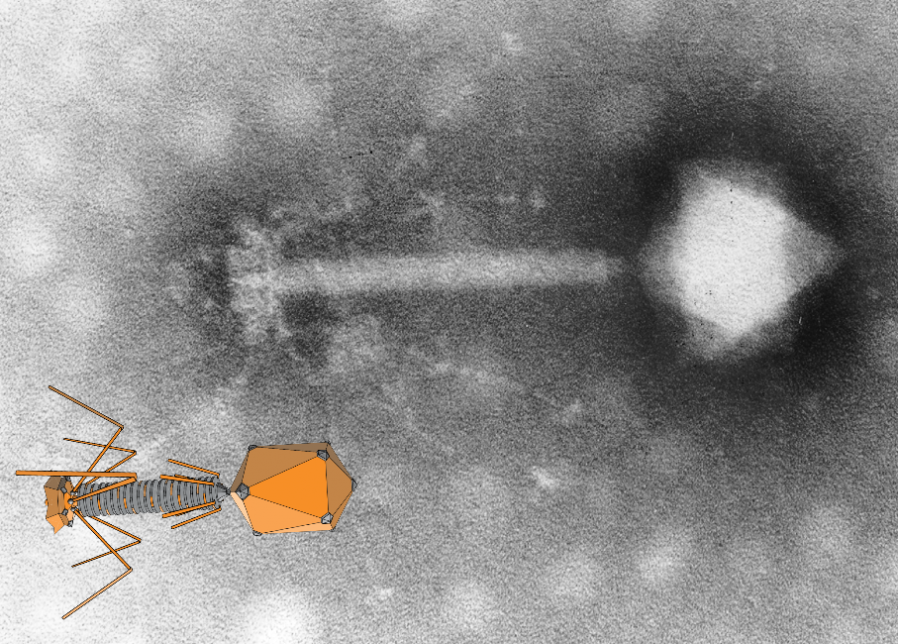

Only a hundred and thirty years ago it was still widely believed that “bad air” was the principal cause of disease. The idea that tiny germs could be the cause of infection was only just beginning to take hold. It was in this environment that the excellent scientist Ernest Hankin travelled around India studying outbreaks of disease and promoting germ theory by demonstrating that boiling water prevented cholera by killing the (newly-discovered) vibrio cholerae bacterium. But his most-important discovery was that water from a certain part of the Ganges seemed to be naturally inviable as a home for vibrio cholerae… and that boiling this water removed this superpower, allowing the special water to begin to once again culture the bacterium.

Hankin correctly theorised that there was something in that water that preyed upon vibrio cholerae; something too small to see with a microscope. In doing so, he was probably the first person to identify what we now call a bacteriophage: the most common kind of virus. Bacteriophages were briefly seen as exciting for their medical potential. But then in the 1940s antibiotics, which were seen as far more-convenient, began to be manufactured in bulk, and we stopped seriously looking at “phage therapy” (interestingly, phages are seeing a bit of a resurgence as antibiotic resistance becomes increasingly problematic).

But the important discovery kicked-off by the early observations of Hankin and others was that viruses exist. Later, researchers would discover how these viruses work1: they inject their genetic material into cells, and this injected “code” supplants the unfortunate cell’s usual processes. The cell is “reprogrammed” – sometimes after a dormant period – to churns out more of the virus, becoming a “virus factory”.

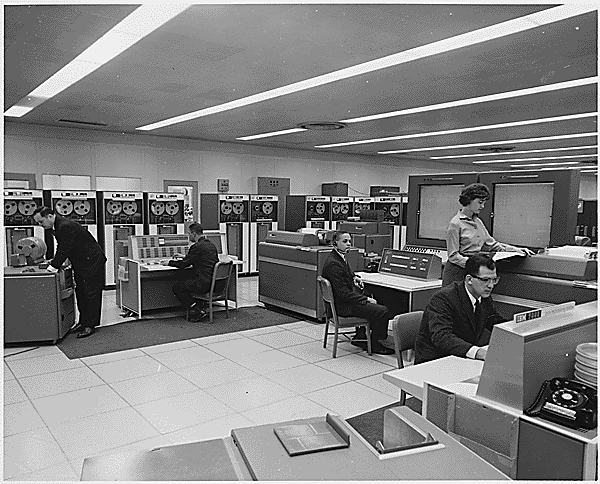

Let’s switch to computer science. Legendary mathematician John von Neumann, fresh from showing off his expertise in calculating how shaped charges should be used to build the first atomic bombs, invented the new field of cellular autonoma. Cellular autonoma are computationally-logical, independent entities that exhibit complex behaviour through their interactions, but if you’ve come across them before now it’s probably because you played Conway’s Game of Life, which made the concept popular decades after their invention. Von Neumann was very interested in how ideas from biology could be applied to computer science, and is credited with being the first person to come up with the idea of a self-replicating computer program which would write-out its own instructions to other parts of memory to be executed later: the concept of the first computer virus.

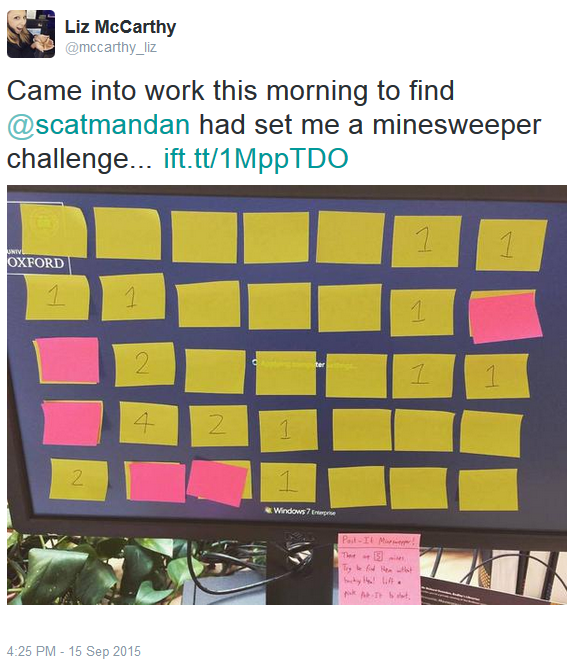

Retroactively-written lists of early computer viruses often identify 1971’s Creeper as the first computer virus: it was a program which, when run, moved (later copied) itself to another computer on the network and showed the message “I’m the creeper: catch me if you can”. It was swiftly followed by a similar program, Reaper, which replicated in a similar way but instead of displaying a message attempted to delete any copies of Creeper that it found. However, Creeper and Reaper weren’t described as viruses at the time and would be more-accurately termed worms nowadays: self-replicating network programs that don’t inject their code into other programs. An interesting thing to note about them, though, is that – contrary to popular conception of a “virus” – neither intended to cause any harm: Creeper‘s entire payload was a relatively-harmless message, and Reaper actually tried to do good by removing presumed-unwanted software.

Another early example that appears in so-called “virus timelines” came in 1975. ANIMAL presented as a twenty questions-style guessing game. But while the user played it would try to copy itself into another user’s directory, spreading itself (we didn’t really do directory permissions back then). Again, this wasn’t really a “virus” but would be better termed a trojan: a program which pretends to be something that it’s not.

It took until 1983 before Fred Cooper gave us a modern definition of a computer virus, one which – ignoring usage by laypeople – stands to this day:

A program which can ‘infect’ other programs by modifying them to include a possibly evolved copy of itself… every program that gets infected may also act as a virus and thus the infection grows.

This definition helps distinguish between merely self-replicating programs like those seen before and a new, theoretical class of programs that would modify host programs such that – typically in addition to the host programs’ normal behaviour – further programs would be similarly modified. Not content with leaving this as a theoretical, Cooper wrote the first “true” computer virus to demonstrate his work (it was never released into the wild): he also managed to prove that there can be no such thing as perfect virus detection.

(Quick side-note: I’m sure we’re all on the same page about the evolution of language here, but for the love of god don’t say viri. Certainly don’t say virii. The correct plural is clearly viruses. The Latin root virus is a mass noun and so has no plural, unlike e.g. fungus/fungi, and so its adoption into a count-noun in English represents the creation of a new word which should therefore, without a precedent to the contrary, favour English pluralisation rules. A parallel would be bonus, which shares virus‘s linguistic path, word ending, and countability-in-Latin: you wouldn’t say “there were end-of-year boni for everybody in my department”, would you? No. So don’t say viri either.)

Viruses came into their own as computers became standardised and commonplace and as communication between them (either by removable media or network/dial-up connections) and Cooper’s theoretical concepts became very much real. In 1986, The Virdim method brought infectious viruses to the DOS platform, opening up virus writers’ access to much of the rapidly growing business and home computer markets.

The Virdim method has two parts: (a) appending the viral code to the end of the program to be infected, and (b) injecting early into the program a call to the appended code. This exploits the typical layout of most DOS executable files and ensures that the viral code is run first, as an infected program loads, and the virus can spread rapidly through a system. The appearance of this method at a time when hard drives were uncommon and so many programs would be run from floppy disks (which could be easily passed around between users) enabled this kind of virus to spread rapidly.

For the most part, early viruses were not malicious. They usually only caused harm as a side-effect (as we’ve already seen, some – like Reaper – were intended to be not just benign but benevolent). For example, programs might run slower if they’re also busy adding viral code to other programs, or a badly-implemented virus might even cause software to crash. But it didn’t take long before viruses started to be used for malicious purposes – pranks, adware, spyware, data ransom, etc. – as well as to carry political messages or to conduct cyberwarfare.

The Future

Nowadays, though, viruses are becoming less-common. Wait, what?

Yup, you heard me right: new viruses aren’t being produced at remotely the same kind of rate as they were even in the 1990s. And it’s not that they’re easier for security software to catch and quarantine; if anything, they’re less-detectable as more and more different types of file are nominally “executable” on a typical computer, and widespread access to powerful cryptography has made it easier than ever for a virus to hide itself in the increasingly-sprawling binaries that litter modern computers.

The single biggest reason that virus writing is on the decline is, in my opinion, that writing something as complex as a a virus is longer a necessary step to illicitly getting your program onto other people’s computers2! Nowadays, it’s far easier to write a trojan (e.g. a fake Flash update, dodgy spam attachment, browser toolbar, or a viral free game) and trick people into running it… or else to write a worm that exploits some weakness in an open network interface. Or, in a recent twist, to just add your code to a popular library and let overworked software engineers include it in their projects for you. Modern operating systems make it easy to have your malware run every time they boot and it’ll quickly get lost amongst the noise of all the other (hopefully-legitimate) programs running alongside it.

In short: there’s simply no need to have your code hide itself inside somebody else’s compiled program any more. Users will run your software anyway, and you often don’t even have to work very hard to trick them into doing so.

Verdict: Let’s promote use of the word “malware” instead of “virus” for popular use. It’s more technically-accurate in the vast majority of cases, and it’s actually a more-useful term too.

Footnotes

1 Actually, not all viruses work this way. (Biological) viruses are, it turns out, really really complicated and we’re only just beginning to understand them. Computer viruses, though, we’ve got a solid understanding of.

2 There are other reasons, such as the increase in use of cryptographically-signed binaries, protected memory space/”execute bits”, and so on, but the trend away from traditional viruses and towards trojans for delivery of malicious payloads began long before these features became commonplace.