Maybe it’s because I was at Render Conf at the end of last month or perhaps it’s because Three Rings DevCamp – which always gets me inspired – was earlier this month, but I’ve been particularly excited lately to get the chance to play with some of the more “cutting edge” (or at least, relatively-new) web technologies that are appearing on the horizon. It feels like the Web is having a bit of a renaissance of development, spearheaded by the fact that it’s no longer Microsoft that are holding development back (but increasingly Apple) and, perhaps for the first time, the fact that the W3C are churning out standards “ahead” of where the browser vendors are managing to implement technical features, rather than simply reflecting what’s already happening in the world.

It seems to me that HTML5 may well be the final version of HTML. Rather than making grand new releases to the core technology, we’re now – at last! – in a position where it’s possible to iteratively add new techniques in a resilient, progressive manner. We don’t need “HTML6” to deliver us any particular new feature, because the modern web is more-modular and is capable of having additional features bolted on. We’re in a world where browser detection has been replaced with feature detection, to the extent that you can even do non-hacky feature detection in pure CSS, now, and this (thanks to the nature of the Web as a loosely-coupled, resilient platform) means that it’s genuinely possible to progressively-enhance content and get on board with each hot new technology that comes along, if you want, while still delivering content to users on older browsers.

And that’s the dream! A web of progressive-enhancement stays true to Sir Tim’s dream of universal interoperability while still moving forward technologically. I’ve no doubt that there’ll always be people who want to break the Web – even Google do it, sometimes – with single-page Javascript-only web apps, “app shell” websites, mobile-only or desktop-only experiences and “apps” that really ought to have been websites (and perhaps PWAs) to begin with… but the fact that the tools to make a genuinely “progressively-enhanced” web, and those tools are mainstream, is a big deal. If you don’t think we’re at that point yet, I invite you to watch Rachel Andrews‘ fantastic presentation, “Start Using CSS Grid Layout Today”.

Some of the things I’ve been playing with recently include:

Intersection Observers

Only really supported in Chrome, but there’s a great polyfill, the Intersection Observer API is one of those technologies that make you say “why didn’t we have that already?” It’s very simple: all an Intersection Observer does is to provide event hooks for target objects entering or leaving the viewport, without resorting to polling or hacky code on scroll event captures.

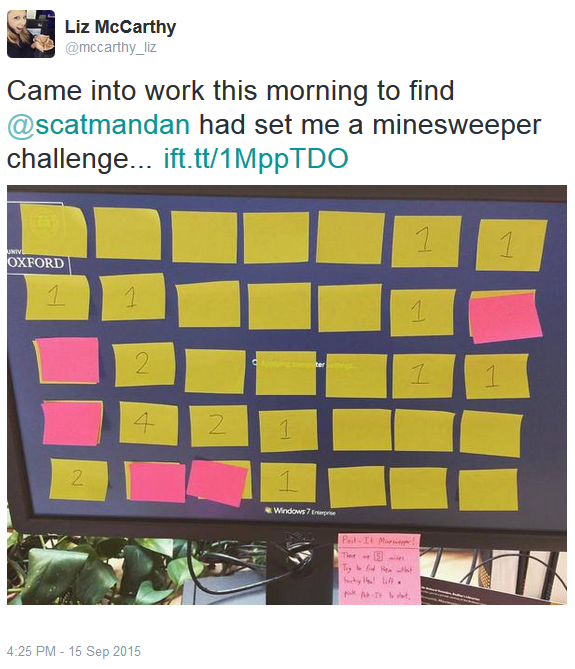

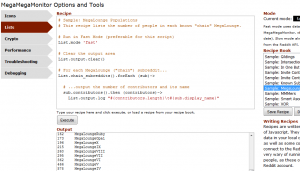

What’s it for? Well the single most-obvious use case is lazy-loading images, a-la Medium or Google Image Search: delivering users a placeholder image or a low-resolution copy until they scroll far enough for the image to come into view (or almost into view) and then downloading the full-resolution version and dynamically replacing it. My first foray into Intersection Observers was to take Medium’s approach and then improve it with a Service Worker in order to make it behave nicely even if the user’s Internet connection was unreliable, but I’ve since applied it to my Reddit browser plugin MegaMegaMonitor: rather than hammering the browser with Javascript the plugin now waits until relevant content enters the viewport before performing resource-intensive tasks.

Web Workers

I’d briefly played with Service Workers before and indeed we’re adding a Service Worker to the next version of Three Rings, which, in conjunction with a manifest.json and the service’s (ongoing) delivery over HTTPS (over H2, where available, since last year), technically makes it a Progressive Web App… and I’ve been looking for opportunities to make use of Service Workers elsewhere in my work, too… but my first dive in to Web Workers was in introducing one to the next upcoming version of MegaMegaMonitor.

Web Workers add true multithreading to Javascript, and in the case of MegaMegaMonitor this means the possibility of pushing the more-intensive work that the plugin has to do out of the main thread and into the background, allowing the user to enjoy an uninterrupted browsing experience while the heavy-lifting goes on in the background. Because I don’t control the domain on which this Web Worker runs (it’s reddit.com, of course!), I’ve also had the opportunity to play with Blobs, which provided a convenient way for me to inject Worker code onto somebody else’s website from within a userscript. This has also lead me to the discovery that it ought to be possible to implement userscripts that inject Service Workers onto websites, which could be used to mashup additional functionality into websites far in advance of that which is typically possible with a userscript… more on that if I get around to implementing such a thing.

Fetch

The final of the new technologies I’ve been playing with this month is the Fetch API. I’m not pulling any punches when I say that the Fetch API is exactly what XMLHttpRequests should have been from the very beginning. Understanding them properly has finally given me the confidence to stop using jQuery for the one thing for which I always seemed to have had to depend on it for – that is, simplifying Ajax requests! I mean, look at this elegant code:

fetch('posts.json')

.then(function(response) {

return response.json();

})

.then(function(json) {

console.log(json.something.otherThing);

});

Whether or not you’re a fan of Javascript, you’ve got to admit that that’s infinitely more readable than XMLHttpRequest hackery (at least, without the help of a heavyweight library like jQuery).

So that’s some of the stuff I’ve been playing with lately: Intersection Observers, Web Workers, Blobs, and the Fetch API. And I feel all full of optimism on behalf of the Web.

![GIF showing a variety people watching VR porn. [SFW]](https://media.giphy.com/media/l41lQODMertvSTIjK/giphy.gif)