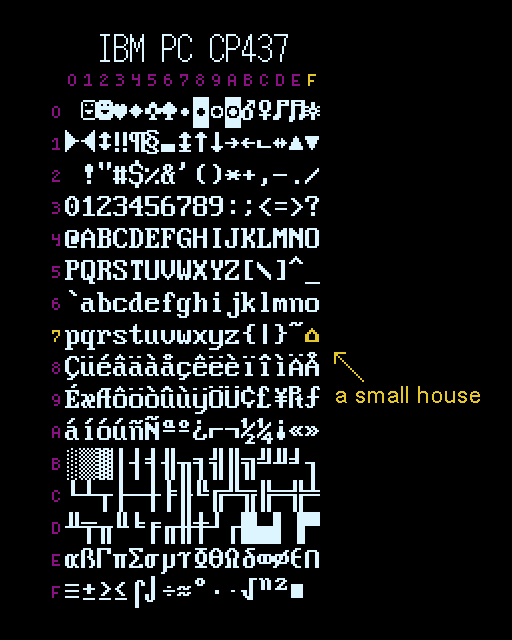

There’s a small house ( ⌂ ) in the middle of IBM’s infamous character set Code Page 437. “Small house”—that’s the official IBM name given to the glyph at code position 0x7F, where a control character for “Delete” (DEL) should logically exist. It’s cute, but a little strange. I wonder, how did it get there? Why did IBM represent DEL as a house, of all things?

…

It probably ought to be no surprise that I, somebody who’s written about the beauty and elegance of the ASCII table, would love this deep dive into the specifics of the unusual graphical representation of the DEL character in IBM Code Page 437.

It’s highly accessible, so even if you’ve only got a passing interest in, I don’t know, text encoding or typography or the history of computing, it’s a great read.