You know how sometimes you get an idea, and you already wrote and extended the code that makes it possible so surely you only need to do a little audio editing and CSS animation tweaking and graphic design and HOLY SHIT HOW DID IT GET SO LATE?

Tag: web

The problem with single page apps

This article is a repost promoting content originally published elsewhere. See more things Dan's reposted.

…

Single-page apps (or SPAs as they’re sometimes called) serve all of the code for an entire multi-UI app from a single index.html file. They use JavaScript to handle URL routing with real URLs. For this to work, you need to:

- Configure the server to point all paths on a domain back to the root index.html file. For example, todolist.com and todolist.com/lists should both point to the same file.

- Suppress the default behavior when someone clicks a link that points to another page in the app.

- Use more JavaScript, history.pushState(), to update the URL without triggering a page reload.

- Match the URL against a map of routes, and serve the right content based on it.

- If your URL has variable information in it (like a todolist ID, for example), parse that data out of the URL.

- Detect when someone clicks the browser’s back button/forward button, and update the URL and UI.

- Update the title element on the page.

- Use even more JavaScript to dynamically focus the content area when the content changes (for screen-reader users).

(Shoutout to Ashley Bischoff for those last two!)

You end up recreating with JavaScript a lot of the features the browser gives you out-of-the-box.

This becomes more code to maintain, more complexity to manage, and more things to break. It makes the whole app more fragile and bug-prone than it has to be.

I’m going to share some alternatives that I prefer.

…

Like – it seems – Chris Ferdinandi, I’ve got nothing against Single Page Applications in their place.

My biggest concern with SPAs is that they’re routinely seen as an inevitable progression of web development: that is, that an increasing number of web developers have been brainwashed into thinking that they’re intrinsically superior to traditional multi-page websites. As Adam Silver observed the other year, using your heavyweight Javascript framework to Ajaxify your page loads does make the application feel faster… but only because the download and processing time of the heavyweight Javascript framework made it feel slow in the first place! The net result: web bloat, penalising of mobile users, and brittle applications with many failure points.

Whenever I see a new front-end framework sing the praises of its routing engine I wonder how we got to this point. After all: the Web’s had a routing engine since 1990, and most efforts to reinvent it invariably make it worse: less-accessible, less-archivable, less-sharable, less-discoverable, less-reliable, or several of these.

BBC News… without the sport

I love RSS, but it’s a minor niggle for me that if I subscribe to any of the BBC News RSS feeds I invariably get all the sports news, too. Which’d be fine if I gave even the slightest care about the world of sports, but I don’t.

It only takes a couple of seconds to skim past the sports stories that clog up my feed reader, but because I like to scratch my own itches, I came up with a solution. It’s more-heavyweight perhaps than it needs to be, but it does the job. If you’re just looking for a BBC News (UK) feed but with sports filtered out, you’re welcome to share mine: https://f001.backblazeb2.com/file/Dan–Q–Public/bbc-news-nosport.rss https://fox.q-t-a.uk/bbc-news-no-sport.xml.

If you’d like to see how I did it so you can host it yourself or adapt it for some similar purpose, the code’s below or on GitHub:

    #!/usr/bin/env ruby
    #
    # Sample crontab:
    # # At 41 minutes past each hour, run the script and log the results
    # 41 * * * * ~/bbc-news-rss-filter-sport-out.rb > ~/bbc-news-rss-filter-sport-out.log 2>&1

    # Dependencies:
    # * open-uri - load remote URL content easily
    # * nokogiri - parse/filter XML
    # * b2       - command line tools, described below
    require 'bundler/inline'
    gemfile do
      source 'https://rubygems.org'
      gem 'nokogiri'
    end
    require 'open-uri'
    require 'tempfile'

    # Regular expression describing the GUIDs to reject from the resulting RSS feed
    # We want to drop everything from the "sport" section of the website
    REJECT_GUIDS_MATCHING = /^https:\/\/www\.bbc\.co\.uk\/sport\//

    # Assumption: you're set up with a Backblaze B2 account with a bucket to which
    # you'd like to upload the resulting RSS file, and you've configured the 'b2'
    # command-line tool (https://www.backblaze.com/b2/docs/b2_authorize_account.html)
    B2_BUCKET = 'YOUR-BUCKET-NAME-GOES-HERE'
    B2_FILENAME = 'bbc-news-nosport.rss'

    # Load and filter the original RSS
    # (URI.open, because open-uri no longer patches Kernel#open on Ruby 3+)
    rss = Nokogiri::XML(URI.open('https://feeds.bbci.co.uk/news/rss.xml?edition=uk'))
    rss.css('item').select{|item| item.css('guid').text =~ REJECT_GUIDS_MATCHING }.each(&:unlink)

    begin
      # Output resulting filtered RSS into a temporary file
      temp_file = Tempfile.new
      temp_file.write(rss.to_s)
      temp_file.close

      # Upload filtered RSS to a Backblaze B2 bucket
      result = `b2 upload_file --noProgress --contentType application/rss+xml #{B2_BUCKET} #{temp_file.path} #{B2_FILENAME}`
      puts Time.now
      puts result.split("\n").select{|line| line =~ /^URL by file name:/}.join("\n")
    ensure
      # Tidy up after ourselves by ensuring we delete the temporary file
      temp_file.close
      temp_file.unlink
    end

bbc-news-rss-filter-sport-out.rb

When executed, this Ruby code:

- Fetches the original BBC news (UK) RSS feed and parses it as XML using Nokogiri

- Filters it to remove all entries whose GUID matches a particular regular expression (removing all of those from the “sport” section of the site)

- Outputs the resulting feed into a temporary file

- Uploads the temporary file to a bucket in Backblaze’s “B2” repository (think: a better-value competitor to S3); the bucket I’m using is publicly-accessible so anybody’s RSS reader can subscribe to the feed

I like the versatility of the approach I’ve used here and its ability to perform arbitrary mutations on the feed. And I’m a big fan of Nokogiri. In some ways, this could be considered a lower-impact, less real-time version of my tool RSSey. Aside from the fact that it won’t (easily) handle websites that require Javascript, this approach could probably be used in exactly the same ways as RSSey, and with significantly less set-up: I might look into whether its functionality can be made more-generic so I can start using it in more places.

remysharp comments on “Bringing back the Web of 1990”

This article is a repost promoting content originally published elsewhere. See more things Dan's reposted.

Hi @avapoet, I’m the author of the JavaScript for the WorldWideWeb project, and I did read your thread on the user-agent missing and I thought I’d land the fix ;-)

The original WorldWideWeb browser that we based our work on was 0.12 with screenshots from 0.16. Both browsers supported HTTP 0.9 which didn’t send headers. Obviously unintentional that I send the `request` user-agent, so I spent some painful hours trying to get my emulator running NeXT with a networked connection _and_ the WorldWideWeb version 1.0 – which _did_ use HTTP 1.0 and would send a User-Agent, so I could copy it accurately into the emulator code base.

So now metafilter.com renders in the emulator, and the User Agent sent is: CERN-NextStep-WorldWideWeb.app/1.1 libwww/2.07

Thanks again :)

I blogged about the reimplementation of WorldWideWeb by a hackathon team at CERN, and posted a commentary to MetaFilter, too. In doing so, some others observed that it wasn’t capable of showing MetaFilter pages, which was obviously going to be the first thing that anybody did with it and I ought to have checked first. In any case, I later checked out the source code and did some debugging, finding and proposing a fix. It feels cool to be able to say “I improved upon some code written at CERN,” even if it’s only by a technicality.

This comment on the MetaFilter thread, which I only just noticed, is by Remy Sharp, who was part of the team that reimplemented WorldWideWeb as part of that hackathon (his blog posts about the experience: 1, 2, 3, 4, 5), and acknowledges my contribution. Squee!

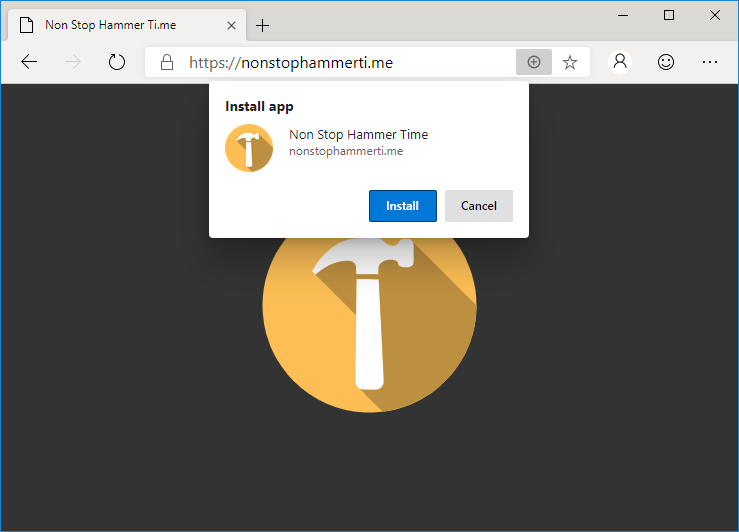

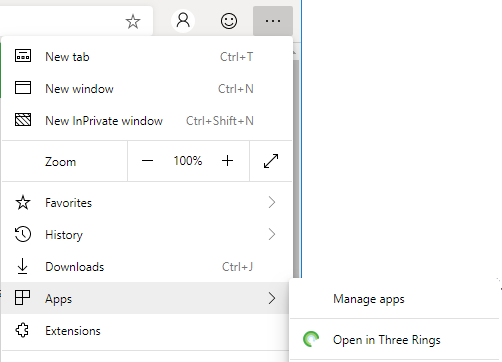

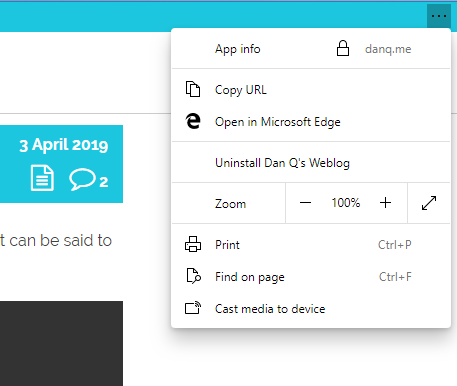

Edge Blink and Progressive Web Apps

As I’ve previously mentioned (sadly), Microsoft Edge is to drop its own rendering engine EdgeHTML and replace it with Blink, Google’s one (more of my and others’ related sadness here, here, here, and here). Earlier this month, Microsoft made available the first prerelease versions of the browser, and I gave it a go.

All of the Chrome-like features you’d expect are there, including support for Chrome plugins, but Microsoft have also clearly worked to try to integrate as much as possible of the important features that they felt were distinct to Edge in there, too. For example, Edge Blink supports SmartScreen filtering and uses Microsoft accounts for sync, and Incognito is of course rebranded InPrivate.

But what really interested me was the approach that Edge Dev has taken with Progressive Web Apps.

Edge Dev may go further than any other mainstream browser in its efforts to make Progressive Web Apps visible to the user, putting a plus sign (and sometimes an extended install prompt) right in the address bar, rather than burying it deep in a menu. Once installed, Edge PWAs “just work” in exactly the way that PWAs ought to, providing a simple and powerful user experience. Unlike some browsers, which make installing PWAs on mobile devices far easier than on desktops, presumably in a misguided belief in the importance of mobile “app culture”, it doesn’t discriminate against desktop users. It’s a slick and simple user experience all over.

Feature support is stronger than it is for Progressive Web Apps delivered as standalone apps via the Windows Store, too, with the engine not falling over at the first sign of a modal dialog for example. Hopefully (as I support one of these hybrid apps!) these too will begin to be handled properly when Edge Dev eventually achieves mainstream availability.

But perhaps most-impressive is Edge Dev’s respect for the importance of URLs. If, having installed the progressive “app” version of a site you subsequently revisit any address within its scope, you can switch to the app version via a link in the menu. I’d rather have seen a nudge in the address bar, where the user might expect to see such things (based on that being where the original install icon was), but this is still a great feature… especially given that cookies and other state maintainers are shared between the browser and the app, meaning that performing such a switch in a properly-made application will result in the user carrying on from almost exactly where they left off.

Similarly, and also uncommonly forward-thinking, Progressive Web Apps installed as standalone applications from Edge Dev enjoy a “copy URL” option in their menu, even if the app runs without an address bar (e.g. as a result of a "display": "standalone" directive in the manifest.json). This is a huge boost to sharability and is enormously (and unusually) respectful of the fact that addresses are the Web’s killer feature! Furthermore, it respects the users’ choice to operate their “apps” in whatever way suits them best: in a browser (even a competing browser!), on their mobile device, or wherever. Well done, Microsoft!

I’m still very sad overall that Edge is becoming part of the Chromium family of browsers. But if the silver lining is that we get a pioneering and powerful new Progressive Web App engine then it can’t be all bad, can it?

Yet Another JavaScript Framework

This article is a repost promoting content originally published elsewhere. See more things Dan's reposted.

…

It is impossible to answer all of these questions simply. They can, however, be framed by the ideological project of the web itself. The web was built to be open, both technologically as a decentralized network, and philosophically as a democratizing medium. These questions are tricky because the web belongs to no one, yet was built for everyone. Maintaining that spirit takes a lot of work, and requires sometimes slow, but always deliberate decisions about the trajectory of web technologies. We should be careful to consider the mountains of legacy code and libraries that will likely remain on the web for its entire existence. Not just because they are often built with the best of intentions, but because many have been woven into the fabric of the web. If we pull on any one thread too hard, we risk unraveling the whole thing.

…

A great story about how Firefox nearly broke tens of thousands of websites by following standards, and then didn’t. tl;dr: Javascript has a messy history.

Google AMP lowered our page speed, and there’s no choice but to use it – unlike kinds

This article is a repost promoting content originally published elsewhere. See more things Dan's reposted.

…

We here at unlike kinds decided that we had to implement Google AMP. We have to be in the Top Stories section because otherwise we’re punted down the page and away from potential readers. We didn’t really want to; our site is already fast because we made it fast, largely with a combination of clever caching and minimal code. But hey, maybe AMP would speed things up. Maybe Google’s new future is bright.

It isn’t. According to Google’s own Page Speed Insights audit (which Google recommends to check your performance), the AMP version of articles got an average performance score of 87. The non-AMP versions? 95. (Note: I updated these numbers recently with an average after running the test 6 times per version.)

…

I’ve complained about AMP before plenty – starting here, for example – but it’s even harder to try to see the alleged “good sides” of the technology when it doesn’t even deliver the one thing it was supposed to. The Internet should be boycotting this shit, not drinking the Kool-Aid.

Non Stop Hammer Ti.me

You know how sometimes I make a thing and, in hindsight, it doesn’t make much sense? And at best, all it can be said to do is to make the Internet more fun and weird?

I give you: NonStopHammerTi.me.

Things that make it awesome:

- Well, the obvious.

- Vanilla Javascript.

- CSS animations timed to every-other-beat.

- Using an SVG stroke-dasharray as a progress bar.

- Progressively-enhanced; in the worst case you just get to download the audio.

- PWA-enhanced; install it to your mobile!

- Open source!

- Decentralised (available via the peer-web at dat://nonstophammerti.me/ / dat://0a4a8a..00/)

- Accessible to screen readers, keyboard navigators, partially-sighted users, just about anybody.

- Compatible with digital signage at my workplace…

That is all.

How many people are missing out on JavaScript enhancement?

This article is a repost promoting content originally published elsewhere. See more things Dan's reposted.

A few weeks back, we were chatting about the architecture of the Individual Electoral Registration web service. We started discussing the pros and cons of an approach that would provide a significantly different interaction for any people not running JavaScript.

“What proportion of people is that?” an inquisitive mind asked.

Silence.

We didn’t really have any idea how many people are experiencing UK government web services without the enhancement of JavaScript. That’s a bad thing for a team that is evangelical about data driven design, so I thought we should find out.

The answer is:

1.1% of people aren’t getting Javascript enhancements (1 in 93)

…

This article by the GDS is six years old now, but its fundamental point is still as valid as ever: a small proportion (probably in the region of 1%) of your users won’t experience some or all of the whizzy Javascript stuff on your website, and it’s not because they’re a power user who disables Javascript.

There are so many reasons a user won’t run your Javascript, including:

- They’re using a browser that doesn’t support Javascript (or doesn’t support the version you’re using)

- They, or somebody they share their device with, has consciously turned-off Javascript either wholesale or selectively, in order to for example save bandwidth, improve speed, reinforce security, or improve compatibility with their accessibility technologies

- They’re viewing a locally-saved, backed-up, or archived version of your page (possibly in the far future long after your site is gone)

- Their virus scanner mis-classified your Javascript as potentially malicious

- One or more of your Javascript files contains a bug which, on their environment, stops execution

- One or more of your Javascript files failed to be delivered, for example owing to routing errors, CDN downtime, censorship, cryptographic handshake failures, shaky connections, cross-domain issues, stale caches…

- On their device, your Javascript takes too long to execute or consumes too many resources and is stopped by the browser

Fundamentally, you can’t depend on Javascript being there 100% of the time, and so you shouldn’t depend on it when it’s possible not to. Luckily, the Web already gives us all the tools we need to develop the vast, vast majority of web content in a way that doesn’t depend on Javascript. Back in the 1990s we just called it “web development”, but nowadays Javascript (and other optional/under-continuous-development web technologies like your favourite so-very-2019 CSS hack) is so ubiquitous that we give it the special name “progressive enhancement” and make a whole practice out of it.

The Web was designed for forwards- and backwards-compatibility. When you break that, you betray your users and you make work for yourself.

(by the way: I know I plugged the unpoly framework already, the other day, but you should really give it a look if you’re just learning how to pull off progressive enhancement)

I Used The Web For A Day On Internet Explorer 8

This article is a repost promoting content originally published elsewhere. See more things Dan's reposted.

…

Who In The World Uses IE8?

Before we start; a disclaimer: I am not about to tell you that you need to start supporting IE8.

There’s every reason to not support IE8. Microsoft officially stopped supporting IE8, IE9 and IE10 over three years ago, and the Microsoft executives are even telling you to stop using Internet Explorer 11.

But as much as we developers hope for it to go away, it just. Won’t. Die. IE8 continues to show up in browser stats, especially outside of the bubble of the Western world.

…

Sure, you aren’t developing for IE8 any more. But you should be developing with progressive enhancement, and if you do that right, you get all kinds of compatibility, accessibility, future- and past-proofing built-in. This isn’t just about supporting the (many) African countries where IE8 usage remains at over 1%… it’s about supporting the Web’s openness and archivability and following best-practice in your support of new technologies.

Killed by Google – The Google Graveyard & Cemetery

This article is a repost promoting content originally published elsewhere. See more things Dan's reposted.

Fusion Tables… Fabric… Inbox… Google+… goo.gl… Goggles… Site Search… Glass… Now… Code… Bump!… Gears… Desktop Search…

Just some of the projects and services that Google has offered and then killed; this site aims to catalogue them all. Some, like Wave, were given to the community (Wave lived on for a while as an Apache project but is now basically dead), but most, like Reader, were assassinated in a misguided attempt to drive traffic to other services (ultimately, Reader was killed perhaps to try to get people onto Google+, which was then also killed).

Google can’t be trusted to maintain the services of theirs that you depend upon (relevant XKCD?). That’s not a phenomenon that’s unique to Google, of course: it’s perhaps just that they produce so many new and often-experimental services that they inevitably cease supporting more of them than some of the many other providers who’ve killed the silos that people depended upon.

How could things be better? For a start, Google could make a better commitment to open-source and developing standards rather than platforms. But if you don’t think you can trust them to do that – and you can’t – then the only solution for individuals is to use fewer Google products to break the Google-monoculture. Encourage the competition to weaken their position, and break free from silos in general where it’s possible to do so.

148+ projects and services dead. But hey, we’re getting Stadia so everything’s okay, right? <sigh>

We are actively destroying the web

This article is a repost promoting content originally published elsewhere. See more things Dan's reposted.

One of the central themes of my talk on The Lean Web is that we as developers repeatedly take all of the great things the web and browsers give us out-of-the-box, break them, and then re-implement them poorly with JavaScript.

This point smacked me in the face hard a few weeks ago after WebAIM released their survey of the top million websites.

As Ethan Marcotte noted in his article on the survey:

Pages containing popular JavaScript frameworks were more likely to have accessibility errors than those that didn’t use those frameworks.

…

JavaScript routing has always perplexed me.

You take something the browser just gives you for free, break it with JavaScript, then reimplement it with more JavaScript, often poorly. You have to account for on-page clicks, on-site clicks, off-site clicks, forward and back button usage, and so on.

…

JavaScript routing has always perplexed me, too. Back when SPA-centric front-end frameworks started taking off I thought that there must be something wrong with me, as a developer. Why was I unable to see why this “new hotness” was so popular, so immediately ubiquitous? I taught myself a couple of different frameworks in the hope that in learning to use them in anger I’d “click” and understand why this approach to routing made any sense, but I still couldn’t get it.

That’s when I remembered, later than I ought to have, that just because something is popular doesn’t mean that it’s a good idea.

Front-end routing isn’t necessarily poisonous. By building on-top of what you already have in a progressive-enhancement kind-of way (like unpoly does for example!) you can potentially provide some minor performance or look-and-feel improvements to people in ideal circumstances (right browser(s), right compatibility, no bugs, no blocks, no accessibility needs, no “power users” who like to open-in-new-tab and the like, speedy connection, etc.) without damaging the fundamentals of what makes your web application work… but you’ve got to appreciate that doing this is going to be more work. For some applications, that’s worthwhile.

But when you do it at the expense of the underlying fundamentals… when you say “we’re moving everything to the front-end so we’re not going to bother with real URLs any more”… that’s when you break the web. And in doing so, you break a lot of other things too:

- You break your user experience for people who don’t fit into your perfect vision of what your users look like in terms of technology, connection, or able-bodiedness

- You break the sustainability and archivability of your site, making it into another piece of trash that’ll be lost to the coming digital dark age

- You break the usability of the site by anything but your narrow view of what’s right

- You break a lot of the technology that’s made the web as great as it is already: caching, manipulatable URLs, widespread compatibility… and many other things become harder when you have to re-invent the wheel to get basic features like preloading, sharability/bookmarking, page saving, the back button, stateful refreshes, SEO, hyperlinks…

Regarding the Thoughtful Cultivation of the Archived Internet

This article is a repost promoting content originally published elsewhere. See more things Dan's reposted.

…

With 20+ years of kottke.org archives, I’ve been thinking about this issue [continuing to host old content that no longer reflects its authors views] as well. There are many posts in the archive that I am not proud of. I’ve changed my mind in some cases and no longer hold the views attributed to me in my own words. I was too frequently a young and impatient asshole, full of himself and knowing it all. I was unaware of my privilege and too frequently assumed things of other people and groups that were incorrect and insensitive. I’ve amplified people and ideas in the past that I wouldn’t today.

…

Very much this! As another blogger with a 20+ year archive, I often find myself wondering how much of an impression of me is made upon my readers by some of my older posts, and what it means to retain them versus the possibility – never yet exercised – of deleting them. I certainly have my fair share of posts that don’t represent me well or that are frankly embarrassing, in hindsight!

I was thinking about this recently while following a thread on BoardGameGeek in which a poster advocated for the deletion of a controversial article from the site because, as they said:

…people who stumble on our site and see this game listed could get a very (!!!) bad impression of the hobby…

This is a similar concern: a member of an online community is concerned that a particular piece of archived content does not reflect well on them. They don’t see any way in which the content can be “fixed”, and so they propose that it is removed from the community. Parallels can be drawn to the deletionist faction within Wikipedia (if you didn’t know that Wikipedia had large-scale philosophical disputes before now, you’re welcome: now go down the meta-wiki rabbit hole).

As for my own blog, I fall on the side of retention: it’s impossible to completely “hide” my past by self-censorship anyway as there’s sufficient archives and metadata to reconstruct it, and moreover it feels dishonest to try. Instead, though, I do occasionally append rebuttals to older content – where I’ve time! – to help contextualise them and show that they’re outdated. I’ve even considered partially automating this by e.g. adding a “tag” that I can rapidly apply to older posts that haven’t aged well which would in turn add a disclaimer to the top of them.

Cool URIs don’t change. But the content behind them can. The fundamental message ought to be preserved, where possible, and so appending and retaining history seems to be a more-valid approach than wholesale deletion.

The “Backendification” of Frontend Development

This article is a repost promoting content originally published elsewhere. See more things Dan's reposted.

Asynchronous JavaScript in the form of Single Page Applications (SPA) offer an incredible opportunity for improving the user experience of your web applications. CSS frameworks like Bootstrap enable developers to quickly contribute styling as they’re working on the structure and behaviour of things.

Unfortunately, SPA and CSS frameworks tend to result in relatively complex solutions where traditionally separated concerns – HTML-structure, CSS-style, and JS-behaviour – are blended together as a matter of course — Counter to the lessons learned by previous generations.

This blending of concerns can prevent entry level developers and valued specialists (Eg. visual design, accessibility, search engine optimization, and internationalization) from making meaningful contributions to a project.

In addition to the increasing cost of the few developers somewhat capable of juggling all of these concerns, it can also result in other real world business implications.

…

What is a front-end developer? Does anybody know, any more? And more-importantly, how did we get to the point where we’re actively encouraging young developers into habits like writing (cough React cough) files containing a bloaty, icky mixture of content, HTML (markup), CSS (style), and Javascript (behaviour)? Yes, I get that the idea is that individual components should be packaged together (if you’re thinking in a React-like worldview), but that alone doesn’t justify this kind of bullshit antipattern.

It seems like the Web used to have developers. Then it got complex so we started differentiating back-end from front-end developers and described those who, like me, spanned the divide, as full-stack developers. We gradually became a minority as more and more new developers were deprived of the opportunity to learn each new facet organically in this newly-complicated landscape, but that’s fine. But then… we started treating the front-end as the only end, and introducing all kinds of problems as a result… and most people don’t seem to have noticed, yet, exactly how much damage we’re doing to Web applications’ security, maintainability, future-proofibility, archivability, addressability…

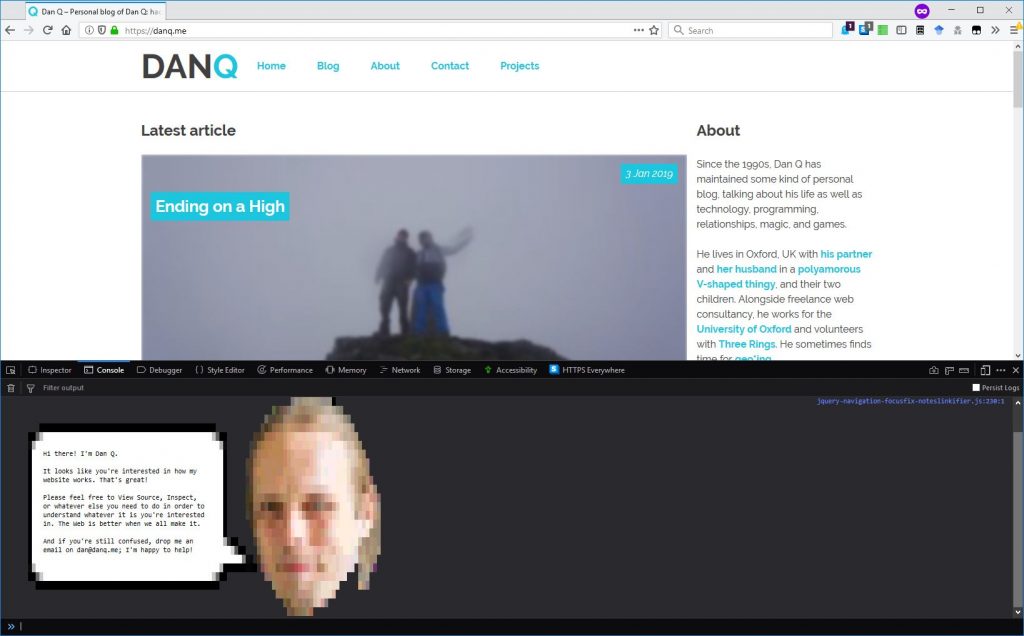

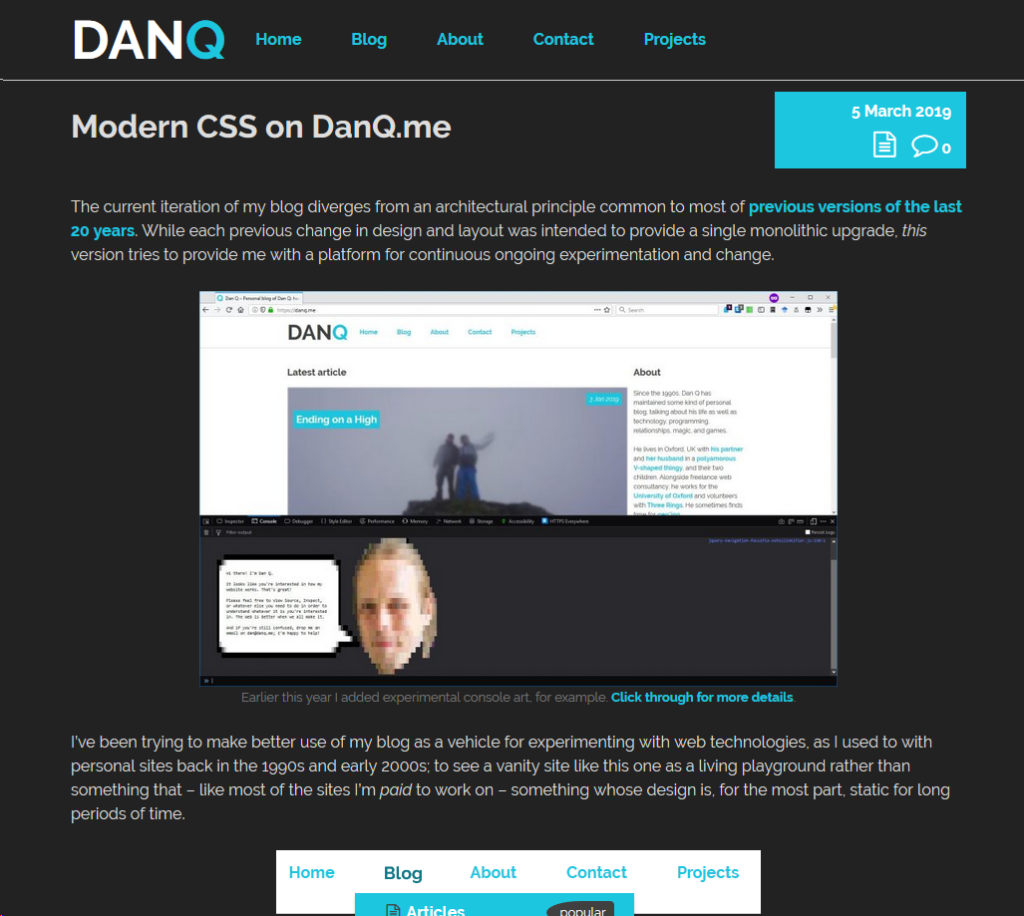

Modern CSS on DanQ.me

The current iteration of my blog diverges from an architectural principle common to most of the previous versions over the last 20 years. While each previous change in design and layout was intended to provide a single monolithic upgrade, this version tries to provide me with a platform for continuous ongoing experimentation and change.

I’ve been trying to make better use of my blog as a vehicle for experimenting with web technologies, as I used to with personal sites back in the 1990s and early 2000s; to see a vanity site like this one as a living playground rather than – like most of the sites I’m paid to work on – something whose design is, for the most part, static for long periods of time.

Among the things I’ve added prior to the initial launch of this version of the design are gracefully-degrading grids, reduced-motion support, and dark-mode support – three CSS features of increasing levels of “cutting edge”-ness but each of which is capable of being implemented in a way that does not break the site’s compatibility. This site’s pages are readable using (simulations of) ancient rendering engines or even in completely text-based browsers, and that’s just great.

Here’s how I’ve implemented those three features:

Gracefully-degrading grids

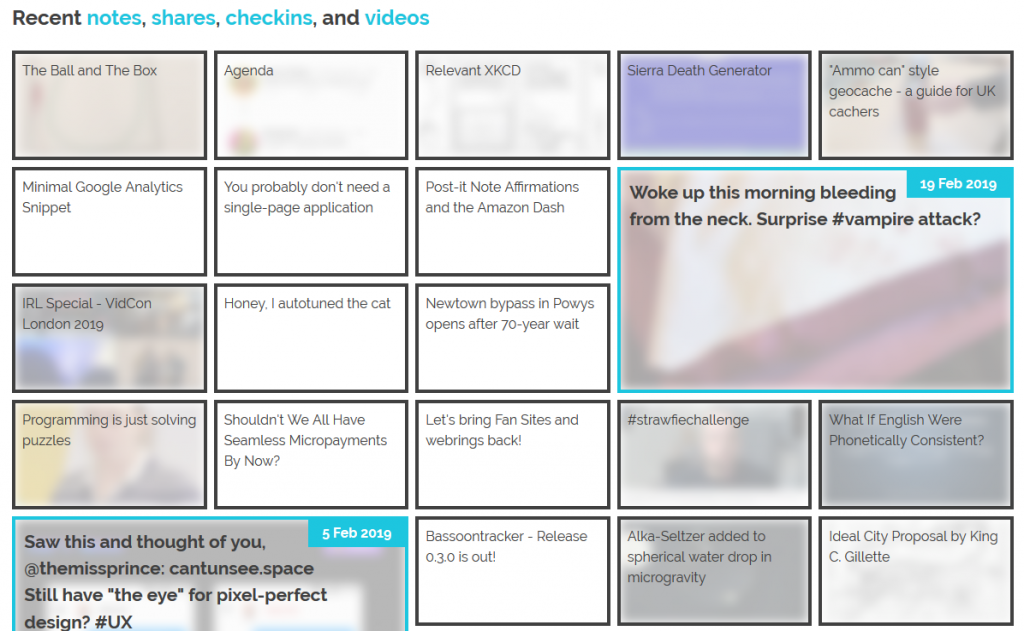

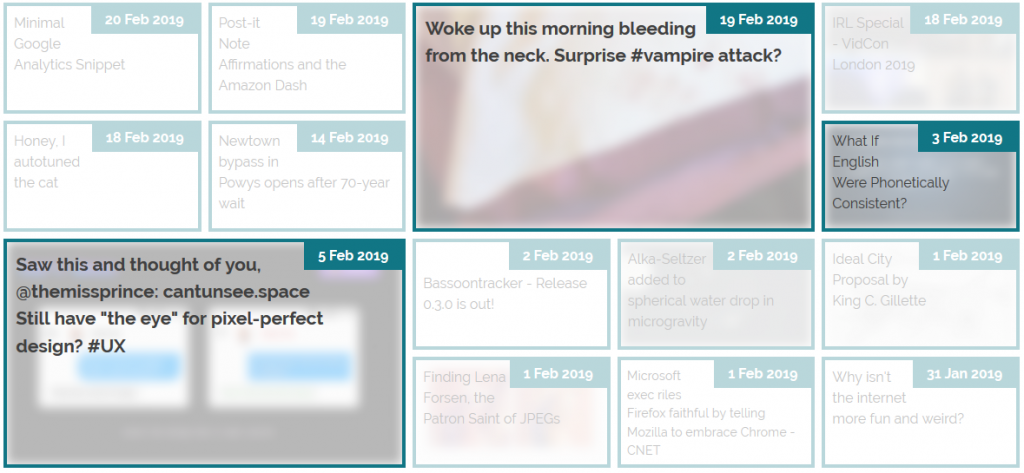

The grid of recent notes, shares, checkins and videos on my homepage is powered by the display: grid; CSS directive. The number of columns varies by screen width from six on the widest screens down to three or just one on increasingly small screens. Crucially, grid-auto-flow: dense; is used to ensure an even left-to-right filling of the available space even if one of the “larger” blocks (with grid-column: span 2; grid-row: span 2;) is forced for space reasons to run onto the next line. This means that content might occasionally be displayed in a different order from that in which it is written in the HTML (which is reverse order of publication), but in exchange the items are flush with both sides.

grid-auto-flow: dense; means that the “3 Feb” item is allowed to bubble-up and fill the gap, appearing out-of-order but flush with the edge.
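As a rough illustration of the layout described above, the sketch below sets up a six-column grid that drops to three columns and then one as the viewport narrows, with dense auto-flow and a double-size tile. The class names (.tiles, .tile-large), the gap and the breakpoint widths are assumptions made up for the example; they are not the selectors or values this site actually uses.

```css
/* Hypothetical class names and breakpoints, for illustration only */
.tiles {
  display: grid;
  grid-template-columns: repeat(6, 1fr); /* six columns on the widest screens */
  grid-auto-flow: dense;                 /* let later items back-fill earlier gaps */
  gap: 1rem;
  list-style: none;
  margin: 0;
  padding: 0;
}

/* "Larger" tiles occupy a 2x2 block of cells */
.tiles > .tile-large {
  grid-column: span 2;
  grid-row: span 2;
}

/* Medium screens: three columns */
@media (max-width: 60em) {
  .tiles {
    grid-template-columns: repeat(3, 1fr);
  }
}

/* Small screens: one column, so a two-column tile no longer fits */
@media (max-width: 30em) {
  .tiles {
    grid-template-columns: 1fr;
  }

  .tiles > .tile-large {
    grid-column: auto;
    grid-row: auto;
  }
}
```

Without grid-auto-flow: dense; the browser places items strictly in source order and leaves a hole wherever a large tile won’t fit on the current row; with it, smaller items that come later in the HTML are pulled forward to plug the hole.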

Not all web browsers support display: grid; and while that’s often only a problem of design and not of readability, because these browsers will fall back to usually-very-safe default display modes like block and inline as appropriate, sometimes there are bigger problems. In Internet Explorer 11, for example, I found (with thanks to @_ignatg) a problem with my directives specifying the size of these cells (which are actually <li> elements because, well, semantics matter). Because it understood the directives that ought to impact the sizing of the list items but not the one that redeclared its display type, IE made… a bit of a mess of things…

Do websites need to look the same in every browser? No. But the content should be readable regardless, and here my CSS was rendering my content unreadable. Given that Internet Explorer users represent a little under 0.1% of visitors to my site I don’t feel the need to hack it to have the same look-and-feel: I just need it to have the same content readability. CSS Feature Queries to the rescue!

CSS Feature Queries – the @supports at-rule – make it possible to apply parts of your stylesheet if and only if the browser supports specific CSS features, for example grids. Better yet, using it in a positive manner (i.e. “apply these rules only if the browser supports this feature”) is progressive enhancement, because browsers that don’t understand @supports act in the same way as those that understand it but don’t support the specified feature. Fencing off the relevant parts of my stylesheet in a @supports (display: grid) { ... } block instructed IE to fall back to displaying that content as a boring old list: exactly what I needed.
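A minimal sketch of that kind of fencing might look like the following. The selectors (.tiles, .tile-large) and the spacing values are hypothetical, chosen only for the example. The point is that nothing grid-related, including the sizing rules that tripped up IE, lives outside the @supports block.

```css
/* Baseline: every browser gets a plain, readable list.
   Selectors and values here are hypothetical. */
.tiles > li {
  margin-bottom: 1em;
}

/* Only browsers that report grid support apply anything in here.
   Browsers that don't recognise @supports discard the whole block. */
@supports (display: grid) {
  .tiles {
    display: grid;
    grid-template-columns: repeat(3, 1fr);
    grid-auto-flow: dense;
    gap: 1em;
    list-style: none;
    padding: 0;
  }

  .tiles > li {
    margin-bottom: 0; /* spacing now comes from the grid gap */
  }

  /* Sizing rules stay inside the fence so they can't apply without the grid */
  .tiles > .tile-large {
    grid-column: span 2;
    grid-row: span 2;
  }
}
```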

Reduced-motion support

I like to put a few “fun” features into each design for my blog, and while it’s nowhere near as quirky as having my head play peek-a-boo when you hover your cursor over it, the current header’s animations are in the same ballpark: hover over or click on some of the items in the header menu to see for yourself.

These kinds of animations are fun, but they can also be problematic. People with inner ear disorders (as well as people who’re just trying to maximise the battery life on their portable devices!) might prefer not to see them, and web designers ought to respect that choice where possible. Luckily, there’s an emerging standard to acknowledge that: prefers-reduced-motion. Alongside its cousins inverted-colors, prefers-reduced-transparency, prefers-contrast and prefers-color-scheme (see below for that last one!), these new CSS tools allow developers to optimise based on the accessibility features activated by the user within their operating system.

If you’ve tweaked your accessibility settings to reduce the amount of animation your operating system shows you, this website will respect that choice as well by not animating the contents of the title, menu, or the homepage “tiles” any more than is absolutely necessary… so long as you’re using a supported browser, which right now means Safari or Firefox (or the “next” version of Chrome). Making the change itself is pretty simple: I just added a @media screen and (prefers-reduced-motion: reduce) { ... } block to disable or otherwise cut-down on the relevant animations.
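For example, such a block could be shaped roughly like this. The .site-menu selector and the scale-on-hover effect are stand-ins invented for the sketch, not the animations actually used in this site’s header.

```css
/* Hypothetical selector and animation, for illustration only */
.site-menu a {
  transition: transform 0.3s ease;
}

.site-menu a:hover,
.site-menu a:focus {
  transform: scale(1.1);
}

/* Applied only when the visitor's operating system asks for less motion */
@media screen and (prefers-reduced-motion: reduce) {
  .site-menu a {
    transition: none;
  }

  .site-menu a:hover,
  .site-menu a:focus {
    transform: none;
  }
}
```

Browsers that don’t recognise the media feature treat the query as not matching and skip the block, so they simply keep the animations.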

Dark-mode support

Similarly, operating systems are beginning to support “dark mode”, designed for people trying to avoid eyestrain when using their computer at night. It’s possible for your browser to respect this and try to “fix” web pages for you, of course, but it’s better still if the developer of those pages has anticipated your need and designed them to acknowledge your choice for you. It’s only supported in Firefox and Safari so far and only on recent versions of Windows and MacOS, but it’s a start and a helpful touch for those nocturnal websurfers out there.

It’s pretty simple to implement. In my case, I just stacked some overrides into a @media (prefers-color-scheme: dark) { ... } block, inverting the background and primary foreground colours, softening the contrast, removing a few “bright” borders, and darkening rather than lightening background images used on homepage tiles. And again, it’s an example of progressive enhancement: the (majority!) of users whose operating systems and/or browsers don’t yet support this feature won’t be impacted by its inclusion in my stylesheet, but those who can make use of it can appreciate its benefits.
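A stripped-down sketch of such a stack of overrides is below. The colours and the .tile selector are hypothetical, and it covers only the colour inversion, softened contrast and border removal, not the background-image handling.

```css
/* Hypothetical colours and selectors, for illustration only */
body {
  background: #fff;
  color: #222;
}

.tile {
  border: 1px solid #ddd;
}

/* Overrides applied only when the visitor has asked for a dark scheme */
@media (prefers-color-scheme: dark) {
  body {
    background: #1c1c1c; /* inverted background... */
    color: #ddd;         /* ...with the contrast softened a little */
  }

  .tile {
    border-color: transparent; /* drop a "bright" border */
  }
}
```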

This isn’t the end of the story of CSS experimentation on my blog, but it’s a part of it that I hope you’ve enjoyed.