This morning it took me three attempts to put on a t-shirt the right way around.

I don’t think I slept too well.

I have a credit card with HSBC1. It doesn’t see much use2, but I still get a monthly statement from them, and an email to say it’s available.

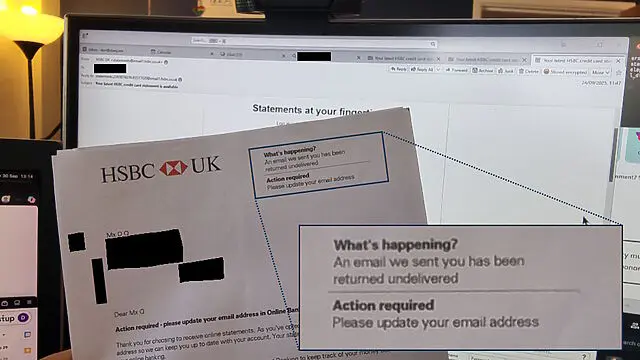

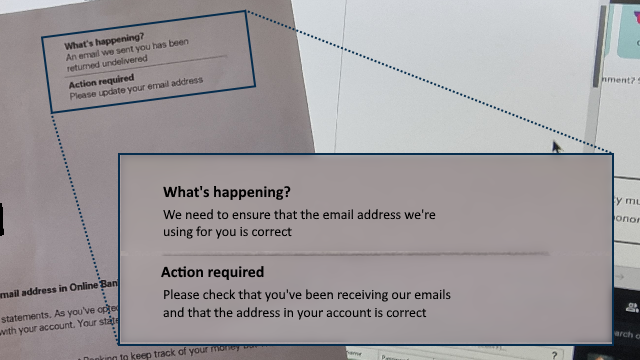

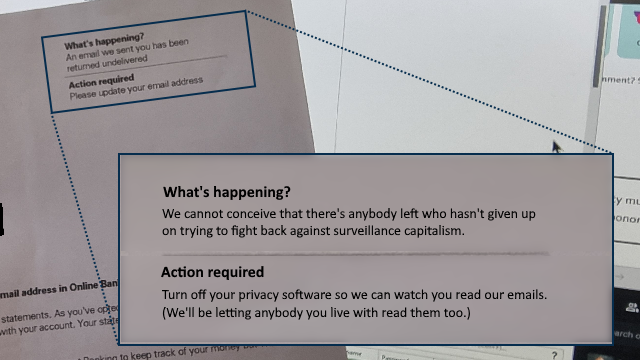

Not long ago I received a letter from them telling me that emails to me were being “returned undelivered” and they needed me to update the email address on my account.

I logged into my account, per the instructions in the letter, and discovered my correct email address already right there, much to my… lack of surprise3.

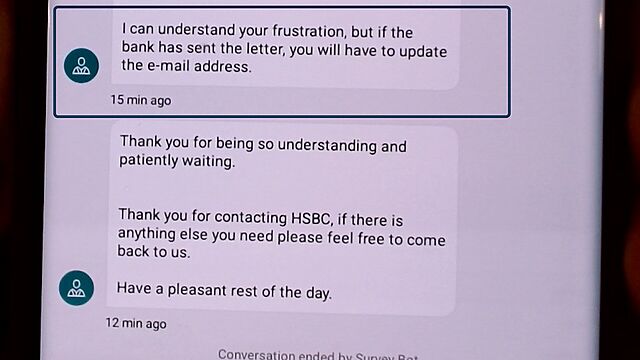

So I kicked off a live chat via their app, with an agent called Ankitha. Over the course of a drawn-out hour-long conversation, they repeatedly tried to tell me how to update my email address (which was never my question). Eventually, when they understood that my email address was already correct, they concluded the chat, saying (emphasis mine):

I can understand your frustration, but if the bank has sent the letter, you will have to update the e-mail address.

This is the point at which a normal person would probably just change the email address in their online banking to a “spare” email address.

But aside from the fact that I’d rather not4, by this point I’d caught the scent of a deeper underlying issue. After all, didn’t I have a conversation a little like this one but with a different bank, about four years ago?

So I called Customer Services directly5, who told me that if my email address is already correct then I can ignore their letter.

I suggested that perhaps their letter template might need updating so it doesn’t say “action required” if action is not required. Or that perhaps what they mean to say is “action required: check your email address is correct”.

So anyway, apparently everything’s fine… although I reserved final judgement until I’d seen that they were still sending me emails!

I think I can place a solid guess about what went wrong here. But it makes me feel like we’re living in the Darkest Timeline.

I dissected HSBC’s latest email to me: it was of the “your latest statement is available” variety. Deep within the email, down at the bottom, is this code:

<img src="http://www.email1.hsbc.co.uk:8080/Tm90IHRoZSByZWFsIEhTQkMgcGF5bG9hZA==" width="1" height="1" alt=""> <img src="http://www.email1.hsbc.co.uk:8080/QWxzbyBub3QgcmVhbCBIU0JDIHBheWxvYWQ=" width="1" height="1" alt="">

What you’re seeing are two tracking pixels: tiny 1×1 pixel images, usually transparent or white-on-white to make them even-more invisible, used to surreptitiously track when somebody reads an email. When you open an email from HSBC – potentially every time you open an email from them – your email client connects to those web addresses to get the necessary images. The code at the end of each identifies the email they were contained within, which in turn can be linked back to the recipient.
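To make that concrete, here's a rough Python sketch of how you might pull 1×1 images out of an email's HTML and peek at the token on the end of each URL. The names `PixelFinder`, `find_tracking_pixels` and `describe_pixel` are my own inventions for illustration. The Base64 decoding only works here because the placeholder tokens quoted above happen to be Base64 text; a real sender's identifiers are likely to be opaque, in which case `decoded` simply comes back as `None`.

```python
import base64
from html.parser import HTMLParser
from urllib.parse import urlparse

# The two placeholder pixels quoted above (not HSBC's real payloads).
EMAIL_HTML = (
    '<img src="http://www.email1.hsbc.co.uk:8080/'
    'Tm90IHRoZSByZWFsIEhTQkMgcGF5bG9hZA==" width="1" height="1" alt=""> '
    '<img src="http://www.email1.hsbc.co.uk:8080/'
    'QWxzbyBub3QgcmVhbCBIU0JDIHBheWxvYWQ=" width="1" height="1" alt="">'
)


class PixelFinder(HTMLParser):
    """Collects the src of every <img> that is declared as 1x1."""

    def __init__(self):
        super().__init__()
        self.pixels = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        if attributes.get("width") == "1" and attributes.get("height") == "1":
            self.pixels.append(attributes.get("src", ""))


def find_tracking_pixels(html):
    """Return the URLs of all 1x1 images found in the given HTML."""
    finder = PixelFinder()
    finder.feed(html)
    return finder.pixels


def describe_pixel(url):
    """Split a pixel URL into its parts and try to decode the trailing token."""
    parts = urlparse(url)
    token = parts.path.rsplit("/", 1)[-1]
    try:
        # Assumption: the token is Base64-encoded text, as in the placeholders.
        decoded = base64.b64decode(token, validate=True).decode("utf-8")
    except ValueError:
        decoded = None  # opaque identifier; nothing more to learn client-side
    return {
        "scheme": parts.scheme,
        "host": parts.hostname,
        "token": token,
        "decoded": decoded,
    }


if __name__ == "__main__":
    for pixel_url in find_tracking_pixels(EMAIL_HTML):
        print(describe_pixel(pixel_url))
```

Matching on `width="1" height="1"` is only a heuristic: a tracker can just as easily hide behind a larger image or a stylesheet, which is why blocking remote images outright is the more dependable defence.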

You know how invasive a read-receipt feels? Tracking pixels are like those… but turned up to eleven. While a read-receipt only says “the recipient read this email” (usually only after the recipient gives consent for it to do so), a tracking pixel can often track when and how often you refer to an email6.

If I re-read a year-old email from HSBC, they’re saying that they want to know about it.

But it gets worse. Because HSBC are using http://, rather than https:// URLs for their tracking pixels, they’re also saying that every time you read an email from them, they’d like everybody on the same network as you to be able to know that you did so, too. If you’re at my house, on my WiFi, and you open an email from HSBC, not only might HSBC know about it, but I might know about it too.
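As a simplified illustration of the difference, this Python sketch models what somebody watching the local network could read from a request to a given URL. The function name `visible_to_network_observer` is made up for this example, and the model is deliberately crude: it assumes that with https:// an observer can typically still learn the hostname (through DNS lookups or the TLS handshake) but not the path, and it ignores things like proxies and encrypted DNS.

```python
from urllib.parse import urlparse


def visible_to_network_observer(url):
    """Model what an on-path observer can read for a request to this URL."""
    parts = urlparse(url)
    if parts.scheme == "http":
        # Plain HTTP: the whole request, including the identifying path,
        # crosses the network unencrypted.
        return {"host": parts.hostname, "path": parts.path, "encrypted": False}
    # HTTPS (simplified assumption): the hostname usually still leaks,
    # but the path carrying the per-email token does not.
    return {"host": parts.hostname, "path": None, "encrypted": True}


if __name__ == "__main__":
    token = "Tm90IHRoZSByZWFsIEhTQkMgcGF5bG9hZA=="
    print(visible_to_network_observer("http://www.email1.hsbc.co.uk:8080/" + token))
    print(visible_to_network_observer("https://www.email1.hsbc.co.uk/" + token))
```

Under those assumptions, switching the scheme wouldn't stop the sender from tracking opens, but it would stop the per-email token from being readable by everybody else on the WiFi.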

An easily-avoidable security failure there, HSBC… which isn’t the kind of thing one hopes to hear about a bank!

But… tracking pixels don’t actually work. At least, they don’t work on me. Like many privacy-conscious individuals, my devices are configured to block tracking pixels (and a variety of other instruments of surveillance capitalism) right out of the gate.

This means that even though I do read most of the non-spam email that lands in my Inbox, the sender doesn’t get to know that I did so unless I choose to tell them. This is the way that email was designed to work, and is the only way that a sender can be confident that it will work.

But we’re in the Darkest Timeline. Tracking pixels have become so endemic that HSBC have clearly come to the opinion that if they can’t track when I open their emails, I must not be receiving their emails. So they wrote me a letter to tell me that my emails have been “returned undelivered” (which seems to be an outright lie).

Surveillance capitalism has become so ubiquitous that it’s become transparent. Transparent like the invisible spies at the bottom of your bank’s emails.

So in summary, with only a little speculation:

Instead of sending me a misleading letter about undelivered emails, perhaps a better approach for HSBC could be:

Also, to quote your own principles once more: when you make a mistake like assuming your spying is a flawless way to detect the validity of email addresses, perhaps you should “be transparent with our customers and other stakeholders about how we use their data”.

Wouldn’t that be better than writing to a customer to say that their emails are being returned undelivered (when they’re not)… and then having your staff tell them that having received such a letter they have no choice but to change the email address they use (which is then disputed by your other staff)?

</rant>

1 You know, the bank with virtue-signalling multiculturalism that we used to joke about.

2 Long, long ago I also had a current account with HSBC which I forgot to close when I switched banks… 20 years ago… and I possibly still owe them for the six pence the account was in debt at the time.

3 After all, I’d been reading their emails!

4 After all, as I’ll stress again: the email address HSBC have for me, and are using, is already correct.

5 In future, I’ll just do this in the first instance. The benefits of live chat being able to be done “in the background” while one gets on with some work are totally outweighed when the entire exchange takes an hour only to reach an unsatisfactory conclusion, whereas a telephone call got things sorted (well hopefully…) within 10 minutes.

6 A tracking pixel can also collect additional personal information about you, such as your IP address at the time that you opened the email, which might disclose your location.

7 It could be even worse still, actually! A sophisticated attacker could “inject” images into the bottom of an HSBC email; those images could, for example, be pictures of text saying things like “You need to urgently call HSBC on [attacker’s phone number].” This would allow a scammer to hijack a legitimate HSBC email by injecting their own content into the bottom of it. Seriously, HSBC, you ought to fix this.

This is a repost promoting content originally published elsewhere. See more things Dan's reposted.

Have you ever wished there were more to the internet than the same handful of apps and sites you toggle between every day? Then you’re in for a treat.

Welcome to the indie web, a vibrant and underrated part of the internet, aesthetically evocative of the late 1990s and early 2000s. Here, the focus is on personal websites, authentic self-expression, and slow, intentional exploration driven by curiosity and interest.

These kinds of sites took a backseat to the mainstream web around the advent of big social media platforms, but recently the indie web has been experiencing a revival, as more netizens look for connection outside the walled gardens created by tech giants. And with renewed interest comes a new generation of website owner-operators, intent on reclaiming their online experience from mainstream social media imperatives of growth and profit.

…

I want to like this article. It draws attention to the indieweb, smolweb, independent modern personal web, or whatever you want to call it. It does so in a way that inspires interest. And by way of example, it features several of my favourite retronauts. Awesome.

But it feels painfully ironic to read this article… on Substack!

Substack goes… let’s say half-way… to representing the opposite of what the indieweb movement is about! Sure, Substack isn’t Facebook or Twitter… but it’s still very much in the same place as, say, Medium, in that it’s a place where you go if you want other people to be in total control of your Web presence.

The very things that the author praises of the indieweb – its individuality and personality, its freedom from control by opaque corporate policies, its separation from the “same handful of apps and sites you toggle between every day” – are exactly the kinds of things that Substack fails to provide.

It’s hardly the biggest thing to hate about Substack, mind – that’d probably be their continued platforming of Covid conspiracy theorists and white nationalist hate groups. But it’s still a pretty big irony to hear the indieweb praised there!

Soo… nice article, shame about the platform it’s published on, I guess?

This is a repost promoting content originally published elsewhere. See more things Dan's reposted.

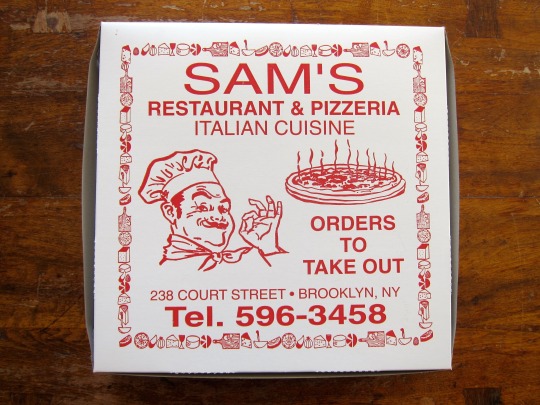

One of my goals was to uncover the origin of the ubiquitous Winking Chef. We’ve all seen him – the chubby mustachioed man wearing a chef’s hat and often making a gesture of approval with his hand. I dug around as much as I could – searching old magazines and websites looking for the origin of the image. Of course generic chef images go way back in print advertising but I was looking for one image in particular, the one I grew up with on my pizza boxes in New Jersey. Who was this guy? Was the image based on a real person? What’s the deal????

…

There are few people in this world who are more-obsessed with pizza than I, but Scott’s gotta be one of them. Since discovering this blog post of his I now really want to go on one of his pizza-themed walking tours of New York City. But you might have guessed that.

Anyway: Scott – who has a collection of pizza boxes, by the way (in case you needed evidence that he’s even more pizza-fixated than me) – noticed the “winking chef” image, traced its origin, and would love to tell you about it. An enjoyable little read.

As previously indicated, I’m not anticipating cosplaying anybody. But I think I could do Greg Universe.

Not young Greg Universe, the Star Child of ‘Story for Steven’… which seems to be the only variety anybody’s ever cosplayed as before if an image search is to be believed. No, I mean: overweight old balding Greg Universe. I could totally pull that look off.

Fake tan lines, white t-shirt (I’d probably make a ‘guitar dad’ one!), sweatpants, carrying a guitar. Easy.

Again, not that I’m planning to. Just saying that I could…

This is a repost promoting content originally published elsewhere. See more things Dan's reposted.

…

Further analysis on a smaller pcap pointed to these mysterious packets arriving ~20ms apart.

This was baffling to me (and to Claude Code). We kicked around several ideas like:

- SSH flow control messages

- PTY size polling or other status checks

- Some quirk of bubbletea or wish

One thing stood out – these exchanges were initiated by my ssh client (stock ssh installed on MacOS) – not by my server.

…

In 2023, ssh added keystroke timing obfuscation. The idea is that the speed at which you type different letters betrays some information about which letters you’re typing. So ssh sends lots of “chaff” packets along with your keystrokes to make it hard for an attacker to determine when you’re actually entering keys.

That makes a lot of sense for regular ssh sessions, where privacy is critical. But it’s a lot of overhead for an open-to-the-whole-internet game where latency is critical.

…

Keystroke timing obfuscation: I could’ve told you that! Although I wouldn’t necessarily have leapt to the possibility of mitigating it server-side by patching-out support for (or at least: the telegraphing of support for!) it; that’s pretty clever.
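For anybody who hasn't come across the idea, here's a toy Python model of it. This is a simplification for illustration, not OpenSSH's actual implementation: the function name `obscure_timing`, the fixed 20ms interval (borrowed from the packet spacing observed above) and the fixed count of trailing chaff packets are all assumptions of mine. Real keystrokes are held until the next tick of a fixed-interval clock, and every tick without a keystroke sends a chaff packet instead, so an eavesdropper sees only evenly spaced packets.

```python
def obscure_timing(keystroke_times_ms, interval_ms=20, trailing_chaff=3):
    """Return (send_time_ms, is_real) pairs on a fixed-interval grid.

    Each real keystroke is delayed to the next tick; ticks with nothing
    to send carry chaff. A few chaff packets follow the final keystroke
    so that the end of typing is not obvious either.
    """
    pending = sorted(keystroke_times_ms)
    if not pending:
        return []
    packets = []
    send_time = pending[0]
    next_key = 0
    chaff_after_last = 0
    while next_key < len(pending) or chaff_after_last < trailing_chaff:
        if next_key < len(pending) and pending[next_key] <= send_time:
            packets.append((send_time, True))   # a real keystroke
            next_key += 1
        else:
            packets.append((send_time, False))  # chaff
            if next_key == len(pending):
                chaff_after_last += 1
        send_time += interval_ms
    return packets


if __name__ == "__main__":
    # Three keystrokes with very different gaps between them.
    for send_time, is_real in obscure_timing([0, 35, 180]):
        print(send_time, "key" if is_real else "chaff")
```

It also shows where the overhead comes from: in this model, three keystrokes turn into thirteen packets.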

Altogether this is a wonderful piece demonstrating the whole “engineer mindset”. Detecting a problem, identifying it, understanding it, fixing it, all tied-up in an engaging narrative.

And after playing with his earlier work, ssh tiny.christmas – which itself inspired me to learn a little Bubble Tea/Wish (I’ve got Some Ideas™️) – I’m quite excited to see where this new ssh-based project of Royalty’s is headed!

Still at MegaConLive. I’ve not done this kind of con before (and still wouldn’t, were it not for my tweenager and her various obsessions). Not my jam, and that’s fine.

But if there’s one thing for which I can sing its praises: everybody we’ve met is super friendly and nice. Sure, you can loudly telegraph your fandoms and identities via cosplay, accessories, masks, badges, bracelets or whatever… but it’s also just a friendly community of folks to just talk to.

The fashion choices are, more than anything, just an excuse to engage: a way to say “hey, here’s a conversation starter if you’d like to talk to me!”

Overheard a conversation between my kid and another of a similar age, and there was a heartwarming moment where the other kid said, “oh wow, I thought I was the only one!” Adorbs.

My 12-year-old’s persuaded me to take her to MegaConLive London this weekend.

As somebody who doesn’t pay much attention to the pop culture circles represented by such an event (and hasn’t for 15+ years, or whenever it was that Asdfbook came out?)… have you got any advice for me, Internet?

This is a repost promoting content originally published elsewhere. See more things Dan's reposted.

…

…

What this tells me?

Well, quite a lot, actually. It tells me that there’s loads of you fine people reading the content on this site, which is very heart-warming. It also tells me that RSS is by far the main way people consume my content. Which is also fantastic, as I think RSS is very important and should always be a first class citizen when it comes to delivering content to people.

…

I didn’t get a chance to participate in Kev’s survey because, well, I don’t target “RSS Zero” and I don’t always catch up on new articles – even by authors I follow closely – until up to a few weeks after they’re published1. But needless to say, I’d have been in the majority: I follow Kev via my feed reader2.

But I was really interested by this approach to understanding your readership: like Kev, I don’t run any kind of analytics on my personal sites. But he’s onto something! If you want to learn about people, why not just ask them?

Okay, there’s going to be a bias: maybe readers who subscribe by RSS are simply more-likely to respond to a survey? Or are more-likely to visit new articles quickly, which was definitely a factor in this short-lived survey? It’s hard to be certain whether these or other factors might have thrown-off Kev’s results.

But then… what isn’t biased? Were Kev running, say, Google Analytics (or Fathom, or Strike, or Hector, or whatever)… then I wouldn’t show up in his results because I block those trackers3 – another, different, kind of bias.

We can’t dodge such bias: not by using popular analytics platforms, and not by surveying users. But one of these two options is, at least, respectful of your users’ privacy and bandwidth.

I’m tempted to run a similar survey myself. I might wait until after my long-overdue redesign – teased here – launches, though. Although perhaps that’s just a procrastination stemming from my insecurity that I’ll hear, like, an embarrassingly-low number of responses like three or four and internalise it as failing some kind of popularity contest4! Needs more thought.

1 I’m happy with this approach: I enjoy being able to treat my RSS reader as sort-of a “magazine”, using my categorisations of feeds – which are partially expressed on my Blogroll page – as a theme. Like: “I’m going to spend 20 minutes reading… tech blogs… or personal blogs by people I know personally… or indieweb-centric content… or news (without the sports, of course)…” This approach makes consuming content online feel especially deliberate and intentional: very much like being in control of what I read and when.

2 In fact, it’s by doing so – with a little help from Matthias Pfefferle – that I was inspired to put a “thank you” message in my RSS feed, among other “secret” features!

3 In fact, I block all third-party JavaScript (and some first-party JavaScript!) except where explicitly permitted, but even for sites that I do allow to load all such JavaScript I still have to manually enable analytics trackers if I want them, which I don’t. Also… I sandbox almost all cookies, and I treat virtually all persistent cookies as session cookies and I delete virtually all session cookies 15 seconds after I navigate away from its sandbox domain or close its tab… so I’m moderately well-anonymised even where I do somehow receive a tracking cookie.

4 Perhaps something to consider after things have gotten easier and I’ve caught up with my backlog a bit.

I just needed to spin up a new PHP webserver and I was amazed how fast and easy it was, nowadays. I mean: Caddy already makes it pretty easy, but I was delighted to see that, since the last time I did this, the default package repositories had 100% of what I needed!

Apart from setting the hostname, creating myself a user and adding them to the sudo group, and reconfiguring sshd to my preference, I’d done nothing on this new server. And then to set up a fully-functioning PHP-powered webserver, all I needed to run (for a domain “example.com”) was:

    sudo apt update && sudo apt upgrade -y
    sudo apt install -y caddy php8.4-fpm
    sudo mkdir -p /var/www/example.com
    printf "example.com {\n" | sudo tee /etc/caddy/Caddyfile
    printf " root * /var/www/example.com\n" | sudo tee -a /etc/caddy/Caddyfile
    printf " encode zstd gzip\n" | sudo tee -a /etc/caddy/Caddyfile
    printf " php_fastcgi unix//run/php/php8.4-fpm.sock\n" | sudo tee -a /etc/caddy/Caddyfile
    printf " file_server\n" | sudo tee -a /etc/caddy/Caddyfile
    printf "}\n" | sudo tee -a /etc/caddy/Caddyfile
    sudo service caddy restart

After that, I was able to put an index.php file into /var/www/example.com and it just worked.
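The printf lines make it a little hard to see what actually ends up in /etc/caddy/Caddyfile, so here's a small Python sketch that renders the same six lines for any domain. The helper `render_caddyfile` and its `php_version` parameter are hypothetical conveniences of mine, not anything Caddy provides, and the four-space indentation is purely cosmetic.

```python
def render_caddyfile(domain, php_version="8.4"):
    """Build the text of a minimal Caddyfile for a PHP-FPM backed site."""
    lines = [
        f"{domain} {{",
        f"    root * /var/www/{domain}",
        "    encode zstd gzip",
        f"    php_fastcgi unix//run/php/php{php_version}-fpm.sock",
        "    file_server",
        "}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Print the config rather than writing it, so nothing on disk changes.
    print(render_caddyfile("example.com"), end="")
```

Printing the result and comparing it with the file the commands above produce is a quick way to check that the two agree before using this for a second domain.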

And when I say “just worked”, I mean with all the bells and whistles you ought to expect from Caddy. HTTPS came as standard (with a solid Qualys grade). HTTP/3 was supported with a 0-RTT handshake.

Mind blown.

Inspired by a conversation with Elle (@e11e@mastodon.social), I expanded my FreshRSS-to-blogroll generator to support injecting 88×31 buttons (where I have them) for folks I follow.

There’s still a way to go, but my blogroll’s looking a little prettier as a result.

As I lay in bed the other night, I became aware of an unusually-bright LED, glowing in the corner of my room1. Lying still in the dark, I noticed that looking directly at the light meant that I couldn’t see it… but when I looked straight ahead – not at it – I could make it out.

This phenomenon seems to be most-pronounced when you’re using a single eye to look at something small and pointlike (like an LED), and where there’s an obstacle closer to your eye than to the thing you’re looking at. But it’s still a little spooky2.

It’s strange how sometimes you might be less-able to see something that you’re looking directly at… than something that’s only in your peripheral vision.

I’m now at six months since I started working for Firstup.3 And as I continue to narrow my focus on the specifics of the company’s technology, processes, and customers… I’m beginning to lose sight of some of the things that were in my peripheral vision.

I’m a big believer in the idea that folks who are new to your group (team, organisation, whatever) have a strange superpower that fades over time: the ability to look at “how you work” as an outsider and bring new ideas. It requires a certain boldness to not just accept the status quo but to ask “but why do we do things this way?”. Sure, the answer will often be legitimate and unchallengeable, but by using your superpower and raising the question you create a chance of bringing valuable change.

That superpower has a sweet spot. A point at which a person knows enough about their new role that they can answer the easy questions, but not so late that they’ve become so accustomed to the “quirks” that they can’t see them any longer. The point at which your peripheral vision still reveals where there’s room for improvement, because you’re not yet so-focussed on the routine that you overlook the objectively-unusual.

I feel like I’m close to that sweet spot, right now, and I’m enjoying the opportunity to challenge some of Firstup’s established patterns. Maybe there are things I’ve learned or realised over the course of my career that might help make my new employer stronger and better? Whether or not that turns out to be the case, I’m enjoying poking at the edges to find out!

1 The LED turned out to be attached to a laptop charger that was normally connected in such a way that it wasn’t visible from my bed.

2 Like the first time you realise that you have a retinal blind spot and that your brain is “filling in” the gaps based on what’s around it, like Photoshop’s “smart remove” tool is running within your head.

3 You might recall that I wrote about my incredibly-efficient experience of the recruitment process at Firstup.

This is a repost promoting content originally published elsewhere. See more things Dan's reposted.

Honestly I just wanted to play around with gradients. But gradients without anything on the horizon lack something, so I added horses. Since I can’t draw horses, now you can draw them. And watch them parade across the screen alongside horses drawn by people you probably wouldn’t like. Or maybe you would, how should I know?!

…

I love a good (by which I mean stupid) use of a .horse domain name. I’m not sure anything will ever beat endless.horse, but gradient.horse might be a close second.

Draw a horse. Watch it get animated and run wild and free with the horses that other people have drawn. That is all.

Keep the Internet fun and weird, people.

This checkin to GC8YPVJ Finn1 reflects a geocaching.com log entry. See more of Dan's cache logs.

Last time I was caching up this neck of the woods was December 2018 (GLXJJWGN, GLXJJX7P). And despite the fact that I was staying in different accommodation, in a different month of the year, I was still in the vicinity for the exact same reason: attending the Christmas party of my nonprofit.

By longstanding tradition, I get up early in the morning at these kinds of events – well before sunrise, at this point in the year! – for a quick walk to a nearby geocache, which today meant this one! To make my hunt in the dark easier I scoped the GZ on Google Street View first and caught sight of a likely hiding spot which later turned out to be exactly right!

It was soon found – the coordinates aren’t great but the hint sent me right to the object I’d scouted earlier – but extraction was challenging – I needed to manufacture a tool from nearby dead wood with which to pry it from its hiding place!

TFTC.