Proud to say that danq.me is the newest site to be admitted into Kev Quirk’s 512kb club. Let’s keep the web lean!

Author: Dan Q

RSS Zero isn’t the path to RSS Joy

Feed overload is real

The week before last, Katie shared with me that article from last month, Who killed Google Reader? I’d read it before so I didn’t bother clicking through again, but we did end up chatting about RSS a bit1.

Katie “abandoned feeds a few years ago” because they were “regularly ending up with 200+ unread items that felt overwhelming”.

Conversely: I think that dropping your feed reader because there’s too much to read is… solving the wrong problem.

Dave Rupert last week wrote about his feed reader’s “unread” count having grown to a mammoth 2,000+ items, and his plan to reduce that.

I think that he, like Katie, might be looking at his reader in a different way than I do mine.

RSS is not email!

I’ve been in the position that Katie and David describe: of feeling overwhelmed by the sheer volume of unread items. And I know others have, too. So let me share something I’ve learned sooner:

There’s nothing special about reaching Inbox Zero in your feed reader.

It’s not noble nor enlightened to get to the bottom of your “unread” list.

Your 👏 feed 👏 reader 👏 is 👏 not 👏 an 👏 email 👏 client. 👏

The idea of Inbox Zero as applied to your email inbox is about productivity. Any message in your email might be something that requires urgent action, and you won’t know until you filter through and categorise.

But your RSS reader isn’t (shouldn’t be?) there to add to your to-do list. Your RSS reader is a list of things you might like to read. In an ideal world, reaching “RSS Zero” would mean that you’ve seen everything on the Internet that you might enjoy. That’s not enlightened; that’s sad!

Use RSS for joy

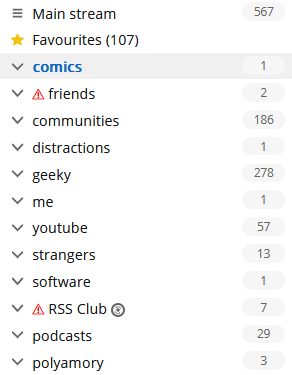

My RSS reader is a place of joy, never of stress. I’ve tried to boil down the principles that make it so, and here they are:

- Zero is not the target.

  The numbers are there to inspire you about how much there is “out there” for you, not to enumerate how much work you have to do.

- Group your feeds by importance.

  Your feed reader probably lets you group (folder, tag…) your feeds, so you can easily check-in on what you care about and leave other feeds for a rainy day.2 This is good.

- Don’t read every article.

  Your feed reader gives you the convenience of keeping content in one place, but you’re not obligated to read every single one. If something doesn’t interest you, mark it as read and move on. No judgement.

- Keep things for later.

  Something you want to read, but not now? Find a way to “save for later” to get it out of your main feed so you don’t have to scroll past it every day! Star it or tag it3 or push it to your link-saving or note-taking app. I use a link shortener which then feeds back into my feed reader into a “for later” group!

- Let topical content expire.

  Have topical/time-dependent feeds (general news media, some social media etc.)? Have your reader “purge” unread articles after a time. I have my subscription to BBC News headlines expire after 5 days: if I’ve taken that long to read a headline, it might as well disappear.4

- Use your feed reader deliberately.

  You don’t need popup notifications (a new article’s probably already up to an hour stale by the time it hits your reader). We’re all already slaves to notifications! Visit your reader when it suits you. I start and end every day in mine; most days I hit it again a couple of other times. I don’t need a notification: there’s always new content. The reader keeps track of what I’ve not looked at.

- It’s not just about text.

  Don’t limit your feed reader to just text. Podcasts are nothing more than RSS feeds with attached audio files; you can keep track in your reader if you like. Most video platforms let you subscribe to a feed of new videos on a channel or playlist basis, so you can e.g. get notified about YouTube channel updates without having to fight with The Algorithm. Features like XPath Scraping in FreshRSS let you subscribe to services that don’t even have feeds: to watch the listings of dogs on local shelter websites when you’re looking to adopt, for example.

- Do your reading in your reader.

  Your reader respects your preferences: colour scheme, font size, article ordering, etc. It doesn’t nag you with newsletter signup popups, cookie notices, or ads. Make the most of that. Some RSS feeds try to disincentivise this by providing only summary content, but a good feed reader can work around this for you, fetching actual content in the background.5

- Use offline time to catch up on your reading.

  Some of the best readers support offline mode. I find this fantastic when I’m on an aeroplane, because I can catch up on all of the interesting articles I’d not had time to yet while grounded, and my reading will get synchronised when I touch down and disable flight mode.

- Make your reader work for you.

  A feed reader is a tool that works for you. If it’s causing you pain, switch to a different tool6, or reconfigure the one you’ve got. And if the way you find joy from RSS is different from me, that’s fine: this is a personal tool, and we don’t have to have the same answer.
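If your reader can't expire topical content for you, the idea is simple enough to sketch. The following is a minimal Python illustration of an "unread items expire after N days" rule; the `Entry` class, the `MAX_UNREAD_AGE` table, and the feed names are all hypothetical, and no real reader's storage or API is being described here.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Entry:
    """A hypothetical feed entry; real readers store far more than this."""
    feed: str
    title: str
    published: datetime
    read: bool = False


# Hypothetical per-feed expiry policy: feeds not listed here never expire.
MAX_UNREAD_AGE = {"BBC News": timedelta(days=5)}


def purge_stale(entries, now):
    """Drop unread entries that have outlived their feed's expiry window."""
    kept = []
    for entry in entries:
        limit = MAX_UNREAD_AGE.get(entry.feed)
        expired = (
            limit is not None
            and not entry.read
            and now - entry.published > limit
        )
        if not expired:
            kept.append(entry)
    return kept


now = datetime(2023, 8, 1, 12, 0)
entries = [
    Entry("BBC News", "Old headline", now - timedelta(days=6)),
    Entry("BBC News", "Fresh headline", now - timedelta(days=1)),
    Entry("A friend's blog", "Old but evergreen", now - timedelta(days=90)),
]
remaining = purge_stale(entries, now)
print([e.title for e in remaining])
```

Only the six-day-old headline is dropped: the friend's blog has no expiry rule, so its old post sticks around for a rainy day.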

And if you’d like to put those tips in your RSS reader to digest later or at your own pace, you can: here’s an RSS feed containing (only) these RSS tips!

Footnotes

1 You’d be forgiven for thinking that RSS was my favourite topic, given that so-far-this-year I’ve written about improving WordPress’s feeds, about mathematical quirks in FreshRSS, on using XPath scraping as an RSS alternative (twice), and the joy of getting notified when a vlog channel is resurrected (thanks to RSS). I swear I have other interests.

2 If your feed reader doesn’t support any kind of grouping, get a better reader.

3 If your feed reader doesn’t support any kind of marking/favouriting/tagging of articles, get a better reader.

4 If your feed reader doesn’t support customisable expiry times… well that’s not too unusual, but you might want to consider getting a better reader.

5 FreshRSS calls the feature that fetches actual post content from the resulting page “Article CSS selector on original website”, which is a bit of a mouthful, but you can see what it’s doing. If your feed reader doesn’t support fetching full content… well, it’s probably not that big a deal, but it’s a good nice-to-have if you’re shopping around for a reader, in my opinion.

6 There’s so much choice in feed readers, and migrating between them is (usually) very easy, so everybody can find the best choice for them. Feedly, Inoreader, and The Old Reader are popular, free, and easy-to-use if you’re looking to get started. I prefer a selfhosted tool so I use the amazing FreshRSS (having migrated from Tiny Tiny RSS). Here’s some more tips on getting started. You might prefer a desktop or mobile tool, or even something exotic: part of the beauty of RSS feeds is they’re open and interoperable, so if for example you love using Slack, you can use Slack to push feed updates to you and get almost all the features you need to do everything in my list, including grouping (using channels) and saving for later (using Slackbot/”remind me about this”). Slack’s a perfectly acceptable feed reader for some people!

Better WordPress RSS Feeds

I’ve made a handful of tweaks to my RSS feed which I feel improve upon WordPress’s default implementation, at least in my use-case.1 In case any of these improvements help you, too, here’s a list of them:

Post Kinds in Titles

Since 2020, I’ve decorated post titles by prefixing them with the “kind” of post they are (courtesy of the Post Kinds plugin). I’ve already written about how I do it, if you’re interested.

RSS Only posts

A minority of my posts are – initially, at least – publicised only via my RSS feed (and places that are directly fed by it, like email subscribers). I use a tag to identify posts to be hidden in this way. I’ve written about my implementation before, but I’ve since made a couple of additional improvements:

- Suppressing the tag from tag clouds, to make it harder to accidentally discover these posts by tag-surfing,

- Tweaking the title of such posts when they appear in feeds (using the same technique as above), so that readers know when they’re seeing “exclusive” content, and

- Setting an X-Robots-Tag: noindex, nofollow HTTP header when viewing such a tag or post, to discourage search engines (code for this not shown below because it’s so very specific to my theme that it’s probably no use to anybody else!).

```php
// 1. Suppress the "rss club" tag from tag clouds/the full tag list
function rss_club_suppress_tags_from_display( string $tag_list, string $before, string $sep, string $after, int $post_id ): string {
  foreach(['rss-club'] as $tag_to_suppress){
    $regex = sprintf( '/<li>[^<]*?<a [^>]*?href="[^"]*?\/%s\/"[^>]*?>.*?<\/a>[^<]*?<\/li>/', $tag_to_suppress );
    $tag_list = preg_replace( $regex, '', $tag_list );
  }
  return $tag_list;
}
add_filter( 'the_tags', 'rss_club_suppress_tags_from_display', 10, 5 );

// 2. In feeds, tweak title if it's an RSS exclusive
function rss_club_add_rss_only_to_rss_post_title( $title ){
  $post_tag_slugs = array_map(function($tag){ return $tag->slug; }, wp_get_post_tags( get_the_ID() ));
  if ( ! in_array( 'rss-club', $post_tag_slugs ) ) return $title; // if we don't have an rss-club tag, drop out here
  return trim( "{$title} [RSS Exclusive!]" );
}
add_filter( 'the_title_rss', 'rss_club_add_rss_only_to_rss_post_title', 6 );
```
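If you're not on WordPress, the tag-suppression trick above is just a regular expression applied to an already-rendered list. Here's a rough Python equivalent of that first function, as a sketch only: `suppress_tag` and the example.com URLs are made up for illustration, and it assumes your tag list is rendered as `<li>` items wrapping links whose URLs end in the tag's slug.

```python
import re


def suppress_tag(tag_list_html: str, slug: str) -> str:
    """Remove any <li> whose link URL ends in /<slug>/ from a rendered tag list."""
    pattern = (
        r'<li>[^<]*?<a [^>]*?href="[^"]*?/%s/"[^>]*?>.*?</a>[^<]*?</li>'
        % re.escape(slug)
    )
    return re.sub(pattern, "", tag_list_html)


# Hypothetical rendered tag list with one ordinary tag and one to hide.
html = (
    '<ul><li><a href="https://example.com/tag/rss/" rel="tag">rss</a></li>'
    '<li><a href="https://example.com/tag/rss-club/" rel="tag">rss club</a></li></ul>'
)
cleaned = suppress_tag(html, "rss-club")
print(cleaned)
```

The `[^<]` and `[^>]` character classes are what stop the match from spilling out of one list item and swallowing its neighbours.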

Adding a stylesheet

Adding a stylesheet to your feeds can make them much friendlier to beginner users (which helps drive adoption) without making them much less-convenient for people who know how to use feeds already. Darek Kay and Terence Eden both wrote great articles about this just earlier this year, but I think my implementation goes a step further.

In addition to adding some “Q” branding, I made tweaks to make it work seamlessly with both my RSS and Atom feeds by using two <xsl:for-each> blocks and exploiting the fact that the two standards don’t overlap in their root namespaces. Here’s my full XSLT; you need to override your feed template as Terence describes to use it, but mine can be applied to both RSS and Atom.2
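The "two loops, only one of which ever matches" idea isn't specific to XSLT. As an illustration (this is not my stylesheet, just the same trick sketched in Python's standard library, with made-up sample feeds), you can walk both an RSS-shaped path and an Atom-shaped path over the same document and rely on the namespaces to keep them apart:

```python
import xml.etree.ElementTree as ET

ATOM_NS = "http://www.w3.org/2005/Atom"


def entry_titles(feed_xml: str) -> list:
    """Return entry titles from either an RSS 2.0 or an Atom document."""
    root = ET.fromstring(feed_xml)
    titles = []
    # RSS 2.0: un-namespaced <rss><channel><item><title>.
    # Finds nothing in an Atom document.
    for item in root.findall("./channel/item"):
        titles.append(item.findtext("title"))
    # Atom: <feed><entry><title>, all in the Atom namespace.
    # Finds nothing in an RSS document.
    for entry in root.findall(f"./{{{ATOM_NS}}}entry"):
        titles.append(entry.findtext(f"{{{ATOM_NS}}}title"))
    return titles


# Minimal sample documents, invented for this example.
rss = (
    '<rss version="2.0"><channel><title>Blog</title>'
    "<item><title>Hello RSS</title></item></channel></rss>"
)
atom = (
    '<feed xmlns="http://www.w3.org/2005/Atom"><title>Blog</title>'
    "<entry><title>Hello Atom</title></entry></feed>"
)
print(entry_titles(rss), entry_titles(atom))
```

Because RSS 2.0's core elements have no namespace and Atom's all live in one, neither loop needs a "which format is this?" check first.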

I’ve still got more I’d like to do with this, for example to take advantage of the thumbnail images I attach to posts. On which note…

Thumbnail images

When I first started offering email subscription options I used Mailchimp’s RSS-to-email service, which was… okay, but not great, and I didn’t like the privacy implications that came along with it. Mailchimp supports adding thumbnails to your email template from your feed, but WordPress themes don’t by-default provide the appropriate metadata to allow them to do that. So I installed Jordy Meow’s RSS Featured Image plugin which did it for me.

```xml
<item>
  <title>[Checkin] Geohashing expedition 2023-07-27 51 -1</title>
  <link>https://danq.me/2023/07/27/geohashing-expedition-2023-07-27-51-1/</link>
  ...
  <media:content url="https://bcdn.danq.me/_q23u/2023/07/20230727_141710-1024x576.jpg" medium="image" />
  <media:description>Dan, wearing a grey Three Rings hoodie, carrying French Bulldog Demmy, standing on a path with trees in the background.</media:description>
</item>
```

During my little redesign earlier this year I decided to go two steps further: (1) ditching the plugin and implementing the functionality directly into my theme (it’s really not very much code!), and (2) adding not only a <media:content medium="image" url="..." /> element but also a <media:description> providing the default alt-text for that image. I don’t know if any feed readers (correctly) handle this accessibility-improving feature, but my stylesheet above will, some day!

Here’s how that’s done:

```php
function rss_insert_namespace_for_featured_image() {
  echo "xmlns:media=\"http://search.yahoo.com/mrss/\"\n";
}

function rss_insert_featured_image( $comments ) {
  global $post;
  $image_id = get_post_thumbnail_id( $post->ID );
  if( ! $image_id ) return;
  $image = get_the_post_thumbnail_url( $post->ID, 'large' );
  $image_url = esc_url( $image );
  $image_alt = esc_html( get_post_meta( $image_id, '_wp_attachment_image_alt', true ) );
  $image_title = esc_html( get_the_title( $image_id ) );
  $image_description = empty( $image_alt ) ? $image_title : $image_alt;
  if ( !empty( $image ) ) {
    echo <<<EOF
<media:content url="{$image_url}" medium="image" />
<media:description>{$image_description}</media:description>
EOF;
  }
}

add_action( 'rss2_ns', 'rss_insert_namespace_for_featured_image' );
add_action( 'rss2_item', 'rss_insert_featured_image' );
```
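To see what the consuming side of this might look like, here's a hedged sketch of how a feed reader (or a script of your own) could pick the thumbnail and its alt-text back out of an item. It's Python rather than PHP, `thumbnail` is a made-up helper, and the sample item is abbreviated; I'm not claiming any particular reader works this way.

```python
import xml.etree.ElementTree as ET

# Prefix-to-namespace map for Media RSS elements.
MEDIA_NS = {"media": "http://search.yahoo.com/mrss/"}

# An abbreviated item, with the namespace declared inline so that it parses
# on its own (in a real feed the declaration sits on the root element).
ITEM_XML = """
<item xmlns:media="http://search.yahoo.com/mrss/">
  <title>[Checkin] Geohashing expedition 2023-07-27 51 -1</title>
  <media:content url="https://bcdn.danq.me/_q23u/2023/07/20230727_141710-1024x576.jpg" medium="image" />
  <media:description>Dan, wearing a grey Three Rings hoodie.</media:description>
</item>
"""


def thumbnail(item):
    """Return (url, alt_text) for an item's image, or None if it has none."""
    content = item.find("media:content[@medium='image']", MEDIA_NS)
    if content is None:
        return None
    alt = item.findtext("media:description", default="", namespaces=MEDIA_NS)
    return content.get("url"), alt


url, alt = thumbnail(ET.fromstring(ITEM_XML.strip()))
print(url)
print(alt)
```

Items without a featured image simply return `None`, mirroring the early `return` in the PHP.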

So there we have it: a little digital gardening, and four improvements to WordPress’s default feeds.

RSS may not be as hip as it once was, but little improvements can help new users find their way into this (enlightened?) way to consume the Web.

If you’re using RSS to follow my blog, great! If it’s not for you, perhaps pick your favourite alternative way to get updates, from options including email, Telegram, the Fediverse (e.g. Mastodon), and more…

Update 4 September 2023: More-recently, I’ve improved WordPress RSS feeds by preventing them from automatically converting emoji into images.

Footnotes

1 The changes apply to the Atom feed too, for anybody of such an inclination. Just assume that if I say RSS I’m including Atom, okay?

2 The experience of writing this transformation/stylesheet also gave me yet another opportunity to remember how much I hate working with XSLTs. This time around, in addition to the normal namespace issues and headscratching syntax, I had to deal with the fact that I initially tried to use a feature from XSLT version 2.0 (a 22-year-old version) only to discover that all major web browsers still only support version 1.0 (specified last millennium)!

Not the Isle of Man

This week, Ruth and I didn’t go to the Isle of Man.

It’s (approximately) our 0x10th anniversary1, and, struggling to find a mutually-convenient window in our complex work schedules, we’d opted to spend a few days exploring the Isle of Man. Everything was fine, until we were aboard the ‘plane.

Once everybody was seated and ready to take off, the captain stood up at the front of the ‘plane and announced that it had been cancelled2.

The Isle of Man closes, he told us (we assume he just meant the airport) and while they’d be able to get us there before it did, there wouldn’t be sufficient air traffic control crew to allow them to get back (to, presumably, the cabin crews’ homes in London).

Back at the terminal we made our way through border control (showing my passport despite having not left the airport, never mind the country) and tried to arrange a rebooking, only to be told that they could only manage to get us onto a flight that’d be leaving 48 hours later, most of the way through our mini-break, so instead we opted for a refund and gave up.3

We resolved to try to do the same kinds of things that we’d hoped to do on the Isle of Man, but closer to home: some sightseeing, some walks, some spending-time-together. You know the drill.

A particular highlight of our trip to the North Leigh Roman Villa – one of those “on your doorstep so you never go” places – was when the audio tour advised us to beware of the snails when crossing what was once the villa’s central courtyard.

At first we thought this was an attempt at humour, but it turns out that the Romans brought with them to parts of Britain a variety of large edible snail – Helix pomatia – which can still be found in concentration in parts of the country where they were widely farmed.4

There’s a nice little geocache near the ruin, too, which we were able to find on our way back.

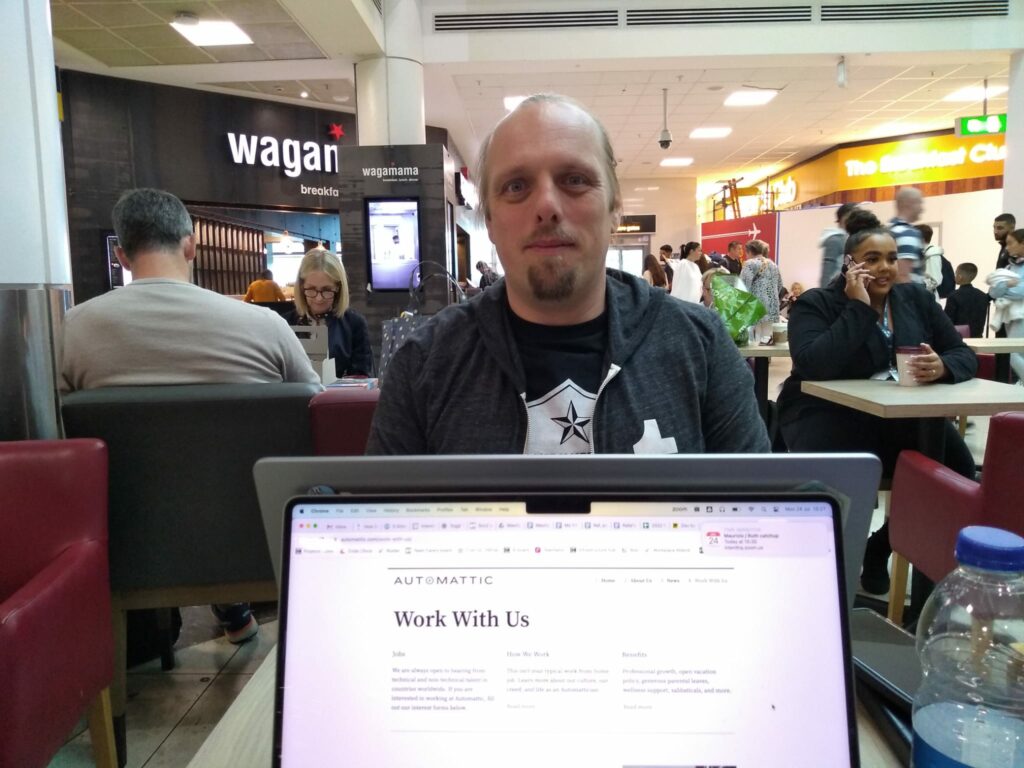

Before you think that I didn’t get anything out of my pointless hours at the airport, though, it turns out I’d brought home a souvenir… a stinking cold! How about that for efficiency: I got all the airport-germs, but none of the actual air travel. By mid-afternoon on Tuesday I was feeling pretty rotten, and it only got worse from then on.

I’m confident that Ruth didn’t mind too much that I spent Wednesday mostly curled up in a sad little ball, because it let her get on with applying to a couple of jobs she’s interested in. Because it turns out there was a third level of disaster to this week: in addition to our ‘plane being cancelled and me getting sick, this week saw Ruth made redundant as her employer sought to dig itself out of a financial hole. A hat trick of bad luck!

As Ruth began to show symptoms (less-awful than mine, thankfully) of whatever plague had befallen me, we bundled up in bed and made not one but two abortive attempts at watching a film together:

- Spin Me Round, which looked likely to be a simple comedy that wouldn’t require much effort by my mucus-filled brain, but turned out to be… I’ve no idea what it was supposed to be. It’s not funny. It’s not dramatic. The characters are, for the most part, profoundly uncompelling. There’s the beginnings of what looks like it was supposed to be a romantic angle but it mostly comes across as a creepy abuse of power. We watched about half and gave up.

- Ant-Man and the Wasp: Quantumania, because we figured “how bad can a trashy MCU sequel be anyway; we know what to expect!” But we couldn’t connect to it at all. Characters behave in completely unrealistic ways and the whole thing feels like it was produced by somebody who wanted to be making one of the new Star Wars films, but with more CGI. We watched about half and gave up.

As Thursday drew on and the pain in my head and throat was replaced with an unrelenting cough, I decided I needed some fresh air.

So while Ruth collected the shopping, I found my way to the 2023-07-27 51 -1 geohashpoint. And came back wheezing and in need of a lie-down.

I find myself wondering if (despite three jabs and a previous infection) I’ve managed to contract covid again, but I haven’t found the inclination to take a test. What would I do differently if I do have it, now, anyway? I feel like we might be past that point in our lives.

All in all, probably the worst anniversary celebration we’ve ever had, and hopefully the worst we’ll ever have. But a fringe benefit of a willingness to change bases is that we can celebrate our 10th5 anniversary next year, too. Here’s to that.

Footnotes

1 Because we’re that kind of nerds, we count our anniversaries in base 16 (0x10 is 16), or – sometimes – in whatever base is mathematically-pleasing and gives us a nice round number. It could be our 20th anniversary, if you prefer octal.

2 I’ve been on some disastrous aeroplane journeys before, including one just earlier this year which was supposed to take me from Athens to Heathrow, got re-arranged to go to Gatwick, got delayed, ran low on fuel, then instead had to fly to Stansted, wait on the tarmac for a couple of hours, then return to Gatwick (from which I travelled – via Heathrow – home). But this attempt to get to the Isle of Man was somehow, perhaps, even worse.

3 Those who’ve noticed that we were flying EasyJet might rightly give a knowing nod at this point.

4 The warning to take care not to tread on them is sound legal advice: this particular variety of snail is protected under the Wildlife and Countryside Act 1981!

5 Next year will be our 10th anniversary… in base 17. Eww, what the hell is base 17 for and why does it both offend and intrigue me so?
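For anybody who wants to check the anniversary arithmetic in these footnotes, here's a tiny Python sketch. The `to_base` helper is mine, written just for this illustration; it does ordinary repeated division.

```python
def to_base(n: int, base: int) -> str:
    """Write a non-negative integer in the given base (2 to 36)."""
    digits = "0123456789abcdefghijklmnopqrstuvwxyz"
    if n == 0:
        return "0"
    out = []
    while n > 0:
        n, remainder = divmod(n, base)
        out.append(digits[remainder])
    return "".join(reversed(out))


print(to_base(16, 16))  # this year, in hexadecimal: 10
print(to_base(16, 8))   # this year, in octal: 20
print(to_base(17, 17))  # next year, in base 17: 10
```

Any anniversary n is a "10th" anniversary in base n, which is exactly the loophole being exploited.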

Geohashing expedition 2023-07-27 51 -1

This checkin to geohash 2023-07-27 51 -1 reflects a geohashing expedition. See more of Dan's hash logs.

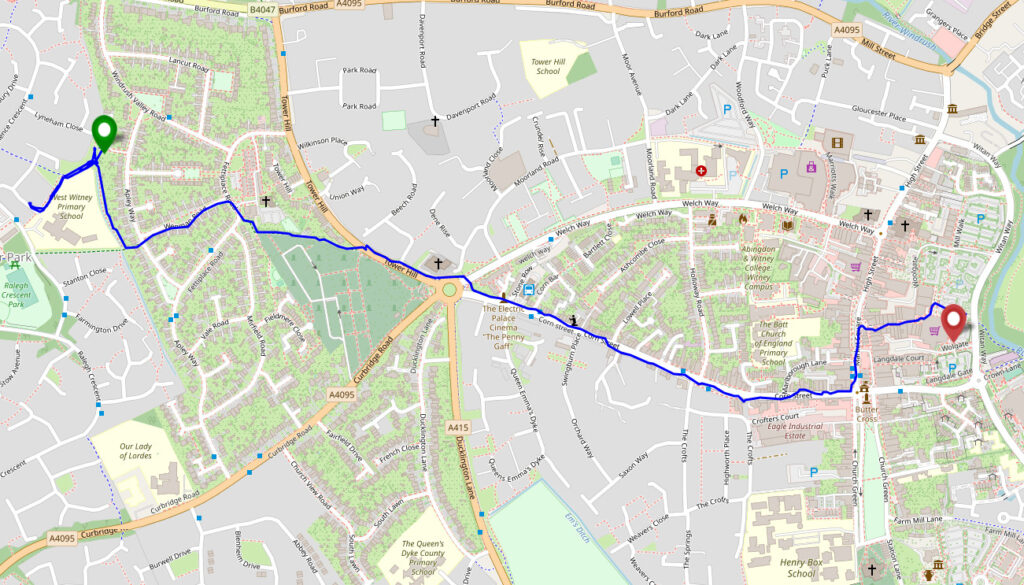

Location

Northern boundary hedge of West Witney Primary School, Witney

Participants

Expedition

I wasn’t supposed to be here. I was supposed to be on the Isle of Man with my partner, celebrating our 0x10th anniversary. But this week’s been a week of disasters: my partner lost her job, our plane to the Isle of Man got cancelled, and then I got sick (most-likely, I got to catch airport germs from people I got to sit next to on an aircraft which was then cancelled before it had a chance to take off). So mostly this week I’ve been sat at home playing video games.

But the dog needed a walk, and my partner needed to go to the supermarket, so I had her drop me and the geopooch off in West Witney to find the hashpoint and then walk to meet them after she’d collected the shopping. I couldn’t find my GPSr, so I used my phone, and it was reporting low accuracy until I rebooted it, by which time I’d walked past the hashpoint and had to double-back, much to the doggo’s confusion.

I reached the hashpoint at 14:16 BST (and probably a few points before then, owing to my navigation failure). I needed to stand very close to the fence to get within the circle of uncertainty, but at least I didn’t have to reach through and into the school grounds.

Tracklog

My smartwatch kept a tracklog:

Photos

Dan Q found GCXWEX Villa View

This checkin to GCXWEX Villa View reflects a geocaching.com log entry. See more of Dan's cache logs.

Found with Ruth after coming out to explore the spectacular Roman villa. We were supposed to have been out on the Isle of Man celebrating our anniversary, but our ‘plane got cancelled, so we’ve opted for staying at home and doing local cycling expeditions instead. SL, TFTC.

Short-Term Blogging

There’s a perception that a blog is a long-lived, ongoing thing. That it lives with and alongside its author.1

But that doesn’t have to be true, and I think a lot of people could benefit from “short-term” blogging. Consider:

- Photoblogging your holiday, rather than posting snaps to social media

  You gain the ability to add context, crosslinking, and have permanent addresses (rather than losing everything to the depths of a feed). You can crosspost/syndicate to your favourite socials if that’s your poison.

- Blogging your studies, rather than keeping your notes to yourself

  Writing what you learn helps you remember it; writing what you learn in a public space helps others learn too and makes it easy to search for your discoveries later.2

- Recording your roleplaying, rather than just summarising each session to your fellow players

  My D&D group does this at levellers.blog! That site won’t continue to be updated forever – the party will someday retire or, more-likely, come to a glorious but horrific end – but it’ll always live on as a reminder of what we achieved.

One of my favourite examples of such a blog was 52 Reflect3 (now integrated into its successor The Improbable Blog). For 52 consecutive weeks my partner’s brother Robin blogged about adventures that took him out of his home in London and it was amazing. The project’s finished, but a blog was absolutely the right medium for it because now it’s got a “forever home” on the Web (imagine if he’d posted instead to Twitter, only for that platform to turn into a flaming turd).

I don’t often shill for my employer, but I genuinely believe that the free tier on WordPress.com is an excellent way to give a forever home to your short-term blog4. Did you know that you can type new.blog (or blog.new; both work!) into your browser to start one?

What are you going to write about?

Footnotes

1 This blog is, of course, an example of a long-term blog. It’s been going in some form or another for over half my life, and I don’t see that changing. But it’s not the only kind of blog.

2 Personally, I really love the serendipity of asking a web search engine for the solution to a problem and finding a result that turns out to be something that I myself wrote, long ago!

3 My previous posts about 52 Reflect: Challenge Robin, Twatt, Brixton to Brighton by Boris Bike, Ending on a High (and associated photo/note)

4 One of my favourite features of WordPress.com is the fact that it’s built atop the world’s most-popular blogging software and you can export all your data at any time, so there’s absolutely no lock-in: if you want to migrate to a competitor or even host your own blog, it’s really easy to do so!

Werewolves and Wanderer [Video]

This post is also available as an article. So if you'd rather read a conventional blog post of this content, you can!

This video accompanies a blog post of the same title. The content is mostly the same; the blog post contains a few extra elements (especially in the footnotes!). Enjoy whichever one you choose.

Also available on YouTube and on Facebook.

Werewolves and Wanderer


This blog post is also available as a video. Would you prefer to watch/listen to me tell you about the video game that had the biggest impact on my life?

Of all of the videogames I’ve ever played, perhaps the one that’s had the biggest impact on my life1 was: Werewolves and (the) Wanderer.2

This simple text-based adventure was originally written by Tim Hartnell for use in his 1983 book Creating Adventure Games on your Computer. At the time, it was common for computing books and magazines to come with printed copies of program source code which you’d need to re-type on your own computer, printing being many orders of magnitude cheaper than computer media.3

I’d been working my way through the operating manual for our microcomputer, trying to understand it all.5

![Scan of a ring-bound page from a technical manual. The page describes the use of the "INPUT" command, saying "This command is used to let the computer know that it is expecting something to be typed in, for example, the answer to a question". The page goes on to provide a code example of a program which requests the user's age and then says "you look younger than [age] years old.", substituting in their age. The page then explains how it was the use of a variable that allowed this transaction to occur.](https://bcdn.danq.me/_q23u/2023/07/cpc664-manual-input-command.png)


In particular, I found myself comparing Werewolves to my first attempt at a text-based adventure. Using what little I’d grokked of programming so far, I’d put together a series of passages (blocks of PRINT statements6) with choices (INPUT statements) that sent the player elsewhere in the story (using, of course, the long-considered-harmful GOTO statement), Choose-Your-Own-Adventure style.

Werewolves was… better.

Werewolves and Wanderer was my first lesson in how to structure a program.

Let’s take a look at a couple of segments of code that help illustrate what I mean (here’s the full code, if you’re interested):

```basic
10 REM WEREWOLVES AND WANDERER
20 GOSUB 2600:REM INTIALISE
30 GOSUB 160
40 IF RO<>11 THEN 30
50 PEN 1:SOUND 5,100:PRINT:PRINT "YOU'VE DONE IT!!!":GOSUB 3520:SOUND 5,80:PRINT "THAT WAS THE EXIT FROM THE CASTLE!":SOUND 5,200
60 GOSUB 3520
70 PRINT:PRINT "YOU HAVE SUCCEEDED, ";N$;"!":SOUND 5,100
80 PRINT:PRINT "YOU MANAGED TO GET OUT OF THE CASTLE"
90 GOSUB 3520
100 PRINT:PRINT "WELL DONE!"
110 GOSUB 3520:SOUND 5,80
120 PRINT:PRINT "YOUR SCORE IS";
130 PRINT 3*TALLY+5*STRENGTH+2*WEALTH+FOOD+30*MK:FOR J=1 TO 10:SOUND 5,RND*100+10:NEXT J
140 PRINT:PRINT:PRINT:END
...
2600 REM INTIALISE
2610 MODE 1:BORDER 1:INK 0,1:INK 1,24:INK 2,26:INK 3,18:PAPER 0:PEN 2
2620 RANDOMIZE TIME
2630 WEALTH=75:FOOD=0
2640 STRENGTH=100
2650 TALLY=0
2660 MK=0:REM NO. OF MONSTERS KILLED
...
3510 REM DELAY LOOP
3520 FOR T=1 TO 900:NEXT T
3530 RETURN
```

Ellipses (...) have been added for readability/your convenience.

What’s interesting about the code above? Well…

- The code for “what to do when you win the game” is very near the top. “Winning” is the default state. The rest of the adventure exists to obstruct that. In a language with enforced line numbering and no screen editor7, it makes sense to put fixed-length code at the top… saving space for the adventure to grow below.

- Two subroutines are called (the GOSUB statements):

  - The first sets up the game state: initialising the screen (2610), the RNG (2620), and player characteristics (2630–2660). This also makes it easy to call it again (e.g. if the player is given the option to “start over”). This subroutine goes on to set up the adventure map (more on that later).

  - The second starts on line 160: this is the “main game” logic. After it runs, each time, line 40 checks IF RO<>11 THEN 30. This tests whether the player’s location (RO) is room 11: if so, they’ve exited the castle and won the adventure. Otherwise, flow returns to line 30 and the “main game” subroutine happens again. This broken-out loop improves the readability and maintainability of the code.8

- A common subroutine is the “delay loop” (line 3520). It just counts to 900! On a known (slow) processor of fixed speed, this is a simpler way to put a delay in than relying on a real-time clock.
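If BASIC isn't your thing, the overall shape described above (initialise, repeat the "main game" step until the player reaches room 11, then score) might be sketched in Python like this. It's an illustration of the structure only: the dictionary keys are my own naming, starting in room 6 is an assumption based on the treasure-placement code skipping rooms 6 and 11, and `scripted_turn` is a stand-in for the real game logic on line 160 onwards, which isn't shown in this post.

```python
EXIT_ROOM = 11  # line 40: reaching room 11 means you've escaped the castle


def initialise():
    """Starting characteristics, as on lines 2630-2660 of the listing."""
    return {
        "room": 6,  # assumed starting room; not shown in the excerpt above
        "wealth": 75,
        "food": 0,
        "strength": 100,
        "tally": 0,
        "monsters_killed": 0,
    }


def score(state):
    """The scoring formula from line 130."""
    return (
        3 * state["tally"]
        + 5 * state["strength"]
        + 2 * state["wealth"]
        + state["food"]
        + 30 * state["monsters_killed"]
    )


def play(take_turn):
    """Lines 20-40: set up, then run the main-game step until the exit."""
    state = initialise()
    while state["room"] != EXIT_ROOM:
        take_turn(state)
    return state


# Stand-in for the "main game" subroutine: follow a fixed route to the exit.
scripted_rooms = iter([1, 2, 3, 5, 15, 14, 17, 16, 19, 9, 10, 11])


def scripted_turn(state):
    state["room"] = next(scripted_rooms)


final = play(scripted_turn)
print("YOUR SCORE IS", score(final))
```

Because this stand-in never changes strength, wealth, or anything else, the score is simply the starting stats run through the formula: 650.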

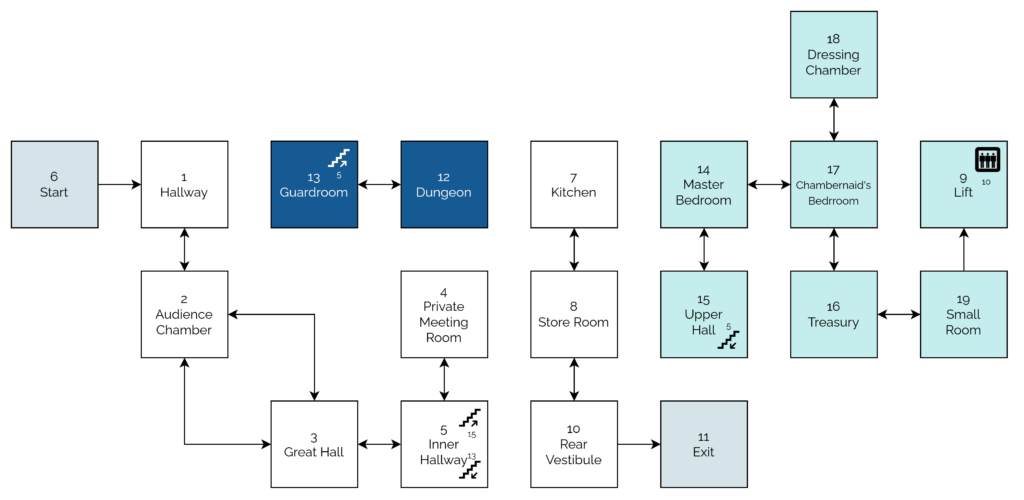

The game setup gets more interesting still when it comes to setting up the adventure map. Here’s how it looks:

```basic
2680 REM SET UP CASTLE
2690 DIM A(19,7):CHECKSUM=0
2700 FOR B=1 TO 19
2710 FOR C=1 TO 7
2720 READ A(B,C):CHECKSUM=CHECKSUM+A(B,C)
2730 NEXT C:NEXT B
2740 IF CHECKSUM<>355 THEN PRINT "ERROR IN ROOM DATA":END
...
2840 REM ALLOT TREASURE
2850 FOR J=1 TO 7
2860 M=INT(RND*19)+1
2870 IF M=6 OR M=11 OR A(M,7)<>0 THEN 2860
2880 A(M,7)=INT(RND*100)+100
2890 NEXT J
2910 REM ALLOT MONSTERS
2920 FOR J=1 TO 6
2930 M=INT(RND*18)+1
2940 IF M=6 OR M=11 OR A(M,7)<>0 THEN 2930
2950 A(M,7)=-J
2960 NEXT J
2970 A(4,7)=100+INT(RND*100)
2980 A(16,7)=100+INT(RND*100)
...
3310 DATA 0, 2, 0, 0, 0, 0, 0
3320 DATA 1, 3, 3, 0, 0, 0, 0
3330 DATA 2, 0, 5, 2, 0, 0, 0
3340 DATA 0, 5, 0, 0, 0, 0, 0
3350 DATA 4, 0, 0, 3, 15, 13, 0
3360 DATA 0, 0, 1, 0, 0, 0, 0
3370 DATA 0, 8, 0, 0, 0, 0, 0
3380 DATA 7, 10, 0, 0, 0, 0, 0
3390 DATA 0, 19, 0, 8, 0, 8, 0
3400 DATA 8, 0, 11, 0, 0, 0, 0
3410 DATA 0, 0, 10, 0, 0, 0, 0
3420 DATA 0, 0, 0, 13, 0, 0, 0
3430 DATA 0, 0, 12, 0, 5, 0, 0
3440 DATA 0, 15, 17, 0, 0, 0, 0
3450 DATA 14, 0, 0, 0, 0, 5, 0
3460 DATA 17, 0, 19, 0, 0, 0, 0
3470 DATA 18, 16, 0, 14, 0, 0, 0
3480 DATA 0, 17, 0, 0, 0, 0, 0
3490 DATA 9, 0, 16, 0, 0, 0, 0
```

DATA statements form a “table”.

What’s this code doing?

- Line 2690 defines an array (DIM) with two dimensions9 (19 by 7). This will store room data, an approach that allows code to be shared between all rooms: much cleaner than my first attempt at an adventure with each room having its own INPUT handler.

- The two-level loop on lines 2700 through 2730 populates the room data from the DATA blocks. Nowadays you’d probably put that data in a separate file (probably JSON!). Each “row” represents a room, 1 to 19. Each “column” represents the room you end up at if you travel in a given direction: North, South, East, West, Up, or Down. The seventh column – always zero – represents whether a monster (negative number) or treasure (positive number) is found in that room. This column perhaps needn’t have been included: I imagine it’s a holdover from some previous version in which the locations of some or all of the treasures or monsters were hard-coded.

- The loop beginning on line 2850 selects seven rooms and adds a random amount of treasure to each. The loop beginning on line 2920 places each of six monsters (numbered -1 through -6) in randomly-selected rooms. In both cases, the start and finish rooms, and any room that already holds a treasure or monster, are ineligible. When my 8-year-old self finally deciphered what was going on I was awestruck at this simple approach to making the game dynamic.

- Rooms 4 and 16 always receive treasure (lines 2970–2980), replacing any treasure or monster already there: the Private Meeting Room (always worth a diversion!) and the Treasury, respectively.

- Curiously, room 9 (the lift) defines three exits, even though it’s impossible to take an action in this location: the player teleports to room 10 on arrival! Again, I assume this is vestigial code from an earlier implementation.

- The “checksum” that’s tested on line 2740 is cute, and a younger me appreciated deciphering it. It sums all of the values in the DATA statements and expects 355, to limit tampering. I’m not convinced it’s necessary, though, or even useful: it certainly makes it harder to modify the rooms, which may undermine the code’s value as a teaching aid!
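For readers who don't speak BASIC, here is a rough Python sketch of the same setup steps: check the table's checksum, scatter seven treasures and six monsters, then force treasure into rooms 4 and 16. It is an illustration rather than a faithful port. The names `ROOM_DATA`, `build_castle`, `CONTENTS` and `PROTECTED_ROOMS` are made up for this example, and the random number generator is passed in so the result can be reproduced.

```python
import random

# Room table transcribed from the DATA statements (lines 3310-3490).
# Columns: north, south, east, west, up, down, contents.
ROOM_DATA = [
    [0, 2, 0, 0, 0, 0, 0],
    [1, 3, 3, 0, 0, 0, 0],
    [2, 0, 5, 2, 0, 0, 0],
    [0, 5, 0, 0, 0, 0, 0],
    [4, 0, 0, 3, 15, 13, 0],
    [0, 0, 1, 0, 0, 0, 0],
    [0, 8, 0, 0, 0, 0, 0],
    [7, 10, 0, 0, 0, 0, 0],
    [0, 19, 0, 8, 0, 8, 0],
    [8, 0, 11, 0, 0, 0, 0],
    [0, 0, 10, 0, 0, 0, 0],
    [0, 0, 0, 13, 0, 0, 0],
    [0, 0, 12, 0, 5, 0, 0],
    [0, 15, 17, 0, 0, 0, 0],
    [14, 0, 0, 0, 0, 5, 0],
    [17, 0, 19, 0, 0, 0, 0],
    [18, 16, 0, 14, 0, 0, 0],
    [0, 17, 0, 0, 0, 0, 0],
    [9, 0, 16, 0, 0, 0, 0],
]

CONTENTS = 6              # zero-based index of the seventh column
EXPECTED_CHECKSUM = 355   # the value tested on line 2740
PROTECTED_ROOMS = {6, 11} # rooms the listing never fills (lines 2870, 2940)


def build_castle(rng):
    """Return a 1-indexed list of rooms with treasure and monsters placed."""
    # Lines 2700-2740: sum every value and refuse to run if it is wrong.
    if sum(sum(row) for row in ROOM_DATA) != EXPECTED_CHECKSUM:
        raise ValueError("ERROR IN ROOM DATA")

    # Slot 0 is a dummy so that rooms[n] is room n, as in the BASIC array.
    rooms = [None] + [list(row) for row in ROOM_DATA]

    def random_empty_room(highest):
        # Keep rolling until we hit an unprotected, unoccupied room,
        # like the "THEN 2860" / "THEN 2930" jumps in the listing.
        while True:
            m = rng.randint(1, highest)
            if m not in PROTECTED_ROOMS and rooms[m][CONTENTS] == 0:
                return m

    # Lines 2850-2890: seven treasures worth 100 to 199 each.
    for _ in range(7):
        rooms[random_empty_room(19)][CONTENTS] = rng.randint(100, 199)

    # Lines 2920-2960: monsters -1 to -6 (the listing only rolls 1 to 18).
    for monster in range(1, 7):
        rooms[random_empty_room(18)][CONTENTS] = -monster

    # Lines 2970-2980: rooms 4 and 16 always end up holding treasure.
    rooms[4][CONTENTS] = rng.randint(100, 199)
    rooms[16][CONTENTS] = rng.randint(100, 199)
    return rooms
```

Calling `build_castle(random.Random(42))` gives the same castle every time for a given seed. Because rooms 4 and 16 are overwritten last, a monster that landed in either of them simply disappears, so a game can start with fewer than six monsters.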

Something you might notice is missing is the room descriptions. Arrays in this language are strictly typed: this array can only contain integers and not strings. But there are other reasons: line length limitations would have required trimming some of the longer descriptions. Also, many rooms have dynamic content, usually based on random numbers, which would be challenging to implement in this way.

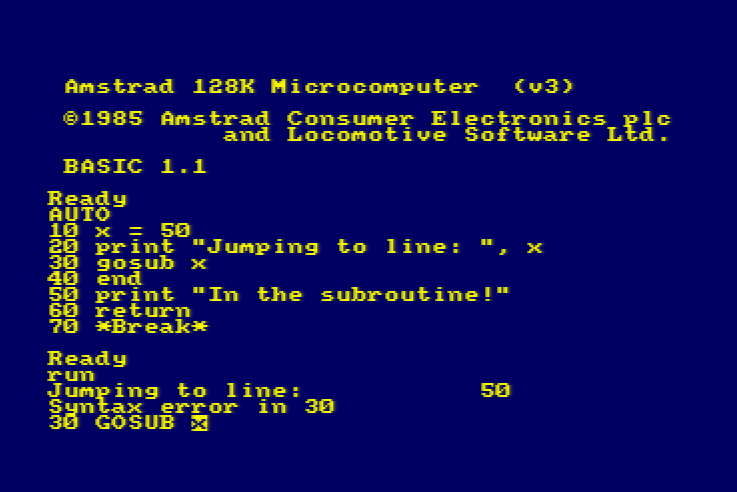

As a child, I did once try to refactor the code so that an eighth column of data specified the line number to which control should pass to display the room description. That’s a bit of a no-no from a “mixing data and logic” perspective, but a cool example of metaprogramming before I even knew it! This didn’t work, though: it turns out you can’t pass a variable to a Locomotive BASIC GOTO or GOSUB. Boo!10
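In a language with first-class functions, the "eighth column" idea is easy to express as a lookup table from room number to a description routine, which is roughly the job that ON x GOSUB does in BASIC. The Python sketch below is purely illustrative: the function names and the description text are invented placeholders, not taken from the game, and only the room numbers and names (4, 9 and 16) come from the discussion above.

```python
import random


def describe_private_meeting_room(rng):
    # Placeholder wording, not the game's actual text.
    return "YOU ARE IN THE PRIVATE MEETING ROOM."


def describe_lift(rng):
    # Placeholder wording, not the game's actual text.
    return "YOU HAVE ENTERED THE LIFT."


def describe_treasury(rng):
    # Rooms can have dynamic content driven by random numbers,
    # which is why each one gets its own routine.
    guards = rng.randint(1, 3)
    return f"YOU ARE IN THE TREASURY. {guards} SUITS OF ARMOUR STAND WATCH."


# The lookup table: room number -> routine that describes it.
DESCRIBERS = {
    4: describe_private_meeting_room,
    9: describe_lift,
    16: describe_treasury,
}


def describe_room(room, rng):
    """Dispatch to the room's routine, with a fallback for unlisted rooms."""
    describer = DESCRIBERS.get(room)
    if describer is None:
        return "YOU ARE SOMEWHERE IN THE CASTLE."
    return describer(rng)
```

Here `describe_room(9, random.Random())` returns the lift's text, and any room missing from the table falls back to the generic line. The table still mixes data and logic a little, but the jump targets are named functions rather than bare line numbers.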

Werewolves and Wanderer has many faults11. But I’m clearly not the only developer whose early skills were honed and improved by this game, or who holds a special place in their heart for it. Just while writing this post, I discovered:

- A moderately-faithful Inform reimplementation

- A less-faithful semi-graphical adaptation

- A C# reimplementation with a web interface (video)

- An ongoing livestreamed effort to reimplement as a Sierra-style point-and-click adventure

- An Applesoft BASIC implementation which includes a dynamically-revealed map

- A C reimplementation with a high score table

- A somewhat-faithful reimplementation in Rust (playable online via WebAssembly)

- A very accurate rendition in Python

- A Ruby/Python microservices-based implementation

- Many, many people commenting on the above or elsewhere about how instrumental the game was in their programming journey, too.

A decade or so later, I’d be taking my first steps as a professional software engineer. A couple more decades later, I’m still doing it.

And perhaps that adventure – the one that’s occupied my entire adult life – was facilitated by this text-based one from the 1980s.

Footnotes

1 The game that had the biggest impact on my life, it might surprise you to hear, is not among the “top ten videogames that stole my life” that I wrote about almost exactly 16 years ago nor the follow-up list I published in its incomplete form three years later. Turns out that time and impact are not interchangeable. Who knew?

2 The game is variously known as Werewolves and Wanderer, Werewolves and Wanderers, or Werewolves and the Wanderer. Or, on any system I’ve been on, WERE.BAS, WEREWOLF.BAS, or WEREWOLV.BAS, thanks to the CPC’s eight-point-three filename limit.

3 Additionally, it was thought that having to undertake the (painstakingly tiresome) process of manually re-entering the source code for a program might help teach you a little about the code and how it worked, although this depended very much on how readable the code and its comments were. Tragically, the more comprehensible some code is, the more long-winded the re-entry process.

4 The CPC’s got a fascinating history in its own right, but you can read that any time.

5 One of my favourite features of home microcomputers was that seconds after you turned them on, you could start programming. Your prompt was an interface to a programming language. That magic had begun to fade by the time DOS came to dominate (sure, you can program using batch files, but they’re neither as elegant nor sophisticated as any BASIC dialect) and was completely lost by the era of booting directly into graphical operating systems. One of my favourite features about the Web is that it gives you some of that magic back again: thanks to the debugger in a modern browser, you can “tinker” with other people’s code once more, right from the same tool you load up every time. (Unfortunately, mobile devices – which have fast become the dominant way for people to use the Internet – have reversed this trend again. Try to View Source on your mobile – if you don’t already know how, it’s not an easy job!)

6 In particular, one frustration I remember from my first text-based adventure was that I’d been unable to work around Locomotive BASIC’s lack of string escape sequences – not that I yet knew what such a thing would be called – in order to put quote marks inside a quoted string!

7 “Screen editors” is what we initially called what you’d nowadays call a “text editor”: an application that lets you see a page of text at the same time, move your cursor about the place, and insert text wherever you feel like. It may also provide features like copy/paste and optional overtyping. Screen editors require more resources (and aren’t suitable for use on a teleprinter) compared to line editors, which preceded them. Line editors only let you view and edit a single line at a time, which is how most of my first 6 years of programming was done.

8 In a modern programming language, you might use while true or similar for a main game loop, but this requires pushing the “outside” position to the stack… and early BASIC dialects often had strict (and small, by modern standards) limits on stack height that would have made this a risk compared to simply calling a subroutine from one line and then jumping back to that line on the next.

9 A neat feature of Locomotive BASIC over many contemporary and older BASIC dialects was its support for multidimensional arrays. A common feature in modern programming languages, this language feature used to be pretty rare, and programmers had to do bits of division and modulus arithmetic to work around the limitation… which, I can promise you, becomes painful the first time you have to deal with an array of three or more dimensions!

10 In reality, this was rather unnecessary, because the ON x GOSUB command can – and does, in this program – accept multiple jump points and selects the one referenced by the variable x.

11 Aside from those mentioned already, other clear faults include: impenetrable controls unless you’ve been given instructions (although that was the way at the time); the shopkeeper will penalise you for trying to spend money you don’t have, except on food, presumably as a result of programmer laziness; you can lose your flaming torch, but you can’t buy spares in advance (you can pay for more, and you lose the money, but you don’t get a spare); some of the line spacing is sometimes a little wonky; combat’s a bit of a drag; lack of feedback to acknowledge the command you entered and that it was successful; WHAT’S WITH ALL THE CAPITALS; some rooms don’t adequately describe their exits; the map is a bit linear; etc.

Mastodon Re-Introduction

Finally got around to rewriting my Mastodon introduction, now that my selfhosted server’s got enough interconnection that people might actually see it!

If you’re on the Fediverse and you’re not already doing so, you can follow me at @dan@danq.me. Or follow my blog at @blog@danq.me.

Solitary Nouns

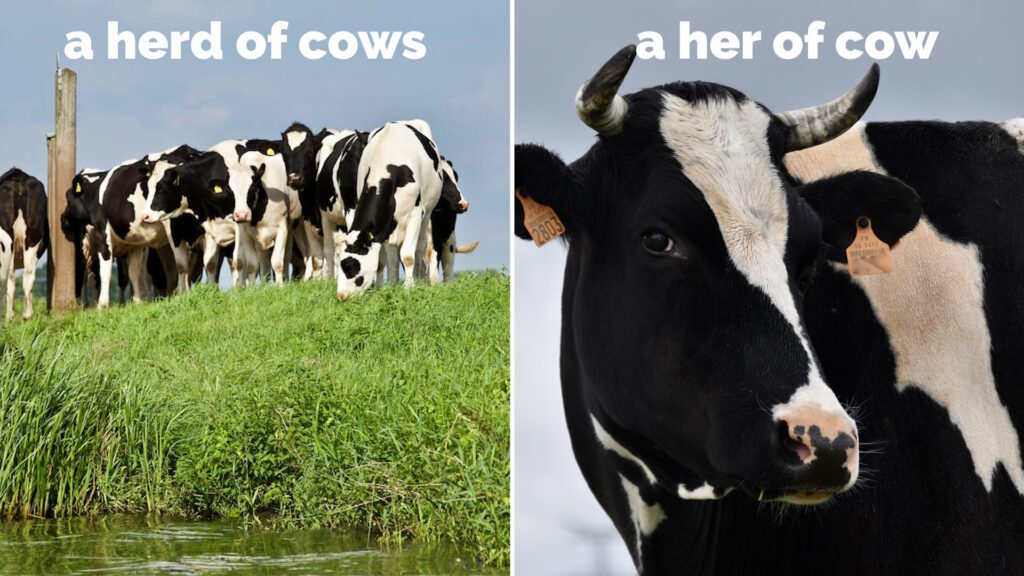

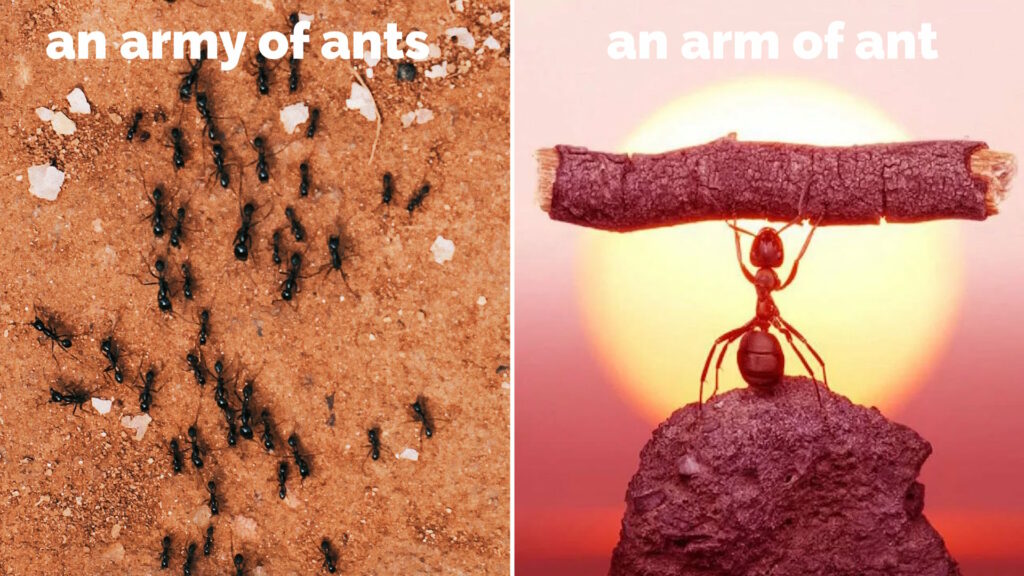

The other night, Ruth and I were talking about collective nouns (y’know, like a herd of cows or a flock of sheep) and came up with the somewhat batty idea of solitary nouns. Like collective nouns, but for a singular subject (one cow, sheep, or whatever).

Then, we tried to derive what the words could be. Some of the results write themselves.1

Some of them involve removing one or more letters from the collective noun to invent a shorter word to be the solitary noun.

Did I miss any obvious ones?

Footnotes

1 Also consider “parliament of owls” ➔ “politician of owl”, “troop of monkeys” ➔ “soldier of monkey”, “band of gorillas” ➔ “musician of gorilla”. Hey… is that where that band’s name comes from?

2 Is “cluster of stars” ➔ “luster of star” anything?

3 Ruth enjoyed the singularised “a low of old bollock”, too.

Note #21687

Threads

Meta are launching Threads tomorrow.

If you’re wondering how this will impact the world and society’s social connections, I direct you to the predictive powers of 1984 British cinema.

The Miracle Sudoku

This is a repost promoting content originally published elsewhere. See more things Dan's reposted.

I first saw this video when it was doing the rounds three years ago and was blown away. I was reminded of it recently when it appeared in a blog post about AI’s possible future role in research by Terence Eden.

I don’t even like sudoku. And if you’d told me in advance that I’d enjoy watching a man slowly solve a sudoku-based puzzle in real-time, I’d have called you crazy. But I watched it again today, for what must’ve been the third time, and it’s still magical. The artistry of puzzle creator Mitchell Lee is staggering.

If you somehow missed it the first time around, now’s your chance. Put this 25-minute video on in the background and prepare to have your mind blown.

Dan Q found GC3742 SP9

This checkin to GC3742 SP9 reflects a geocaching.com log entry. See more of Dan's cache logs.

Well this was a challenge! The woods threw off my GPS, but I’d brought a backup device so I averaged between them and found a likely GZ. The dog and I did an increasingly large spiral, checking all the obvious hiding spots, to no avail. Returning to our start point we began another pass, and something caught my eye! It was the cache!

A few things had made it challenging:

- I put the coordinates 13m from where the CO does. Could be the woods, but I’m not the first to mention this distance.

- This cache is by no means a “regular”. It’s not even a “small”. It would fit inside a 35mm film canister, which in my mind makes it clearly a “micro”!

- It wasn’t in the hiding place indicated by the hint! I found it on the ground, beneath leaf litter, with thanks to my energetic leaf-kicking geohound!

Signed log and returned cache to the nearest hiding spot that fits the hint; hopefully others will find it more easily than we did! TFTC from Demmy the Dog and me!