I normally reserve my “on this day” posts for looking back at my own archived content, but once in a while I get a moment of nostalgia for something of somebody else’s that “fell off the web”. And so I bring you something you probably haven’t seen in over a decade: Paul and Jon’s Birmingham Egg.

It was a simpler time: a time when YouTube was a new “fringe” site (which is probably why I don’t have a surviving copy of the original video) and not yet owned by Google, before Facebook was universally available, and when original Web content remained decentralised (maybe we’re moving back in that direction, but I wouldn’t count on it…). And only a few days after issue 175 of the b3ta newsletter wrote:

* BIRMINGHAM EGG - Take 5 scotch eggs, cut in half and cover in masala sauce. Place in Balti dish and serve with naan and/or chips. We'll send a b3ta t-shirt to anyone who cooks this up, eats it and makes a lovely little photo log / write up of their adventure.

Clearly inspired, Paul said “Guess what we’re doing on Sunday?” and sure enough, he delivered. On this day 13 years ago and with the help of Jon, Liz, Siân, and Andy R, Paul whipped up the dish and presented his findings to the Internet: the original page is long-gone, but I’ve resurrected it for posterity. I don’t know if he ever got his promised free t-shirt, but he earned it: the page went briefly viral and brought joy to the world before being forgotten the following week when we all started arguing about whether 9 Songs was a good film or not.

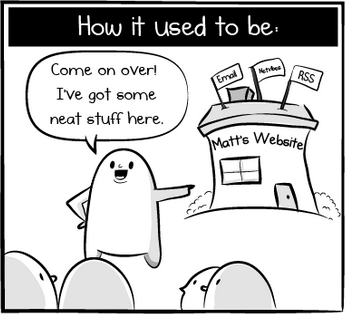

It was a simpler time, when, having fewer responsibilities, we were able to do things like this “for the lulz”. But more than that, it was at the tail-end of the era in which individuals putting absurd shit online was still a legitimate art form on the Web. Somewhere along the way, the Web got serious and siloed. It’s not all a bad thing, but it does mean that we’re publishing less weirdness than we were back then.