Max props to my employer for not just providing pronoun pins in a diversity of options but also offering blank ones for people not represented by any of the pre-printed options.

Tag: travel

Buenos días

Note #24643

Pack Light

Think I blew Ruth’s mind this morning when I set off for a week in Mexico with only a medium-sized, underseat-suitable backpack.

But since I started working for Automattic five years ago I’ve totally been bitten by the travelling-light bug. Highly recommended!

Nerd Sniped Traveller

I think I might be more-prone to nerd sniping when I’m travelling.

Last week, a coworker pointed out an unusually-large chimney on the back of a bus depot and I lost sleep poring over 50s photos of Dutch building sites to try to work out if it was original.

When a boat tour guide told me that the Netherlands used to have a window tax, I fell down a rabbit hole of how it influenced local architecture and why the influence was different in the UK.

Why does travelling make me more-prone to nerd sniping? Maybe I should see if there’s any likely psychological effect that might cause that…

Window Tax

Podcast Version

This post is also available as a podcast. Listen here, download for later, or subscribe wherever you consume podcasts.

…in England and Wales

From 1696 until 1851 a “window tax” was imposed in England and Wales1. Sort-of a precursor to property taxes like council tax today, it used an estimate of the value of a property as an indicator of the wealth of its occupants: counting the number of windows provided the mechanism for assessment.

(A particular problem with window tax as enacted is that its “stepping”, which was designed to weigh particularly heavily on the rich with their large houses, similarly weighed heavily on large multi-tenant buildings, whose landlords would pass on those disproportionate costs to their tenants!)

Why a window tax? There are two ways to answer that:

- A window tax – and a hearth tax, for that matter – can be assessed without requiring the taxpayer to disclose their income. Income tax, nowadays the most-significant form of taxation in the UK, was long considered to be too much of an invasion upon personal privacy3.

- But compared to a hearth tax, it can be validated from outside the property. Counting people in a property in an era before solid recordkeeping is hard. Counting hearths is easier… so long as you can get inside the property. Counting windows is easier still and can be done completely from the outside!

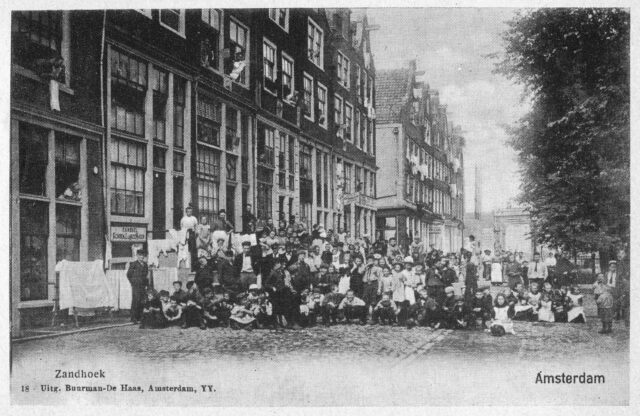

…in the Netherlands

I recently got back from a trip to Amsterdam to meet my new work team and get to know them better.

One of the things I learned while on this trip was that the Netherlands, too, had a window tax for a time. But there’s an interesting difference.

The Dutch window tax was introduced during the French occupation, under Napoleon, in 1810 – already much later than its equivalent in England – and continued even after he was ousted and well into the late 19th century. And that leads to a really interesting social side-effect.

Glass manufacturing techniques evolved rapidly during the 19th century. At the start of the century, when England’s window tax law was in full swing, glass panes were typically made using the crown glass process: a bauble of glass would be spun until centrifugal force stretched it out into a wide disk, getting thinner towards its edge.

The very edge pieces of crown glass were cut into triangles for use in leaded glass, with any useless offcuts recycled; the next-innermost pieces were the thinnest and clearest, and fetched the highest price for use as windows. By the time you reached the centre you had a thick, often-swirly piece of glass that couldn’t be sold for a high price: you still sometimes find this kind among the leaded glass in particularly old pub windows5.

As the 19th century wore on, cylinder glass became the norm. This is produced by making an iron cylinder as a mould, blowing glass into it, and then carefully un-rolling the cylinder while the glass is still viscous to form a reasonably-even and flat sheet. Compared to spun glass, this approach makes it possible to make larger window panes. Also: it scales more-easily to industrialisation, reducing the cost of glass.

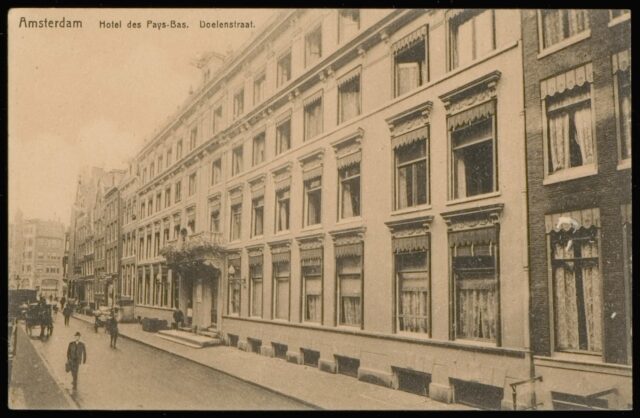

The Dutch window tax survived into the era of large plate glass, and this led to an interesting phenomenon: rather than have lots of windows, which would be expensive, late-19th-century buildings were constructed with windows that were as large as possible to maximise the ratio of the amount of light they let in to the amount of tax for which they were liable6.

That’s an architectural trend you can still see in Amsterdam (and elsewhere in Holland) today. Even where buildings are renovated or newly-constructed, they tend – or are required by preservation orders – to mirror the buildings they neighbour, which influences architectural decisions.

It’s really interesting to see the different architectural choices produced in two different cities as a side-effect of fundamentally the same economic choice, resulting from slightly different starting conditions in each (a half-century gap and a land shortage in one). While Britain got fewer windows, the Netherlands got bigger windows, and you can still see the effects today.

…and social status

But there’s another interesting thing about this relatively-recent window tax, and that’s about how people broadcast their social status.

In some of the traditionally-wealthiest parts of Amsterdam, you’ll find houses with more windows than you’d expect. In the photo above, notice:

- How the window density of the central white building is about twice that of the similar-width building on the left,

- That a mostly-decorative window has been installed above the front door, adorned with a decorative leaded glass pattern, and

- At the bottom of the building, below the front door (up the stairs), that a full set of windows has been provided even for the below-ground servants’ quarters!

When it was first constructed, this building may have been considered especially ostentatious. Its original owners deliberately requested that it be built in a way that would attract a higher tax bill than would generally have been considered necessary in the city, at the time. The house stood out as a status symbol, like shiny jewellery, fashionable clothes, or a classy car might today.

Can we bring back 19th-century Dutch social status telegraphing, please?9

Footnotes

1 Following the Treaty of Union the window tax was also applied in Scotland, but Scotland’s a whole other legal beast that I’m going to quietly ignore for now because it doesn’t really have any bearing on this story.

2 The second-hardest thing about retrospectively graphing the cost of window tax is finding a reliable source for the rates. I used an archived copy of a guru site about Wolverhampton history.

3 Even relatively-recently, the argument that income tax might be repealed as incompatible with British values shows up in political debate. Towards the end of the 19th century, Prime Ministers Disraeli and Gladstone could be relied upon to agree with one another on almost nothing, but both men spoke at length about their desire to abolish income tax, even setting out plans to phase it out… before having to cancel those plans when some financial emergency showed up. Turns out it’s hard to get rid of.

4 There are, of course, other potential reasons for bricked-up windows – even aesthetic ones – but a bit of a giveaway is if the bricking-up reduces the number of original windows to 6, 9, 14 or 19, which are thresholds at which the savings gained by bricking-up are the greatest.

5 You’ve probably heard about how glass remains partially-liquid forever and how this explains why old windows are often thicker at the bottom. You’ve probably also already had it explained to you that this is complete bullshit. I only mention it here to preempt any discussion in the comments.

6 This is even more-pronounced in cities like Amsterdam where a width/frontage tax forced buildings to be as tall and narrow and as close to their neighbours as possible, further limiting opportunities for access to natural light.

7 Yet I’m willing to learn a surprising amount about Dutch tax law of the 19th century. Go figure.

8 Obligatory Pet Shop Boys video link. Can that be a thing please?

9 But definitely not 17th-century Dutch social status telegraphing, please. That shit was bonkers.

Dan Q found GC89T04 Japanse glazen dobbers

This checkin to GC89T04 Japanse glazen dobbers reflects a geocaching.com log entry. See more of Dan's cache logs.

An easy find. Didn’t take or leave any books, but briefly skimmed the Borland JBuilder 2 Getting Started guide, because it was familiar/nostalgic. Pretty sure I used this tool… about 25 years ago!

Dan Q found GC8R0FY SIX on the beach

An easy find. As I approached I thought that a couple cuddling here might be in my way, but they were just getting ready to leave as I arrived! SL (love the long thin logbook!), TFTC. Now to make my way back to the station!

Dan Q found GC79PX6 Galgenveld / Field of Gallows

Eww. Had to put my hand into two gross holes before finding the (correct) third gross hole I needed to put my hand into. Worth it in the end for a happy smiley face. Thanks for bringing me to this place and teaching me its history. TFTC!

Dan Q found GCAJGEA Welcome to Amsterdam! (Virtual Reward 4.0)

TFTC! I’m not carrying any tickets for UK transport, but I’ve got a (mildly defaced) British banknote and I found a tram (the number 13, which connected me to my hotel this week) and a ferry (which I then went and caught to go find some more caches!).

Dan Q found GCAJHEN Amsterdam Greed / Hebzucht

Cash? Not carrying much of that. But my credit card sits at the front of my minimalist wallet and, as a bonus, shows my geocaching username (which is the same as my actual name) without showing the actual card number. TFTC!

Dan Q did not find GC5A7X0 Bicycle Parking

No luck here despite an extended search, the hint, and the spoiler image. Confident I’ve found the right host but no sign of the cache. I wonder if another geocacher is holding it right now, sitting somewhere nearby to sign the log? Or else it’s probably gone missing. 😢

Dan Q found GCAHANJ De Dolphijn

QEF once some nearby muggles moved along. TFTC.

PS Logbook getting quite full, only space for about 20-30 more signatures.

Dan Q found GCAB935 Bartolotti House

Easy to spot, but I had to wait a while to be able to stealthily retrieve the cache. TFTC!

Dan Q found GC5K1KW Behind the Monument

Love the monument, delighted to see it. Took me a long, long time to find the cache though! Started by looking near the coordinates but couldn’t find anything likely to host the cache.

Spotted a likely host by the waterside and, even though the coordinates seemed off, gave a good search there before giving up.

Then went to a nearby stall to buy a souvenir of my trip when I realised another possible route to the coordinates. Turns out there’s a big van parked right now blocking access to the cache! (Looks like they’re setting up for an event, maybe for King’s Day?) Squeezed past and used my phone in selfie mode as a mirror to scan the place I thought the cache might be. Success! Retrieved cache, signed log, and returned.

Thanks for bringing me here, and for a well-hidden cache. Greetings from Oxfordshire, UK!