…

…

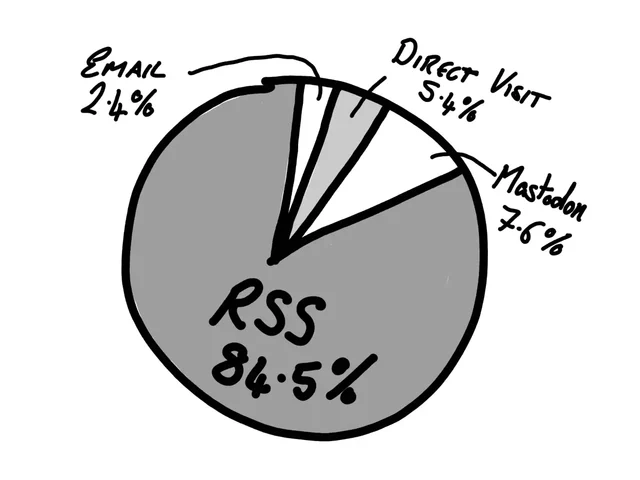

What this tells me?

Well, quite a lot, actually. It tells me that there’s loads of you fine people reading the content on this site, which is very heart-warming. It also tells me that RSS is by far the main way people consume my content. Which is also fantastic, as I think RSS is very important and should always be a first class citizen when it comes to delivering content to people.

…

I didn’t get a chance to participate in Kev’s survey because, well, I don’t target “RSS Zero” and I don’t always catch up on new articles – even by authors I follow closely – until up to a few weeks after they’re published¹. But needless to say, I’d have been in the majority: I follow Kev via my feed reader².

But I was really interested by this approach to understanding your readership: like Kev, I don’t run any kind of analytics on my personal sites. But he’s onto something! If you want to learn about people, why not just ask them?

Okay, there’s going to be a bias: maybe readers who subscribe by RSS are simply more likely to respond to a survey? Or are more likely to visit new articles quickly, which was definitely a factor in this short-lived survey? It’s hard to be certain whether these or other factors might have thrown off Kev’s results.

But then… what isn’t biased? Were Kev running, say, Google Analytics (or Fathom, or Strike, or Hector, or whatever)… then I wouldn’t show up in his results because I block those trackers³ – another, different, kind of bias.

We can’t dodge such bias: not by using popular analytics platforms, and not by surveying users. But one of these two options is, at least, respectful of your users’ privacy and bandwidth.

I’m tempted to run a similar survey myself. I might wait until after my long-overdue redesign – teased here – launches, though. Although perhaps that’s just a procrastination stemming from my insecurity that I’ll hear, like, an embarrassingly-low number of responses like three or four and internalise it as failing some kind of popularity contest⁴! Needs more thought.

Footnotes

1 I’m happy with this approach: I enjoy being able to treat my RSS reader as sort-of a “magazine”, using my categorisations of feeds – which are partially expressed on my Blogroll page – as a theme. Like: “I’m going to spend 20 minutes reading… tech blogs… or personal blogs by people I know personally… or indieweb-centric content… or news (without the sports, of course)…” This approach makes consuming content online feel especially deliberate and intentional: very much like being in control of what I read and when.

2 In fact, it’s by doing so – with a little help from Matthias Pfefferle – that I was inspired to put a “thank you” message in my RSS feed, among other “secret” features!

3 In fact, I block all third-party JavaScript (and some first-party JavaScript!) except where explicitly permitted, but even for sites that I do allow to load all such JavaScript I still have to manually enable analytics trackers if I want them, which I don’t. Also… I sandbox almost all cookies, and I treat virtually all persistent cookies as session cookies and I delete virtually all session cookies 15 seconds after I navigate away from a cookie’s sandbox domain or close its tab… so I’m moderately well-anonymised even where I do somehow receive a tracking cookie.

4 Perhaps something to consider after things have gotten easier and I’ve caught up with my backlog a bit.