This weekend I announced and then hosted Homa Night II, an effort to use technology to help bridge the chasms that’ve formed between my diaspora of friends as a result mostly of COVID. To a lesser extent we’ve been made to feel distant from one another for a while as a result of our very diverse locations and lifestyles, but the resulting isolation was certainly compounded by lockdowns and quarantines.

Back in the day we used to have a regular weekly film night called Troma Night, named after the studio who dominated our early events and whose… genre… influenced many of our choices thereafter. We had over 300 such film nights, by my count, before I eventually left our shared hometown of Aberystwyth ten years ago. I wasn’t the last one of the Troma Night regulars to leave town, but more left before me than after.

Earlier this year I hosted Sour Grapes, a murder mystery party (an irregular highlight of our Aberystwyth social calendar, with thanks to Ruth) run entirely online using a mixture of video chat and “second screen” technologies. In some ways that could be seen as the predecessor to Homa Night, although I’d come up with most of the underlying technology to make Homa Night possible on a whim much earlier in the year!

How best to make such a thing happen? When I first started thinking about it, during the first of the UK’s lockdowns, I considered a few options:

- Streaming video over a telemeeting service (Zoom, Google Meet, etc.)

  Very simple to set up, but the quality – as anybody who’s tried this before will attest – is appalling. Being optimised for speech rather than music and sound effects gives the audio a flat, scratchy sound, video compression artefacts that are tolerable when you’re chatting to your boss are really annoying when they stop you reading a crucial subtitle, audio and video often get desynchronised in a way that’s frankly infuriating, and everybody’s download speed is limited by the upload speed of the host, among other issues. The major benefit of these platforms – full-duplex audio – is destroyed by feedback so everybody needs to stay muted while watching anyway. No thanks!

- Teleparty or a similar tool

  Teleparty (formerly Netflix Party, but it now supports more services) is a pretty clever way to get almost exactly what I want: synchronised video streaming plus chat alongside. But it only works on Chrome (and some related browsers) and doesn’t work on tablets, web-enabled TVs, etc., which would exclude some of my friends. Everybody requires an account on the service you’re streaming from, potentially further limiting usability, and that also means you’re strictly limited to the media available on those platforms (and further limited again if your party spans multiple geographic distribution regions for that service). There are definitely things I can learn from Teleparty, but it’s not the right tool for Homa Night.

- “Press play… now!”

  The relatively low-tech solution might have been to distribute video files in advance, have people download them, and get everybody to press “play” at the same time! That’s at least slightly less convenient because people can’t just “turn up”, they have to plan their attendance and set up in advance, but it would certainly have worked and I seriously considered it. There are other downsides, though: if anybody has a technical issue and needs to e.g. restart their player then they’re basically doomed in any attempt to get back in sync again. We can do better…

- A custom-made synchronised streaming service…?

So obviously I ended up implementing my own streaming service. It wasn’t even that hard. In case you want to try your own, here’s how I did it:

Media preparation

First, I used Adobe Premiere to create a video file containing both of the night’s films, bookended and separated by “filler” content to provide an introduction/lobby, an intermission, and a closing “you should have stopped watching by now” message. I made sure that the “intro” was a nice round duration (90s) and suitable for looping because I planned to hold people there until we were all ready to start the film. Thanks to Boris & Oliver for the background music!

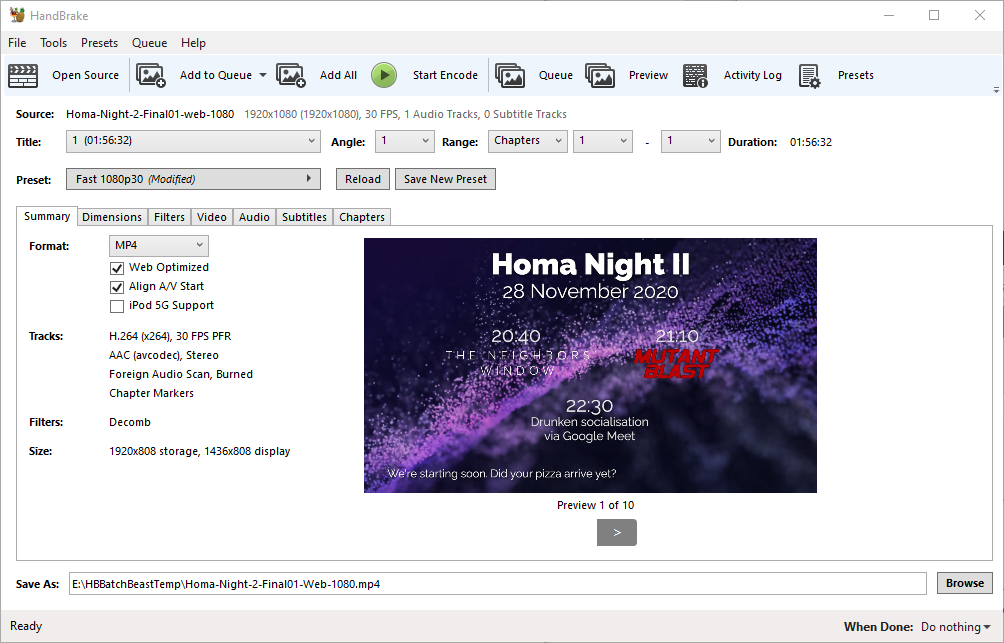

Next, I ran the output through HandBrake to produce “web optimized” versions in 1080p and 720p output sizes. “Web optimized” in this case means that metadata gets added to the start of the file to allow it to start playing without downloading the entire file (streaming) and to allow the calculation of what-part-of-the-file corresponds to what-part-of-the-timeline: the latter, when coupled with a suitable webserver, allows browsers to “skip” to any point in the video without having to watch the intervening part. Naturally I’m encoding with H.264 for the widest possible compatibility.
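
If you want to check that an encode really did come out “web optimized”, here’s a rough sketch (not part of my actual setup). It assumes the usual MP4 layout, where the index metadata lives in a top-level `moov` box and the media data in `mdat`, and simply checks that `moov` comes first. The function names are made up for this example.

```javascript
// Walk the top-level boxes ("atoms") of an MP4 held in a Uint8Array
// and return their four-character types in file order.
function listTopLevelBoxes(bytes) {
  const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);
  const types = [];
  let offset = 0;
  while (offset + 8 <= bytes.byteLength) {
    let size = view.getUint32(offset); // 32-bit big-endian box size
    types.push(String.fromCharCode(
      bytes[offset + 4], bytes[offset + 5], bytes[offset + 6], bytes[offset + 7]
    ));
    if (size === 1) {
      // A size of 1 means a 64-bit size follows the type field
      if (offset + 16 > bytes.byteLength) break;
      size = Number(view.getBigUint64(offset + 8));
    } else if (size === 0) {
      break; // a size of 0 means "this box runs to the end of the file"
    }
    if (size < 8) break; // malformed: stop rather than loop forever
    offset += size;
  }
  return types;
}

// "Web optimized" here means: the moov box appears before any mdat box.
function isWebOptimised(bytes) {
  const types = listTopLevelBoxes(bytes);
  const moov = types.indexOf("moov");
  const mdat = types.indexOf("mdat");
  return moov !== -1 && (mdat === -1 || moov < mdat);
}
```

In Node you could feed this the first few kilobytes of the file (a `Buffer` is a `Uint8Array`); if `moov` hasn’t turned up before `mdat` by then, the file probably isn’t optimised for streaming.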

Real-Time Synchronisation

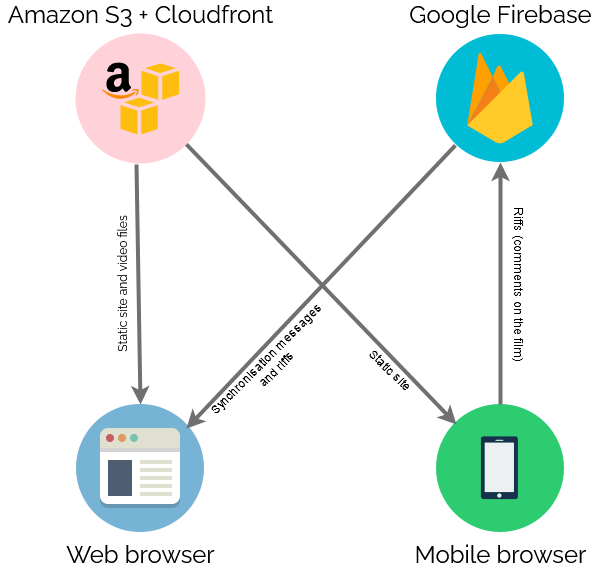

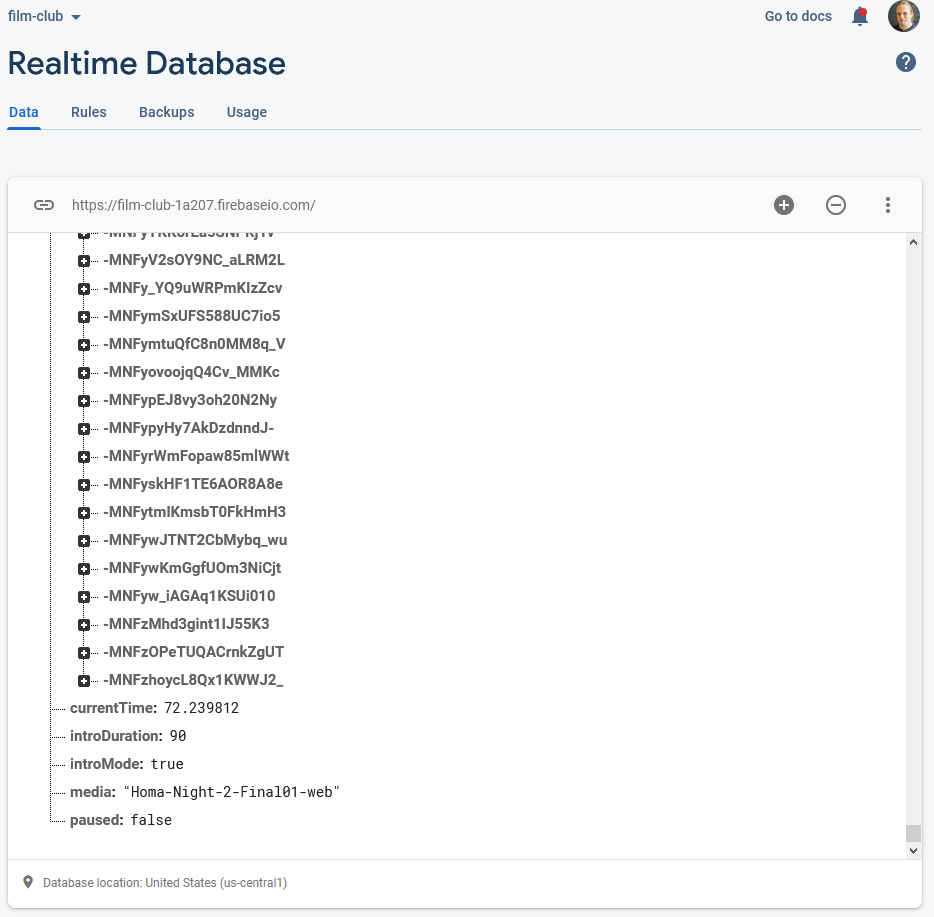

To keep everybody’s viewing experience in sync, I set up a Firebase account for the application: Firebase provides an easy-to-use WebSockets platform with built-in data synchronisation. Ignoring the authentication and chat features, there wasn’t much shared here: just the currentTime of the video in seconds, whether or not introMode was engaged (i.e. everybody should loop the first 90 seconds, for now), and whether or not the video was paused.
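
As a rough sketch of what listening for those three values might look like: this assumes `ref` behaves like a Firebase Realtime Database reference (i.e. `ref.on("value", callback)` hands back a snapshot with a `.val()` method). The function name, the defaults and the exact shape of the stored object are illustrative guesses rather than my real code.

```javascript
// The three shared values, with illustrative defaults for a fresh database.
const DEFAULT_STATE = { currentTime: 0, introMode: true, paused: false };

// `ref` is ASSUMED to look like a Realtime Database reference:
// ref.on("value", cb) calls cb(snapshot) now and again on every change.
function subscribeToSharedState(ref, onChange) {
  ref.on("value", (snapshot) => {
    const raw = snapshot.val() || {};
    onChange({
      currentTime: Number(raw.currentTime) || DEFAULT_STATE.currentTime,
      introMode: raw.introMode === undefined
        ? DEFAULT_STATE.introMode
        : Boolean(raw.introMode),
      paused: Boolean(raw.paused),
    });
  });
}

// Hypothetical browser usage (the "homaNight" path is invented):
// subscribeToSharedState(firebase.database().ref("homaNight"), (state) => {
//   lastCurrentTime = state.currentTime;
//   introMode = state.introMode;
//   lastPaused = state.paused;
// });
```

Normalising the snapshot in one place means the rest of the player never has to care whether a value is missing or was hand-edited into the wrong type.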

To reduce development effort, I never got around to implementing an administrative front-end; I just manually went into the Firebase database and acknowledged “my” computer as being an administrator, after I’d connected to it, and then ran a little JavaScript in my browser’s debugger to tell it to start pushing my video’s currentTime to the server every few seconds. Anything else I needed to edit I just edited directly from the Firebase interface.
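
The “little JavaScript” amounted to something like the sketch below. It assumes `ref` exposes an `update(values)` method, as a Firebase Realtime Database reference does; the function names and the three-second default are placeholders, not what I actually typed into the console.

```javascript
// Push the administrator's current playback position to the shared state.
// `ref` is ASSUMED to offer update(values), like a Realtime Database reference.
function pushPlaybackState(video, ref) {
  return ref.update({ currentTime: video.currentTime });
}

// Push immediately, then again every `intervalMs` milliseconds.
// Returns a function that stops the broadcast.
function startBroadcasting(video, ref, intervalMs = 3000) {
  pushPlaybackState(video, ref);
  const timer = setInterval(() => pushPlaybackState(video, ref), intervalMs);
  return () => clearInterval(timer);
}

// Hypothetical console usage (the "homaNight" path is invented):
// const stop = startBroadcasting(
//   document.querySelector("video"),
//   firebase.database().ref("homaNight")
// );
// ...and later: stop();
```

Returning a “stop” function is just a convenience so that a stray interval doesn’t keep overwriting the shared time after you’ve finished.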

Other web clients had JavaScript to instruct them to monitor these variables from the Firebase database and, if they were desynchronised by more than 5 seconds, “jump” to the correct point in the video file. The hard part of the code… wasn’t really that hard:

    // Rewind if we're past the end of the intro loop
    function introModeLoopCheck() {
      if (!introMode) return;
      if (video.currentTime > introDuration) video.currentTime = 0;
    }

    function fixPlayStatus() {
      // Handle "intro loop" mode
      if (remotelyControlled && introMode) {
        if (video.paused) video.play(); // always play
        introModeLoopCheck();
        return; // don't look at the rest
      }

      // Fix current time
      const desync = Math.abs(lastCurrentTime - video.currentTime);
      if (
        (video.paused && desync > DESYNC_TOLERANCE_WHEN_PAUSED) ||
        (!video.paused && desync > DESYNC_TOLERANCE_WHEN_PLAYING)
      ) {
        video.currentTime = lastCurrentTime;
      }

      // Fix play status
      if (remotelyControlled) {
        if (lastPaused && !video.paused) {
          video.pause();
        } else if (!lastPaused && video.paused) {
          video.play();
        }
      }

      // Show/hide paused notification
      updatePausedNotification();
    }
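
To make the tolerance check easier to reason about, here it is pulled out as a standalone function with a few worked cases. The 5-second figure for a playing video is the one mentioned above; the 1-second figure for a paused video is purely an assumption for the sake of the example, as is the function name.

```javascript
// Decide whether a client should "jump" to the shared position.
// Tolerances are in seconds; the paused value is an ASSUMED placeholder.
function needsSeek(localTime, remoteTime, locallyPaused,
                   tolerances = { playing: 5, paused: 1 }) {
  const desync = Math.abs(remoteTime - localTime);
  const tolerance = locallyPaused ? tolerances.paused : tolerances.playing;
  return desync > tolerance;
}

console.log(needsSeek(100, 103, false)); // false: 3s apart, within 5s
console.log(needsSeek(100, 106, false)); // true: 6s apart, so jump
console.log(needsSeek(100, 103, true));  // true: paused uses the tighter limit
```

The tolerance while playing needs to be comfortably larger than the interval at which the shared time gets pushed, because the shared value is always slightly stale; set it too tight and every client would be seeking constantly.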

Web front-end

Finally, there needed to be a web page everybody could go to to get access to this. As I was hosting the video on S3+CloudFront anyway, I put the HTML/CSS/JS there too.

I tested in Firefox, Edge, Chrome, and Safari on desktop, and (slightly less) in Firefox, Chrome and Safari on mobile. There were a few quirks to work around, mostly to do with browsers not letting videos make sound until the page has been interacted with after the video element has been rendered. I worked around that by putting a popup “over” the video to “enable sync”; beyond that, it “just worked”.
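
The “enable sync” popup trick looks roughly like this. It’s a sketch rather than my actual code: `overlay` is assumed to be whatever element covers the video, and `onEnabled` is a hypothetical hook for switching on the synchronisation logic.

```javascript
// Start playback from inside a click handler, so that the browser treats it
// as user-initiated and allows sound. `overlay` and `video` are DOM elements.
function installEnableSyncOverlay(overlay, video, onEnabled = () => {}) {
  overlay.addEventListener("click", () => {
    overlay.hidden = true;
    // play() returns a Promise in modern browsers; Promise.resolve() also
    // copes with older ones where it returns undefined.
    Promise.resolve(video.play()).then(
      onEnabled,
      () => { overlay.hidden = false; } // playback was refused: let them retry
    );
  });
}

// Hypothetical usage (the element id is invented):
// installEnableSyncOverlay(
//   document.getElementById("enable-sync"),
//   document.querySelector("video"),
//   () => { remotelyControlled = true; }
// );
```

The important detail is that `video.play()` is called synchronously inside the click handler; calling it later, from a timer or a network callback, is what the browsers object to.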

Delivery

On the night I shared the web address and we kicked off! There were a few hiccups as some people’s browsers got disconnected early on and tried to start playing the film before it was time, and one of these even when fixed ran about a minute behind the others, leading to minor spoilers leaking via the rest of us riffing about them! But on the whole, it worked. I’ve had lots of useful feedback to improve on it for the next version, and I might even try to tidy up my code a bit and open-source the results if this kind of thing might be useful to anybody else.