Sod Pepsi’s navy. Let’s talk about the point after WW2 where the Knights Hospitaller, of medieval crusading fame, ‘accidentally’ became a major European air power.

I shitteth ye not.

So, if I asked you to imagine the Knights Hospitaller, you probably picture:

1) Angry Christians on armoured horses.

2) Them being wiped out long ago, like the Templars.

3) Some Dan Brown bullshit.

And you would be (mostly) wrong about all three. Which is sort of how this happened.

From the beginning (1113 or so), the Hospitallers were never quite as committed to the angry, horsey thing as the Templars. They had always (ostensibly) been more about protecting pilgrims and providing healthcare. They also quite liked boats, which were useful for both.

Over the next 150 years (or so), as the Christian grip on the Holy Land waned, both military orders got more involved in their other hobbies: banking for the Templars, mucking around in boats for the Hospitallers. This proved to be a surprisingly wise decision on the Hospitallers’ part. By 1290ish, both Orders were homeless and weakened. As the Templars fatally discovered, being weak AND having the King of France owe you money is a bad combo.

Being a useful NAVY, however, wins you friends.

And this is why your first vision of the Hospitallers is wrong. Because they spent the next 500 YEARS, backed by France and Spain, as one of the most powerful naval forces in the Mediterranean, blocking Ottoman efforts to expand westwards by sea. To give you an idea of the trouble they caused: in 1480 Mehmet II sent 70,000 men (against the Knights’ 4,000) to try to boot them out of Rhodes. He failed. Suleiman the Magnificent FINALLY managed it in 1522 with 200,000 men, but even he had to agree to let the survivors leave.

The surviving Hospitallers hopped on their ships (again) and sailed away. After some vigorous lobbying, in 1530 the King of Spain agreed to rent them Malta in return for a single Maltese falcon every year. Because that’s how good rents were in Europe before the housing crisis.

The Knights turned Malta into ANOTHER fortified island. For the next 200 years, ‘the Pope’s own navy’ waged a war of piracy, slavery and (occasionally) pitched sea battles against the Ottomans. From Malta, they blocked Ottoman strategic access to the western Med. This point was not lost on the Ottomans, who sent 40,000 men to try to take the island in 1565: the ‘Great Siege of Malta’. The Knights, fighting almost to the last man, held out and won.

Now, the important thing here is the CONTINUED EXISTENCE AS A SOVEREIGN STATE of the Knights Hospitaller. They held Malta right up until 1798, when Napoleon finally managed to boot them out on his way to Egypt (partly because the French contingent of the Knights swapped sides).

The British turned up about three months later and the French were sent packing, but, well, it was the British, so:

THE KNIGHTS: Can we have our strategically important island back, please?

THE BRITISH: What island?

THE KNIGHTS: That island.

THE BRITISH: Nope. Can’t see an island.

After the Napoleonic Wars no one really wanted to bring up the whole Malta thing with the British (the Putin’s Russia of the era), so the European powers fudged it. They said the Knights were still a sovereign state and tried to sort them out with a new country, but never did.

The Russian Emperor let them hang out in St Petersburg for a while, but that was awkward (Catholic vs Orthodox). Then the Swedes were persuaded to offer them Gotland.

But every offer was conditional on the Knights dropping their claim to Malta. Which they REFUSED to do.

~ wobbly lines ~

It’s the 1900s. The Knights are still a landless state complaining about Malta. What that means legally is a can of worms NO ONE wants to open in international law, but they’ve also rediscovered their original mission (healthcare), so everyone kinda ignores them.

The Knights become a pseudo-Red Cross organisation. In WW1 they run ambulance trains and have medical battalions, loosely affiliated with the Italian army (they still do). In WW2 they do it again. Italy surrenders. The Allies move on, and then…

Oh dear.

Who wrote this peace deal again?

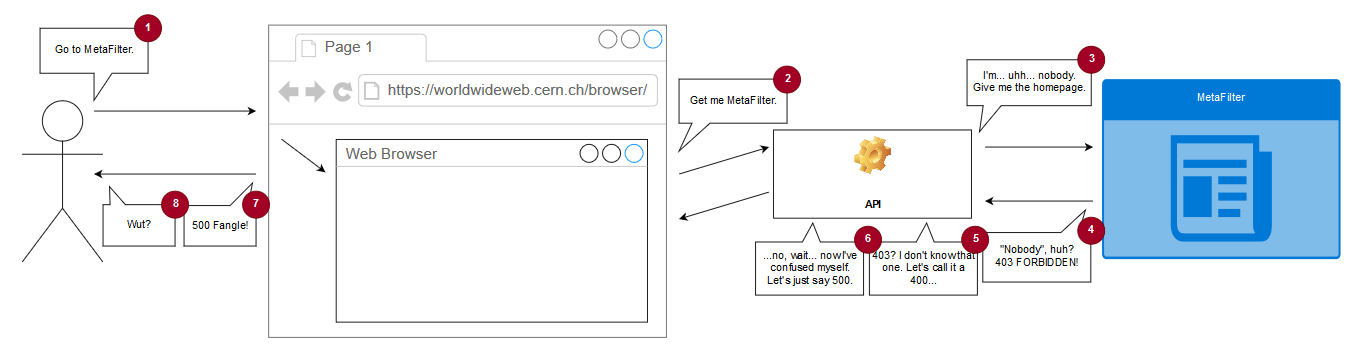

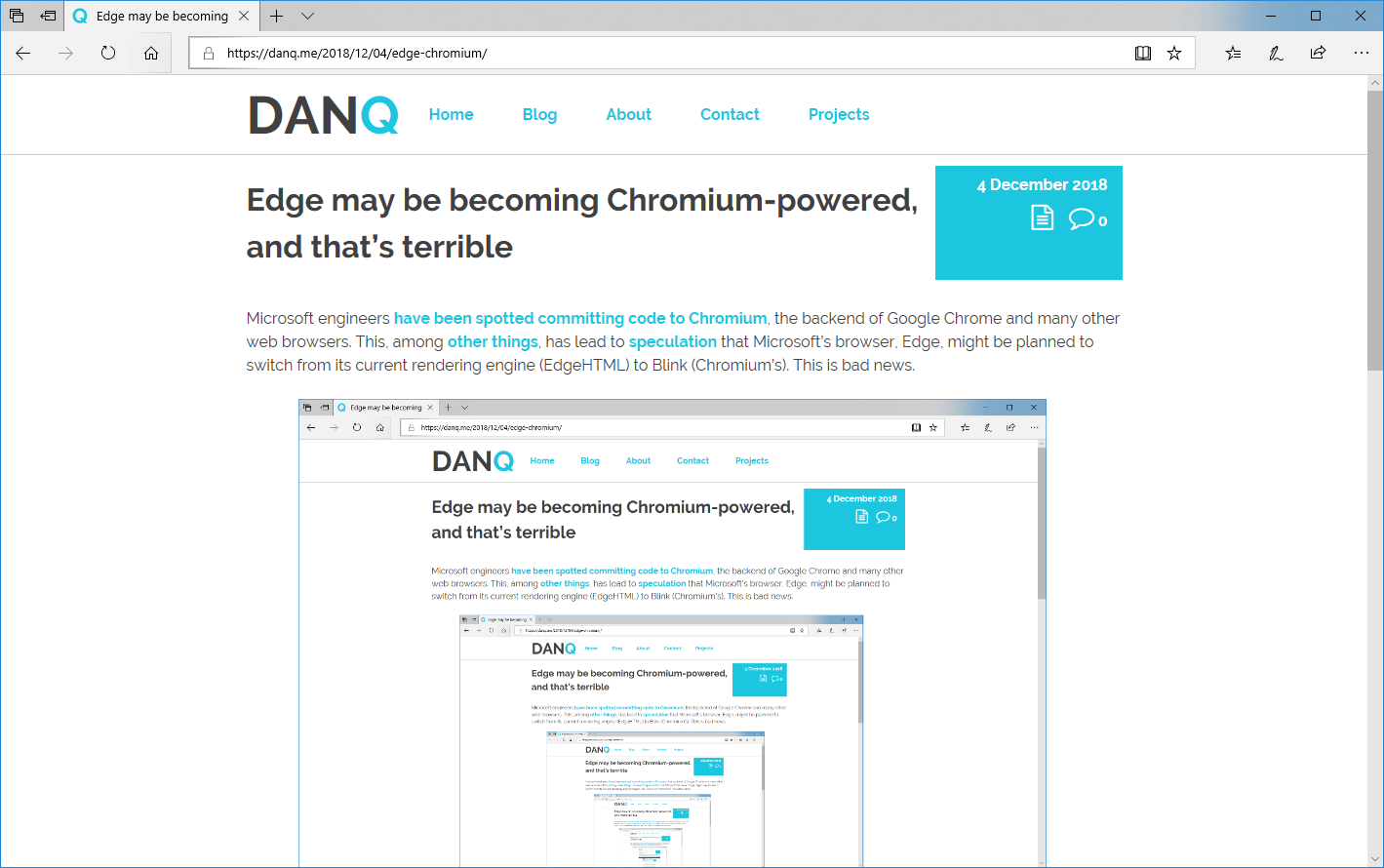

It turns out the Treaty of Peace with Italy (signed in Paris in 1947) should go FIRMLY into the category of ‘things that seemed a good idea at the time’. This is because it assumes that relations between the West and the Soviets will stay good, and so limits Italy’s MILITARY.

This is a problem.

Because as the early Cold War ramps up, the US needs to build up its European allies ASAP. But the treaty limits the Italians to 400 airframes, and bans them from owning ANYTHING that might be a bomber.

This can be changed, but not QUICKLY.

Then someone remembers the Knights.

The Knights might not have any GEOGRAPHY, but because everyone avoided dealing with the tricky international law problem, it can be argued, with a straight face, that they are still TECHNICALLY A EUROPEAN SOVEREIGN STATE. And they’re not bound by the WW2 peace treaty.

Italy (with US/UK/French blessing) approaches the Knights and explains the problem. The Knights reasonably point out that they’re not in the business of fighting wars anymore, but anything that could be called a SUPPORT aircraft is another matter.

So, in the aftermath of WW2, this is the ballet that happens:

The Italians transfer all of their support and training aircraft to the Knights.

This then frees up the ‘cap room’ to allow the US to boost Italy’s warfighting ability WITHOUT breaking the WW2 peace treaty.

And that’s how the Knights Hospitaller ended up becoming a major air power. Eventually the treaties were reworked, and everything was quietly transferred back. I suspect it’s one reason why the sovereign status of the Knights still remains unchallenged today, though.

And that’s why today, even though they are now fully committed to the Red-Cross-esque stuff, they can still issue passports, are a permanent observer at the UN, have a currency… and even have a tiny bit of Malta back.

How the Knights Hospitaller ‘accidentally’ became a major European air power

This article is a repost promoting content originally published elsewhere. See more things Dan's reposted.