…

I’m writing up this information because Dreamwidth’s legal advocacy work has been largely underrecognized. I have not seen a scrap of mainstream news coverage out there that delves into the unique role that Dreamwidth has played in the NetChoice lawsuits, and in a tech news landscape that inspires so much resignation and despair, I think people deserve to know about how Dreamwidth is putting up a fight. Not only does Dreamwidth refuse to engage in intrusive tracking, it’s proactively participating in lawsuits against state governments that try to force its hand. For all that many lawmakers are trying to make the web worse, Dreamwidth is leveraging itself as proof that a better web is possible.

…

If your mental model of Dreamwidth is “it’s like LiveJournal, but…” then you owe it to yourself to read Coyote’s excellent explanation of how Dreamwidth is so much more: a beacon of privacy-centric and censorship-resistant blog hosting in a world that increasingly seems at best uninterested in, and at worst actively hostile to, such things.

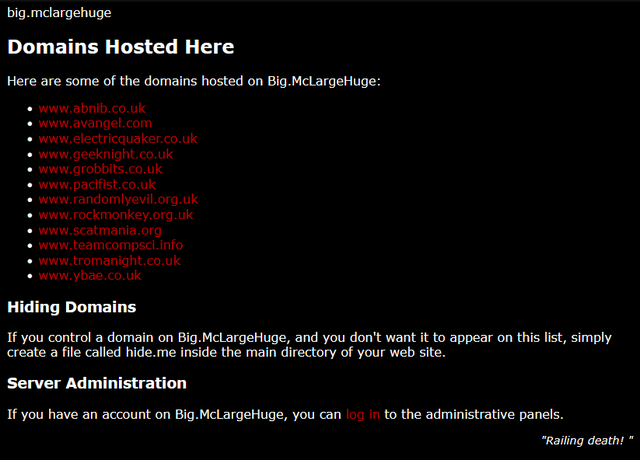

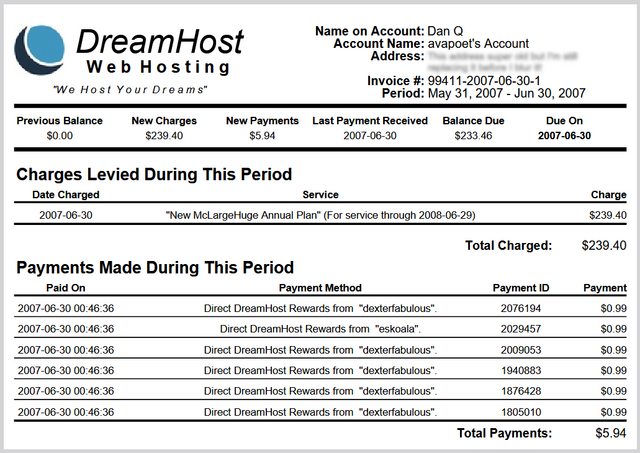

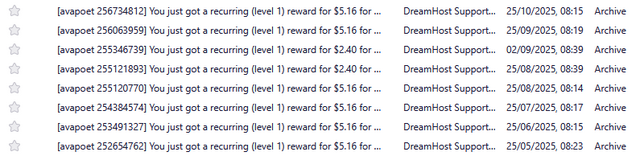

That it’s not for me personally (I’m more a selfhost type) doesn’t mean it’s not a great choice for you: it’s got solid free and reasonably-priced premium tiers and all the kinds of features you’d expect from a service like LiveJournal, or Tumblr, or Medium… but without all of the antifeatures that come with each of those.

And yeah, they’re on the side of the good guys:

Coyote also wrote the excellent You Can Make A Website, if you’re looking for further reading from the same author.