…

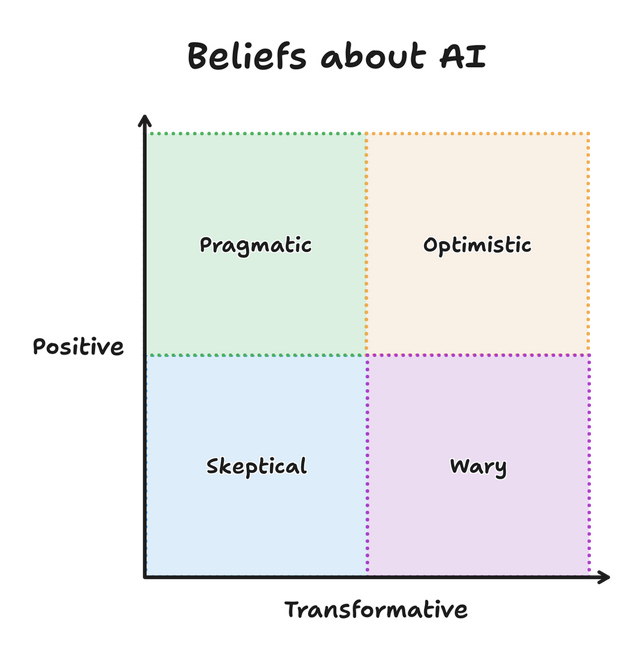

I’ve grouped these four perspectives, but everything here is a spectrum. Depending on the context or day, you might find yourself at any point on the graph. And I’ve attempted to describe each perspectively [sic] generously, because I don’t believe that any are inherently good or bad. I find myself switching between perspectives throughout the day as I implement features, use tools, and read articles. A good team is probably made of members from all perspectives.

Which perspective resonates with you today? Do you also find yourself moving around the graph?

…

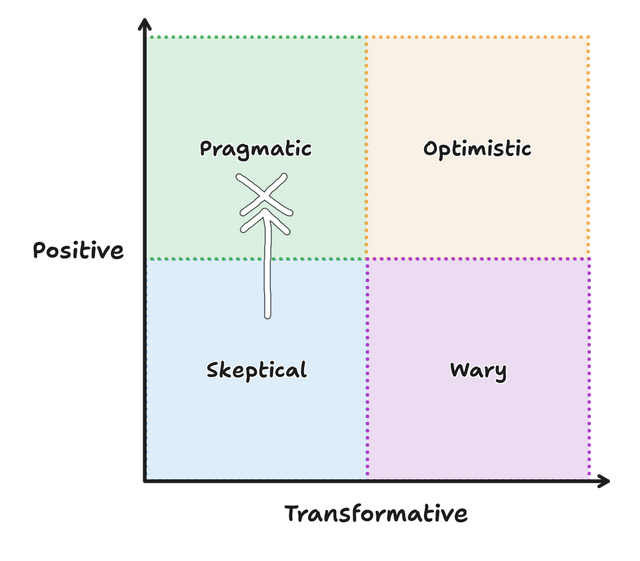

An interesting question from Sean McPherson. He sounds like he’s focussed on LLMs for software development, for which I’ve drifted around a little within the left-hand side of the graph. But perhaps right now, this morning, you could simplify my feelings like this:

My stance is that AI-assisted coding can be helpful (though the question remains open about whether it’s “worth it”), so long as you’re not trying to do anything that you couldn’t do yourself, and you know how you’d go about doing it yourself. That is: it’s only useful to accelerate tasks that are in your “known knowns” space.

As I’ve mentioned: the other week I had a coding AI help me with some code that interacted with the Google Sheets API. I know exactly how I’d go about it, but that journey would have to start with re-learning the Google Sheets API, getting an API key and giving it the appropriate permissions, and so on. That’s the kind of task that I’d be happy to outsource to a less-experienced programmer who I knew would bring a somewhat critical eye for browsing Stack Overflow, and then give them some pointers on what came back, so it’s a fine candidate for an AI to step in and give it a go. Plus: I’d be treating the output as “legacy code” from the get-go, and (because the resulting tool was only for my personal use) I wasn’t too concerned with the kinds of security and accessibility considerations that GenAI can often make a pig’s ear of. So I was able to palm off the task onto Claude Sonnet and get on with something else in the meantime.

If I wanted to do something completely outside of my wheelhouse: say – “write a program in Fortran to control a robot arm” – an AI wouldn’t be a great choice. Sure, I could “vibe code” something like that, but I’d have no idea whether what it produced was any good! It wouldn’t even be useful as a springboard to learning how to do that, because I don’t have the underlying fundamentals in either robotics or Fortran. I’d be producing AI slop in software form: the kind of thing that comes out when non-programmers assume that AI can completely bridge the gap between their great business idea and a fully working app!

They’ll get a prototype that seems to do what they want, if they squint just right, but the hard part of software engineering isn’t making a barebones proof-of-concept! That’s the easy bit! (That’s why AI can do it pretty well!) The hard bit is making it work all the time, every time; making it scale; making it safe to use; making it maintainable; making it production-ready… etc.

But I do benefit from coding AI sometimes. GenAI’s good at summarisation, which in turn can make it good at relatively quickly finding things in a sprawling codebase where your explanation of those things is too woolly to use a conventional regular expression search. It’s good at generating boilerplate that’s broadly like examples it’s seen before, which means it can usually be trusted to put together skeleton applications. It’s good at “guessing what comes next” – being, as it is, “fancy autocomplete” – which means it can be helpful for prompting you for the right parameters for that rarely-used function or for speculating what you might be about to do with the well-named variable you just created.

Anyway: Sean’s article was pretty good, and it’s a quick and easy read. Once you’ve read it, perhaps you’ll share where you think you sit, on his diagram?