…

How well does the algorithm perform? Getting LIME set up can be a bit of a pain, depending on your environment. The examples in Tulio Ribeiro’s GitHub repo are in Python and have been optimised for Jupyter notebooks. I decided to get the code for a basic image analyser running in a Docker container, which involved much head-scratching and the installation of numerous Python libraries and packages, along with a bunch of pre-trained models. As ever, the code needed a bit of massaging to get it to run in my environment, but once that was done, it worked well.

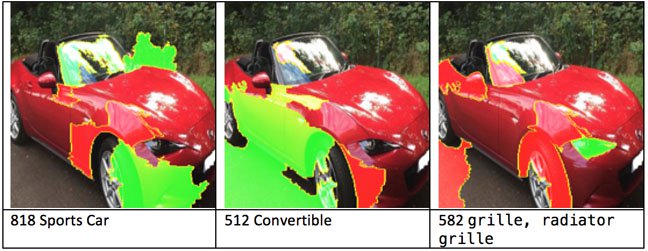

Below are three output images showing the explanation for the top three classifications of the red car above:

In these images, the green areas count in favour of the classification and the red areas count against it. What’s interesting here (and this is just my explanation) is that the pluses and minuses for convertible and sports car are quite different, although to our minds a convertible and a sports car are probably similar.
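
To make the green/red idea concrete, here is a minimal sketch of perturbation-based explanation in the spirit of LIME. It is not LIME's actual code or API: the segment names, the `toy_convertible_score` function and its weights are all made up for illustration, and it uses a plain difference of average scores where LIME fits a locally weighted linear model over randomly perturbed superpixels.

```python
from itertools import product

# Hypothetical image segments (real tools derive superpixels from the image).
SEGMENTS = ["roof", "wheels", "bonnet", "background"]


def toy_convertible_score(mask):
    """Made-up stand-in for a classifier's 'convertible' score.

    mask[i] is 1 if segment i is visible and 0 if it has been hidden.
    The weights are invented purely for this illustration.
    """
    roof, wheels, bonnet, background = mask
    return 0.5 - 0.3 * roof + 0.2 * wheels + 0.1 * bonnet + 0.0 * background


def segment_effects(score_fn, n_segments):
    """Estimate each segment's effect by hiding and showing segments.

    Every on/off combination is scored; a segment's effect is the average
    score when it is visible minus the average score when it is hidden.
    """
    masks = list(product([0, 1], repeat=n_segments))
    scores = [score_fn(m) for m in masks]
    effects = []
    for i in range(n_segments):
        shown = [s for m, s in zip(masks, scores) if m[i] == 1]
        hidden = [s for m, s in zip(masks, scores) if m[i] == 0]
        effects.append(sum(shown) / len(shown) - sum(hidden) / len(hidden))
    return effects


def colour(effect, tol=1e-9):
    """Map an effect to the overlay colour used in the explanation images."""
    if effect > tol:
        return "green"  # pushes the score up
    if effect < -tol:
        return "red"  # pushes the score down
    return "neutral"


effects = segment_effects(toy_convertible_score, len(SEGMENTS))
for name, effect in zip(SEGMENTS, effects):
    print(f"{name:<10} {effect:+.2f} {colour(effect)}")
```

With these invented weights, the roof comes out red for "convertible" while the wheels and bonnet come out green. A different class would have its own score function and could colour the same segments quite differently, which is one way two similar-sounding labels can end up with different-looking explanations.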

…

A fascinating look at how a neural-net-powered AI picture classifier can be reverse-engineered to explain the features of the pictures it saw and how they influenced its decisions. The existence of tools that can perform this kind of work has important implications for the explicability of the output of automated decision-making systems, which becomes ever more relevant as neural nets are used to drive cars, assess loan applications, and so on.

Remember all the funny examples of neural nets which could identify wolves fine so long as they had snowy backgrounds, because of bias in their training set? The same thing happens with real-world applications, too, resulting in AIs that take on the worst of the biases of the world around them, making them racist, sexist, etc. We need auditability so we can understand and retrain AIs.