Last month I got the opportunity to attend the EEBO-TCP Hackfest, hosted in the (then still very-much under construction) Weston Library at my workplace. I’ve done a couple of hackathons and similar get-togethers before, but this one was somewhat different in that it was unmistakably geared towards a different kind of geek than the technology-minded folks that I usually see at these things. People like me, with a computer science background, were remarkably in the minority.

Instead, this particular hack event attracted a great number of folks from the humanities end of the spectrum. Which is understandable, given its theme: the Early English Books Online Text Creation Partnership (EEBO-TCP) is an effort to digitise and make available in marked-up, machine-readable text formats a huge corpus of English-language books printed between 1475 and 1700. So: a little over three centuries of work including both household names (like Shakespeare, Galileo, Chaucer, Newton, Locke, and Hobbes) and an enormous number of others that you’ll never have heard of.

The hackday event was scheduled to coincide with and celebrate the release of the first 25,000 texts into the public domain, and attendees were challenged to come up with ways to use the newly-available data in any way they liked. As is common with any kind of hackathon, many of the attendees had come with their own ideas half-baked already, but as for me: I had no idea what I’d end up doing! I’m not particularly familiar with the books of the 15th through 17th centuries and I’d never looked at the way in which the digitised texts had been encoded. In short: I knew nothing.

Instead, I’d thought: there’ll be people here who need a geek. A major part of a lot of the freelance work I end up doing (and a lesser part of my work at the Bodleian, from time to time) involves manipulating and mining data from disparate sources, and it seemed to me that these kinds of skills would be useful for a variety of different conceivable projects.

I paired up with a chap called Stephen Gregg, a lecturer in 18th century literature from Bath Spa University. His idea was to use this newly-open data to explore the frequency (and the change in frequency over the centuries) of particular structural features in early printed fiction: features like chapters, illustrations, dedications, notes to the reader, encomia, and so on). This proved to be a perfect task for us to pair-up on, because he had the domain knowledge to ask meaningful questions, and I had the the technical knowledge to write software that could extract the answers from the data. We shared our table with another pair, who had technically-similar goals – looking at the change in the use of features like lists and tables (spoiler: lists were going out of fashion, tables were coming in, during the 17th century) in alchemical textbooks – and ultimately I was able to pass on the software tools I’d written to them to adapt for their purposes, too.

And here’s where I made a discovery: the folks I was working with (and presumably academics of the humanities in general) have no idea quite how powerful data mining tools could be in giving them new opportunities for research and analysis. Within two hours we were getting real results from our queries and were making amendments and refinements in our questions and trying again. Within a further two hours we’d exhausted our original questions and, while the others were writing-up their findings in an attractive way, I was beginning to look at how the structural differences between fiction and non-fiction might be usable as a training data set for an artificial intelligence that could learn to differentiate between the two, providing yet more value from the dataset. And all the while, my teammates – who’d been used to looking at a single book at a time – were amazed by the possibilities we’d uncovered for training computers to do simple tasks while reading thousands at once.

Elsewhere at the hackathon, one group was trying to simulate the view of the shelves of booksellers around the old St. Paul’s Cathedral, another looked at the change in the popularity of colour and fashion-related words over the period (especially challenging towards the beginning of the timeline, where spelling of colours was less-standardised than towards the end), and a third came up with ways to make old playscripts accessible to modern performers.

At the end of the session we presented our findings – by which I mean, Stephen explained what they meant – and talked about the technology and its potential future impact – by which I mean, I said what we’d like to allow others to do with it, if they’re so-inclined. And I explained how I’d come to learn over the course of the day what the word encomium meant.

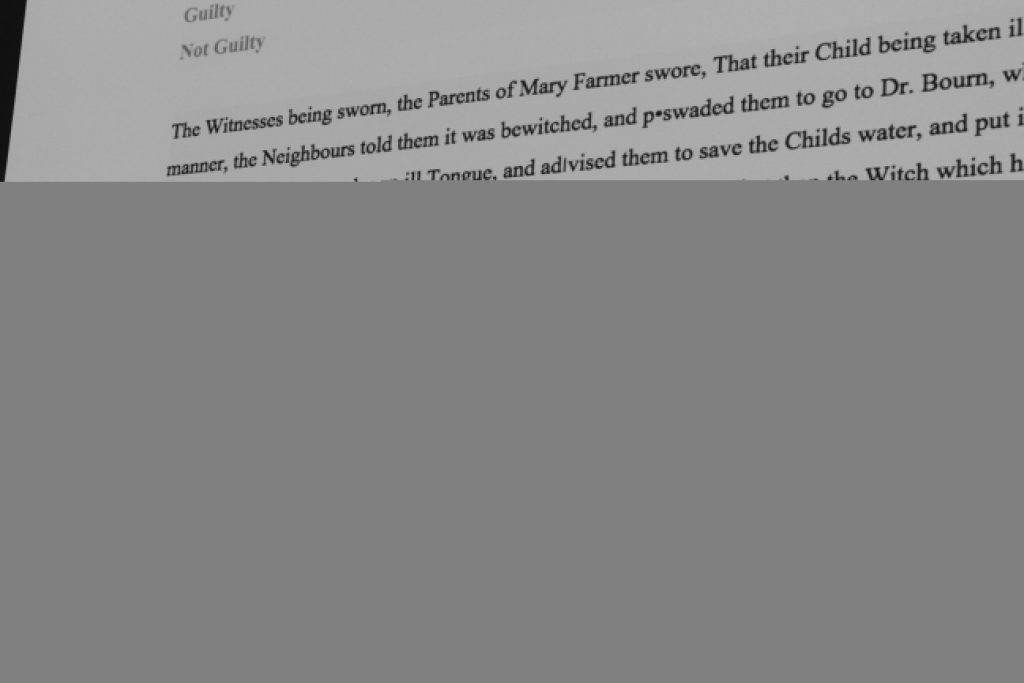

My personal favourite contribution from the event was by Sarah Cole, who adapted the text of a story about a witch trial into a piece of interactive fiction, powered by Twine/Twee, and then allowed us as an audience to collectively “play” her game. I love the idea of making old artefacts more-accessible to modern audiences through new media, and this was a fun and innovative way to achieve this. You can even play her game online!

(by the way: for those of you who enjoy my IF recommendations: have a look at Detritus; it’s a delightful little experimental/experiential game)

But while that was clearly my favourite, the judges were far more impressed by the work of my teammate and I, as well as the team who’d adapted my software and used it to investigate different features of the corpus, and decided to divide the cash price between the four of us. Which was especially awesome, because I hadn’t even realised that there was a prize to be had, and I made the most of it at the Drinking About Museums event I attended later in the day.

If there’s a moral to take from all of this, it’s that you shouldn’t let your background limit your involvement in “hackathon”-like events. This event was geared towards literature, history, linguistics, and the study of the book… but clearly there was value in me – a computer geek, first and foremost – being there. Similarly, a hack event I attended last year, while clearly tech-focussed, wouldn’t have been as good as it was were it not for the diversity of the attendees, who included a good number of artists and entrepreneurs as well as the obligatory hackers.

But for me, I think the greatest lesson is that humanities researchers can benefit from thinking a little bit like computer scientists, once in a while. The code I wrote (which uses Ruby and Nokogiri) is freely available for use and adaptation, and while I’ve no idea whether or not it’ll ever be useful to anybody again, what it represents is the research benefits of inter-disciplinary collaboration. It pleases me to see things like the “Library Carpentry” (software for research, with a library slant) seeming to take off.

And yeah, I love a good hackathon.

Update 2015-04-22 11:59: with thanks to Sarah for pointing me in the right direction, you can play the witch trial game in your browser.

@scatmandan @irny Thanks for sharing this great post Dan. Coincidentally I’m writing it up too, but now I’ve some work to do to match yours!

Humanities scholars “have no idea quite how powerful data mining tools could be” @scatmandan reports on #eebotcphack

@scatmandan @gregg_sh Lovely! Thanks! :) It was a fun day. BTW, if you wanted a link to the game it’s online at http://www.joanbuts.timeimage.org.uk/JoanButs.html

The Bodleian’s own @scatmandan on #eebotcphack: Don’t let your background limit your involvement

Neat! From my understanding, 15th Century English and today’s English are much different. Are there going to be any kind of translations.

It’s not always so bad as you’d think: Don Quixote was first translated to English in 1612, for example, but is still perfectly readable (so long as you don’t mind exceptionally-long and flowery sentences and a handful of pieces of terminology that have since left the popular lexicon). Shakespeare’s earliest plays were published around 1590 and, again, are quite readable (especially with a couple of interpretative notes to help provide context).

Very early texts in this period are harder specifically because English was less-standardised at that point, and more-inclined to be written phonetically. In many ways, the development of the printing press seems to have had a huge impact on standardising English spelling, and so only a hundred years later the printed word exhibits far greater comprehensibility to modern readers than it did before then.

There are no such academic efforts that I’m aware of, but now that these texts are available in the public domain there’s nothing to stop such an endeavour from being easily crowd-sourced: it’d even be possible to do some kind of computer-assisted crowd-sourcing, where a computerised dictionary helps humans to modernise old works. Hell – if you wanted to run such a project, you can submit your proposal to my employer for a chance to win a prize. Just sayin’.

Note that I’m a computer programmer and (very definitely) not a linguist nor a historian, so some or all of the above might be complete bullshit. You have been warned!

I so wish I could do half of what you do, dude.