What is it? What is it for? I’ve never seen anything like it before!

Dan Q

This article is a repost promoting content originally published elsewhere. See more things Dan's reposted.

You can listen to an audio version of Web! What is it good for?

I have a blind spot. It’s the web.

I just can’t get excited about the prospect of building something for any particular operating system, be it desktop or mobile. I think about the potential lifespan of what would be built and end up asking myself “why bother?” If something isn’t on the web—and of the web—I find it hard to get excited about it. I’m somewhat jealous of people who can get equally excited about the web, native, hardware, print …in my mind, if it hasn’t got a URL, it’s missing some vital spark.

I know that this is a problem, but I can’t help it. At the very least, I have enough presence of mind to recognise it as being my problem.

…

My problem, too. There are worse problems to have.

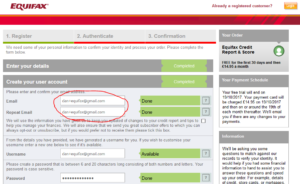

This technique’s about a decade old, but a lot of people still aren’t using it, and I can’t help but suspect that can only be because they didn’t know about it yet, so let’s revisit:

You have a GMail account, right? Or else Google for Domains? Suppose your email address is dan@gmail.com… did you know that also means that you own:

You have a practically infinite number of GMail addresses. Just put a plus sign (+) after your name but before the @-sign and then type anything you like there, and the email will still reach you. You can also insert as many full stops (.) as you like, anywhere in the first half of your email address, and they’ll still reach you, too. And that’s really, really useful.

When you’re asked to give your email address to a company, don’t give them your email address. Instead, give them a mutated form of your email address that will still work, but that identifies exactly who you gave it to. So for example you might give the email address dan+amazon@gmail.com to Amazon, the email address dan+twitter@gmail.com to Twitter, and the email address dan+pornhub@gmail.com to… that other website you have an account on.
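As a minimal sketch of the idea, the helper below builds a tagged address from your real address and the name of the service you're signing up to. The function name `tagAddress` is made up for illustration, and it assumes your mail provider honours plus-addressing the way GMail does.

```javascript
// Hypothetical helper: builds a "plus-addressed" variant of an email address.
// Assumes the provider (e.g. GMail) delivers local+tag@domain to local@domain.
function tagAddress(address, tag) {
  const at = address.lastIndexOf('@');
  if (at < 1) {
    throw new Error('Not an email address: ' + address);
  }
  const local = address.slice(0, at);
  const domain = address.slice(at + 1);
  // Keep the tag to simple characters so that fussy sign-up forms accept it.
  const safeTag = String(tag).toLowerCase().replace(/[^a-z0-9._-]/g, '');
  if (safeTag === '') {
    throw new Error('Tag is empty after clean-up');
  }
  return local + '+' + safeTag + '@' + domain;
}

console.log(tagAddress('dan@gmail.com', 'amazon'));  // dan+amazon@gmail.com
console.log(tagAddress('dan@gmail.com', 'Twitter')); // dan+twitter@gmail.com
```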

Why is this a clever idea? Well, there are a few reasons:

I know that some people get some of these benefits by maintaining a ‘throwaway’ email address. But it’s far more-convenient to use the email address you already have (you’re already logged-in to it and you use it every day)! And if you ever do want a true ‘throwaway’, you’re generally better using Mailinator: when you’re asked for your email address, just mash the keyboard and then put @mailinator.com on the end, to get e.g. dsif9tsnev4y8594es87n65y4@mailinator.com. Copy the first half of the email address to the clipboard, and then when you’re done signing up to whatever spammy service it is, just go to mailinator.com and paste into the box to see what they emailed you.

A handful of badly-configured websites won’t accept email addresses with plus signs in them, claiming that they’re invalid (they’re not). Personally, when I come across these I generally just inform the owner of the site of the bug and then take my business elsewhere; that’s how important it is to me to be able to filter my email properly! But another option is to exploit the fact that you can put as many dots in (the first part of) your GMail address as you like. So you could put d…an@gmail.com in and the email will still reach you, and you can later filter-out emails to that address. I’ll leave it as an exercise for the reader to decide how to encode information about the service you’re signing up to into the pattern and number of dots that you use.
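Going the other way, here's a rough sketch of how you might work out which variant of your address a message was sent to. The name `parseGmailVariant` is illustrative, and the rules it applies (ignore dots, ignore case, treat everything after the plus sign as a tag) assume GMail's behaviour as described above; other providers may differ.

```javascript
// Hypothetical helper: recovers the underlying mailbox from a GMail-style
// variant, and reports the +tag and the number of dots that were used.
function parseGmailVariant(address) {
  const at = address.lastIndexOf('@');
  if (at < 1) {
    throw new Error('Not an email address: ' + address);
  }
  const local = address.slice(0, at).toLowerCase();
  const domain = address.slice(at + 1).toLowerCase();
  const plus = local.indexOf('+');
  const name = plus === -1 ? local : local.slice(0, plus);
  const tag = plus === -1 ? null : local.slice(plus + 1);
  return {
    mailbox: name.replace(/\./g, '') + '@' + domain, // dots are ignored
    tag: tag,                                        // e.g. 'amazon', or null
    dots: name.split('.').length - 1,                // how many dots were added
  };
}

console.log(parseGmailVariant('dan+amazon@gmail.com'));
// { mailbox: 'dan@gmail.com', tag: 'amazon', dots: 0 }
console.log(parseGmailVariant('d.a.n@gmail.com'));
// { mailbox: 'dan@gmail.com', tag: null, dots: 2 }
```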

Go forth and avoid spam.

If you’re a web developer and you haven’t come across the Google AMP project yet… then what stone have you been living under? But just in case you have been living under such a stone – or you’re not a web developer – I’ll fill you in. If you believe Google’s elevator pitch, AMP is “…an open-source initiative aiming to make the web better for all… consistently fast, beautiful and high-performing across devices and distribution platforms.”

I believe that AMP is fucking poisonous and that the people who’ve come out against it by saying it’s “controversial” so far don’t go remotely far enough. Let me tell you about why.

When you configure your website for AMP – like the BBC, The Guardian, Reddit, and Medium already have – you deliver copies of your pages written using AMP HTML and AMP JS rather than the HTML and Javascript that you normally would. This provides a subset of the functionality you’re used to, but it’s quite a rich subset and gives you a lot of power with minimal effort, whether you’re trying to make carousels, video players, social sharing features, or whatever. Then, when your site is found via Google Search on a mobile device, instead of delivering the user to your AMP HTML page or its regular-HTML alternative… Google delivers your site for you as an ultra-fast precached copy via their own network. So far, a mixed bag, right? Wrong.

Ignoring the facts that you can get locked-in if you try it once, it makes the fake news problem worse than ever, and it breaks the core concepts of a linkable web, the thing that worries me the most is that AMP represents the most-subtle threat to Net Neutrality I’ve ever seen… and it’s from an organisation that is nominally in favour of a free and open Internet but that stands to benefit from a more-closed Internet so long as it’s one that they control.

Google’s stated plan to favour pages that use AMP creates a publisher’s arms race in which content creators are incentivised to produce content in the (open-source but) Google-controlled AMP format to rank higher in the search results, or at least regain parity, versus their competitors. Ultimately, if everybody supported AMP then – ignoring the speed benefits for mobile users (more on that in a moment) – the only winner is Google. Google, who would then have a walled garden of Facebook-beating proportions around the web. Once Google delivers all of your content, there’s no such thing as a free and open Internet any more.

So what about those speed increases? Yes, the mobile web is slower than we’d like and AMP improves that. But with the exception of the precaching – which is something that could be achieved by other means – everything that AMP provides can be done using existing technologies. AMP makes it easy for lazy developers to make their pages faster, quickly, but if speed on mobile devices is the metric for your success: let’s just start making more mobile-friendly pages! We can make the mobile web better and still let it be our Web: we don’t need to give control of it to Google in order to shave a few milliseconds off the load time.

We need to reject AMP, and we need to reject it hard. Right now, it might be sufficient to stand up to your boss and say “no, implementing AMP on our sites is a bad idea.” But one day, it might mean avoiding the use of AMP entirely (there’ll be browser plugins to help you, don’t worry). And if it means putting up with a slightly-slower mobile web while web developers remain lazy, so be it: that’s a sacrifice I’m willing to make to help keep our web free and open. And I hope you will be, too.

Like others, I’m just hoping that Sir Tim will feel the urge to say something about this development soon.

Maybe it’s because I was at Render Conf at the end of last month or perhaps it’s because Three Rings DevCamp – which always gets me inspired – was earlier this month, but I’ve been particularly excited lately to get the chance to play with some of the more “cutting edge” (or at least, relatively-new) web technologies that are appearing on the horizon. It feels like the Web is having a bit of a renaissance of development, spearheaded by the fact that it’s no longer Microsoft that are holding development back (but increasingly Apple) and, perhaps for the first time, the fact that the W3C are churning out standards “ahead” of where the browser vendors are managing to implement technical features, rather than simply reflecting what’s already happening in the world.

It seems to me that HTML5 may well be the final version of HTML. Rather than making grand new releases to the core technology, we’re now – at last! – in a position where it’s possible to iteratively add new techniques in a resilient, progressive manner. We don’t need “HTML6” to deliver us any particular new feature, because the modern web is more-modular and is capable of having additional features bolted on. We’re in a world where browser detection has been replaced with feature detection, to the extent that you can even do non-hacky feature detection in pure CSS, now, and this (thanks to the nature of the Web as a loosely-coupled, resilient platform) means that it’s genuinely possible to progressively-enhance content and get on board with each hot new technology that comes along, if you want, while still delivering content to users on older browsers.
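In script, feature detection boils down to asking "does this API exist?" before you use it. Here's a small sketch; the function name `detectFeatures` and the particular features checked are just examples, and the global object is passed in as a parameter so that the function can also be tried outside a browser.

```javascript
// Illustrative feature detection: ask "does this API exist?" rather than
// "which browser is this?". The function name and the chosen features are
// just examples.
function detectFeatures(env) {
  return {
    fetch: typeof env.fetch === 'function',
    intersectionObserver: typeof env.IntersectionObserver === 'function',
    webWorkers: typeof env.Worker === 'function',
    serviceWorkers: Boolean(env.navigator && 'serviceWorker' in env.navigator),
    // CSS.supports() is the script-side counterpart of CSS's @supports rule.
    cssGrid: Boolean(env.CSS && typeof env.CSS.supports === 'function' &&
      env.CSS.supports('display', 'grid')),
  };
}

// In a browser you'd pass `window`; `globalThis` also works elsewhere.
const features = detectFeatures(globalThis);
if (!features.intersectionObserver) {
  // Fall back to the baseline experience, e.g. load every image up front.
}
```

The point is that the baseline content is delivered either way; the check only decides whether the enhancement gets layered on top.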

And that’s the dream! A web of progressive-enhancement stays true to Sir Tim’s dream of universal interoperability while still moving forward technologically. I’ve no doubt that there’ll always be people who want to break the Web – even Google do it, sometimes – with single-page Javascript-only web apps, “app shell” websites, mobile-only or desktop-only experiences and “apps” that really ought to have been websites (and perhaps PWAs) to begin with… but the fact that the tools to make a genuinely “progressively-enhanced” web exist, and that those tools are mainstream, is a big deal. If you don’t think we’re at that point yet, I invite you to watch Rachel Andrew’s fantastic presentation, “Start Using CSS Grid Layout Today”.

Some of the things I’ve been playing with recently include:

It’s only really supported in Chrome (though there’s a great polyfill), but the Intersection Observer API is one of those technologies that make you say “why didn’t we have that already?” It’s very simple: all an Intersection Observer does is provide event hooks for target objects entering or leaving the viewport, without resorting to polling or hacky code on scroll event captures.

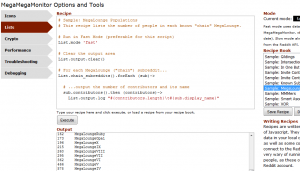

What’s it for? Well the single most-obvious use case is lazy-loading images, a-la Medium or Google Image Search: delivering users a placeholder image or a low-resolution copy until they scroll far enough for the image to come into view (or almost into view) and then downloading the full-resolution version and dynamically replacing it. My first foray into Intersection Observers was to take Medium’s approach and then improve it with a Service Worker in order to make it behave nicely even if the user’s Internet connection was unreliable, but I’ve since applied it to my Reddit browser plugin MegaMegaMonitor: rather than hammering the browser with Javascript the plugin now waits until relevant content enters the viewport before performing resource-intensive tasks.
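To make the lazy-loading case concrete, here's a minimal sketch. It assumes each image is marked up with its placeholder in `src` and the full-resolution URL in a `data-src` attribute; that convention and the name `lazyLoadImages` are illustrative, not taken from Medium or from my own implementation.

```javascript
// Sketch of lazy-loading with an Intersection Observer. Assumes each image is
// marked up with a placeholder in `src` and the real URL in a `data-src`
// attribute; that convention, and the function name, are illustrative.
function lazyLoadImages(images, ObserverClass = globalThis.IntersectionObserver) {
  const observer = new ObserverClass(function (entries, obs) {
    entries.forEach(function (entry) {
      if (!entry.isIntersecting) return; // still off-screen
      const img = entry.target;
      img.src = img.dataset.src;         // swap in the full-resolution image
      obs.unobserve(img);                // each image only needs loading once
    });
  }, {
    rootMargin: '200px', // start loading slightly before the image is visible
  });
  images.forEach(function (img) { observer.observe(img); });
  return observer;
}

// In a page:
//   lazyLoadImages(Array.from(document.querySelectorAll('img[data-src]')));
```

The `rootMargin` option is what gives you the "or almost into view" behaviour: it grows the area that counts as the viewport, so the download starts a little early.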

I’d briefly played with Service Workers before and indeed we’re adding a Service Worker to the next version of Three Rings, which, in conjunction with a manifest.json and the service’s (ongoing) delivery over HTTPS (over H2, where available, since last year), technically makes it a Progressive Web App… and I’ve been looking for opportunities to make use of Service Workers elsewhere in my work, too… but my first dive in to Web Workers was in introducing one to the next upcoming version of MegaMegaMonitor.

Web Workers add true multithreading to Javascript, and in the case of MegaMegaMonitor this means the possibility of pushing the more-intensive work that the plugin has to do out of the main thread and into the background, allowing the user to enjoy an uninterrupted browsing experience while the heavy-lifting goes on in the background. Because I don’t control the domain on which this Web Worker runs (it’s reddit.com, of course!), I’ve also had the opportunity to play with Blobs, which provided a convenient way for me to inject Worker code onto somebody else’s website from within a userscript. This has also led me to the discovery that it ought to be possible to implement userscripts that inject Service Workers onto websites, which could be used to mashup additional functionality into websites far in advance of that which is typically possible with a userscript… more on that if I get around to implementing such a thing.
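The Blob trick looks something like the sketch below. This isn't MegaMegaMonitor's actual code: the function name, the `env` parameter (a stand-in for the browser's global object, so the function can be exercised elsewhere) and the worker's toy workload are all made up for illustration. Whether it works on a given site will also depend on that site's Content Security Policy.

```javascript
// Sketch: start a Web Worker from a string of source code rather than from a
// file hosted on the page's own domain. `env` stands in for the browser's
// global object; all names here are illustrative.
function createWorkerFromSource(source, env = globalThis) {
  const blob = new env.Blob([source], { type: 'application/javascript' });
  const url = env.URL.createObjectURL(blob); // a blob: URL the page can load
  const worker = new env.Worker(url);
  return { worker: worker, url: url };
}

// Code that will run on the background thread.
const workerSource = `
  self.onmessage = function (event) {
    // Pretend this is the expensive part.
    const total = event.data.reduce(function (sum, n) { return sum + n; }, 0);
    self.postMessage(total);
  };
`;

// In a browser or userscript:
//   const { worker } = createWorkerFromSource(workerSource);
//   worker.onmessage = function (event) { console.log(event.data); };
//   worker.postMessage([1, 2, 3]);
```

The URL is returned alongside the worker so that the caller can pass it to `URL.revokeObjectURL()` once the worker is no longer needed.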

The last of the new technologies I’ve been playing with this month is the Fetch API. I’m not pulling any punches when I say that the Fetch API is exactly what XMLHttpRequests should have been from the very beginning. Understanding it properly has finally given me the confidence to stop using jQuery for the one thing for which I always seemed to have to depend on it – that is, simplifying Ajax requests! I mean, look at this elegant code:

    fetch('posts.json')
      .then(function(response) {
        return response.json();
      })
      .then(function(json) {
        console.log(json.something.otherThing);
      });

Whether or not you’re a fan of Javascript, you’ve got to admit that that’s infinitely more readable than XMLHttpRequest hackery (at least, without the help of a heavyweight library like jQuery).
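For comparison, here's roughly what the same request looks like written against XMLHttpRequest directly. It's a sketch: the function name is illustrative, and `XhrClass` is only a parameter so that the code can be tried with a stand-in outside a browser.

```javascript
// Roughly the same request written against XMLHttpRequest, for comparison.
// `XhrClass` is only a parameter so the sketch can be tried with a stand-in
// outside a browser; the function name is illustrative.
function getJsonWithXhr(url, onSuccess, onError, XhrClass = globalThis.XMLHttpRequest) {
  const xhr = new XhrClass();
  xhr.open('GET', url);
  xhr.onload = function () {
    if (xhr.status < 200 || xhr.status >= 300) {
      onError(new Error('HTTP ' + xhr.status));
      return;
    }
    let json;
    try {
      json = JSON.parse(xhr.responseText);
    } catch (err) {
      onError(err); // the body wasn't valid JSON
      return;
    }
    onSuccess(json);
  };
  xhr.onerror = function () {
    onError(new Error('Network error'));
  };
  xhr.send();
}

// getJsonWithXhr('posts.json', function (json) {
//   console.log(json.something.otherThing);
// }, console.error);
```

One difference worth knowing: this version treats a 404 or 500 as an error, whereas a `fetch()` promise only rejects on network failure, so a like-for-like Fetch version would also check `response.ok` before parsing.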

So that’s some of the stuff I’ve been playing with lately: Intersection Observers, Web Workers, Blobs, and the Fetch API. And I feel all full of optimism on behalf of the Web.

This article is a repost promoting content originally published elsewhere. See more things Dan's reposted.

I have a vivid, recurring dream. I climb the stairs in my parents’ house to see my old bedroom. In the back corner, I hear a faint humming.

It’s my old computer, still running my 1990s-era bulletin board system (BBS, for short), “The Cave.” I thought I had shut it down ages ago, but it’s been chugging away this whole time without me realizing it—people continued calling my BBS to play games, post messages, and upload files. To my astonishment, it never shut down after all…

This article is a repost promoting content originally published elsewhere. See more things Dan's reposted.

Pornhub has found an innovative way to deliver ads to users of ad blocking software, exploiting limitations in Chrome’s content blocking API…

This article is a repost promoting content originally published elsewhere. See more things Dan's reposted.

I am the original author of GNU grep. I am also a FreeBSD user, although I live on -stable (and older) and rarely pay attention to -current. However, while searching the -current mailing list for an unrelated reason, I stumbled across some flamage regarding BSD grep vs GNU grep performance. You may have noticed that discussion too...

This article is a repost promoting content originally published elsewhere. See more things Dan's reposted.

In the beginning there was NCSA Mosaic, and Mosaic called itself NCSA_Mosaic/2.0 (Windows 3.1), and Mosaic displayed pictures along with text, and there was much rejoicing…

Have you ever wondered why every major web browser identifies itself as “Mozilla”? Wonder no longer…

This article is a repost promoting content originally published elsewhere. See more things Dan's reposted.

The coolest talk of this year’s Blackhat must have been the one of Sean Devlin and Hanno Böck. The talk summarized this early year’s paper, in a very cool way: Sean walked on stage and announced that he didn’t have his slides. He then said that it didn’t matter because he had a good idea on how to retrieve them…

This article is a repost promoting content originally published elsewhere. See more things Dan's reposted.

Here is an example scenario… You receive an email requesting a payment. It could be for rent, it could be fees for a course or any other legitimate reason. Typically, the payment is a significant sum. The email contains the banking details you need to make the payment. Then shortly after the 1st email arrives…

An annual tradition at Three Rings is DevCamp, an event that borrows from the “hackathon” concept and expands it to a week-long code-producing factory for the volunteers of the Three Rings development team. Motivating volunteers is a very different game to motivating paid employees: you can’t offer to pay them more for working harder nor threaten to stop paying them if they don’t work hard enough, so it’s necessary to tap in to whatever it is that drives them to be a volunteer, and help them get more of that out of their volunteering.

At least part of what appeals to all of our developers is a sense of achievement – of producing something that has practical value – as well as of learning new things, applying what they’ve learned, and having a degree of control over the parts of the project they contribute most-directly to. Incidentally, these are the same things that motivate paid developers, too, if a Google search for studies on the subject is to be believed. It’s just that employers are rarely able or willing to offer all of those things (and even if they can, you can’t use them to pay your mortgage), so they have to put money on the table too. With my team at Three Rings, I don’t have money to give them, so I have to make up for it with a surplus of those things that developers actually want.

It seems strange to me in hindsight that for the last seven years I’ve spent a week of my year taking leave from my day job in order to work longer, harder, and unpaid for a voluntary project… but that I haven’t yet blogged about it. Over the same timescale I’ve spent about twice as long at DevCamp as I have, for example, skiing, yet I’ve managed to knock out several blog posts on that subject. Part of that might be borne out of the secretive nature of Three Rings, especially in its early days (when involvement with Three Rings pretty-much fingered you as being a Nightline volunteer, which was frowned upon), but nowadays we’ve got a couple of dozen volunteers with backgrounds in a variety of organisations: and many of those of us that ever were Nightline volunteers have long since graduated and moved-on to other volunteering work besides.

Part of the motivation – one of the perks of being a Three Rings developer – for me at least, is DevCamp itself. Because it’s an opportunity to drop all of my “day job” stuff for a week, go to some beautiful far-flung corner of the country, and (between early-morning geocaching/hiking expeditions and late night drinking tomfoolery) get to spend long days contributing to something awesome. And hanging out with like-minded people while I do so. I like a good hackathon of any variety, but I love me some Three Rings DevCamp!

So yeah: DevCamp is awesome. It’s more than a little different than those days back in 2003 when I wrote all the code and Kit worked hard at distracting me with facts about the laws of Hawaii – for the majority of DevCamp 2016 we had half a dozen developers plus two documentation writers in attendance! – but it’s still fundamentally about the same thing: producing a piece of software that helps about 25,000 volunteers do amazing things and make the world a better place. We’ve collectively given tens, maybe hundreds of thousands of hours of time in developing and supporting it, but that in turn has helped to streamline the organisation of about 16 million person-hours of other volunteering.

So that’s nice.

Oh, and I was delighted that one of my contributions this DevCamp was that I’ve finally gotten around to expanding the functionality of the “gender” property so that there are now more than three options. That’s almost more-exciting than the geocaches. Almost.

Edit: added a missing word in the sentence about how much time our volunteers had given, making it both more-believable and more-impressive.

This is the (long-overdue) last in a three-part blog post about telling stories using virtual reality. Read all of the parts here.

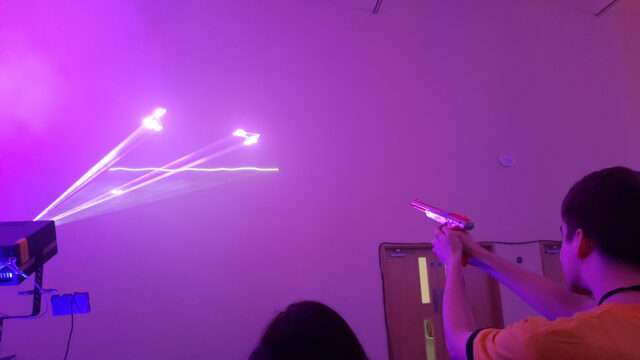

For the first time in two decades, I’ve been playing with virtual reality. This time around, I’ve been using new and upcoming technologies like Google Cardboard and the Oculus Rift. I’m particularly interested in how these new experiences can be used as a storytelling medium by content creators, and the lessons we’ll learn about immersive storytelling by experimenting with them.

It seems to me that the biggest questions that VR content creators will need to start thinking about as we collectively begin to explore this new (or newly-accessible) medium are:

This question mostly relates to creators making “interactive” experiences. Superficially, VR gives user experience designers a running start because there’s little that’s as intuitive as “turning your head to look around” (and, in fact, trying the technology out on a toddler convinced me that it’s adults – who already have an anticipation of what a computer interface ought to be – who are the only ones who’ll find this challenging). On the other hand, most interactive experiences demand more user interaction than simply looking around, and therein lies the challenge. Using a keyboard while you’re wearing a headset is close to impossible (trust me, I’ve tried), although the augmented-reality approach of the Hololens and potentially even the front-facing webcam that’s been added to the HTC Vive PRE might be used to mitigate this. A gamepad is workable, but it’s slightly immersion-breaking in some experiences to hold your hands in a conventional “gamer pose”, as I discovered while playing my Gone Home hackalong: this was the major reason I switched to using a Wiimote.

So far, I’ve seen a few attempts that don’t seem to work, though. The (otherwise) excellent educational solar system exploration tool Titans of Space makes players stare at on-screen buttons for a few seconds to “press” them, which is clunky and unintuitive: in the real world, we don’t press buttons with our eyes! I understand why they’ve done this: they’re ensuring that their software has the absolute minimum interface requirement that’s shared between the platforms that it supports, but that’s a concern too! If content creators plan to target two or more of the competing systems that will launch this year alone, will they have to make usability compromises?

There’s also the question of how we provide ancillary information to players: the long-established paradigms of “health in the bottom left, ammo in the bottom right” don’t work so obviously when they’re hidden in your peripheral vision. Games like Elite Dangerous have tackled this problem from their inception by making a virtualised “real” user interface comprised of the “screens” in the spaceship around you, but it’s an ongoing challenge for titles that target both VR and conventional platforms in future. Wareable made some great observations about these kinds of concerns, too.

In my previous blog post, I talked about a documentary that used 360° cameras to “place” the viewer among the protesters that formed the subject of the documentary. In order to provide some context and to reduce the disorientation experienced by “jumping” from location to location, the creator opted to insert “title slides” between scenes with text explaining what would be seen next. But title slides necessitate that the viewer is looking in a particular direction! In the case of this documentary and several other similar projects I’ve seen, the solution was to put the title in four places – at each of the four cardinal directions – so that no matter which way you were looking you’ll probably be able to find one. But title slides are only a small part of the picture.

Directors producing content – whether interactive or not – for virtual reality will have to think hard about the implications of the fact that their camera (whether a physical camera or – slightly easier and indeed more-controllable – a simulated camera in a 3D-rendered world) can look in any direction. Sets must be designed to be all-encompassing, which poses huge challenges for the traditional methods of producing film and television programmes. Characters’ exits and entrances must be through believable portals: they can’t simply walk off to the left and stop. And, of course, the content creator must find a way to get the audience’s attention when they need it: watching the first few minutes of Backstage with an Elite Ballerina, for example, puts you in a spacious dance studio with a spritely ballerina to follow… but there’s nothing to stop you looking the other way (perhaps by accident), and – if you do – you might miss some of the action or find it difficult to work out where you’re supposed to be looking. Expand that to a complex, busy scene like, say… the ballroom scene in Labyrinth… and you might find yourself feeling completely lost within a matter of minutes (of course, a feeling of being lost might be the emotional response that the director intends, and hey – VR is great for that!).

The potential for VR in some kinds of stories is immense, though. How about a murder mystery story played out in front of you in a dollhouse (showing VR content “in miniature” can help with the motion sickness some people feel if they’re “dragged” from scene to scene): you can move your head to peep in to any room and witness the conversations going on, but the murder itself happens during a power cut or otherwise out-of-sight and the surviving characters are left to deduce the clues. In such a (non-interactive) experience the spectator has the option to follow the action in whatever way they like, and perhaps even differently on different playthroughs, putting the focus on the rooms and characters and clues that interest them most… which might affect whether or not they agree with the detective’s assertions at the end…

As I mentioned in the previous blog post, we’ve already seen the evolution of storytelling media on several occasions, such as the jump from theatre to cinema and the opportunities that this change eventually provided. Early screenwriters couldn’t have conceived of some of the tools used in modern films, like the use of long flowing takes for establishing shots or the use of fragmented hand-held shots to add an excited energy to fight scenes. It wasn’t for lack of imagination (Georges Méliès realised back in the nineteenth century that timelapse photography could be used to produce special effects not possible in theatre) but rather a lack of the technology and more-importantly a lack of the maturity of the field. There’s an ongoing artistic process whereby storytellers learn new ways to manage their medium from one another: Romeo Must Die may have made clever use of a “zoom-to-X-ray” when a combatant’s bones were broken, but it wouldn’t have been possible if The Matrix hadn’t shown the potential for “bullet time” the previous year. And if we’re going down that road: have you seen the bullet time scene in Zotz!, a film that’s older than the Wachowskis themselves?

Clearly, we’re going to discover new ways of telling stories that aren’t possible with traditional “flat screen” media nor with more-immersive traditional theatre: that’s what makes VR as a storytelling tool so exciting.

Of course, we don’t yet know what storytelling tools we’ll find in this medium, but some ideas I’ve been thinking about are:

![GIF showing a variety of people watching VR porn. [SFW]](https://media.giphy.com/media/l41lQODMertvSTIjK/giphy.gif)

As I’m sure I’ve given away these last three blog posts, I’m really interested in the storytelling potential of VR, and you can bet I’ll be bothering you all again with updates of the things I get to play with later this year (and, in fact, some of the cool technologies I’ve managed to get access to just while I’ve been writing up these blog posts).

If you haven’t had a chance to play with contemporary VR, get yourself a cardboard. It’s dirt-cheap and it’s (relatively) low-tech and it’s nowhere near as awesome as “real” hardware solutions… but it’s still a great introduction to what I’m talking about and it’s absolutely worth doing. And if you have, I’d love to hear your thoughts on storytelling using virtual reality, too.

This is the second in a three-part blog post about telling stories using virtual reality. Read all of the parts here.

I’m still waiting to get in on the Oculus Rift and HTC Vive magic when they’re made generally-available, later this year. But for the meantime, I’m enjoying quite how hackable VR technologies are. I chucked my Samsung Galaxy S6 edge into an I Am Cardboard DSCVR, paired it with a gaming PC using TrinusVR, used GlovePIE to hook up a Wii remote (playing games with a keyboard or even a gamepad is challenging if your headset doesn’t have a headstrap, so a one-handed control is needed), and played a game of Gone Home. It’s a cheap and simple way to jump into VR gaming, especially if – like me – you already own the electronic components: the phone, PC, and Wiimote.

While the media seems to mostly fixate on the value of VR in “action” gaming – shoot-’em-ups, flight simulators, etc. – I actually think there’s possibly greater value in it for more story-driven genres. I chose Gone Home for my experiment, above, because it’s an adventure that you play at your own pace, where the amount you get out of it as a story depends on your level of attention to detail, not how quickly you can pull a trigger. Especially on this kind of highly-affordable VR gear, “twitchy” experiences that require rapid head turning are particularly unsatisfying, not-least because the response time of even the fastest screens is always going to be significantly slower than that of real life. But as a storytelling medium (especially in an affordable form) it’s got incredible potential.

I was really pleased to discover that some content creators are already experimenting with the storytelling potential of immersive VR experiences. An example would be the video Hong Kong Unrest – a 360° Virtual Reality Documentary, freely-available on YouTube. Standing his camera (presumably a Jump camera rig, or something similar) amongst the crowds of the 2014 Hong Kong protests, the creator of this documentary gives us a great opportunity to feel as though we’re standing right there with the protesters. The sense of immersion of being “with” the protesters is, in itself, a storytelling statement that shows the filmmaker’s bias: you’re encouraged to empathise with the disenfranchised Hong Kong voters, to feel like you’re not only with them in a virtual sense, but emotionally with them in support of their situation. I’m afraid that watching the click-and-drag version of the video doesn’t do it justice: strap a Cardboard to your head to get the full experience.

But aside from the opportunities it presents, Virtual Reality brings huge new challenges for content creators, too. Consider that iconic spaghetti western The Good, The Bad, And The Ugly. The opening scene drops us right into one of the artistic themes of the film – the balance of wide and close-up shots – when it initially shows us a wide open expanse but then quickly fills the frame with the face of Tuco (“The Ugly”), giving us the experience of feeling suddenly cornered and trapped by this dangerous man. That’s a hugely valuable shot (and a director’s wet dream), but it represents something that we simply don’t have a way of translating into an immersive VR setting! Aside from the obvious fact that the viewer could simply turn their head and ruin the surprise of the shot, it’s just not possible to fill the frame with the actor’s face in this kind of way without forcing the focal depth to shift uncomfortably.

That’s not to say that there exist stories that we can’t tell using virtual reality… just that we’re only just beginning to find our feet with this new medium. When stage directors took their first steps into filmmaking in the early years of the 20th century, they originally tried to shoot films “as if” they were theatre (albeit, initially, silent theatre): static cameras shooting an entire production from a single angle. Later, they discovered ways in which this new medium could provide new ways to tell stories: using title cards to set the scene, close-ups to show actors’ faces more-clearly, panning shots, and so on.

Similarly: so long as we treat the current generation of VR as something different from the faltering steps we took two and a half decades ago, we’re in frontier territory and feeling our way in VR, too. Do you remember when smartphone gaming first became a thing and nobody knew how to make proper user interfaces for it? Often your tiny mobile screen would simply try to emulate classic controllers, with a “d-pad” and “buttons” in the corners of the screen, and it was awful… but nowadays, we better-understand the relationship that people have with their phones and have adapted accordingly (perhaps the ultimate example of this, in my opinion, is the addictive One More Line, a minimalist game with a single-action “press anywhere” interface).

I borrowed an Oculus Rift DK2 from a co-worker’s partner (have I mentioned lately that I have the most awesome co-workers?) to get a little experience with it, and it’s honestly one of the coolest bits of technology I’ve ever had the privilege of playing with: the graphics, comfort, and responsiveness blow Cardboard out of the water. One of my first adventures – Crytek’s tech demo Back to Dinosaur Island – was a visual spectacle even despite my apparently-underpowered computer (I’d hooked the kit up to Gina, my two-month old 4K-capable media centre/gaming PC: I suspect that Cosmo, my multi-GPU watercooled beast might have fared better). But I’ll have more to say about that – and the lessons I’ve learned – in the final part of this blog post.